Only for text and image data? No! How TabTransformer models structured business data accurately

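💡 Author: 韓信子@ShowMeAI

📘 Deep Learning in Practice series: //www.showmeai.tech/tutorials/42

📘 TensorFlow in Practice series: //www.showmeai.tech/tutorials/43

📘 Article URL: //www.showmeai.tech/article-detail/315

📢 Notice: All rights reserved. To republish, contact the platform and the author and credit the source.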


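Since Transformers appeared, architectures built on them have reshaped natural language processing and computer vision and delivered large gains across tasks on unstructured data. Attention has since turned to structured business data: can the same approach perform as well on structured tabular data?

The answer is yes. 📘TabTransformer, proposed in a paper from Amazon, adapts the Transformer architecture to structured tabular data. It is better at capturing the information carried by the different feature types in a table and combining it to make predictions. Below, ShowMeAI walks through building a TabTransformer and applying it to structured data.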

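💡 Environment Setup

This article uses TensorFlow as the deep learning framework, and you need version 2.7 or later. We also need the 📘TensorFlow Addons package. Both can be installed with the following command: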

pip install -U tensorflow tensorflow-addons

For the TensorFlow utilities used in this article's code, see the TensorFlow cheat sheet prepared by ShowMeAI (listed in the references at the end).

Next we import the libraries:

import math

import numpy as np
import pandas as pd
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
import tensorflow_addons as tfa
import matplotlib.pyplot as plt

💡 數據說明

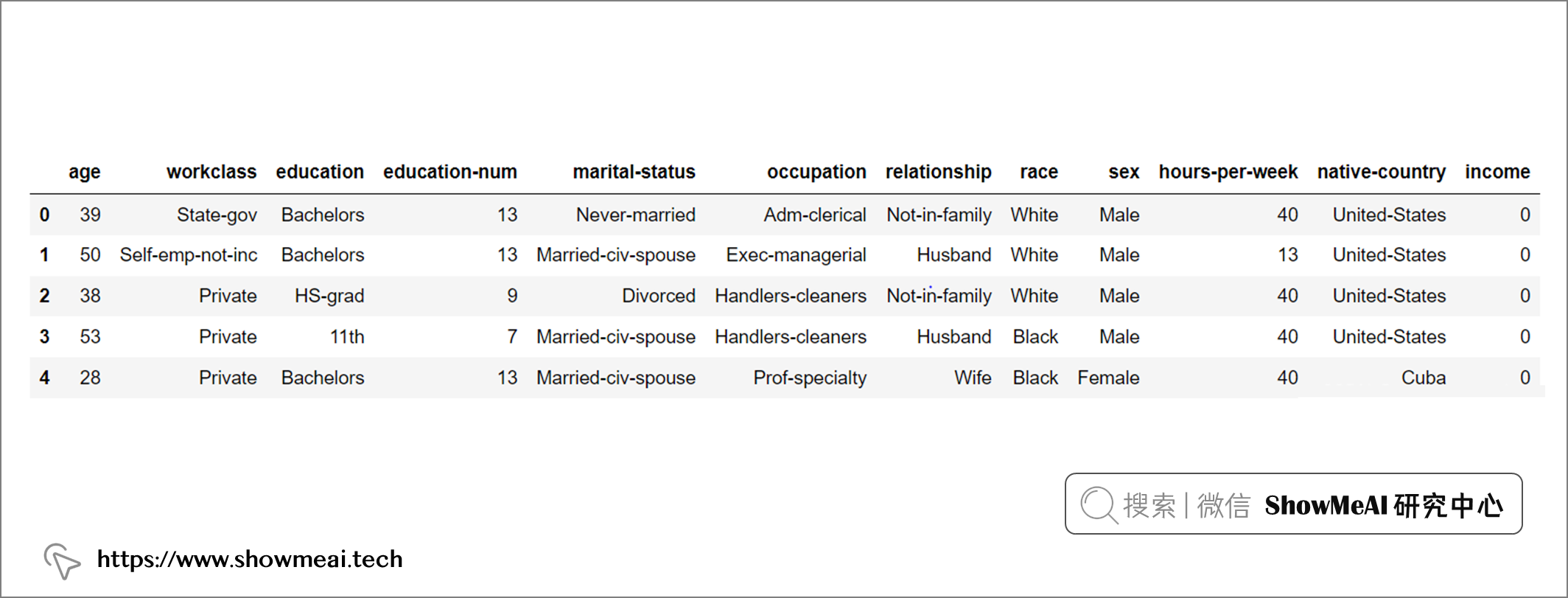

ShowMeAI在本例中使用到的是 🏆美國人口普查收入數據集,任務是根據人口基本資訊預測其年收入是否可能超過 50,000 美元,是一個二分類問題。

數據集可以在以下地址下載:

📘 //archive.ics.uci.edu/ml/datasets/Adult

📘 //archive.ics.uci.edu/ml/machine-learning-databases/adult/

數據從美國1994年人口普查資料庫抽取而來,可以用來預測居民收入是否超過50K/year。該數據集類變數為年收入是否超過50k,屬性變數包含年齡、工種、學歷、職業、人種等重要資訊,值得一提的是,14個屬性變數中有7個類別型變數。數據集各屬性是:其中序號0~13是屬性,14是類別。

| Index | Field | Meaning | Type |
|---|---|---|---|
| 0 | age | Age | double |
| 1 | workclass | Work class* | string |
| 2 | fnlwgt | Sampling weight (final weight) | double |
| 3 | education | Education level* | string |
| 4 | education_num | Years of education | double |
| 5 | marital_status | Marital status* | string |
| 6 | occupation | Occupation* | string |
| 7 | relationship | Relationship* | string |
| 8 | race | Race* | string |
| 9 | sex | Sex* | string |
| 10 | capital_gain | Capital gain | double |
| 11 | capital_loss | Capital loss | double |
| 12 | hours_per_week | Hours worked per week | double |
| 13 | native_country | Native country* | string |
| 14 (label) | income | Income label | string |

First we read the data into DataFrames with pandas:

CSV_HEADER = [
    "age",
    "workclass",
    "fnlwgt",
    "education",
    "education_num",
    "marital_status",
    "occupation",
    "relationship",
    "race",
    "gender",
    "capital_gain",
    "capital_loss",
    "hours_per_week",
    "native_country",
    "income_bracket",
]

train_data_url = (
    "https://archive.ics.uci.edu/ml/machine-learning-databases/adult/adult.data"
)
train_data = pd.read_csv(train_data_url, header=None, names=CSV_HEADER)

test_data_url = (
    "https://archive.ics.uci.edu/ml/machine-learning-databases/adult/adult.test"
)
test_data = pd.read_csv(test_data_url, header=None, names=CSV_HEADER)

print(f"Train dataset shape: {train_data.shape}")
print(f"Test dataset shape: {test_data.shape}")

Train dataset shape: (32561, 15)

Test dataset shape: (16282, 15)

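Next we do some light cleaning: drop the first record of the test set (it is not a valid data row) and strip the trailing period from the class labels.

test_data = test_data[1:]
test_data.income_bracket = test_data.income_bracket.apply(
    lambda value: value.replace(".", "")
)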
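To make the label cleaning concrete, here is a minimal pure-Python sketch of the same transformation applied to two sample strings. The helper name `clean_label` and the sample values are ours, chosen for illustration. The point is that the cleaned test labels end up matching the label strings used for the training set.

```python
def clean_label(value: str) -> str:
    """Remove the period from a label, as the lambda in the article does."""
    return value.replace(".", "")


# Illustrative raw labels with a trailing period, as described for the test set.
raw_test_labels = [" <=50K.", " >50K."]
cleaned_labels = [clean_label(value) for value in raw_test_labels]

print(cleaned_labels)
```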

Then we write the training and test sets back to separate CSV files.

train_data_file = "train_data.csv"

test_data_file = "test_data.csv"

train_data.to_csv(train_data_file, index=False, header=False)

test_data.to_csv(test_data_file, index=False, header=False)

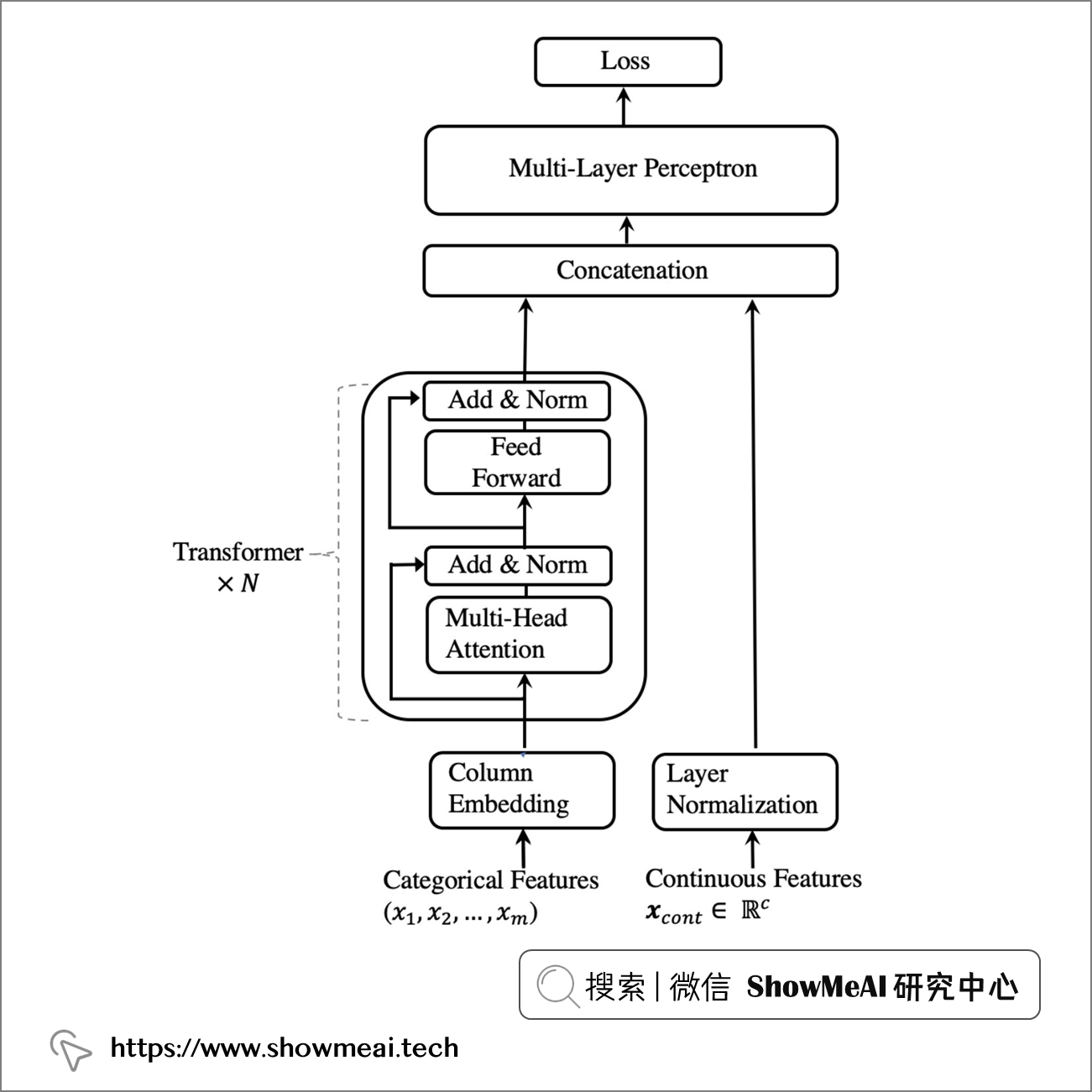

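💡 How the Model Works

The TabTransformer architecture is as follows:

Categorical features are well suited to being embedded and then fed into Transformer blocks, which model deep interactions between them and extract information. The result is concatenated with the continuous features (on the right of the architecture diagram) and passed to a fully connected MLP that combines everything and performs the final task (classification or regression).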
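The data flow described above can be traced with plain shape arithmetic. The sketch below is only an illustration: it uses the sizes that appear in this article's configuration (8 categorical columns, 5 numeric columns, 16-dimensional embeddings, MLP factors of 2 and 1) and computes the width of each stage without building any model.

```python
# Sizes taken from the configuration used in this article.
NUM_CATEGORICAL = 8   # categorical columns
NUM_NUMERIC = 5       # numeric columns (fnlwgt is a sample weight, not an input)
EMBEDDING_DIMS = 16
MLP_HIDDEN_UNITS_FACTORS = [2, 1]

# 1) Every categorical column becomes one embedding vector.
transformer_input_shape = (NUM_CATEGORICAL, EMBEDDING_DIMS)

# 2) The Transformer blocks keep that shape; the output is then flattened.
flattened_categorical = NUM_CATEGORICAL * EMBEDDING_DIMS

# 3) The flattened vector is concatenated with the numeric features.
mlp_input_width = flattened_categorical + NUM_NUMERIC

# 4) The final MLP sizes its hidden layers as multiples of that width.
mlp_hidden_units = [factor * mlp_input_width for factor in MLP_HIDDEN_UNITS_FACTORS]

print(transformer_input_shape, mlp_input_width, mlp_hidden_units)
```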

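💡 Model Implementation

📌 Define the Dataset Metadata

To implement the model, we first split the input columns by type (numeric features versus categorical features). Each type is processed differently during feature engineering. For example, numeric features are scaled and categorical features are encoded.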

# Numeric feature columns
NUMERIC_FEATURE_NAMES = [
    "age",
    "education_num",
    "capital_gain",
    "capital_loss",
    "hours_per_week",
]

# Categorical feature columns and their vocabularies
CATEGORICAL_FEATURES_WITH_VOCABULARY = {
    "workclass": sorted(list(train_data["workclass"].unique())),
    "education": sorted(list(train_data["education"].unique())),
    "marital_status": sorted(list(train_data["marital_status"].unique())),
    "occupation": sorted(list(train_data["occupation"].unique())),
    "relationship": sorted(list(train_data["relationship"].unique())),
    "race": sorted(list(train_data["race"].unique())),
    "gender": sorted(list(train_data["gender"].unique())),
    "native_country": sorted(list(train_data["native_country"].unique())),
}

# Column used as the sample weight
WEIGHT_COLUMN_NAME = "fnlwgt"

# Names of the categorical columns
CATEGORICAL_FEATURE_NAMES = list(CATEGORICAL_FEATURES_WITH_VOCABULARY.keys())

# All input features
FEATURE_NAMES = NUMERIC_FEATURE_NAMES + CATEGORICAL_FEATURE_NAMES

# Default fill value per CSV column: 0.0 for numeric and weight columns, "NA" otherwise
COLUMN_DEFAULTS = [
    [0.0] if feature_name in NUMERIC_FEATURE_NAMES + [WEIGHT_COLUMN_NAME] else ["NA"]
    for feature_name in CSV_HEADER
]

# Target column
TARGET_FEATURE_NAME = "income_bracket"

# Target label values
TARGET_LABELS = [" <=50K", " >50K"]
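The `COLUMN_DEFAULTS` comprehension is compact, so it can help to see what it produces. The sketch below repeats the relevant constants from this article so that it runs on its own, then pairs each CSV column with its default. The `defaults_by_column` dictionary is our own helper for display only and is not part of the pipeline.

```python
CSV_HEADER = [
    "age", "workclass", "fnlwgt", "education", "education_num",
    "marital_status", "occupation", "relationship", "race", "gender",
    "capital_gain", "capital_loss", "hours_per_week", "native_country",
    "income_bracket",
]
NUMERIC_FEATURE_NAMES = [
    "age", "education_num", "capital_gain", "capital_loss", "hours_per_week",
]
WEIGHT_COLUMN_NAME = "fnlwgt"

# Same comprehension as in the article: float default for numeric and weight
# columns, string default for everything else (including the target column).
COLUMN_DEFAULTS = [
    [0.0] if name in NUMERIC_FEATURE_NAMES + [WEIGHT_COLUMN_NAME] else ["NA"]
    for name in CSV_HEADER
]

# Hypothetical helper: map each column name to its default for inspection.
defaults_by_column = dict(zip(CSV_HEADER, COLUMN_DEFAULTS))
for name, default in defaults_by_column.items():
    print(f"{name:15s} -> {default}")
```

The list follows the order of `CSV_HEADER`, which is what lets each default line up with the right column when the file is parsed.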

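📌 Configure the Hyperparameters

We set the hyperparameters for the network architecture and the training process as follows.

# Learning rate
LEARNING_RATE = 0.001

# Weight decay
WEIGHT_DECAY = 0.0001

# Dropout rate
DROPOUT_RATE = 0.2

# Batch size
BATCH_SIZE = 265

# Total number of training epochs
NUM_EPOCHS = 15

# Number of Transformer blocks
NUM_TRANSFORMER_BLOCKS = 3

# Number of attention heads
NUM_HEADS = 4

# Embedding dimension for categorical features
EMBEDDING_DIMS = 16

# MLP hidden layer sizes, expressed as factors of the number of MLP inputs
MLP_HIDDEN_UNITS_FACTORS = [
    2,
    1,
]

# Number of MLP blocks in the baseline model
NUM_MLP_BLOCKS = 2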
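As a quick sanity check on these settings, the number of optimizer steps per epoch follows from the training set size and the batch size. The sketch below uses the training row count printed earlier (32561) and assumes the last, smaller batch is kept rather than dropped.

```python
import math

TRAIN_ROWS = 32561   # rows in the training set, from the shape printed earlier
BATCH_SIZE = 265
NUM_EPOCHS = 15

# Assumes the final partial batch is kept, so we round up.
steps_per_epoch = math.ceil(TRAIN_ROWS / BATCH_SIZE)
total_steps = steps_per_epoch * NUM_EPOCHS

print(f"steps per epoch: {steps_per_epoch}, total steps: {total_steps}")
```

The result, 123 steps per epoch, is the same step count that appears in the training logs later in the article.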

📌 Implement the Data Reading Pipeline

Next we define an input function that reads and parses the files, processes the features and labels, and wraps them in a tf.data.Dataset for training and evaluation.

target_label_lookup = layers.StringLookup(
    vocabulary=TARGET_LABELS, mask_token=None, num_oov_indices=0
)

def prepare_example(features, target):
    # Map the label string to its integer index
    target_index = target_label_lookup(target)
    # Remove the weight column from the features and return it as the sample weight
    weights = features.pop(WEIGHT_COLUMN_NAME)
    return features, target_index, weights

# Read the data from a CSV file
def get_dataset_from_csv(csv_file_path, batch_size=128, shuffle=False):
    dataset = tf.data.experimental.make_csv_dataset(
        csv_file_path,
        batch_size=batch_size,
        column_names=CSV_HEADER,
        column_defaults=COLUMN_DEFAULTS,
        label_name=TARGET_FEATURE_NAME,
        num_epochs=1,
        header=False,
        na_value="?",
        shuffle=shuffle,
    ).map(prepare_example, num_parallel_calls=tf.data.AUTOTUNE, deterministic=False)
    return dataset.cache()
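To see what `prepare_example` does to a single record, here is a plain-Python stand-in that needs no TensorFlow. It is only a sketch: the dictionary `label_to_index` replaces the StringLookup layer, the function name `prepare_example_py` is ours, and the row values are made up for illustration.

```python
TARGET_LABELS = [" <=50K", " >50K"]
WEIGHT_COLUMN_NAME = "fnlwgt"

# Plain-dict stand-in for the StringLookup layer: label string -> integer index.
label_to_index = {label: index for index, label in enumerate(TARGET_LABELS)}


def prepare_example_py(features: dict, target: str):
    """Split one parsed row into (features, target index, sample weight)."""
    features = dict(features)  # copy so the caller's dict is left untouched
    target_index = label_to_index[target]
    weight = features.pop(WEIGHT_COLUMN_NAME)
    return features, target_index, weight


# Hypothetical, shortened row for illustration.
row = {"age": 39.0, "fnlwgt": 77516.0, "workclass": " State-gov"}
features, target_index, weight = prepare_example_py(row, " <=50K")

print(features, target_index, weight)
```

Returning a three-element tuple is what lets Keras treat the third element as a per-example weight during training.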

📌 Model Building and Evaluation

def run_experiment(
    model,
    train_data_file,
    test_data_file,
    num_epochs,
    learning_rate,
    weight_decay,
    batch_size,
):
    # Optimizer
    optimizer = tfa.optimizers.AdamW(
        learning_rate=learning_rate, weight_decay=weight_decay
    )

    # Compile the model
    model.compile(
        optimizer=optimizer,
        loss=keras.losses.BinaryCrossentropy(),
        metrics=[keras.metrics.BinaryAccuracy(name="accuracy")],
    )

    # Training and validation datasets
    train_dataset = get_dataset_from_csv(train_data_file, batch_size, shuffle=True)
    validation_dataset = get_dataset_from_csv(test_data_file, batch_size)

    # Train the model
    print("Start training the model...")
    history = model.fit(
        train_dataset, epochs=num_epochs, validation_data=validation_dataset
    )
    print("Model training finished")

    # Evaluate the model
    _, accuracy = model.evaluate(validation_dataset, verbose=0)
    print(f"Validation accuracy: {round(accuracy * 100, 2)}%")

    return history
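Because the dataset yields a sample weight (the `fnlwgt` column) alongside each example, the loss values reported during training are far larger than an unweighted binary cross-entropy would be. The pure-Python sketch below illustrates the effect. It assumes, as we understand the default Keras behaviour, that each example's loss is multiplied by its weight before averaging over the batch. The predictions and weights are made-up numbers.

```python
import math


def binary_crossentropy(y_true: int, y_pred: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy for one example, with clipping for stability."""
    y_pred = min(max(y_pred, eps), 1.0 - eps)
    return -(y_true * math.log(y_pred) + (1 - y_true) * math.log(1.0 - y_pred))


def weighted_mean_loss(y_true, y_pred, weights):
    """Multiply each example's loss by its weight, then average over the batch."""
    per_example = [binary_crossentropy(t, p) for t, p in zip(y_true, y_pred)]
    weighted = [w * loss for w, loss in zip(weights, per_example)]
    return sum(weighted) / len(weighted)


y_true = [0, 1, 0, 1]
y_pred = [0.2, 0.7, 0.4, 0.6]

# With unit weights this is the ordinary mean cross-entropy.
unit_loss = weighted_mean_loss(y_true, y_pred, [1.0] * 4)

# Hypothetical fnlwgt-sized weights, in the tens to hundreds of thousands.
census_weights = [77516.0, 83311.0, 215646.0, 234721.0]
weighted_loss = weighted_mean_loss(y_true, y_pred, census_weights)

print(f"unweighted: {unit_loss:.4f}, weighted: {weighted_loss:.1f}")
```

The accuracy metric is on a different scale from the loss, so a loss in the tens of thousands alongside an accuracy above 0.8 is not contradictory.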

① Create the Model Inputs

Following TensorFlow's input conventions, we define the model inputs as a dictionary in which each key is a feature name and each value is a keras.layers.Input tensor with the corresponding shape and data type.

def create_model_inputs():
    inputs = {}
    for feature_name in FEATURE_NAMES:
        if feature_name in NUMERIC_FEATURE_NAMES:
            inputs[feature_name] = layers.Input(
                name=feature_name, shape=(), dtype=tf.float32
            )
        else:
            inputs[feature_name] = layers.Input(
                name=feature_name, shape=(), dtype=tf.string
            )
    return inputs

② Encode the Features

We define an encode_inputs function that returns encoded_categorical_feature_list and numerical_feature_list. Categorical features are encoded as embeddings, using the same fixed embedding_dims for every feature regardless of its vocabulary size. The Transformer blocks require this.

def encode_inputs(inputs, embedding_dims):
    encoded_categorical_feature_list = []
    numerical_feature_list = []

    for feature_name in inputs:
        if feature_name in CATEGORICAL_FEATURE_NAMES:
            # Get the distinct values (vocabulary) of the categorical feature
            vocabulary = CATEGORICAL_FEATURES_WITH_VOCABULARY[feature_name]

            # Build a lookup table mapping categorical values to integer indices
            lookup = layers.StringLookup(
                vocabulary=vocabulary,
                mask_token=None,
                num_oov_indices=0,
                output_mode="int",
            )

            # Convert the categorical string values to integer indices
            encoded_feature = lookup(inputs[feature_name])

            # Create the embedding layer
            embedding = layers.Embedding(
                input_dim=len(vocabulary), output_dim=embedding_dims
            )

            # Convert the indices to embedding vectors
            encoded_categorical_feature = embedding(encoded_feature)
            encoded_categorical_feature_list.append(encoded_categorical_feature)
        else:
            # Numeric feature: add a trailing axis so it can be concatenated later
            numerical_feature = tf.expand_dims(inputs[feature_name], -1)
            numerical_feature_list.append(numerical_feature)

    return encoded_categorical_feature_list, numerical_feature_list
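The two-step encoding (string to index, then index to vector) can be mimicked without TensorFlow. The sketch below is illustrative only: the helpers `build_lookup` and `build_embedding_table` are ours, the vocabularies are shortened made-up examples, and the random table stands in for weights that the real Embedding layer would learn.

```python
import random


def build_lookup(vocabulary):
    """Map each vocabulary entry to its position, like an int-mode lookup."""
    return {value: index for index, value in enumerate(vocabulary)}


def build_embedding_table(vocab_size, embedding_dims, seed=0):
    """One row of embedding_dims floats per vocabulary entry (random stand-in)."""
    rng = random.Random(seed)
    return [
        [rng.uniform(-0.05, 0.05) for _ in range(embedding_dims)]
        for _ in range(vocab_size)
    ]


EMBEDDING_DIMS = 16

# Hypothetical, shortened vocabularies of different sizes.
vocabularies = {
    "gender": [" Female", " Male"],
    "race": [" Black", " Other", " White"],
}
lookups = {name: build_lookup(vocab) for name, vocab in vocabularies.items()}
tables = {
    name: build_embedding_table(len(vocab), EMBEDDING_DIMS)
    for name, vocab in vocabularies.items()
}

example = {"gender": " Male", "race": " White"}
encoded = [tables[name][lookups[name][value]] for name, value in example.items()]

# Every feature yields a vector of the same length, whatever its vocabulary size.
print([len(vector) for vector in encoded])
```

Giving every column the same vector length is what allows the embeddings to be stacked into one (columns, embedding_dims) array for the Transformer.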

③ MLP Block

The MLP (fully connected) block is an essential part of the network. Here is a simple implementation:

def create_mlp(hidden_units, dropout_rate, activation, normalization_layer, name=None):
    mlp_layers = []
    for units in hidden_units:
        # Note: the same normalization_layer instance is appended on every iteration
        mlp_layers.append(normalization_layer)
        mlp_layers.append(layers.Dense(units, activation=activation))
        mlp_layers.append(layers.Dropout(dropout_rate))

    return keras.Sequential(mlp_layers, name=name)

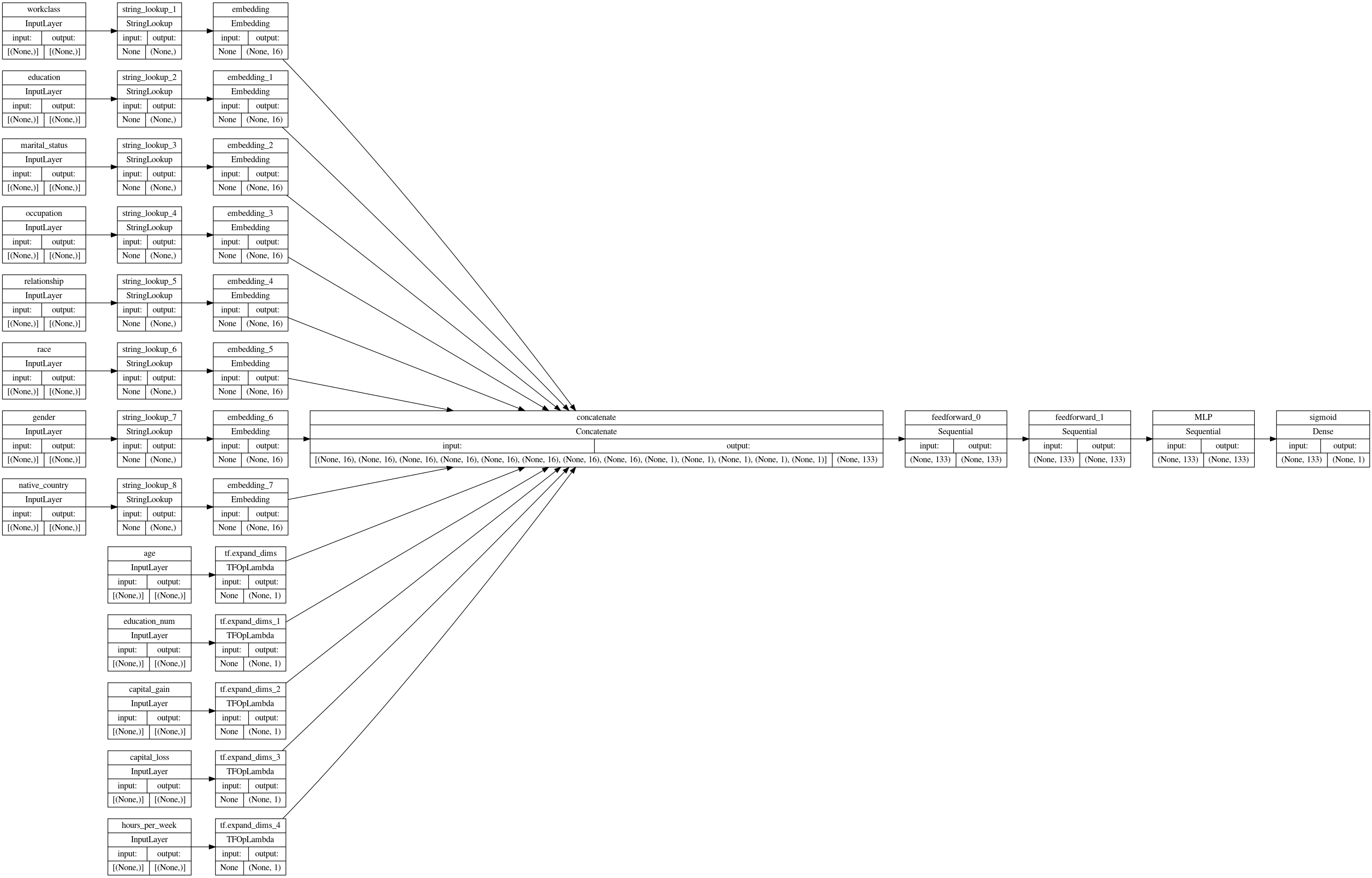

④ Model 1: Baseline

For comparison, we first build a simple MLP (multi-layer feedforward network). The code and comments follow.

def create_baseline_model(
    embedding_dims, num_mlp_blocks, mlp_hidden_units_factors, dropout_rate
):
    # Create the model inputs
    inputs = create_model_inputs()

    # Encode the features
    encoded_categorical_feature_list, numerical_feature_list = encode_inputs(
        inputs, embedding_dims
    )

    # Concatenate all features
    features = layers.concatenate(
        encoded_categorical_feature_list + numerical_feature_list
    )

    # Each feedforward block keeps the width of the concatenated features
    feedforward_units = [features.shape[-1]]

    # Stack the feedforward blocks (as written, no skip connection is added)
    for layer_idx in range(num_mlp_blocks):
        features = create_mlp(
            hidden_units=feedforward_units,
            dropout_rate=dropout_rate,
            activation=keras.activations.gelu,
            normalization_layer=layers.LayerNormalization(epsilon=1e-6),
            name=f"feedforward_{layer_idx}",
        )(features)

    # Hidden layer sizes of the final MLP
    mlp_hidden_units = [
        factor * features.shape[-1] for factor in mlp_hidden_units_factors
    ]

    # Final MLP
    features = create_mlp(
        hidden_units=mlp_hidden_units,
        dropout_rate=dropout_rate,
        activation=keras.activations.selu,
        normalization_layer=layers.BatchNormalization(),
        name="MLP",
    )(features)

    # Add a sigmoid output to form the binary classifier
    outputs = layers.Dense(units=1, activation="sigmoid", name="sigmoid")(features)
    model = keras.Model(inputs=inputs, outputs=outputs)
    return model

# The complete model
baseline_model = create_baseline_model(
    embedding_dims=EMBEDDING_DIMS,
    num_mlp_blocks=NUM_MLP_BLOCKS,
    mlp_hidden_units_factors=MLP_HIDDEN_UNITS_FACTORS,
    dropout_rate=DROPOUT_RATE,
)

print("Total model weights:", baseline_model.count_params())
keras.utils.plot_model(baseline_model, show_shapes=True, rankdir="LR")

# Total model weights: 109629
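The reported total of 109629 weights can be re-derived by hand, which is a useful check on how the pieces fit together. The sketch below rests on two assumptions that are ours, not statements from the article: the per-column vocabulary sizes in `VOCAB_SIZES` (distinct values in the training set, including the unknown marker), and that each `create_mlp` call contributes its normalization layer only once, since the same instance is appended on every loop iteration.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Kernel plus bias of a fully connected layer."""
    return n_in * n_out + n_out


def layer_norm_params(width: int) -> int:
    """Scale and offset vectors."""
    return 2 * width


def batch_norm_params(width: int) -> int:
    """Scale, offset, moving mean and moving variance."""
    return 4 * width


# ASSUMPTION: number of distinct values per categorical column.
VOCAB_SIZES = {
    "workclass": 9, "education": 16, "marital_status": 7, "occupation": 15,
    "relationship": 6, "race": 5, "gender": 2, "native_country": 42,
}
EMBEDDING_DIMS = 16
NUM_NUMERIC = 5
NUM_MLP_BLOCKS = 2

embedding_weights = sum(VOCAB_SIZES.values()) * EMBEDDING_DIMS
width = len(VOCAB_SIZES) * EMBEDDING_DIMS + NUM_NUMERIC

# Each feedforward block: one LayerNormalization and one Dense(width -> width).
feedforward_weights = NUM_MLP_BLOCKS * (
    layer_norm_params(width) + dense_params(width, width)
)

# Final MLP with factors [2, 1].
# ASSUMPTION: the shared BatchNormalization instance is counted once.
final_mlp_weights = (
    batch_norm_params(width)
    + dense_params(width, 2 * width)
    + dense_params(2 * width, width)
)

output_weights = dense_params(width, 1)

total = embedding_weights + feedforward_weights + final_mlp_weights + output_weights
print("Total model weights:", total)
```

Under these assumptions the hand count agrees with the figure printed by `count_params()`.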

With the model built, we use plot_model to draw its structure.

Next we train and evaluate the baseline model:

history = run_experiment(
    model=baseline_model,
    train_data_file=train_data_file,
    test_data_file=test_data_file,
    num_epochs=NUM_EPOCHS,
    learning_rate=LEARNING_RATE,
    weight_decay=WEIGHT_DECAY,
    batch_size=BATCH_SIZE,
)

The training log is as follows:

Start training the model...

Epoch 1/15

123/123 [==============================] - 6s 25ms/step - loss: 110178.8203 - accuracy: 0.7478 - val_loss: 92703.0859 - val_accuracy: 0.7825

Epoch 2/15

123/123 [==============================] - 2s 14ms/step - loss: 90979.8125 - accuracy: 0.7675 - val_loss: 71798.9219 - val_accuracy: 0.8001

Epoch 3/15

123/123 [==============================] - 2s 14ms/step - loss: 77226.5547 - accuracy: 0.7902 - val_loss: 68581.0312 - val_accuracy: 0.8168

Epoch 4/15

123/123 [==============================] - 2s 14ms/step - loss: 72652.2422 - accuracy: 0.8004 - val_loss: 70084.0469 - val_accuracy: 0.7974

Epoch 5/15

123/123 [==============================] - 2s 14ms/step - loss: 71207.9375 - accuracy: 0.8033 - val_loss: 66552.1719 - val_accuracy: 0.8130

Epoch 6/15

123/123 [==============================] - 2s 14ms/step - loss: 69321.4375 - accuracy: 0.8091 - val_loss: 65837.0469 - val_accuracy: 0.8149

Epoch 7/15

123/123 [==============================] - 2s 14ms/step - loss: 68839.3359 - accuracy: 0.8099 - val_loss: 65613.0156 - val_accuracy: 0.8187

Epoch 8/15

123/123 [==============================] - 2s 14ms/step - loss: 68126.7344 - accuracy: 0.8124 - val_loss: 66155.8594 - val_accuracy: 0.8108

Epoch 9/15

123/123 [==============================] - 2s 14ms/step - loss: 67768.9844 - accuracy: 0.8147 - val_loss: 66705.8047 - val_accuracy: 0.8230

Epoch 10/15

123/123 [==============================] - 2s 14ms/step - loss: 67482.5859 - accuracy: 0.8151 - val_loss: 65668.3672 - val_accuracy: 0.8143

Epoch 11/15

123/123 [==============================] - 2s 14ms/step - loss: 66792.6875 - accuracy: 0.8181 - val_loss: 66536.3828 - val_accuracy: 0.8233

Epoch 12/15

123/123 [==============================] - 2s 14ms/step - loss: 65610.4531 - accuracy: 0.8229 - val_loss: 70377.7266 - val_accuracy: 0.8256

Epoch 13/15

123/123 [==============================] - 2s 14ms/step - loss: 63930.2500 - accuracy: 0.8282 - val_loss: 68294.8516 - val_accuracy: 0.8289

Epoch 14/15

123/123 [==============================] - 2s 14ms/step - loss: 63420.1562 - accuracy: 0.8323 - val_loss: 63050.5859 - val_accuracy: 0.8204

Epoch 15/15

123/123 [==============================] - 2s 14ms/step - loss: 62619.4531 - accuracy: 0.8345 - val_loss: 66933.7500 - val_accuracy: 0.8177

Model training finished

Validation accuracy: 81.77%

The baseline model (a fully connected MLP network) reaches about 82% validation accuracy.

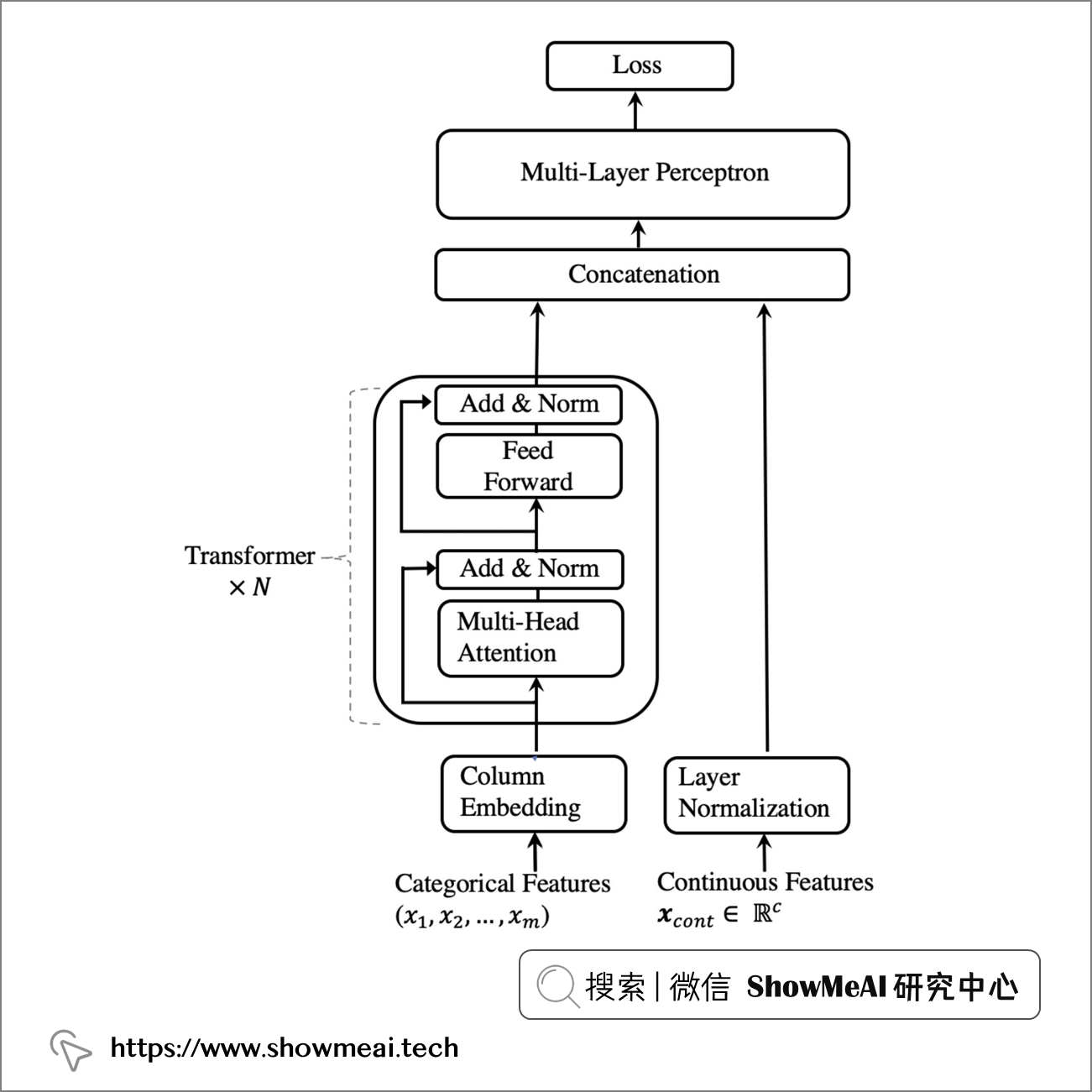

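⑤ Model 2: TabTransformer

The TabTransformer architecture works as follows:

- All categorical features are encoded as embeddings with the same embedding_dims.
- Optionally (when use_column_embedding is enabled), a column embedding, one embedding vector per categorical feature, is added to the categorical feature embeddings.
- The embedded categorical features are fed into a stack of Transformer blocks. Each Transformer block consists of a multi-head self-attention layer followed by a feedforward layer.
- The output of the last Transformer layer is concatenated with the numeric input features and fed into a final MLP block.
- A sigmoid output layer at the end performs the classification.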
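The attention step in the list above is where each categorical column's embedding gets mixed with the others. The pure-Python sketch below shows one simplified version of it: a single attention head with random projection matrices, no dropout and no layer normalization. It is a stand-in for illustration, not the Keras MultiHeadAttention layer, and all helper names are ours.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def softmax(row):
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over column embeddings."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(k[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
NUM_COLUMNS, EMBEDDING_DIMS = 8, 16  # 8 categorical columns, 16-dim embeddings

tokens = random_matrix(NUM_COLUMNS, EMBEDDING_DIMS, rng)
w_q = random_matrix(EMBEDDING_DIMS, EMBEDDING_DIMS, rng)
w_k = random_matrix(EMBEDDING_DIMS, EMBEDDING_DIMS, rng)
w_v = random_matrix(EMBEDDING_DIMS, EMBEDDING_DIMS, rng)

attended, attention_weights = self_attention(tokens, w_q, w_k, w_v)

# Skip connection: add the attention output back onto the input embeddings.
residual = [
    [a + t for a, t in zip(a_row, t_row)] for a_row, t_row in zip(attended, tokens)
]

print(len(attended), len(attended[0]), len(attention_weights[0]))
```

Each row of `attention_weights` says how much one column attends to every other column, which is how the model can represent interactions between categorical features.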

def create_tabtransformer_classifier(
    num_transformer_blocks,
    num_heads,
    embedding_dims,
    mlp_hidden_units_factors,
    dropout_rate,
    use_column_embedding=False,
):
    # Create the model inputs
    inputs = create_model_inputs()

    # Encode the features
    encoded_categorical_feature_list, numerical_feature_list = encode_inputs(
        inputs, embedding_dims
    )

    # Stack the categorical feature embeddings as input to the Transformer
    encoded_categorical_features = tf.stack(encoded_categorical_feature_list, axis=1)

    # Concatenate the numeric features
    numerical_features = layers.concatenate(numerical_feature_list)

    # Optionally add a column embedding to the categorical feature embeddings
    if use_column_embedding:
        num_columns = encoded_categorical_features.shape[1]
        column_embedding = layers.Embedding(
            input_dim=num_columns, output_dim=embedding_dims
        )
        column_indices = tf.range(start=0, limit=num_columns, delta=1)
        encoded_categorical_features = encoded_categorical_features + column_embedding(
            column_indices
        )

    # Build the Transformer blocks
    for block_idx in range(num_transformer_blocks):
        # Multi-head self-attention
        attention_output = layers.MultiHeadAttention(
            num_heads=num_heads,
            key_dim=embedding_dims,
            dropout=dropout_rate,
            name=f"multihead_attention_{block_idx}",
        )(encoded_categorical_features, encoded_categorical_features)

        # First skip connection
        x = layers.Add(name=f"skip_connection1_{block_idx}")(
            [attention_output, encoded_categorical_features]
        )

        # First layer normalization
        x = layers.LayerNormalization(name=f"layer_norm1_{block_idx}", epsilon=1e-6)(x)

        # Feedforward layer
        feedforward_output = create_mlp(
            hidden_units=[embedding_dims],
            dropout_rate=dropout_rate,
            activation=keras.activations.gelu,
            normalization_layer=layers.LayerNormalization(epsilon=1e-6),
            name=f"feedforward_{block_idx}",
        )(x)

        # Second skip connection
        x = layers.Add(name=f"skip_connection2_{block_idx}")([feedforward_output, x])

        # Second layer normalization
        encoded_categorical_features = layers.LayerNormalization(
            name=f"layer_norm2_{block_idx}", epsilon=1e-6
        )(x)

    # Flatten the embeddings
    categorical_features = layers.Flatten()(encoded_categorical_features)

    # Apply layer normalization to the numeric features
    numerical_features = layers.LayerNormalization(epsilon=1e-6)(numerical_features)

    # Concatenate to form the input of the final MLP
    features = layers.concatenate([categorical_features, numerical_features])

    # Compute the hidden layer sizes of the final MLP
    mlp_hidden_units = [
        factor * features.shape[-1] for factor in mlp_hidden_units_factors
    ]

    # Build the final MLP
    features = create_mlp(
        hidden_units=mlp_hidden_units,
        dropout_rate=dropout_rate,
        activation=keras.activations.selu,
        normalization_layer=layers.BatchNormalization(),
        name="MLP",
    )(features)

    # Add a sigmoid output to form the binary classifier
    outputs = layers.Dense(units=1, activation="sigmoid", name="sigmoid")(features)
    model = keras.Model(inputs=inputs, outputs=outputs)
    return model

tabtransformer_model = create_tabtransformer_classifier(
    num_transformer_blocks=NUM_TRANSFORMER_BLOCKS,
    num_heads=NUM_HEADS,
    embedding_dims=EMBEDDING_DIMS,
    mlp_hidden_units_factors=MLP_HIDDEN_UNITS_FACTORS,
    dropout_rate=DROPOUT_RATE,
)

print("Total model weights:", tabtransformer_model.count_params())
keras.utils.plot_model(tabtransformer_model, show_shapes=True, rankdir="LR")

# Total model weights: 87479
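It is instructive to see where the 87479 weights of the TabTransformer go, in particular how small the Transformer stack is next to the final MLP. The sketch below counts them by hand. It makes several assumptions of ours: the training set has 102 distinct categorical values in total across the 8 columns, each attention layer has biased query, key, value and output projections with `num_heads * key_dim` projected units, and each `create_mlp` call contributes its normalization layer only once.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Kernel plus bias of a fully connected layer."""
    return n_in * n_out + n_out


def attention_params(model_dim: int, num_heads: int, key_dim: int) -> int:
    """Query, key and value projections plus the output projection."""
    projected = num_heads * key_dim
    return 3 * dense_params(model_dim, projected) + dense_params(projected, model_dim)


EMBEDDING_DIMS = 16
NUM_HEADS = 4
NUM_TRANSFORMER_BLOCKS = 3
NUM_CATEGORICAL = 8
NUM_NUMERIC = 5
TOTAL_VOCAB_SIZE = 102  # ASSUMPTION: distinct categorical values in the training set

layer_norm = 2 * EMBEDDING_DIMS
attention = attention_params(EMBEDDING_DIMS, NUM_HEADS, EMBEDDING_DIMS)
feedforward = layer_norm + dense_params(EMBEDDING_DIMS, EMBEDDING_DIMS)

# One block: attention, layer norm, feedforward, layer norm.
block = attention + layer_norm + feedforward + layer_norm
transformer_weights = NUM_TRANSFORMER_BLOCKS * block

embedding_weights = TOTAL_VOCAB_SIZE * EMBEDDING_DIMS
numeric_layer_norm = 2 * NUM_NUMERIC
width = NUM_CATEGORICAL * EMBEDDING_DIMS + NUM_NUMERIC

# Final MLP with factors [2, 1]; batch normalization counted once (ASSUMPTION).
final_mlp_weights = (
    4 * width + dense_params(width, 2 * width) + dense_params(2 * width, width)
)
output_weights = dense_params(width, 1)

total = (
    embedding_weights
    + transformer_weights
    + numeric_layer_norm
    + final_mlp_weights
    + output_weights
)
print("Transformer stack:", transformer_weights, "of", total)
```

Under these assumptions the three Transformer blocks account for roughly one sixth of the weights, and most of the rest sit in the final MLP.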

plot_model produces a diagram of the final model structure. Because the model is deep, the diagram is very long.

Next we train and evaluate the TabTransformer model:

history = run_experiment(
    model=tabtransformer_model,
    train_data_file=train_data_file,
    test_data_file=test_data_file,
    num_epochs=NUM_EPOCHS,
    learning_rate=LEARNING_RATE,
    weight_decay=WEIGHT_DECAY,
    batch_size=BATCH_SIZE,
)

Start training the model...

Epoch 1/15

123/123 [==============================] - 13s 61ms/step - loss: 82503.1641 - accuracy: 0.7944 - val_loss: 64260.2305 - val_accuracy: 0.8421

Epoch 2/15

123/123 [==============================] - 6s 51ms/step - loss: 68677.9375 - accuracy: 0.8251 - val_loss: 63819.8633 - val_accuracy: 0.8389

Epoch 3/15

123/123 [==============================] - 6s 51ms/step - loss: 66703.8984 - accuracy: 0.8301 - val_loss: 63052.8789 - val_accuracy: 0.8428

Epoch 4/15

123/123 [==============================] - 6s 51ms/step - loss: 65287.8672 - accuracy: 0.8342 - val_loss: 61593.1484 - val_accuracy: 0.8451

Epoch 5/15

123/123 [==============================] - 6s 52ms/step - loss: 63968.8594 - accuracy: 0.8379 - val_loss: 61385.4531 - val_accuracy: 0.8442

Epoch 6/15

123/123 [==============================] - 6s 51ms/step - loss: 63645.7812 - accuracy: 0.8394 - val_loss: 61332.3281 - val_accuracy: 0.8447

Epoch 7/15

123/123 [==============================] - 6s 51ms/step - loss: 62778.6055 - accuracy: 0.8412 - val_loss: 61342.5352 - val_accuracy: 0.8461

Epoch 8/15

123/123 [==============================] - 6s 51ms/step - loss: 62815.6992 - accuracy: 0.8398 - val_loss: 61220.8242 - val_accuracy: 0.8460

Epoch 9/15

123/123 [==============================] - 6s 52ms/step - loss: 62191.1016 - accuracy: 0.8416 - val_loss: 61055.9102 - val_accuracy: 0.8452

Epoch 10/15

123/123 [==============================] - 6s 51ms/step - loss: 61992.1602 - accuracy: 0.8439 - val_loss: 61251.8047 - val_accuracy: 0.8441

Epoch 11/15

123/123 [==============================] - 6s 50ms/step - loss: 61745.1289 - accuracy: 0.8429 - val_loss: 61364.7695 - val_accuracy: 0.8445

Epoch 12/15

123/123 [==============================] - 6s 51ms/step - loss: 61696.3477 - accuracy: 0.8445 - val_loss: 61074.3594 - val_accuracy: 0.8450

Epoch 13/15

123/123 [==============================] - 6s 51ms/step - loss: 61569.1719 - accuracy: 0.8436 - val_loss: 61844.9688 - val_accuracy: 0.8456

Epoch 14/15

123/123 [==============================] - 6s 51ms/step - loss: 61343.0898 - accuracy: 0.8445 - val_loss: 61702.8828 - val_accuracy: 0.8455

Epoch 15/15

123/123 [==============================] - 6s 51ms/step - loss: 61355.0547 - accuracy: 0.8504 - val_loss: 61272.2852 - val_accuracy: 0.8495

Model training finished

Validation accuracy: 84.55%

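The TabTransformer model reaches about 85% validation accuracy (84.55% in the run above), an improvement over the roughly 82% of the plain fully connected network.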

References

- 📘 TabTransformer: //arxiv.org/abs/2012.06678
- 📘 TensorFlow Addons: //www.tensorflow.org/addons/overview
- 📘 AI Vertical-Domain Tool Cheat Sheets | TensorFlow2 Modeling and Application Cheat Sheet: //www.showmeai.tech/article-detail/109