MindSpore Activation Functions: Summary and Tests

Technical Background

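Activation functions play a very important role in the forward pass of a machine-learning network, and we can think of one as a decision function. For example, suppose we want to decide whether an output corresponds to a cat or a dog. The value we obtain is 0.01, and in our labeling 0 stands for cat and 1 stands for dog. Although 0.01 is neither 0 nor 1, we can expect that the picture is very likely a cat. We can therefore define an activation function that treats any value below 0.5 as a cat and any value above 0.5 as a dog; this is a hand-defined decision function. This article introduces several activation functions that are already implemented in MindSpore and shows how to use them.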
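To make the thresholding idea concrete, here is a minimal sketch in plain Python. The function name `classify`, the default threshold of 0.5, and the choice to send a score of exactly 0.5 to "dog" are assumptions made for illustration; the text above only specifies what happens below and above 0.5.

```python
def classify(score: float, threshold: float = 0.5) -> str:
    """Map a raw output in [0, 1] to a label with a fixed threshold."""
    # 0 stands for "cat" and 1 stands for "dog", as in the example above.
    # Assumption: a score exactly equal to the threshold is labeled "dog".
    return "cat" if score < threshold else "dog"


for score in (0.01, 0.49, 0.51, 0.99):
    print(score, "->", classify(score))
```

A hard threshold like this throws away how confident the value is, which is one reason smooth functions such as Tanh or Sigmoid are used inside the network instead.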

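Hyperbolic Tangent (Tanh) Activation Function

The hyperbolic tangent function approaches \(-1\) and \(1\) in the two limits, so its values lie in \((-1,1)\). Its slope is greatest near the ambiguous region in the middle, which suits cases where the two outcomes being distinguished differ substantially in their main features.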
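The statement about the slope can be checked numerically. The sketch below relies on the standard identity \(\frac{d}{dx}\tanh(x)=1-\tanh^2(x)\) and on Python's built-in `math.tanh`; the helper names `tanh_slope` and `numeric_slope` are made up for this illustration.

```python
import math


def tanh_slope(x: float) -> float:
    """Analytic derivative of tanh: 1 - tanh(x)**2."""
    return 1.0 - math.tanh(x) ** 2


def numeric_slope(x: float, h: float = 1e-6) -> float:
    """Central finite difference, used only to cross-check the formula."""
    return (math.tanh(x + h) - math.tanh(x - h)) / (2 * h)


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  slope={tanh_slope(x):.6f}  numeric={numeric_slope(x):.6f}")
```

The slope equals 1 at the origin and decays toward 0 as \(|x|\) grows, which is the saturation behaviour described above.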

The Python code used to plot this function is shown below; plt.gca() is used to adjust how the axes are displayed:

import matplotlib.pyplot as plt
import numpy as np

def _tanh(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    for x in l:
        # tanh(x) = (e^{2x} - 1) / (e^{2x} + 1)
        th = (np.exp(2*x)-1)/(np.exp(2*x)+1)
        new_l.append(th)
    return new_l

plt.figure()
plt.title('Tanh')
plt.xlabel('x')
plt.ylabel('y')
plt.ylim(-3,3)
# Hide the top and right spines and move the other two to the origin
ax = plt.gca()
ax.spines['right'].set_color('none')
ax.spines['top'].set_color('none')
ax.xaxis.set_ticks_position('bottom')
ax.yaxis.set_ticks_position('left')
ax.spines['bottom'].set_position(('data', 0))
ax.spines['left'].set_position(('data', 0))
x = np.arange(-6,6,0.05)
y = _tanh(x)
plt.plot(x,y)
plt.savefig('function.png')

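The hyperbolic tangent activation function has the form:

\[\tanh(x)=\frac{e^{x}-e^{-x}}{e^{x}+e^{-x}}=\frac{e^{2x}-1}{e^{2x}+1}\]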

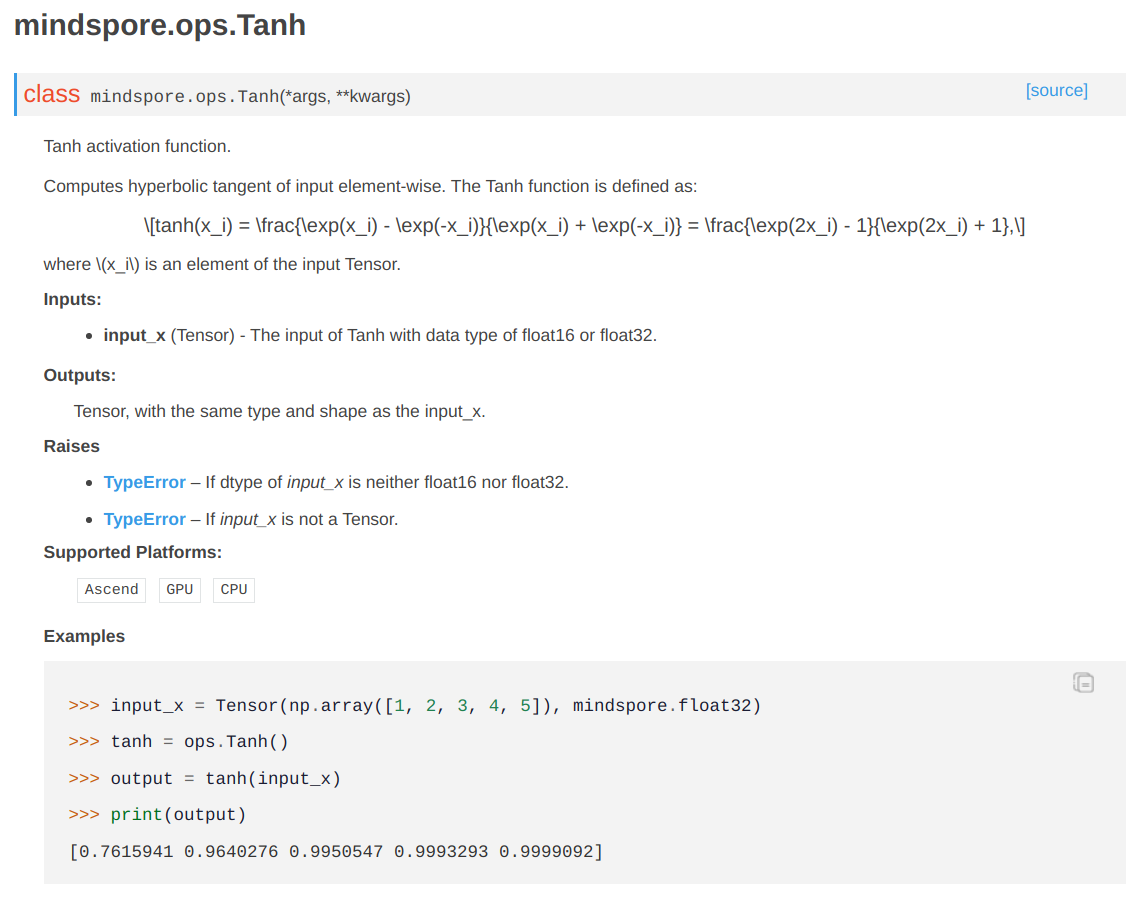

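The official documentation shows that this activation function is supported on three platforms: Ascend, GPU, and CPU.

So let us test this function with an example that runs on the CPU: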

# activation.py
from mindspore import context
# Run in graph mode on the CPU backend
context.set_context(mode=context.GRAPH_MODE, device_target="CPU")
import mindspore as ms
from mindspore import Tensor, ops
import numpy as np
from tabulate import tabulate

def _tanh(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    for x in l:
        th = (np.exp(2*x)-1)/(np.exp(2*x)+1)
        new_l.append(th)
    return new_l

x = np.array([1,2,3,4,5]).astype(np.float32)
input_x = Tensor(x, ms.float32)
# MindSpore operator versus the hand-written reference
tanh = ops.Tanh()
output = tanh(input_x)
_output = _tanh(x)

# Format output information
header = ['Mindspore Output', 'Equation Output']
table = []
for i in range(len(x)):
    table.append((output[i], _output[i]))
print(tabulate(table, headers=header, tablefmt='fancy_grid'))

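In this test case, the names with a leading underscore belong to our own loop-based implementation of the activation function, while MindSpore's activation functions all live under ops. Finally, we use tabulate to tidy up the printed output. The result of running the script is shown below: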

dechin@ubuntu2004:~/projects/gitlab/dechin/src/mindspore$ sudo docker run --rm -v /dev/shm:/dev/shm -v /home/dechin/projects/gitlab/dechin/src/mindspore/:/home/ --runtime=nvidia --privileged=true swr.cn-south-1.myhuaweicloud.com/mindspore/mindspore-gpu:1.2.0 /bin/bash -c "cd /home && python -m pip install tabulate && python activation.py"

Looking in indexes: //mirrors.aliyun.com/pypi/simple/

Collecting tabulate

Downloading //mirrors.aliyun.com/pypi/packages/ca/80/7c0cad11bd99985cfe7c09427ee0b4f9bd6b048bd13d4ffb32c6db237dfb/tabulate-0.8.9-py3-none-any.whl

Installing collected packages: tabulate

WARNING: The script tabulate is installed in '/usr/local/python-3.7.5/bin' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Successfully installed tabulate-0.8.9

WARNING: You are using pip version 19.2.3, however version 21.1.2 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

╒════════════════════╤═══════════════════╕
│   Mindspore Output │   Equation Output │
╞════════════════════╪═══════════════════╡
│          0.7615942 │          0.761594 │
├────────────────────┼───────────────────┤
│          0.9640276 │          0.964028 │
├────────────────────┼───────────────────┤
│          0.9950548 │          0.995055 │
├────────────────────┼───────────────────┤
│          0.9993293 │          0.999329 │
├────────────────────┼───────────────────┤
│          0.9999092 │          0.999909 │
╘════════════════════╧═══════════════════╛

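The official container image lacks the tabulate library, and I do not want to keep a long history of stopped containers after local runs, so every container is started with the --rm option. The laziest approach is therefore to add a pip install command for the Python library to the container run command, which works because the library is small and installs quickly. Readers who want something more reusable can look at docker's restart command, or install the required Python libraries on top of the original image and commit the result to a new image; see, for example, this blog post.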
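A side note on the hand-written reference formula \((e^{2x}-1)/(e^{2x}+1)\) used in the comparison: it works for the inputs 1 to 5, but the exponential overflows for large inputs. The sketch below, written with the standard library only, contrasts it with an algebraically equivalent form whose exponent is never positive. The names `naive_tanh` and `stable_tanh` are illustrative, and nothing here is a claim about how MindSpore computes Tanh internally.

```python
import math


def naive_tanh(x: float) -> float:
    """The formula from the test script: (e^{2x} - 1) / (e^{2x} + 1)."""
    e2x = math.exp(2 * x)
    return (e2x - 1) / (e2x + 1)


def stable_tanh(x: float) -> float:
    """Same function, rewritten so that the exponent is never positive."""
    e = math.exp(-2 * abs(x))  # always in (0, 1], so it cannot overflow
    return math.copysign((1 - e) / (1 + e), x)


# The two forms agree on the inputs used in the test script.
for x in (1.0, 2.0, 3.0, 4.0, 5.0):
    assert math.isclose(naive_tanh(x), stable_tanh(x), rel_tol=1e-12)

# For a large input the naive form fails, while the stable one saturates.
try:
    naive_tanh(400.0)
except OverflowError:
    print("naive_tanh(400.0) overflowed")
print(stable_tanh(400.0))
```

With NumPy arrays the same overflow usually shows up as a warning and a `nan` result rather than an exception, so it is easy to miss.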

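Softmax Activation Function

Softmax is an exponential normalization function. It is commonly used in classifiers that decide which class a given value belongs to: the higher the value, the larger the resulting probability. It plays an important role in multi-class classifiers. Its plot is shown in the figure below:

The Softmax function has the form:

\[\mathrm{softmax}(x_i)=\frac{e^{x_i}}{\sum_{j}e^{x_j}}\]

The code that generates the plot of this function is shown below: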

import matplotlib.pyplot as plt
import numpy as np

def _softmax(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    # First pass: exponentiate every element
    for x in l:
        th = np.exp(x)
        new_l.append(th)
    # Second pass: normalize so that the outputs sum to 1
    sum_th = sum(new_l)
    for i in range(len(l)):
        new_l[i] /= sum_th
    return new_l

plt.figure()
plt.title('Softmax')
plt.xlabel('x')
plt.ylabel('y')
ax = plt.gca()
ax.spines['right'].set_color('none')
ax.spines['top'].set_color('none')
ax.xaxis.set_ticks_position('bottom')
ax.yaxis.set_ticks_position('left')
ax.spines['bottom'].set_position(('data', 0))
ax.spines['left'].set_position(('data', 0))
x = np.arange(-6,6,0.05)
y = _softmax(x)
# Draw and save the curve, as in the other plotting scripts
plt.plot(x,y)
plt.savefig('function.png')

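In this code, apart from swapping in the softmax computation, the y-axis range limit has also been removed. The corresponding Softmax call in MindSpore is shown below; as before, the Softmax function is obtained from ops: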

# activation.py
from mindspore import context
# Run in graph mode on the CPU backend
context.set_context(mode=context.GRAPH_MODE, device_target="CPU")
import mindspore as ms
from mindspore import Tensor, ops
import numpy as np
from tabulate import tabulate

def _softmax(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    for x in l:
        th = np.exp(x)
        new_l.append(th)
    sum_th = sum(new_l)
    for i in range(len(l)):
        new_l[i] /= sum_th
    return new_l

x = np.array([1, 2, 3, 4, 5]).astype(np.float32)
input_x = Tensor(x, ms.float32)
# MindSpore operator versus the hand-written reference
softmax = ops.Softmax()
output = softmax(input_x)
_output = _softmax(x)

# Format output information
header = ['Mindspore Output', 'Equation Output']
table = []
for i in range(len(x)):
    table.append((output[i], _output[i]))
print(tabulate(table, headers=header, tablefmt='fancy_grid'))

As before, we run this MindSpore example in a docker container and obtain the comparison:

dechin@ubuntu2004:~/projects/gitlab/dechin/src/mindspore$ sudo docker run --rm -v /dev/shm:/dev/shm -v /home/dechin/projects/gitlab/dechin/src/mindspore/:/home/ --runtime=nvidia --privileged=true swr.cn-south-1.myhuaweicloud.com/mindspore/mindspore-gpu:1.2.0 /bin/bash -c "cd /home && python -m pip install tabulate && python activation.py"

Looking in indexes: //mirrors.aliyun.com/pypi/simple/

Collecting tabulate

Downloading //mirrors.aliyun.com/pypi/packages/ca/80/7c0cad11bd99985cfe7c09427ee0b4f9bd6b048bd13d4ffb32c6db237dfb/tabulate-0.8.9-py3-none-any.whl

Installing collected packages: tabulate

WARNING: The script tabulate is installed in '/usr/local/python-3.7.5/bin' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Successfully installed tabulate-0.8.9

WARNING: You are using pip version 19.2.3, however version 21.1.2 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

╒════════════════════╤═══════════════════╕
│   Mindspore Output │   Equation Output │
╞════════════════════╪═══════════════════╡
│        0.011656228 │         0.0116562 │
├────────────────────┼───────────────────┤
│        0.031684916 │         0.0316849 │
├────────────────────┼───────────────────┤
│         0.08612853 │         0.0861285 │
├────────────────────┼───────────────────┤
│         0.23412165 │          0.234122 │
├────────────────────┼───────────────────┤
│         0.63640857 │          0.636409 │
╘════════════════════╧═══════════════════╛

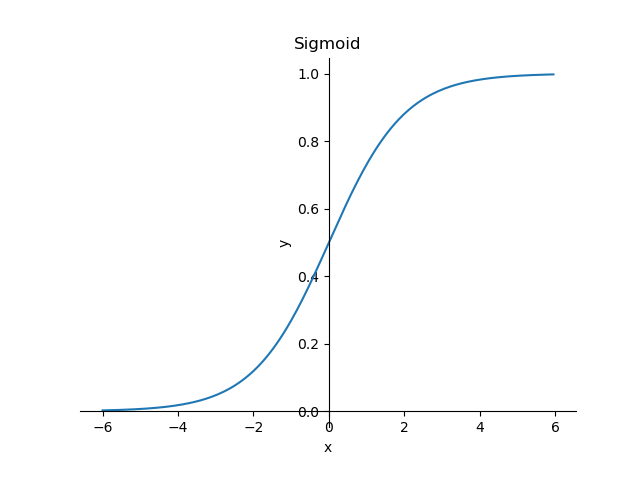
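The hand-written Softmax above exponentiates the raw inputs directly, which can overflow when the inputs are large. A common remedy is to subtract the maximum before exponentiating, which leaves the result mathematically unchanged. The following standard-library sketch illustrates that idea; the function name `softmax` and the shifted test inputs are assumptions for this example, not part of the original scripts.

```python
import math


def softmax(values):
    """Softmax with the maximum subtracted first to avoid overflow."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]  # every exponent is <= 0
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([1.0, 2.0, 3.0, 4.0, 5.0])
print([round(p, 7) for p in probs])
print(sum(probs))

# Shifting every input by the same constant does not change the result,
# and the shifted inputs would overflow a direct math.exp call.
shifted = softmax([1001.0, 1002.0, 1003.0, 1004.0, 1005.0])
print(shifted == probs)
```

The values for the inputs 1 to 5 should agree with the comparison table above to the printed precision.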

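Sigmoid Activation Function

Different activation functions suit different scenarios. For example, Softmax is better suited to multi-class classifiers with exactly one correct answer, while Sigmoid suits scenarios with several correct answers, where it gives a probability for each answer. In terms of its plot, the Sigmoid activation function looks somewhat like the Tanh activation function described in an earlier section:

Its function form is:

\[\mathrm{sigmoid}(x)=\frac{1}{1+e^{-x}}\]

The Python code that generates the plot of this function is shown below: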
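The visual similarity to Tanh is not a coincidence: the two functions are related by the identity \(\mathrm{sigmoid}(x)=\frac{1}{2}\left(1+\tanh\frac{x}{2}\right)\), so Sigmoid is a shifted and rescaled Tanh. A quick numerical check, using only the standard library and the illustrative names `sigmoid` and `sigmoid_via_tanh`:

```python
import math


def sigmoid(x: float) -> float:
    """Direct definition: 1 / (1 + e^{-x})."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_via_tanh(x: float) -> float:
    """Equivalent form: (1 + tanh(x / 2)) / 2."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))


for x in (-6.0, -1.0, 0.0, 1.0, 6.0):
    print(f"x={x:+.1f}  direct={sigmoid(x):.7f}  via_tanh={sigmoid_via_tanh(x):.7f}")
```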

import matplotlib.pyplot as plt
import numpy as np

def _sigmoid(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    for x in l:
        # sigmoid(x) = 1 / (1 + e^{-x})
        th = 1/(np.exp(-x)+1)
        new_l.append(th)
    return new_l

plt.figure()
plt.title('Sigmoid')
plt.xlabel('x')
plt.ylabel('y')
# Hide the top and right spines and move the other two to the origin
ax = plt.gca()
ax.spines['right'].set_color('none')
ax.spines['top'].set_color('none')
ax.xaxis.set_ticks_position('bottom')
ax.yaxis.set_ticks_position('left')
ax.spines['bottom'].set_position(('data', 0))
ax.spines['left'].set_position(('data', 0))
x = np.arange(-6,6,0.05)
y = _sigmoid(x)
plt.plot(x,y)
plt.savefig('function.png')

Below we run the Sigmoid activation function with MindSpore and compare it against our own Sigmoid implementation:

# activation.py
from mindspore import context
# Run in graph mode on the CPU backend
context.set_context(mode=context.GRAPH_MODE, device_target="CPU")
import mindspore as ms
from mindspore import Tensor, ops
import numpy as np
from tabulate import tabulate

def _sigmoid(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    for x in l:
        th = 1/(np.exp(-x)+1)
        new_l.append(th)
    return new_l

x = np.array([1, 2, 3, 4, 5]).astype(np.float32)
input_x = Tensor(x, ms.float32)
# MindSpore operator versus the hand-written reference
sigmoid = ops.Sigmoid()
output = sigmoid(input_x)
_output = _sigmoid(x)

# Format output information
header = ['Mindspore Output', 'Equation Output']
table = []
for i in range(len(x)):
    table.append((output[i], _output[i]))
print(tabulate(table, headers=header, tablefmt='fancy_grid'))

Running it in a Docker container produces the following output (please ignore the redundant messages printed while tabulate is installed):

dechin@ubuntu2004:~/projects/gitlab/dechin/src/mindspore$ sudo docker run --rm -v /dev/shm:/dev/shm -v /home/dechin/projects/gitlab/dechin/src/mindspore/:/home/ --runtime=nvidia --privileged=true swr.cn-south-1.myhuaweicloud.com/mindspore/mindspore-gpu:1.2.0 /bin/bash -c "cd /home && python -m pip install tabulate && python activation.py"

Looking in indexes: //mirrors.aliyun.com/pypi/simple/

Collecting tabulate

Downloading //mirrors.aliyun.com/pypi/packages/ca/80/7c0cad11bd99985cfe7c09427ee0b4f9bd6b048bd13d4ffb32c6db237dfb/tabulate-0.8.9-py3-none-any.whl

Installing collected packages: tabulate

WARNING: The script tabulate is installed in '/usr/local/python-3.7.5/bin' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Successfully installed tabulate-0.8.9

WARNING: You are using pip version 19.2.3, however version 21.1.2 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

╒════════════════════╤═══════════════════╕
│   Mindspore Output │   Equation Output │
╞════════════════════╪═══════════════════╡
│          0.7310586 │          0.731059 │
├────────────────────┼───────────────────┤
│          0.8807971 │          0.880797 │
├────────────────────┼───────────────────┤
│         0.95257413 │          0.952574 │
├────────────────────┼───────────────────┤
│          0.9820138 │          0.982014 │
├────────────────────┼───────────────────┤
│          0.9933072 │          0.993307 │
╘════════════════════╧═══════════════════╛

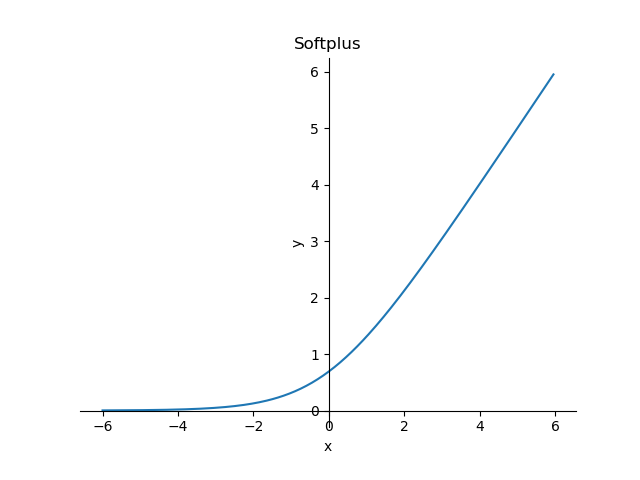
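The difference between "one correct answer" and "several correct answers" becomes visible when both functions are applied to the same scores. In the sketch below the three scores are hypothetical values chosen for illustration, and both helper functions are plain standard-library implementations rather than MindSpore calls.

```python
import math

# Hypothetical raw scores for three labels (not taken from the article).
scores = [2.0, 1.5, -1.0]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


independent = [sigmoid(s) for s in scores]  # each label judged on its own
exclusive = softmax(scores)                 # labels compete for one unit of mass

print("sigmoid:", [round(p, 4) for p in independent], "sum =", round(sum(independent), 4))
print("softmax:", [round(p, 4) for p in exclusive], "sum =", round(sum(exclusive), 4))
```

The Softmax outputs always sum to 1, so raising one label's probability lowers the others. The Sigmoid outputs are independent, which is why two labels can both exceed 0.5 at the same time.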

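Softplus Activation Function

Softplus is an activation function whose properties are fairly similar to those of Softmax. The figure below sketches its shape:

Its function form is:

\[\mathrm{softplus}(x)=\log(1+e^{x})\]

We can clearly see that for large \(x\) Softplus grows linearly rather than exponentially (\(\lim\limits_{x\to+\infty}\left[\mathrm{softplus}(x)-x\right]=0\)), so it is gentler than Softmax. The Python code that generates the plot of this function is shown below: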

import matplotlib.pyplot as plt
import numpy as np

def _softplus(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    for x in l:
        # softplus(x) = log(1 + e^x)
        th = np.log(1+np.exp(x))
        new_l.append(th)
    return new_l

plt.figure()
plt.title('Softplus')
plt.xlabel('x')
plt.ylabel('y')
# Hide the top and right spines and move the other two to the origin
ax = plt.gca()
ax.spines['right'].set_color('none')
ax.spines['top'].set_color('none')
ax.xaxis.set_ticks_position('bottom')
ax.yaxis.set_ticks_position('left')
ax.spines['bottom'].set_position(('data', 0))
ax.spines['left'].set_position(('data', 0))
x = np.arange(-6,6,0.05)
y = _softplus(x)
plt.plot(x,y)
plt.savefig('function.png')

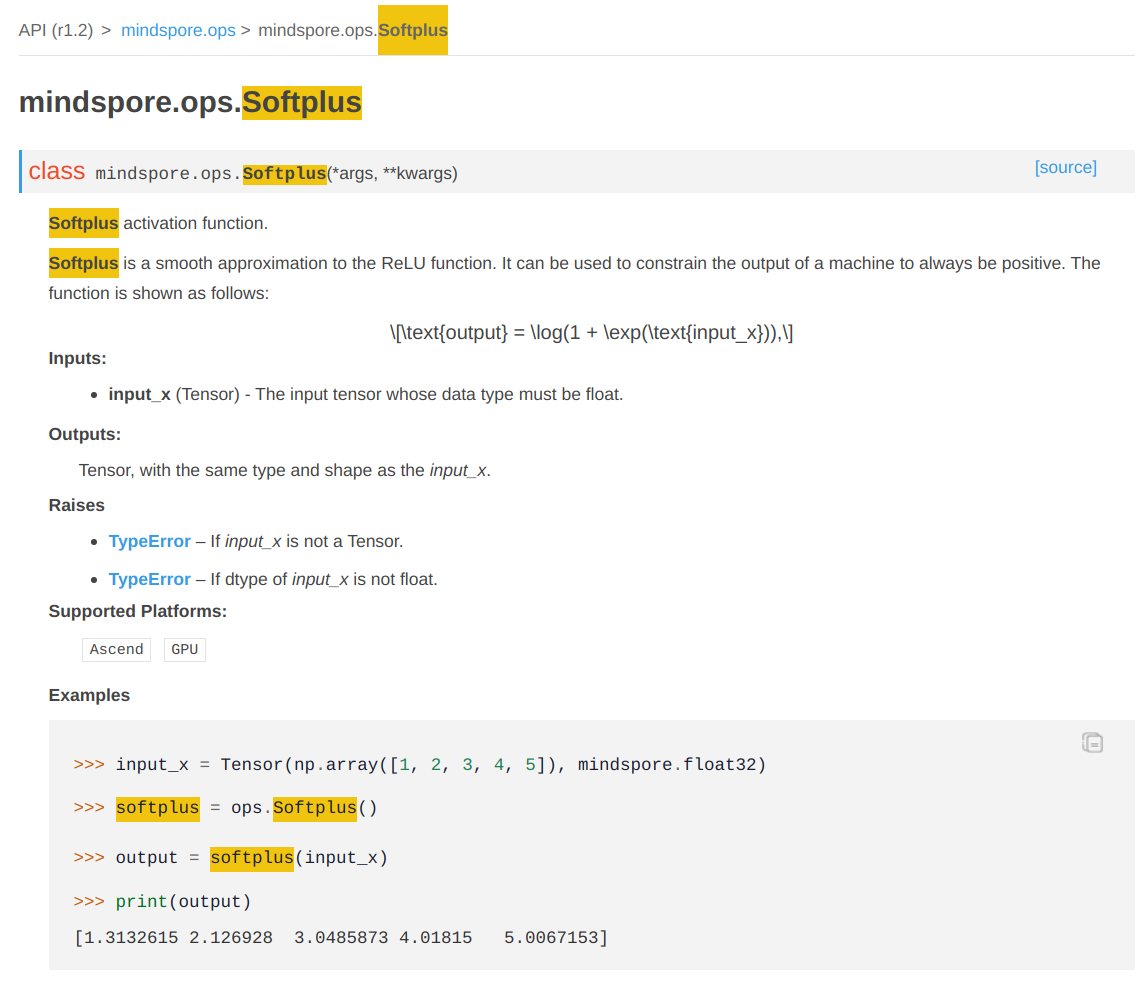
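The linear growth for large inputs can be made explicit by rewriting \(\log(1+e^{x})\) as \(\max(x,0)+\log(1+e^{-|x|})\), which is the same function but never exponentiates a positive number. The sketch below uses only the standard library; the function name `softplus` and the sample inputs are chosen for illustration.

```python
import math


def softplus(x: float) -> float:
    """log(1 + e^x), computed as max(x, 0) + log1p(e^{-|x|})."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


# The gap softplus(x) - x shrinks toward 0 as x grows.
for x in (1.0, 5.0, 10.0, 20.0, 800.0):
    gap = softplus(x) - x
    print(f"x={x:6.1f}  softplus(x)={softplus(x):.6f}  gap={gap:.3e}")
```

In this form the value for x = 800 is simply 800, whereas evaluating e^x directly at that point would overflow.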

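Likewise, we can look at how the Softplus activation function is used in MindSpore. One point needs attention here, and the official documentation notes it as well:

This activation function is currently implemented only for GPU and Ascend, so here we change the device_target field in context to GPU before running it, which differs from the previous activation functions: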

# activation.py
from mindspore import context
# Softplus is run on the GPU backend here
context.set_context(mode=context.GRAPH_MODE, device_target="GPU")
import mindspore as ms
from mindspore import Tensor, ops
import numpy as np
from tabulate import tabulate

def _softplus(l) -> list:
    '''Self defined equation for evaluating.
    '''
    new_l = []
    for x in l:
        th = np.log(1+np.exp(x))
        new_l.append(th)
    return new_l

x = np.array([1, 2, 3, 4, 5]).astype(np.float32)
input_x = Tensor(x, ms.float32)
# MindSpore operator versus the hand-written reference
softplus = ops.Softplus()
output = softplus(input_x)
_output = _softplus(x)

# Format output information
header = ['Mindspore Output', 'Equation Output']
table = []
for i in range(len(x)):
    table.append((output[i], _output[i]))
print(tabulate(table, headers=header, tablefmt='fancy_grid'))

The result of running it in a docker container is as follows:

dechin@ubuntu2004:~/projects/gitlab/dechin/src/mindspore$ sudo docker run --rm -v /dev/shm:/dev/shm -v /home/dechin/projects/gitlab/dechin/src/mindspore/:/home/ --runtime=nvidia --privileged=true swr.cn-south-1.myhuaweicloud.com/mindspore/mindspore-gpu:1.2.0 /bin/bash -c "cd /home && python -m pip install tabulate && python activation.py"

Looking in indexes: //mirrors.aliyun.com/pypi/simple/

Collecting tabulate

Downloading //mirrors.aliyun.com/pypi/packages/ca/80/7c0cad11bd99985cfe7c09427ee0b4f9bd6b048bd13d4ffb32c6db237dfb/tabulate-0.8.9-py3-none-any.whl

Installing collected packages: tabulate

WARNING: The script tabulate is installed in '/usr/local/python-3.7.5/bin' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Successfully installed tabulate-0.8.9

WARNING: You are using pip version 19.2.3, however version 21.1.2 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

╒════════════════════╤═══════════════════╕
│   Mindspore Output │   Equation Output │
╞════════════════════╪═══════════════════╡
│          1.3132616 │           1.31326 │
├────────────────────┼───────────────────┤
│           2.126928 │           2.12693 │
├────────────────────┼───────────────────┤
│          3.0485873 │           3.04859 │
├────────────────────┼───────────────────┤
│            4.01815 │           4.01815 │
├────────────────────┼───────────────────┤
│          5.0067153 │           5.00672 │
╘════════════════════╧═══════════════════╛

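One point deserves special mention: when MindSpore runs on the GPU it first needs some time to compile, so it is not as fast as the CPU version unless the data volume is large enough to saturate the GPU's FLOPS.

Summary

This article covered four activation functions: Softplus, Sigmoid, Softmax, and Tanh. In machine learning, activation functions mainly play a decision-making role and are valuable in classifiers. Besides these four, MindSpore also implements the Softsign activation function, but at present it can only be used on the Ascend platform. Of the others, Softplus runs only on GPU and Ascend, and the rest are supported on all platforms (CPU, GPU, and Ascend).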
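For readers without access to an Ascend device, the usual textbook definition of Softsign, \(x/(1+|x|)\), is easy to evaluate by hand. The sketch below is a plain-Python reference for that definition only; it has not been compared against MindSpore's Softsign operator, and the function name `softsign` is chosen for this illustration.

```python
def softsign(x: float) -> float:
    """Textbook Softsign: x / (1 + |x|), with values in (-1, 1)."""
    return x / (1.0 + abs(x))


for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"x={x:+.1f}  softsign(x)={softsign(x):+.4f}")
```

Like Tanh it is bounded by -1 and 1, but it approaches those bounds polynomially rather than exponentially.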

Copyright Notice

This article was first published at: //www.cnblogs.com/dechinphy/p/activate.html

Author ID: DechinPhy

For more original articles, see: //www.cnblogs.com/dechinphy/

Tip link: //www.cnblogs.com/dechinphy/gallery/image/379634.html

Tencent Cloud column mirror: //cloud.tencent.com/developer/column/91958