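Greenplum 6.14 and GPCC 6.4 installation in detail

I have recently been upgrading and reworking our Greenplum (GP) clusters, so this post writes up what I did. It covers installing GP 6.14 and is divided into the installation and initialization of GP, GPCC and PXF. This article was written by the cnblogs author 一寸HUI; personal blog: //www.cnblogs.com/zsql/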

1. Pre-installation preparation

1.1 Machine selection

1.2 Network parameter tuning

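echo "10000 65535" > /proc/sys/net/ipv4/ip_local_port_range # net.ipv4.ip_local_port_range: the range of local TCP/UDP ports. Programs on the system pick a port from this range as the local (source) port when connecting to a destination port.

echo 1024 > /proc/sys/net/core/somaxconn # net.core.somaxconn: the upper limit on the accept backlog, i.e. how many completed connections a listening server can queue before accepting them. The default is 128; 1024 is recommended.

echo 16777216 > /proc/sys/net/core/rmem_max # net.core.rmem_max: the maximum socket receive buffer size in bytes. The default is 229376; 16777216 is recommended.

echo 16777216 > /proc/sys/net/core/wmem_max # net.core.wmem_max: the maximum socket send buffer size in bytes. The default is 229376; 16777216 is recommended.

echo "4096 87380 16777216" > /proc/sys/net/ipv4/tcp_rmem # net.ipv4.tcp_rmem: the TCP read buffer size as three values: minimum, default and maximum. The default is "4096 87380 6291456"; "4096 87380 16777216" is recommended.

echo "4096 65536 16777216" > /proc/sys/net/ipv4/tcp_wmem # net.ipv4.tcp_wmem: the TCP write buffer size as three values: minimum, default and maximum. The default is "4096 16384 4194304"; "4096 65536 16777216" is recommended.

echo 360000 > /proc/sys/net/ipv4/tcp_max_tw_buckets # net.ipv4.tcp_max_tw_buckets: the maximum number of TIME_WAIT sockets the system keeps at the same time. The default is 2048; 360000 is recommended.

Reference: //support.huaweicloud.com/tngg-kunpengdbs/kunpenggreenplum_05_0011.html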
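The echo commands above only change the running kernel and are lost on reboot. A minimal sketch for persisting them, assuming a sysctl drop-in file. The function name write_net_sysctl and the file name 90-greenplum-net.conf are hypothetical choices, not from the original. Section 1.4 below sets net.core.rmem_max and net.core.wmem_max to 2097152 instead, so keep only one of the two values on a given host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the network settings from section 1.2 to a sysctl drop-in.
# The default target path is an assumption; pass another path as $1 (e.g. for testing).
write_net_sysctl() {
  local out="${1:-/etc/sysctl.d/90-greenplum-net.conf}"
  cat > "$out" <<'EOF'
net.ipv4.ip_local_port_range = 10000 65535
net.core.somaxconn = 1024
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
net.ipv4.tcp_rmem = 4096 87380 16777216
net.ipv4.tcp_wmem = 4096 65536 16777216
net.ipv4.tcp_max_tw_buckets = 360000
EOF
}
```

Usage, as root: `write_net_sysctl && sysctl --system`.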

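1.3 Disk and I/O tuning

mount -o rw,nodev,noatime,nobarrier,inode64 /dev/dfa /data # mount the data disk

/sbin/blockdev --setra 16384 /dev/dfa # set the read-ahead value (in 512-byte sectors) to reduce disk seeks and application I/O wait, improving read performance

echo deadline > /sys/block/dfa/queue/scheduler # set the I/O scheduler; deadline suits Greenplum workloads better

grubby --update-kernel=ALL --args="elevator=deadline" # make deadline the default scheduler at boot (takes effect after a reboot)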
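To check that these settings took effect: the active scheduler is the name shown in square brackets in the sysfs scheduler file, and `blockdev --getra` prints the current read-ahead. A small sketch; the function name active_scheduler is hypothetical.

```shell
#!/usr/bin/env bash
# Print the active I/O scheduler, i.e. the name inside [...] of the sysfs file,
# for example "noop [deadline] cfq" -> "deadline". Reads the file content from stdin.
active_scheduler() {
  sed -n 's/.*\[\([^]]*\)].*/\1/p'
}
```

For example, `active_scheduler < /sys/block/dfa/queue/scheduler` should print deadline, and `/sbin/blockdev --getra /dev/dfa` should print 16384. On kernels that use the multi-queue block layer the scheduler is called mq-deadline instead. The blockdev read-ahead value is not persistent, so it has to be reapplied at boot.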

vim /etc/security/limits.conf # configure the open-file (nofile) and process (nproc) limits

# add the following lines

* soft nofile 65536

* hard nofile 65536

* soft nproc 131072

* hard nproc 131072

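Then edit /etc/security/limits.d/20-nproc.conf (on RHEL/CentOS 7 its values override the nproc setting in limits.conf):

# add the following

* soft nproc 131072

root soft nproc unlimited

Reference: //docs-cn.greenplum.org/v6/best_practices/sysconfig.html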
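Editing limits.conf by hand on many hosts is error-prone. A minimal sketch of an idempotent version, assuming it is run as root on each host; append_once and apply_gp_limits are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Append a line to a file unless an identical line already exists,
# so the script can safely be re-run on every host.
append_once() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Hypothetical helper: add the limits.conf entries listed in section 1.3.
apply_gp_limits() {
  local file="${1:-/etc/security/limits.conf}"
  local entry
  for entry in '* soft nofile 65536' '* hard nofile 65536' \
               '* soft nproc 131072' '* hard nproc 131072'; do
    append_once "$file" "$entry"
  done
}
```

Usage: `apply_gp_limits` (defaults to /etc/security/limits.conf); the 20-nproc.conf lines can be added the same way with `append_once`. PAM applies the limits at login, so log in again as gpadmin and check `ulimit -n` and `ulimit -u`.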

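1.4 Kernel parameter tuning

Edit /etc/sysctl.conf (vim /etc/sysctl.conf) and reload the parameters with sysctl -p when done. Note: adjust the values below to the host's actual memory.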

# kernel.shmall = _PHYS_PAGES / 2 # See Shared Memory Pages # shared memory

kernel.shmall = 4000000000

# kernel.shmmax = kernel.shmall * PAGE_SIZE # shared memory

kernel.shmmax = 500000000

kernel.shmmni = 4096

vm.overcommit_memory = 2 # See Segment Host Memory # host memory

vm.overcommit_ratio = 95 # See Segment Host Memory # host memory

net.ipv4.ip_local_port_range = 10000 65535 # See Port Settings # port settings

kernel.sem = 500 2048000 200 40960

kernel.sysrq = 1

kernel.core_uses_pid = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.msgmni = 2048

net.ipv4.tcp_syncookies = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_max_syn_backlog = 4096

net.ipv4.conf.all.arp_filter = 1

net.core.netdev_max_backlog = 10000

net.core.rmem_max = 2097152

net.core.wmem_max = 2097152

vm.swappiness = 10

vm.zone_reclaim_mode = 0

vm.dirty_expire_centisecs = 500

vm.dirty_writeback_centisecs = 100

vm.dirty_background_ratio = 0 # See System Memory # system memory

vm.dirty_ratio = 0

vm.dirty_background_bytes = 1610612736

vm.dirty_bytes = 4294967296

Explanations and calculations for some of the parameters (again, compute them for your actual hardware):

kernel.shmall = _PHYS_PAGES / 2 #echo $(expr $(getconf _PHYS_PAGES) / 2)

kernel.shmmax = kernel.shmall * PAGE_SIZE #echo $(expr $(getconf _PHYS_PAGES) / 2 \* $(getconf PAGE_SIZE))
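The two formulas can be wrapped in a small function. calc_shm is a hypothetical name, and the optional arguments exist only so the arithmetic can be checked without a real host.

```shell
#!/usr/bin/env bash
# Compute kernel.shmall (in pages) and kernel.shmmax (in bytes) with the formulas above:
#   shmall = _PHYS_PAGES / 2,  shmmax = shmall * PAGE_SIZE
# Without arguments the values are read from getconf on the current host.
calc_shm() {
  local phys_pages="${1:-$(getconf _PHYS_PAGES)}"
  local page_size="${2:-$(getconf PAGE_SIZE)}"
  local shmall=$(( phys_pages / 2 ))
  printf 'kernel.shmall = %s\n' "$shmall"
  printf 'kernel.shmmax = %s\n' "$(( shmall * page_size ))"
}
```

For example, `calc_shm 16777216 4096` (exactly 64 GiB of 4 KiB pages) prints kernel.shmall = 8388608 and kernel.shmmax = 34359738368. Run it without arguments on the host and copy the two values into /etc/sysctl.conf.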

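vm.overcommit_memory: the kernel uses this parameter to decide how much memory can be allocated to processes. For Greenplum it should be set to 2.

vm.overcommit_ratio: the percentage of RAM that can be allocated to processes; the rest is left to the operating system. The default on Red Hat is 50; 95 is recommended. # vm.overcommit_ratio = (RAM-0.026*gp_vmem) / RAM, expressed as a percentage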
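The ratio formula depends on gp_vmem. A sketch that evaluates both, assuming the gp_vmem formula from the Greenplum 6 best-practices documentation for hosts with less than 256 GB of RAM, gp_vmem = ((SWAP + RAM) - (7.5 GB + 0.05 * RAM)) / 1.7; please verify it against the docs for your version. The helper name is hypothetical, and the fraction is turned into the whole percentage that vm.overcommit_ratio expects (rounded down).

```shell
#!/usr/bin/env bash
# Hypothetical helper: estimate vm.overcommit_ratio from RAM and swap sizes in GB.
#   gp_vmem = ((SWAP + RAM) - (7.5 + 0.05 * RAM)) / 1.7   (hosts below 256 GB of RAM)
#   ratio   = (RAM - 0.026 * gp_vmem) / RAM, printed as a whole percentage
calc_overcommit_ratio() {
  awk -v ram="$1" -v swap="$2" 'BEGIN {
    gp_vmem = ((swap + ram) - (7.5 + 0.05 * ram)) / 1.7
    ratio = (ram - 0.026 * gp_vmem) / ram
    printf "%d\n", int(ratio * 100)
  }'
}
```

For example, a host with 64 GB of RAM and 8 GB of swap gives `calc_overcommit_ratio 64 8` = 98; the article uses 95.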

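To avoid port conflicts between Greenplum and other applications during initialization, take note of the port range specified by net.ipv4.ip_local_port_range.

When initializing Greenplum with gpinitsystem, do not choose Greenplum database ports inside that range.

For example, if net.ipv4.ip_local_port_range = 10000 65535, set the Greenplum base port numbers to values outside it: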

PORT_BASE = 6000

MIRROR_PORT_BASE = 7000
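A quick check that the chosen ports stay out of the local port range; ports_outside_range is a hypothetical helper. Primary segments on one host use consecutive ports starting at PORT_BASE (mirrors start at MIRROR_PORT_BASE), so with several segments per host pass the highest port as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every given port lies outside the
# local (ephemeral) port range [low, high]; report the first offending port.
ports_outside_range() {
  local low="$1" high="$2"; shift 2
  local port
  for port in "$@"; do
    if (( port >= low && port <= high )); then
      echo "port $port is inside $low-$high" >&2
      return 1
    fi
  done
}
```

Usage: `read -r low high < /proc/sys/net/ipv4/ip_local_port_range; ports_outside_range "$low" "$high" 5432 6000 7000 && echo OK`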

If the system has more than 64 GB of memory, the following settings are recommended:

vm.dirty_background_ratio = 0

vm.dirty_ratio = 0

vm.dirty_background_bytes = 1610612736 # 1.5GB

vm.dirty_bytes = 4294967296 # 4GB

1.5 Java installation

mkdir /usr/local/java

tar -zxvf jdk-8u181-linux-x64.tar.gz -C /usr/local/java

cd /usr/local/java

ln -s jdk1.8.0_181/ jdk

Configure the environment variables: vim /etc/profile # add the following

export JAVA_HOME=/usr/local/java/jdk

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

Then run source /etc/profile and check the installation with java -version and javac -version.

1.6 Firewall and SELinux (Security-Enhanced Linux) checks

Stop and disable the firewall:

systemctl stop firewalld.service

systemctl disable firewalld.service

systemctl status firewalld

Disable SELinux:

Edit /etc/selinux/config and change the following (it takes full effect after a reboot):

SELINUX=disabled
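The same edit can be scripted with sed; disable_selinux_config is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Set SELINUX=disabled in the SELinux config file (default path below).
# The pattern ^SELINUX= does not touch the separate SELINUXTYPE= line.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run it as root; `setenforce 0` then switches the running system to permissive mode until the reboot, and `getenforce` shows the current mode.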

1.7 Other settings

Disable THP (transparent huge pages): grubby --update-kernel=ALL --args="transparent_hugepage=never" # reboot the system after adding the parameter

Set RemoveIPC:

vim /etc/systemd/logind.conf

RemoveIPC=no
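With RemoveIPC=yes, systemd-logind removes a non-system user's System V IPC objects (such as shared memory segments) when that user's last session ends, which can take down a running Greenplum instance owned by gpadmin. A minimal sketch that makes the edit repeatable; set_remove_ipc_no is a hypothetical name, and it assumes the file may contain a commented-out #RemoveIPC= line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ensure "RemoveIPC=no" in logind.conf, replacing an existing
# (possibly commented-out) RemoveIPC line, or appending one if none exists.
set_remove_ipc_no() {
  local conf="${1:-/etc/systemd/logind.conf}"
  if grep -q '^#\?RemoveIPC=' "$conf"; then
    sed -i 's/^#\?RemoveIPC=.*/RemoveIPC=no/' "$conf"
  else
    printf 'RemoveIPC=no\n' >> "$conf"
  fi
}
```

After changing the file, restart the service as root: `systemctl restart systemd-logind`.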

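1.8 Configure the hosts file

vim /etc/hosts # add the IP address and hostname of every host in the cluster

1.9 Create the gpadmin user and group and set up passwordless SSH

Create the user and group (on every node; the first two commands remove any existing gpadmin user and group, and the user must be removed before its primary group):

userdel gpadmin

groupdel gpadmin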

groupadd gpadmin

useradd -g gpadmin -d /apps/gpadmin gpadmin

passwd gpadmin

or: echo 'ow@R99d7' | passwd gpadmin --stdin

Configure passwordless SSH:

ssh-keygen -t rsa

ssh-copy-id lgh1

ssh-copy-id lgh2

ssh-copy-id lgh3

2. Greenplum installation

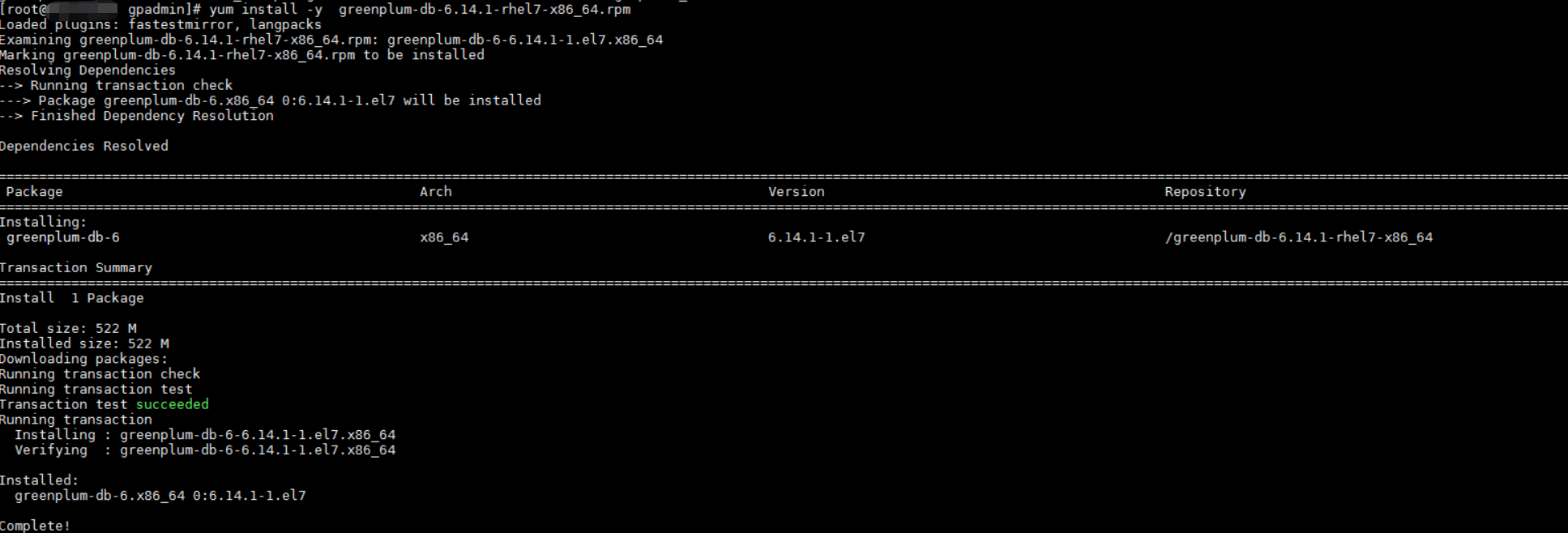

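2.1 Installation

Download: //network.pivotal.io/products/pivotal-gpdb#/releases/837931 # version 6.14.1

Install: yum install -y greenplum-db-6.14.1-rhel7-x86_64.rpm # installs under /usr/local by default; Greenplum needs many dependencies, and installing the RPM this way pulls them in automatically.

After installation the directory /usr/local/greenplum-db appears (a symbolic link to greenplum-db-6.14.1).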

chown -R gpadmin:gpadmin /usr/local/greenplum*

Inspect the installation directory:

[root@lgh1 local]# cd /usr/local/greenplum-db

[root@lgh1 greenplum-db]# ll

total 5548

drwxr-xr-x 7 root root 4096 Mar 30 16:44 bin

drwxr-xr-x 3 root root 21 Mar 30 16:44 docs

drwxr-xr-x 2 root root 58 Mar 30 16:44 etc

drwxr-xr-x 3 root root 19 Mar 30 16:44 ext

-rwxr-xr-x 1 root root 724 Mar 30 16:44 greenplum_path.sh

drwxr-xr-x 5 root root 4096 Mar 30 16:44 include

drwxr-xr-x 6 root root 4096 Mar 30 16:44 lib

drwxr-xr-x 2 root root 20 Mar 30 16:44 libexec

-rw-r--r-- 1 root root 5133247 Feb 23 03:17 open_source_licenses.txt

-rw-r--r-- 1 root root 519852 Feb 23 03:17 open_source_license_VMware_Tanzu_Greenplum_Streaming_Server_1.5.0_GA.txt

drwxr-xr-x 7 root root 135 Mar 30 16:44 pxf

drwxr-xr-x 2 root root 4096 Mar 30 16:44 sbin

drwxr-xr-x 5 root root 49 Mar 30 16:44 share

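2.2 Create the cluster host files

Create two host files, all_hosts and seg_hosts, in $GPHOME or, as the commands below do, in /apps/gpadmin/conf. They are passed as the host-file argument to gpssh, gpscp and similar utilities.

all_hosts: the hostnames or IP addresses of every host in the cluster, including the master, segment and standby hosts.

seg_hosts: the hostnames or IP addresses of all segment hosts.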
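A sketch that writes both files with one hostname per line; make_host_files is a hypothetical helper. In this article lgh1 is the master and lgh2 and lgh3 are the segment hosts (lgh2 later also hosts the standby).

```shell
#!/usr/bin/env bash
# Hypothetical helper: write all_hosts (master + segment hosts) and
# seg_hosts (segment hosts only), one hostname per line.
# Usage: make_host_files DIR MASTER SEG_HOST...
make_host_files() {
  local dir="$1" master="$2"; shift 2
  mkdir -p "$dir"
  printf '%s\n' "$master" "$@" > "$dir/all_hosts"
  printf '%s\n' "$@" > "$dir/seg_hosts"
}
```

For this cluster: `make_host_files /apps/gpadmin/conf lgh1 lgh2 lgh3`. If the standby runs on a host without segments, add it to all_hosts as well.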

2.3 Configure environment variables

vim ~/.bashrc # as gpadmin, add the following lines

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/apps/data1/master/gpseg-1

export PGPORT=5432

# then apply it

source ~/.bashrc

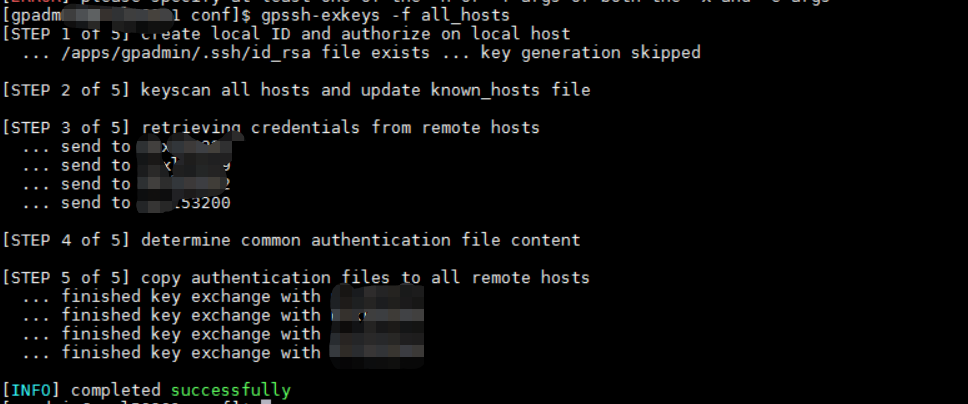

2.4 Use gpssh-exkeys to set up passwordless SSH between all hosts (n-to-n)

#gpssh-exkeys -f all_hosts

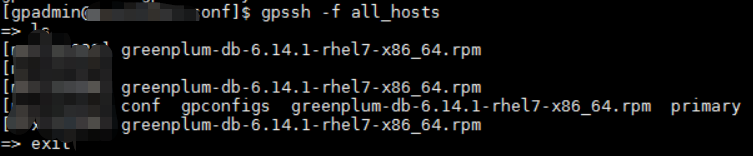

2.5 Verify gpssh

#gpssh -f all_hosts

2.6 Sync the master's Greenplum installation to every host

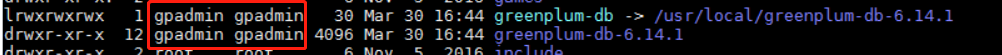

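The main task here is to copy /usr/local/greenplum-db to the other hosts. Any method works as long as every machine ends up in the same state (check with ll /usr/local).

You can use scp, or the Greenplum utilities (which require the root account). Note: file permissions are involved here, so just do the copy as root and finally chown the files to gpadmin. The method below probably cannot be used as-is unless /usr/local on every host is owned by gpadmin.

Package: cd /usr/local && tar -cf /usr/local/gp6.tar greenplum-db-6.14.1

Distribute: gpscp -f /apps/gpadmin/conf/all_hosts /usr/local/gp6.tar =:/usr/local

Then extract it on each host and create the symbolic link: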

gpssh -f /apps/gpadmin/conf/all_hosts

cd /usr/local

tar -xf gp6.tar

ln -s greenplum-db-6.14.1 greenplum-db

chown -R gpadmin:gpadmin /usr/local/greenplum*

2.7 Create the master and segment data directories

# create the master directory

mkdir -p /apps/data1/master

chown -R gpadmin:gpadmin /apps/data1/master

# create the segment directories

gpssh -f /apps/gpadmin/conf/seg_hosts -e 'mkdir -p /apps/data1/primary'

gpssh -f /apps/gpadmin/conf/seg_hosts -e 'mkdir -p /apps/data1/mirror'

gpssh -f /apps/gpadmin/conf/seg_hosts -e 'chown -R gpadmin:gpadmin /apps/data1/'

Any method works (you can also create them in batch with a terminal tool such as SecureCRT); just make sure every host has the same directories and that all of them are owned by gpadmin:gpadmin.

2.8 Initialize the cluster

Create a directory and copy the configuration template:

mkdir -p ~/gpconfigs

cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config ~/gpconfigs/gpinitsystem_config

Then edit ~/gpconfigs/gpinitsystem_config; its non-empty, non-comment lines look like this:

[gpadmin@lgh1 gpconfigs]$ cat gpinitsystem_config | egrep -v '^$|#'

ARRAY_NAME="Greenplum Data Platform"

SEG_PREFIX=gpseg

PORT_BASE=6000

declare -a DATA_DIRECTORY=(/apps/data1/primary)

MASTER_HOSTNAME=master_hostname

MASTER_DIRECTORY=/apps/data1/master

MASTER_PORT=5432

TRUSTED_SHELL=ssh

CHECK_POINT_SEGMENTS=8

ENCODING=UNICODE

MIRROR_PORT_BASE=7000

declare -a MIRROR_DATA_DIRECTORY=(/apps/data1/mirror)

DATABASE_NAME=gp_test
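The same edits can be scripted instead of made in vim. A minimal sketch; set_conf is a hypothetical helper that replaces an existing KEY= line or appends one, and it assumes values contain no | or & characters (both are special in the sed replacement). Note that MASTER_HOSTNAME=master_hostname above is a placeholder; in the run below the master is lgh1.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY=VALUE in a shell-style config file such as
# gpinitsystem_config. Replaces an existing uncommented "KEY=" line, else appends.
# Assumes VALUE contains no '|' or '&' (special characters in the sed replacement).
set_conf() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

For example: `set_conf ~/gpconfigs/gpinitsystem_config MASTER_HOSTNAME lgh1`, or `set_conf ~/gpconfigs/gpinitsystem_config 'declare -a DATA_DIRECTORY' '(/apps/data1/primary)'`.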

Initialize the cluster with:

gpinitsystem -c ~/gpconfigs/gpinitsystem_config -h ~/conf/seg_hosts

The output is as follows:

[gpadmin@lgh1 ~]$ gpinitsystem -c ~/gpconfigs/gpinitsystem_config -h ~/conf/seg_hosts

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Checking configuration parameters, please wait...

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Reading Greenplum configuration file /apps/gpadmin/gpconfigs/gpinitsystem_config

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Locale has not been set in /apps/gpadmin/gpconfigs/gpinitsystem_config, will set to default value

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Locale set to en_US.utf8

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-MASTER_MAX_CONNECT not set, will set to default value 250

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Checking configuration parameters, Completed

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Commencing multi-home checks, please wait...

..

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Configuring build for standard array

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Commencing multi-home checks, Completed

20210330:17:37:56:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Building primary segment instance array, please wait...

..

20210330:17:37:57:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Building group mirror array type , please wait...

..

20210330:17:37:58:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Checking Master host

20210330:17:37:58:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Checking new segment hosts, please wait...

....

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Checking new segment hosts, Completed

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Greenplum Database Creation Parameters

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:---------------------------------------

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master Configuration

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:---------------------------------------

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master instance name = Greenplum Data Platform

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master hostname = lgh1

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master port = 5432

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master instance dir = /apps/data1/master/gpseg-1

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master LOCALE = en_US.utf8

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Greenplum segment prefix = gpseg

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master Database = gpebusiness

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master connections = 250

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master buffers = 128000kB

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Segment connections = 750

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Segment buffers = 128000kB

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Checkpoint segments = 8

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Encoding = UNICODE

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Postgres param file = Off

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Initdb to be used = /usr/local/greenplum-db-6.14.1/bin/initdb

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-GP_LIBRARY_PATH is = /usr/local/greenplum-db-6.14.1/lib

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-HEAP_CHECKSUM is = on

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-HBA_HOSTNAMES is = 0

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Ulimit check = Passed

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Array host connect type = Single hostname per node

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Master IP address [1] = 10.18.43.86

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Standby Master = Not Configured

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Number of primary segments = 1

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Total Database segments = 2

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Trusted shell = ssh

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Number segment hosts = 2

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Mirror port base = 7000

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Number of mirror segments = 1

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Mirroring config = ON

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Mirroring type = Group

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:----------------------------------------

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Greenplum Primary Segment Configuration

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:----------------------------------------

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-lgh2 6000 lgh2 /apps/data1/primary/gpseg0 2

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-lgh3 6000 lgh3 /apps/data1/primary/gpseg1 3

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:---------------------------------------

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Greenplum Mirror Segment Configuration

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:---------------------------------------

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-lgh3 7000 lgh3 /apps/data1/mirror/gpseg0 4

20210330:17:38:02:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-lgh2 7000 lgh2 /apps/data1/mirror/gpseg1 5

Continue with Greenplum creation Yy|Nn (default=N):

> y

20210330:17:38:05:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Building the Master instance database, please wait...

20210330:17:38:09:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Starting the Master in admin mode

20210330:17:38:10:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Commencing parallel build of primary segment instances

20210330:17:38:10:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

..

20210330:17:38:10:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

.........

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:------------------------------------------------

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Parallel process exit status

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:------------------------------------------------

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Total processes marked as completed = 2

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Total processes marked as killed = 0

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Total processes marked as failed = 0

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:------------------------------------------------

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Deleting distributed backout files

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Removing back out file

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-No errors generated from parallel processes

20210330:17:38:19:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Restarting the Greenplum instance in production mode

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Starting gpstop with args: -a -l /apps/gpadmin/gpAdminLogs -m -d /apps/data1/master/gpseg-1

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Gathering information and validating the environment...

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Obtaining Segment details from master...

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Commencing Master instance shutdown with mode='smart'

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Master segment instance directory=/apps/data1/master/gpseg-1

20210330:17:38:20:013281 gpstop:lgh1:gpadmin-[INFO]:-Stopping master segment and waiting for user connections to finish ...

server shutting down

20210330:17:38:21:013281 gpstop:lgh1:gpadmin-[INFO]:-Attempting forceful termination of any leftover master process

20210330:17:38:21:013281 gpstop:lgh1:gpadmin-[INFO]:-Terminating processes for segment /apps/data1/master/gpseg-1

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Starting gpstart with args: -a -l /apps/gpadmin/gpAdminLogs -d /apps/data1/master/gpseg-1

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Gathering information and validating the environment...

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Greenplum Catalog Version: '301908232'

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Starting Master instance in admin mode

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Obtaining Segment details from master...

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Setting new master era

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Master Started...

20210330:17:38:21:013307 gpstart:lgh1:gpadmin-[INFO]:-Shutting down master

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:-Commencing parallel segment instance startup, please wait...

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:-Process results...

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:- Successful segment starts = 2

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:- Failed segment starts = 0

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:- Skipped segment starts (segments are marked down in configuration) = 0

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:-Successfully started 2 of 2 segment instances

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:38:22:013307 gpstart:lgh1:gpadmin-[INFO]:-Starting Master instance lgh1 directory /apps/data1/master/gpseg-1

20210330:17:38:23:013307 gpstart:lgh1:gpadmin-[INFO]:-Command pg_ctl reports Master lgh1 instance active

20210330:17:38:23:013307 gpstart:lgh1:gpadmin-[INFO]:-Connecting to dbname='template1' connect_timeout=15

20210330:17:38:23:013307 gpstart:lgh1:gpadmin-[INFO]:-No standby master configured. skipping...

20210330:17:38:23:013307 gpstart:lgh1:gpadmin-[INFO]:-Database successfully started

20210330:17:38:23:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Completed restart of Greenplum instance in production mode

20210330:17:38:23:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Commencing parallel build of mirror segment instances

20210330:17:38:23:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

..

20210330:17:38:23:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

..

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:------------------------------------------------

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Parallel process exit status

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:------------------------------------------------

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Total processes marked as completed = 2

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Total processes marked as killed = 0

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Total processes marked as failed = 0

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:------------------------------------------------

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Scanning utility log file for any warning messages

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[WARN]:-*******************************************************

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[WARN]:-Scan of log file indicates that some warnings or errors

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[WARN]:-were generated during the array creation

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Please review contents of log file

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-/apps/gpadmin/gpAdminLogs/gpinitsystem_20210330.log

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-To determine level of criticality

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-These messages could be from a previous run of the utility

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-that was called today!

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[WARN]:-*******************************************************

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Greenplum Database instance successfully created

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-------------------------------------------------------

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-To complete the environment configuration, please

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-update gpadmin .bashrc file with the following

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-1. Ensure that the greenplum_path.sh file is sourced

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-2. Add "export MASTER_DATA_DIRECTORY=/apps/data1/master/gpseg-1"

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:- to access the Greenplum scripts for this instance:

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:- or, use -d /apps/data1/master/gpseg-1 option for the Greenplum scripts

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:- Example gpstate -d /apps/data1/master/gpseg-1

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Script log file = /apps/gpadmin/gpAdminLogs/gpinitsystem_20210330.log

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-To remove instance, run gpdeletesystem utility

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-To initialize a Standby Master Segment for this Greenplum instance

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Review options for gpinitstandby

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-------------------------------------------------------

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-The Master /apps/data1/master/gpseg-1/pg_hba.conf post gpinitsystem

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-has been configured to allow all hosts within this new

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-array to intercommunicate. Any hosts external to this

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-new array must be explicitly added to this file

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-Refer to the Greenplum Admin support guide which is

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-located in the /usr/local/greenplum-db-6.14.1/docs directory

20210330:17:38:26:011255 gpinitsystem:lgh1:gpadmin-[INFO]:-------------------------------------------------------


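That completes the installation. One thing worth explaining is how mirrors are placed: this setup uses the default, group mirroring. A different placement (such as spread mirroring) can be specified at initialization; it has somewhat stricter requirements and is a bit more complex. See //docs-cn.greenplum.org/v6/best_practices/ha.html

For how to choose the mirror placement at initialization time, and how to create the master standby during initialization, see the gpinitsystem reference: //docs-cn.greenplum.org/v6/utility_guide/admin_utilities/gpinitsystem.html#topic1

2.9 Check the cluster status

#gpstate # command reference: //docs-cn.greenplum.org/v6/utility_guide/admin_utilities/gpstate.html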

[gpadmin@lgh1 ~]$ gpstate

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-Starting gpstate with args:

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-Obtaining Segment details from master...

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-Gathering data from segments...

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-Greenplum instance status summary

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Master instance = Active

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Master standby = No master standby configured

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total segment instance count from metadata = 4

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Primary Segment Status

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total primary segments = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment valid (at master) = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment failures (at master) = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Mirror Segment Status

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segments = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment valid (at master) = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment failures (at master) = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as primary segments = 0

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as mirror segments = 2

20210330:17:41:32:013986 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------
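When this check runs from a script, the counters can be tested automatically. A minimal sketch; gpstate_healthy is a hypothetical helper that scans gpstate's summary lines like the ones above and fails if any "failures", "missing" or "acting as primary" count is not 0.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read gpstate output on stdin and exit non-zero if any
# "failures (at master)", "missing" or "acting as primary" counter is not 0.
gpstate_healthy() {
  awk -F'= ' '
    /failures \(at master\)|missing|acting as primary/ { if ($NF + 0 != 0) bad = 1 }
    END { exit (bad ? 1 : 0) }
  '
}
```

Usage: `gpstate 2>&1 | gpstate_healthy && echo 'cluster looks healthy'`. For details, `gpstate -e` lists segments with potential issues and `gpstate -m` shows the mirror status.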

2.10 Add a master standby

Segment mirrors and the master standby can both be created during initialization, or added manually after installation. For adding mirrors manually, see //docs-cn.greenplum.org/v6/utility_guide/admin_utilities/gpaddmirrors.html

The steps for adding the master standby follow (reference: //docs-cn.greenplum.org/v6/utility_guide/admin_utilities/gpinitstandby.html).

Pick a host for the standby (lgh2 below) and create the directory on it:

mkdir -p /apps/data1/master

chown -R gpadmin:gpadmin /apps/data1/master

Create the master standby from the master host: gpinitstandby -s lgh2

[gpadmin@lgh1 ~]$ gpinitstandby -s lgh2

20210330:17:58:39:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Validating environment and parameters for standby initialization...

20210330:17:58:39:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Checking for data directory /apps/data1/master/gpseg-1 on lgh2

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:------------------------------------------------------

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum standby master initialization parameters

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:------------------------------------------------------

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum master hostname = lgh1

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum master data directory = /apps/data1/master/gpseg-1

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum master port = 5432

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum standby master hostname = lgh2

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum standby master port = 5432

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum standby master data directory = /apps/data1/master/gpseg-1

20210330:17:58:40:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Greenplum update system catalog = On

Do you want to continue with standby master initialization? Yy|Nn (default=N):

> y

20210330:17:58:41:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Syncing Greenplum Database extensions to standby

20210330:17:58:42:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-The packages on lgh2 are consistent.

20210330:17:58:42:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Adding standby master to catalog...

20210330:17:58:42:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Database catalog updated successfully.

20210330:17:58:42:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Updating pg_hba.conf file...

20210330:17:58:42:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-pg_hba.conf files updated successfully.

20210330:17:58:43:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Starting standby master

20210330:17:58:43:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Checking if standby master is running on host: lgh2 in directory: /apps/data1/master/gpseg-1

20210330:17:58:44:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Cleaning up pg_hba.conf backup files...

20210330:17:58:44:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Backup files of pg_hba.conf cleaned up successfully.

20210330:17:58:44:014332 gpinitstandby:lgh1:gpadmin-[INFO]:-Successfully created standby master on lgh2

三、GPCC安装

下载地址://network.pivotal.io/products/gpdb-command-center/#/releases/829440

参考文档://gpcc.docs.pivotal.io/640/welcome.html

3.1、确认安装环境

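Database status: confirm that the Greenplum database is installed and running: gpstate

Environment variable: confirm that MASTER_DATA_DIRECTORY is set and in effect: echo $MASTER_DATA_DIRECTORY

Permissions on /usr/local/: confirm that gpadmin can write to /usr/local/: ls -ltr /usr

Port 28080: the default web client port; confirm that it is not in use: netstat -aptn | grep 28080

Port 8899: the RPC port; confirm that it is not in use: netstat -aptn | grep 8899

apr-util package: confirm that the apr-util dependency is installed: rpm -q apr-util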
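The checks above can be bundled into one pass. This is a minimal sketch, and several parts of it are assumptions:

- `gpcc_preflight` and `port_in_use` are hypothetical helper names.
- gpstate is assumed to exit non-zero when it cannot reach the cluster.
- netstat comes from the net-tools package.

Unlike `grep 28080`, the port check only matches the port in the local-address column.

```shell
# Hypothetical helpers for the GPCC pre-install checks. Run as gpadmin on the
# master. Assumes gpstate exits non-zero when the cluster is unreachable.
# Note: if netstat is not installed, every port is reported as free.

port_in_use() {
  # Succeed if ":PORT" ends the local-address column ($4) of netstat -aptn
  netstat -aptn 2>/dev/null | awk -v p=":$1" '$4 ~ (p "$") { found = 1 } END { exit !found }'
}

gpcc_preflight() {
  local dir="${1:-/usr/local}" rc=0 port

  if gpstate >/dev/null 2>&1; then echo "OK   database is running"
  else echo "FAIL gpstate failed (is the database running?)"; rc=1; fi

  if [ -n "${MASTER_DATA_DIRECTORY:-}" ]; then echo "OK   MASTER_DATA_DIRECTORY=$MASTER_DATA_DIRECTORY"
  else echo "FAIL MASTER_DATA_DIRECTORY is not set"; rc=1; fi

  if [ -w "$dir" ]; then echo "OK   $dir is writable by $(id -un)"
  else echo "FAIL $dir is not writable by $(id -un)"; rc=1; fi

  for port in 28080 8899; do
    if port_in_use "$port"; then echo "FAIL port $port is in use"; rc=1
    else echo "OK   port $port is free"; fi
  done

  if rpm -q apr-util >/dev/null 2>&1; then echo "OK   apr-util is installed"
  else echo "FAIL apr-util is not installed"; rc=1; fi

  return "$rc"
}
```

Running `gpcc_preflight` with no argument checks /usr/local. The function returns non-zero if any check fails.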

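By default, GPCC is installed under /usr/local, but gpadmin has no write permission there. One option is to create a directory, give it to gpadmin, and specify that directory during installation. I took a lazier route, shown below. Note: do the same on every host. With this approach, if other users own directories under /usr/local, their ownership must also be restored afterwards.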

chown -R gpadmin:gpadmin /usr/local

After the installation, run the following:

chown -R root:root /usr/local

chown -R gpadmin:gpadmin /usr/local/greenplum*
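The cleaner alternative mentioned above is a dedicated directory owned by gpadmin, which you then specify during installation. This avoids changing ownership of all of /usr/local. The sketch below is minimal; `prepare_install_dir` and the example path `/usr/local/gpcc` are hypothetical. Paths used later, such as `/usr/local/greenplum-cc/gpcc_path.sh`, would change to match the directory you choose.

```shell
# Run as root on every host. Only the given directory changes owner;
# the rest of /usr/local stays owned by root.
prepare_install_dir() {
  local dir="${1:?install directory}" owner="${2:-gpadmin:gpadmin}"
  mkdir -p "$dir" || return 1
  chown "$owner" "$dir"
}
```

Example: `prepare_install_dir /usr/local/gpcc gpadmin:gpadmin`.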

3.2 Installation

Official installation guide: //gpcc.docs.pivotal.io/640/topics/install.html

There are four installation methods in total; here I use the interactive installation.

Unzip:

unzip greenplum-cc-web-6.4.0-gp6-rhel7-x86_64.zip

ln -s greenplum-cc-web-6.4.0-gp6-rhel7-x86_64 greenplum-cc

chown -R gpadmin:gpadmin greenplum-cc*

Switch to the gpadmin user and install with -W:

su - gpadmin

cd greenplum-cc

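./gpccinstall-6.4.0 -W # -W prompts for the gpmon password

After entering the password, keep pressing the space bar until you reach the end of the license agreement, then accept it.

3.3 Configure environment variables

vim ~/.bashrc # add the following line, then run source ~/.bashrc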

source /usr/local/greenplum-cc/gpcc_path.sh
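Editing ~/.bashrc by hand tends to leave duplicate lines when the setup is repeated. A minimal sketch of an idempotent append follows; the helper name `add_line_once` is hypothetical.

```shell
# Append a line to a file only if that exact line is not already present,
# so re-running the setup does not duplicate entries in ~/.bashrc.
add_line_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example: `add_line_once 'source /usr/local/greenplum-cc/gpcc_path.sh' ~/.bashrc`, followed by `source ~/.bashrc`.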

3.4 Verify the installation

Then test it with: gpcc status

Next, look for the password file: ll -a ~ # no hidden .pgpass file was found, so create one manually

vim ~/.pgpass

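# add the following line (fields are host:port:database:username:password); the last field is the password entered when installing GPCC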

*:5432:gpperfmon:gpmon:1qaz@WSX

Then set its owner and permissions:

chown gpadmin:gpadmin ~/.pgpass

chmod 600 ~/.pgpass
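The steps above can be combined so that the file never exists with loose permissions. This matters because libpq ignores a .pgpass file that is readable by group or others. A minimal sketch follows; `write_pgpass` is a hypothetical helper name.

```shell
# Add one .pgpass entry (host:port:database:username:password) and keep the
# file at mode 600; libpq ignores a .pgpass that group/others can access.
write_pgpass() {
  local file="${1:?pgpass file}" entry="${2:?entry}"
  ( umask 077; touch "$file" ) || return 1   # create it private if missing
  chmod 600 "$file" || return 1
  grep -qxF -- "$entry" "$file" || printf '%s\n' "$entry" >> "$file"
}
```

Usage as gpadmin: `write_pgpass ~/.pgpass '*:5432:gpperfmon:gpmon:<gpmon password>'`, substituting the password chosen during installation.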

You can also change the password later; see: //gpcc.docs.pivotal.io/640/topics/gpmon.html

Note: the .pgpass file must also be copied to gpadmin's home directory on the standby master host.
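A minimal sketch of that copy follows. It assumes:

- passwordless SSH for gpadmin between the cluster hosts, which is normally already in place after the Greenplum install;
- the standby is lgh2, as in the gpinitstandby log above.

`copy_pgpass_to_standby` is a hypothetical name.

```shell
# Copy gpadmin's .pgpass from the master to the standby master host.
# Remote paths without a leading slash are relative to the remote home dir.
copy_pgpass_to_standby() {
  local host="${1:?standby host}"
  scp -p ~/.pgpass "$host:.pgpass" && ssh "$host" 'chmod 600 .pgpass'
}
```

Run it as gpadmin on the master, for example `copy_pgpass_to_standby lgh2`.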

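Then check the status with gpcc status and start GPCC with gpcc start.

Then open the URL shown at the end of the output and log in as the gpmon user with its password.

At this point, GPCC is installed.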

4. PXF initialization and basic configuration

4.1 Confirm the installation environment

Use gpstate to confirm that Greenplum is running

Confirm that Java is installed

Confirm that gpadmin can create files under /usr/local (another directory can also be used)
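The three checks above can be scripted. This is a minimal sketch; `pxf_preflight` is a hypothetical helper, and gpstate is again assumed to exit non-zero when the cluster is unreachable. PXF runs Java on the segment hosts, so the Java check should also be repeated on those hosts, for example through gpssh.

```shell
# Hypothetical PXF pre-install checks. Run as gpadmin on the master.
# Assumes gpstate exits non-zero when the cluster cannot be reached.
pxf_preflight() {
  local dir="${1:-/usr/local}" rc=0

  if gpstate >/dev/null 2>&1; then echo "OK   Greenplum is running"
  else echo "FAIL gpstate failed (is Greenplum running?)"; rc=1; fi

  # Only checks this host; repeat on every segment host
  if command -v java >/dev/null 2>&1; then echo "OK   java found: $(command -v java)"
  else echo "FAIL java not found in PATH"; rc=1; fi

  if [ -w "$dir" ]; then echo "OK   $dir is writable by $(id -un)"
  else echo "FAIL $dir is not writable by $(id -un)"; rc=1; fi

  return "$rc"
}
```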

Here I again use the same shortcut to give gpadmin permission to create files under /usr/local:

chown -R gpadmin:gpadmin /usr/local

After the installation, run the following:

chown -R root:root /usr/local

chown -R gpadmin:gpadmin /usr/local/greenplum*

4.2 Initialization

Reference: //docs-cn.greenplum.org/v6/pxf/init_pxf.html

PXF_CONF=/usr/local/greenplum-pxf $GPHOME/pxf/bin/pxf cluster init

[gpadmin@lgh1 ~]$ PXF_CONF=/usr/local/greenplum-pxf $GPHOME/pxf/bin/pxf cluster init

Initializing PXF on master host and 2 segment hosts...

PXF initialized successfully on 3 out of 3 hosts

4.3 Configure environment variables

vim ~/.bashrc, add the following lines, then run source ~/.bashrc

export PXF_CONF=/usr/local/greenplum-pxf

export PXF_HOME=/usr/local/greenplum-db/pxf/

export PATH=$PXF_HOME/bin:$PATH
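Here is a minimal sketch that writes these three lines once, wrapped in marker comments so that re-running it adds nothing. The helper name and the marker text are hypothetical. PXF_HOME here assumes /usr/local/greenplum-db is the GPHOME used by `$GPHOME/pxf` in 4.2.

```shell
# Append the PXF variables to a shell rc file once. The quoted heredoc keeps
# $PXF_HOME and $PATH literal so they are expanded when the rc file is sourced.
append_pxf_env() {
  local rc="${1:-$HOME/.bashrc}"
  grep -qx '# BEGIN pxf env' "$rc" 2>/dev/null && return 0
  cat >> "$rc" <<'EOF'
# BEGIN pxf env
export PXF_CONF=/usr/local/greenplum-pxf
export PXF_HOME=/usr/local/greenplum-db/pxf/
export PATH=$PXF_HOME/bin:$PATH
# END pxf env
EOF
}
```

Run `append_pxf_env` as gpadmin, then `source ~/.bashrc`.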

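4.4 Check the installation

PXF was initialized on all three hosts, but the PXF service runs only on the segment hosts, so the commands below report on just two hosts. The first status check fails only because PXF has not been started yet.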

[gpadmin@lgh1 ~]$ pxf cluster status

Checking status of PXF servers on 2 segment hosts...

ERROR: PXF is not running on 2 out of 2 hosts

lgh2 ==> Checking if tomcat is up and running...

ERROR: PXF is down - tomcat is not running...

lgh3 ==> Checking if tomcat is up and running...

ERROR: PXF is down - tomcat is not running...

[gpadmin@lgh1 ~]$ pxf cluster start

Starting PXF on 2 segment hosts...

PXF started successfully on 2 out of 2 hosts

[gpadmin@lgh1 ~]$ pxf cluster status

Checking status of PXF servers on 2 segment hosts...

PXF is running on 2 out of 2 hosts

For details of the pxf command, see: //docs-cn.greenplum.org/v6/pxf/ref/pxf-ref.html

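4.5 Configure the Hadoop and Hive connectors

Official reference: //docs-cn.greenplum.org/v6/pxf/client_instcfg.html

The official guide is rather verbose. In short, copy the relevant configuration files over; the result is shown below. I use Hadoop and Hive as the default connector, so all the configuration files go under the default server directory:

[gpadmin@lgh1 ~]$ cd /usr/local/greenplum-pxf/servers/default/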

[gpadmin@lgh1 default]$ ll

total 40

-rw-r--r-- 1 gpadmin gpadmin 4421 Mar 19 17:03 core-site.xml

-rw-r--r-- 1 gpadmin gpadmin 2848 Mar 19 17:03 hdfs-site.xml

-rw-r--r-- 1 gpadmin gpadmin 5524 Mar 23 14:58 hive-site.xml

-rw-r--r-- 1 gpadmin gpadmin 5404 Mar 19 17:03 mapred-site.xml

-rw-r--r-- 1 gpadmin gpadmin 1460 Mar 19 17:03 pxf-site.xml

-rw-r--r-- 1 gpadmin gpadmin 5412 Mar 19 17:03 yarn-site.xml

The core-site.xml, hdfs-site.xml, hive-site.xml, mapred-site.xml and yarn-site.xml files above are Hadoop and Hive configuration files and are copied without modification. Because this is a CDH cluster, they can be found in /etc/hadoop/conf and /etc/hive/conf.
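Here is a minimal sketch of that copy step. It assumes the CDH client configuration is readable on the Greenplum master, either deployed there or copied over from a Hadoop node first. `copy_hadoop_hive_conf` is a hypothetical helper, and its source directories default to the paths named above.

```shell
# Copy the Hadoop and Hive client configs into a PXF server directory.
# Args: destination, Hadoop conf dir, Hive conf dir (defaults as in the text).
copy_hadoop_hive_conf() {
  local dest="${1:-/usr/local/greenplum-pxf/servers/default}"
  local hadoop_conf="${2:-/etc/hadoop/conf}"
  local hive_conf="${3:-/etc/hive/conf}"
  local f

  mkdir -p "$dest" || return 1
  for f in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
    cp -p "$hadoop_conf/$f" "$dest/" || return 1   # stop at the first missing file
  done
  cp -p "$hive_conf/hive-site.xml" "$dest/"
}
```

Run it as gpadmin on the master. The files reach the segment hosts later through `pxf cluster sync`.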

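pxf-site.xml mainly controls which identity PXF uses when accessing Hadoop. Here impersonation is disabled and pxf.service.user.name is set to gpadmin, so PXF accesses Hadoop as gpadmin, and gpadmin needs permission on the relevant Hadoop directories. This is the simplest approach, though others exist. The file contents are: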

[gpadmin@lgh1 default]$ cat pxf-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property>

<name>pxf.service.kerberos.principal</name>

<value>gpadmin/_HOST@EXAMPLE.COM</value>

<description>Kerberos principal pxf service should use. _HOST is replaced automatically with hostnames FQDN</description>

</property>

<property>

<name>pxf.service.kerberos.keytab</name>

<value>${pxf.conf}/keytabs/pxf.service.keytab</value>

<description>Kerberos path to keytab file owned by pxf service with permissions 0400</description>

</property>

<property>

<name>pxf.service.user.impersonation</name>

<value>false</value>

<description>End-user identity impersonation, set to true to enable, false to disable</description>

</property>

<property>

<name>pxf.service.user.name</name>

<value>gpadmin</value>

<description>

The pxf.service.user.name property is used to specify the login

identity when connecting to a remote system, typically an unsecured

Hadoop cluster. By default, it is set to the user that started the

PXF process. If PXF impersonation feature is used, this is the

identity that needs to be granted Hadoop proxy user privileges.

Change it ONLY if you would like to use another identity to login to

an unsecured Hadoop cluster

</description>

</property>

</configuration>

Steps to produce the file above:

1. Copy the template /usr/local/greenplum-pxf/templates/pxf-site.xml to the target server directory /usr/local/greenplum-pxf/servers/default

2. Modify the pxf.service.user.name and pxf.service.user.impersonation settings
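After step 2, a quick way to confirm the two values is to read them back. The sketch below is text-based, not a real XML parser. It assumes each `<name>` and `<value>` element sits on a single line, as in the file shown above. `get_prop` is a hypothetical helper name.

```shell
# Print the <value> that follows <name>NAME</name> in a Hadoop-style
# *-site.xml file (value may be on the same line or a later one).
get_prop() {
  local file="$1" name="$2"
  awk -v n="<name>$name</name>" '
    index($0, n) { want = 1 }
    want && match($0, /<value>[^<]*<\/value>/) {
      print substr($0, RSTART + 7, RLENGTH - 15)   # strip <value> and </value>
      exit
    }' "$file"
}
```

For the configuration shown above, `get_prop /usr/local/greenplum-pxf/servers/default/pxf-site.xml pxf.service.user.impersonation` should print `false`, and the same call with `pxf.service.user.name` should print `gpadmin`.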

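Note: you may also need to copy the host entries of the entire Hadoop cluster into the hosts file of every Greenplum host, so that the Hadoop hostnames resolve.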
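Here is a minimal sketch of merging those entries. It assumes the Hadoop entries have already been saved to a text file you prepare, for example one copied from a Hadoop node's /etc/hosts. `merge_hosts` and that input file are hypothetical. Lines count as duplicates only when they match exactly.

```shell
# Append Hadoop host entries from SRC to DEST (default /etc/hosts), skipping
# comments, blank lines and lines already present. Run as root on every
# Greenplum host.
merge_hosts() {
  local src="${1:?file with Hadoop host entries}" dest="${2:-/etc/hosts}"
  grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$src" \
    | grep -vxF -f "$dest" >> "$dest"
  return 0   # grep exits 1 when there is nothing new to add; not an error
}
```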

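There is also an approach based on user impersonation with a Hadoop proxy user; it requires restarting the Hadoop cluster. See: //docs-cn.greenplum.org/v6/pxf/pxfuserimpers.html

4.6 Sync and restart PXF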

[gpadmin@lgh1 ~]$ pxf cluster stop

Stopping PXF on 2 segment hosts...

PXF stopped successfully on 2 out of 2 hosts

[gpadmin@lgh1 ~]$ pxf cluster sync

Syncing PXF configuration files from master host to 2 segment hosts...

PXF configs synced successfully on 2 out of 2 hosts

[gpadmin@lgh1 ~]$ pxf cluster start

Starting PXF on 2 segment hosts...

PXF started successfully on 2 out of 2 hosts
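The stop, sync and start sequence above can be wrapped so that it halts at the first failing step. This is a minimal sketch; `pxf_resync` is a hypothetical name, and it uses only the `pxf cluster` subcommands shown in this section.

```shell
# Run as gpadmin on the master after changing anything under $PXF_CONF.
# Each step runs only if the previous one succeeded.
pxf_resync() {
  pxf cluster stop && pxf cluster sync && pxf cluster start
}
```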

I won't run a test here because it is tedious and I have done it before. For testing, see: //docs-cn.greenplum.org/v6/pxf/access_hdfs.html