Distributed Storage Systems: Scaling MDS in a Ceph Cluster

- October 10, 2022

- Notes

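- ceph, CephFS, MDS scaling, MDS failover mechanism, Multi MDS, rank, metadata partitioning, common metadata partitioning schemes, manually pinning directory subtrees to ranks, managing ranks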

In the previous post we covered how to use CephFS (see //www.cnblogs.com/qiuhom-1874/p/16758866.html). Today we look at how to scale out the MDS component.

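The MDS is the daemon that runs to provide CephFS; it manages the file system's metadata. When a client accesses data on CephFS, it first contacts the MDS for the metadata; the MDS reads it from the metadata pool and returns it to the client. Only the MDS operates on the metadata pool, so the MDS is the sole entry point to CephFS metadata (file data itself is read and written by clients directly on the OSDs). That raises a problem: if the cluster runs only one MDS and many clients access CephFS, the MDS inevitably becomes a bottleneck, so to improve CephFS performance we need to provide several MDS daemons.

How should the MDS scale? As mentioned earlier, the MDS manages the file system metadata and stores it in a designated pool in the RADOS cluster, which turns the MDS from stateful into stateless; for the MDS, then, scaling out just means running a few more processes. Because of how file system metadata works, however, it cannot be scaled the way other stateless applications are. Suppose the cluster has two MDS daemons operating on the same file in the same pool at the same time, one deleting the file and the other modifying it: when the changes are merged, the file system is corrupted. So if two MDS daemons may operate on the same file, they have to synchronize to keep the data consistent, and then how is that different from replication? Read requests could be spread across several MDS daemons, but what about writes? If a client writes to A, what does B do? B can only sync from A, or A has to write through to B. Either way write requests are not spread out, so as the number of clients grows, the bottleneck remains.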

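To spread a file system's read and write load, the distributed file system world offers a solution that partitions the namespace and manages each partition separately: the file system's root tree and its hot subtrees are placed on different metadata servers to balance the load, which makes linear scaling of metadata storage possible. Simply put, each MDS is responsible for the metadata of only one subtree (subdirectory).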

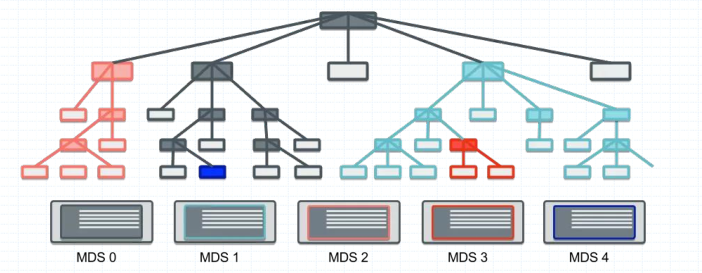

Metadata partitioning

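Note: as shown above, a file system can be split into multiple subtrees, with each MDS responsible for only one of them, which spreads the metadata read and write load.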

Common metadata partitioning schemes

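1. Static subtree partitioning: the administrator manually assigns a given subtree to a specific metadata server. For example, mounting an NFS share on a directory is a form of static subtree partitioning: by attaching a subdirectory to another partition, the load on the current file system is reduced.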

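2. Static hash partitioning: there are several directories, and the directory a file is stored in is not chosen by the administrator; instead it is determined by hashing the file name (for example with consistent hashing, or hashing and then taking the modulo), and the file is stored wherever the hash lands. This reduces the load that the corresponding subdirectory places on the current file system.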

3. Lazy hybrid partitioning: combines the static hash approach with the traditional file system approach.

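4. Dynamic subtree partitioning: subtrees are adjusted dynamically according to the load on the file system. This is how CephFS implements multiple active MDS daemons. In Ceph, multi-active MDS mode means CephFS splits the namespace of the whole file system into multiple subtrees and assigns them to multiple MDS daemons, and the load-balancing strategies for writes and reads are subtree splitting and directory replication respectively: a directory with a heavy write load is split into several subtrees to spread the load, and a directory with a heavy read load is replicated to balance it. Subtree partitioning and migration decisions are made in periodic rounds: each MDS makes its own independent migration decision every 10 seconds, no MDS has a consistent view of the whole namespace, and the MDS cluster has no global scheduler making unified decisions. Instead, the MDS daemons exchange heartbeat messages (HeartBeat, HB) and load status to decide whether to migrate, how to partition the namespace, and whether a directory needs to be split into subtrees. Administrators can also configure how CephFS computes load and thereby influence the MDS balancing decisions; currently CephFS supports decisions based on CPU load, file system load, or a mix of the two.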
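To make the contrast between items 1 and 2 concrete, here is a toy Python sketch. The function names, the server names and the choice of SHA-256 followed by a modulo are illustrative assumptions, not anything CephFS does (CephFS uses item 4): static subtree placement looks up the deepest administrator-assigned root above a path, while static hash placement derives the server from the file name alone.

```python
import hashlib
from typing import Dict, List


def static_subtree_owner(path: str, assignments: Dict[str, str]) -> str:
    """Static subtree partitioning (toy): the administrator maps subtree roots
    to servers, and a path belongs to the deepest assigned root above it.
    `assignments` must contain "/" so that every path has an owner."""
    best_root, owner = "", assignments["/"]
    for root, server in assignments.items():
        prefix = root.rstrip("/") + "/"
        inside = path == root or path.startswith(prefix)
        if inside and len(root) > len(best_root):
            best_root, owner = root, server
    return owner


def static_hash_owner(filename: str, servers: List[str]) -> str:
    """Static hash partitioning (toy): hash the file name and take it modulo
    the number of servers; nobody chooses the placement by hand."""
    digest = hashlib.sha256(filename.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


# Hypothetical layout: root on mds-a, /home on mds-b, /home/big on mds-c.
layout = {"/": "mds-a", "/home": "mds-b", "/home/big": "mds-c"}
print(static_subtree_owner("/home/big/f", layout))  # -> mds-c
print(static_hash_owner("report.txt", ["mds-a", "mds-b", "mds-c"]))
```

One design consequence worth noting: with plain hash-then-modulo, changing the number of servers remaps most names, which is why consistent hashing is often preferred for this scheme.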

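Dynamic subtree partitioning relies on shared storage to migrate hot workloads between MDS daemons, so Ceph stores MDS metadata in a dedicated pool in the RADOS cluster behind it, and that pool can be shared by multiple MDS daemons. The MDS does not access metadata directly in RADOS for every operation; instead it keeps an in-memory cache of hot metadata, and metadata stays in memory until the related journal entries expire.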

CephFS uses a metadata journal for fault tolerance

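Metadata journal entries are streamed into a journal file in the CephFS metadata pool, similar to how LFS (Log-Structured File System) and WAFL (Write Anywhere File Layout) work. The CephFS metadata journal can grow without bound, so journal entries are always written to RADOS sequentially, and the daemon is additionally able to trim redundant or irrelevant entries.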
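The append-then-trim idea can be illustrated with a deliberately simplified Python model. It is a sketch under stated assumptions, not the format of the real MDS journal (which is stored as RADOS objects and trimmed in segments); `ToyJournal` is a hypothetical name and its `flushed_upto` parameter is a hypothetical stand-in for "changes already written back to the metadata pool".

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class JournalEntry:
    seq: int
    op: str  # free-form description such as "mkdir /a" in this toy model


@dataclass
class ToyJournal:
    """Append-only metadata log: writes only ever go to the tail, and old
    entries are trimmed from the head once they are no longer needed."""
    entries: List[JournalEntry] = field(default_factory=list)
    next_seq: int = 0

    def append(self, op: str) -> int:
        # Sequential write at the tail; returns the entry's sequence number.
        entry = JournalEntry(self.next_seq, op)
        self.entries.append(entry)
        self.next_seq += 1
        return entry.seq

    def trim(self, flushed_upto: int) -> int:
        """Drop entries already persisted elsewhere (seq < flushed_upto).
        Returns how many entries were removed."""
        before = len(self.entries)
        self.entries = [e for e in self.entries if e.seq >= flushed_upto]
        return before - len(self.entries)

    def replay(self) -> List[str]:
        # What a recovering daemon would re-apply, in order.
        return [e.op for e in self.entries]
```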

Multi MDS

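Every CephFS has a human-readable file system name and an identifier called the FSCID, and by default each CephFS has only one Active MDS daemon. The maximum number of MDS daemons that can be Active in an MDS cluster is set by the max_mds parameter, which controls the number of available ranks; its default is 1. A rank is a number identifying a slot that an Active MDS daemon can occupy on the CephFS, ranging from 0 to max_mds-1. Each rank corresponds to one Active ceph-mds daemon that manages the metadata of directory subtrees of the CephFS hierarchy; when max_mds is 1, only rank 0 is available. A newly started ceph-mds daemon holds no rank; the MON assigns it one as needed. A ceph-mds can hold only one rank at a time and releases it when the daemon terminates, so once assigned, a rank is exclusive. A rank is always in one of three states:
- Up: the rank is held by a ceph-mds daemon;
- Failed: the rank is not held by any ceph-mds daemon;
- Damaged: the rank is damaged and its metadata is corrupted or lost. A Damaged rank cannot be assigned to any MDS daemon until the administrator fixes the problem and runs the "ceph mds repaired" command on it.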
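The rank rules above (range 0 to max_mds-1, one rank per daemon, exclusive ownership, Damaged ranks blocked until repaired) can be summarized in a small Python model. `ToyMDSMap`, `RankState` and their methods are hypothetical names for illustration; the real MDSMap kept by the MONs tracks far more state.

```python
from enum import Enum
from typing import Dict, Optional


class RankState(Enum):
    UP = "up"            # held by some ceph-mds daemon
    FAILED = "failed"    # not held by any daemon
    DAMAGED = "damaged"  # metadata broken or lost; needs "ceph mds repaired"


class ToyMDSMap:
    """Toy model of the rank rules described above (not the real MDSMap)."""

    def __init__(self, max_mds: int = 1) -> None:
        self.max_mds = max_mds
        self.holder: Dict[int, Optional[str]] = {}
        self.state: Dict[int, RankState] = {}

    def valid_ranks(self) -> range:
        return range(self.max_mds)  # ranks 0 .. max_mds - 1

    def assign(self, rank: int, daemon: str) -> bool:
        if rank not in self.valid_ranks():
            return False
        if self.state.get(rank) in (RankState.UP, RankState.DAMAGED):
            return False  # ranks are exclusive; damaged ranks stay blocked
        if daemon in self.holder.values():
            return False  # a daemon holds at most one rank
        self.holder[rank] = daemon
        self.state[rank] = RankState.UP
        return True

    def fail(self, rank: int) -> None:
        self.holder[rank] = None
        self.state[rank] = RankState.FAILED

    def mark_damaged(self, rank: int) -> None:
        self.holder[rank] = None
        self.state[rank] = RankState.DAMAGED

    def repaired(self, rank: int) -> None:
        # Models the effect of "ceph mds repaired": the rank is assignable again.
        if self.state.get(rank) is RankState.DAMAGED:
            self.state[rank] = RankState.FAILED
```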

Check the MDS status of the Ceph cluster

[root@ceph-admin ~]# ceph mds stat

cephfs-1/1/1 up {0=ceph-mon02=up:active}

[root@ceph-admin ~]#

Note: the cluster currently has one MDS, running on node ceph-mon02 in the up:active state.
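When scripting a check like this, the one-line output of `ceph mds stat` can be parsed. The sketch below is written only against the two Mimic-era lines shown in this post; `parse_mds_stat` is a hypothetical helper, the slash-separated counters after the file system name are skipped rather than interpreted, and other releases format this line differently, so for real scripts the CLI's `--format json` output is the safer input.

```python
import re
from typing import Dict, Tuple


def parse_mds_stat(line: str) -> Tuple[str, Dict[int, Tuple[str, str]], int]:
    """Parse a `ceph mds stat` line such as
    "cephfs-1/1/1 up {0=ceph-mon02=up:active}, 2 up:standby".

    Returns (fs_name, {rank: (daemon, state)}, number_of_plain_standbys).
    """
    head = re.match(r"^(?P<fs>\S+)-\d+/\d+/\d+ up", line)
    if head is None:
        raise ValueError(f"unrecognised mds stat line: {line!r}")
    # Each active rank appears as "<rank>=<daemon>=<state>" inside the braces.
    ranks = {
        int(rank): (daemon, state)
        for rank, daemon, state in re.findall(r"(\d+)=([^=,{}\s]+)=(up:[a-z-]+)", line)
    }
    # "N up:standby" (but not "up:standby-replay") counts idle standbys.
    standby = re.search(r"(\d+) up:standby(?![-\w])", line)
    return head.group("fs"), ranks, int(standby.group(1)) if standby else 0
```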

Deploy more MDS daemons

[root@ceph-admin ~]# ceph-deploy mds create ceph-mon01 ceph-mon03

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create ceph-mon01 ceph-mon03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f9478f34830>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mds at 0x7f947918d050>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] mds : [('ceph-mon01', 'ceph-mon01'), ('ceph-mon03', 'ceph-mon03')]

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy][ERROR ] ConfigError: Cannot load config: [Errno 2] No such file or directory: 'ceph.conf'; has `ceph-deploy new` been run in this directory?

[root@ceph-admin ~]# su - cephadm

Last login: Thu Sep 29 23:09:04 CST 2022 on pts/0

[cephadm@ceph-admin ~]$ ls

cephadm@ceph-mgr01 cephadm@ceph-mgr02 cephadm@ceph-mon01 cephadm@ceph-mon02 cephadm@ceph-mon03 ceph-cluster

[cephadm@ceph-admin ~]$ cd ceph-cluster/

[cephadm@ceph-admin ceph-cluster]$ ls

ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log

ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mds create ceph-mon01 ceph-mon03

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mds create ceph-mon01 ceph-mon03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2c575ba7e8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mds at 0x7f2c57813050>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] mds : [('ceph-mon01', 'ceph-mon01'), ('ceph-mon03', 'ceph-mon03')]

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts ceph-mon01:ceph-mon01 ceph-mon03:ceph-mon03

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mds][DEBUG ] remote host will use systemd

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to ceph-mon01

[ceph-mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.mds][ERROR ] RuntimeError: config file /etc/ceph/ceph.conf exists with different content; use --overwrite-conf to overwrite

[ceph-mon03][DEBUG ] connection detected need for sudo

[ceph-mon03][DEBUG ] connected to host: ceph-mon03

[ceph-mon03][DEBUG ] detect platform information from remote host

[ceph-mon03][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mds][DEBUG ] remote host will use systemd

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to ceph-mon03

[ceph-mon03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mon03][WARNIN] mds keyring does not exist yet, creating one

[ceph-mon03][DEBUG ] create a keyring file

[ceph-mon03][DEBUG ] create path if it doesn't exist

[ceph-mon03][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.ceph-mon03 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-ceph-mon03/keyring

[ceph-mon03][INFO ] Running command: sudo systemctl enable ceph-mds@ceph-mon03

[ceph-mon03][WARNIN] Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@ceph-mon03.service to /usr/lib/systemd/system/ceph-mds@.service.

[ceph-mon03][INFO ] Running command: sudo systemctl start ceph-mds@ceph-mon03

[ceph-mon03][INFO ] Running command: sudo systemctl enable ceph.target

[ceph_deploy][ERROR ] GenericError: Failed to create 1 MDSs

[cephadm@ceph-admin ceph-cluster]$

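Note: two errors occurred here. The first is that ceph.conf could not be found, because ceph-deploy reads ceph.conf from the current working directory; the fix is to switch to the cephadm user and run ceph-deploy mds create from its ceph-cluster directory. The second says that the config file on the remote host differs from our local one; the fix is either to push the local config file to every cluster host, or to pull the config file from a cluster host to the local directory and then redistribute it, and only then deploy the MDS again.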

Compare the local config file with the one on a remote cluster host

[cephadm@ceph-admin ceph-cluster]$ cat /etc/ceph/ceph.conf
[global]
fsid = 7fd4a619-9767-4b46-9cee-78b9dfe88f34
mon_initial_members = ceph-mon01
mon_host = 192.168.0.71
public_network = 192.168.0.0/24
cluster_network = 172.16.30.0/24
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
[cephadm@ceph-admin ceph-cluster]$ ssh ceph-mon01 'cat /etc/ceph/ceph.conf'
[global]
fsid = 7fd4a619-9767-4b46-9cee-78b9dfe88f34
mon_initial_members = ceph-mon01
mon_host = 192.168.0.71
public_network = 192.168.0.0/24
cluster_network = 172.16.30.0/24
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
[client]
rgw_frontends = "civetweb port=8080"
[cephadm@ceph-admin ceph-cluster]$

Note: the config file on ceph-mon01 has an extra [client] section.
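The same comparison can be done programmatically, which helps once several hosts have drifted. This Python sketch uses the standard `configparser` module; `conf_diff` is a hypothetical helper name. It assumes the files parse as plain INI. Ceph treats `mon host` and `mon_host` as the same option while `configparser` does not, so option spelling should be consistent before diffing.

```python
import configparser
from typing import Dict, Set, Tuple


def conf_diff(local_text: str, remote_text: str) -> Tuple[Set[str], Set[str], Dict[str, Set[str]]]:
    """Compare two ceph.conf texts.

    Returns (sections only in local, sections only in remote,
             {shared section: keys that differ or exist on one side only}).
    """
    # interpolation=None so that '%' in values is taken literally.
    local = configparser.ConfigParser(interpolation=None)
    remote = configparser.ConfigParser(interpolation=None)
    local.read_string(local_text)
    remote.read_string(remote_text)
    local_secs, remote_secs = set(local.sections()), set(remote.sections())
    changed: Dict[str, Set[str]] = {}
    for sec in local_secs & remote_secs:
        lk, rk = dict(local[sec]), dict(remote[sec])
        keys = {k for k in lk.keys() | rk.keys() if lk.get(k) != rk.get(k)}
        if keys:
            changed[sec] = keys
    return local_secs - remote_secs, remote_secs - local_secs, changed
```

Fed the two files above, it would report `client` as a section present only on ceph-mon01 and no differing keys in `[global]`.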

Pull the config file from ceph-mon01 to the local directory

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy config pull ceph-mon01
[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy config pull ceph-mon01
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : pull
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f966fb478c0>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] client : ['ceph-mon01']
[ceph_deploy.cli][INFO ] func : <function config at 0x7f966fd76cf8>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.config][DEBUG ] Checking ceph-mon01 for /etc/ceph/ceph.conf
[ceph-mon01][DEBUG ] connection detected need for sudo
[ceph-mon01][DEBUG ] connected to host: ceph-mon01
[ceph-mon01][DEBUG ] detect platform information from remote host
[ceph-mon01][DEBUG ] detect machine type
[ceph-mon01][DEBUG ] fetch remote file
[ceph_deploy.config][DEBUG ] Got /etc/ceph/ceph.conf from ceph-mon01
[ceph_deploy.config][ERROR ] local config file ceph.conf exists with different content; use --overwrite-conf to overwrite
[ceph_deploy.config][ERROR ] Unable to pull /etc/ceph/ceph.conf from ceph-mon01
[ceph_deploy][ERROR ] GenericError: Failed to fetch config from 1 hosts
[cephadm@ceph-admin ceph-cluster]$ ceph-deploy --overwrite-conf config pull ceph-mon01
[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy --overwrite-conf config pull ceph-mon01
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] overwrite_conf : True
[ceph_deploy.cli][INFO ] subcommand : pull
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fa2f65438c0>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] client : ['ceph-mon01']
[ceph_deploy.cli][INFO ] func : <function config at 0x7fa2f6772cf8>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.config][DEBUG ] Checking ceph-mon01 for /etc/ceph/ceph.conf
[ceph-mon01][DEBUG ] connection detected need for sudo
[ceph-mon01][DEBUG ] connected to host: ceph-mon01
[ceph-mon01][DEBUG ] detect platform information from remote host
[ceph-mon01][DEBUG ] detect machine type
[ceph-mon01][DEBUG ] fetch remote file
[ceph_deploy.config][DEBUG ] Got /etc/ceph/ceph.conf from ceph-mon01
[cephadm@ceph-admin ceph-cluster]$ ls
ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log
ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring
[cephadm@ceph-admin ceph-cluster]$ cat ceph.conf
[global]
fsid = 7fd4a619-9767-4b46-9cee-78b9dfe88f34
mon_initial_members = ceph-mon01
mon_host = 192.168.0.71
public_network = 192.168.0.0/24
cluster_network = 172.16.30.0/24
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
[client]
rgw_frontends = "civetweb port=8080"
[cephadm@ceph-admin ceph-cluster]$

Note: if a local config file already exists, add the --overwrite-conf option to force it to be overwritten.

Distribute the local config file to all cluster hosts again

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy --overwrite-conf config push ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy --overwrite-conf config push ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : push

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fcf983488c0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-mon01', 'ceph-mon02', 'ceph-mon03', 'ceph-mgr01', 'ceph-mgr02']

[ceph_deploy.cli][INFO ] func : <function config at 0x7fcf98577cf8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon01

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph-mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon02

[ceph-mon02][DEBUG ] connection detected need for sudo

[ceph-mon02][DEBUG ] connected to host: ceph-mon02

[ceph-mon02][DEBUG ] detect platform information from remote host

[ceph-mon02][DEBUG ] detect machine type

[ceph-mon02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon03

[ceph-mon03][DEBUG ] connection detected need for sudo

[ceph-mon03][DEBUG ] connected to host: ceph-mon03

[ceph-mon03][DEBUG ] detect platform information from remote host

[ceph-mon03][DEBUG ] detect machine type

[ceph-mon03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr01

[ceph-mgr01][DEBUG ] connection detected need for sudo

[ceph-mgr01][DEBUG ] connected to host: ceph-mgr01

[ceph-mgr01][DEBUG ] detect platform information from remote host

[ceph-mgr01][DEBUG ] detect machine type

[ceph-mgr01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr02

[ceph-mgr02][DEBUG ] connection detected need for sudo

[ceph-mgr02][DEBUG ] connected to host: ceph-mgr02

[ceph-mgr02][DEBUG ] detect platform information from remote host

[ceph-mgr02][DEBUG ] detect machine type

[ceph-mgr02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[cephadm@ceph-admin ceph-cluster]$

Deploy the MDS daemons again

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mds create ceph-mon01 ceph-mon03

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mds create ceph-mon01 ceph-mon03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fc39019c7e8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mds at 0x7fc3903f5050>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] mds : [('ceph-mon01', 'ceph-mon01'), ('ceph-mon03', 'ceph-mon03')]

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts ceph-mon01:ceph-mon01 ceph-mon03:ceph-mon03

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mds][DEBUG ] remote host will use systemd

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to ceph-mon01

[ceph-mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mon01][WARNIN] mds keyring does not exist yet, creating one

[ceph-mon01][DEBUG ] create a keyring file

[ceph-mon01][DEBUG ] create path if it doesn't exist

[ceph-mon01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.ceph-mon01 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-ceph-mon01/keyring

[ceph-mon01][INFO ] Running command: sudo systemctl enable ceph-mds@ceph-mon01

[ceph-mon01][WARNIN] Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@ceph-mon01.service to /usr/lib/systemd/system/ceph-mds@.service.

[ceph-mon01][INFO ] Running command: sudo systemctl start ceph-mds@ceph-mon01

[ceph-mon01][INFO ] Running command: sudo systemctl enable ceph.target

[ceph-mon03][DEBUG ] connection detected need for sudo

[ceph-mon03][DEBUG ] connected to host: ceph-mon03

[ceph-mon03][DEBUG ] detect platform information from remote host

[ceph-mon03][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mds][DEBUG ] remote host will use systemd

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to ceph-mon03

[ceph-mon03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mon03][DEBUG ] create path if it doesn't exist

[ceph-mon03][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.ceph-mon03 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-ceph-mon03/keyring

[ceph-mon03][INFO ] Running command: sudo systemctl enable ceph-mds@ceph-mon03

[ceph-mon03][INFO ] Running command: sudo systemctl start ceph-mds@ceph-mon03

[ceph-mon03][INFO ] Running command: sudo systemctl enable ceph.target

[cephadm@ceph-admin ceph-cluster]$

Check the MDS status

[cephadm@ceph-admin ceph-cluster]$ ceph mds stat

cephfs-1/1/1 up {0=ceph-mon02=up:active}, 2 up:standby

[cephadm@ceph-admin ceph-cluster]$ ceph fs status cephfs

cephfs - 0 clients

======

+------+--------+------------+---------------+-------+-------+

| Rank | State | MDS | Activity | dns | inos |

+------+--------+------------+---------------+-------+-------+

| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |

+------+--------+------------+---------------+-------+-------+

+---------------------+----------+-------+-------+

| Pool | type | used | avail |

+---------------------+----------+-------+-------+

| cephfs-metadatapool | metadata | 59.8k | 280G |

| cephfs-datapool | data | 3391k | 280G |

+---------------------+----------+-------+-------+

+-------------+

| Standby MDS |

+-------------+

| ceph-mon03 |

| ceph-mon01 |

+-------------+

MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)

[cephadm@ceph-admin ceph-cluster]$

Note: there are now two MDS daemons in the standby state and one in the active state.

Managing ranks

To increase the number of Active MDS daemons, use: ceph fs set <fsname> max_mds <number>

[cephadm@ceph-admin ceph-cluster]$ ceph fs set cephfs max_mds 2
[cephadm@ceph-admin ceph-cluster]$ ceph fs status cephfs
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+--------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | active | ceph-mon01 | Reqs: 0 /s | 10 | 13 |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 61.1k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
| ceph-mon03 |
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$

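Note: the actual number of ranks in the file system only grows if a standby daemon is available to take the new rank. A multi-active MDS setup still needs redundant standby daemons for high availability, so max_mds should always be at least 1 less than the number of available MDS daemons.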
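The sizing rule in this note is simple arithmetic. This hypothetical Python helper spells it out for a given max_mds and number of running ceph-mds daemons; it models only the counting, not how the MONs actually pick which daemon takes which rank.

```python
from typing import Dict, Union


def plan_ranks(max_mds: int, available_daemons: int) -> Dict[str, Union[int, bool]]:
    """Ranks only come up if a daemon can take them; leftovers stay standby."""
    if max_mds < 1 or available_daemons < 0:
        raise ValueError("max_mds must be >= 1 and available_daemons >= 0")
    active = min(max_mds, available_daemons)
    standby = available_daemons - active
    return {
        "active_ranks": active,
        "standby": standby,
        "has_spare_for_failover": standby >= 1,
        # Following the rule above: keep max_mds at most daemons - 1.
        "largest_max_mds_keeping_a_spare": max(available_daemons - 1, 0),
    }
```

For the three daemons in this cluster, `plan_ranks(2, 3)` gives two active ranks and one standby, which matches the `ceph fs status` output above.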

Decreasing the number of Active MDS daemons

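In releases before Mimic (such as Luminous), lowering max_mds only limits the creation of new ranks and does not affect the existing Active MDS daemons or the ranks they hold, so after lowering max_mds the administrator has to deactivate the ranks that are no longer needed by hand. Command format: ceph mds deactivate {fsname:rank|FSCID:rank|rank}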

[cephadm@ceph-admin ceph-cluster]$ ceph fs set cephfs max_mds 1
[cephadm@ceph-admin ceph-cluster]$ ceph fs status
cephfs - 0 clients
======
+------+----------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+----------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | stopping | ceph-mon01 | | 10 | 13 |
+------+----------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 61.6k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
| ceph-mon03 |
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$ ceph mds deactivate cephfs:1
Error ENOTSUP: command is obsolete; please check usage and/or man page
[cephadm@ceph-admin ceph-cluster]$ ceph fs status
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+--------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 62.1k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
| ceph-mon03 |
| ceph-mon01 |
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$

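Note: ceph mds deactivate reports that the command is obsolete, yet the extra MDS still went back to standby. That is because, starting with Mimic, lowering max_mds makes the cluster stop the surplus ranks automatically: rank 1 was already in the stopping state before deactivate was run, and once it finished stopping, ceph-mon01 returned to the standby list.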

Manually pinning directory subtrees to ranks

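A CephFS cluster with multiple Active MDS daemons runs a balancer that schedules the metadata load, and this mode is usually good enough for most users. In some scenarios, however, users need an explicit mapping of metadata to particular ranks that overrides the dynamic balancer, in order to distribute application load across the cluster in a custom way. The mechanism provided for this is called an "export pin", and it is the directory extended attribute ceph.dir.pin. It is set with: setfattr -n ceph.dir.pin -v RANK /PATH/TO/DIR. The value of the attribute (-v) is the rank the directory subtree is pinned to; the default, -1, means the directory is not pinned. A directory's export pin is inherited from its nearest parent that has an export pin set, so setting an export pin on a directory affects all of its subdirectories.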
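The inheritance rule (nearest ancestor with a pin wins, -1 means not pinned) can be expressed as a short Python function. `effective_pin` and the `pins` dictionary are hypothetical: the dictionary stands in for the ceph.dir.pin values you would have set with setfattr, with normalized paths (no trailing slash) relative to the CephFS root. Treating an explicit -1 as "no pin here, keep looking upward" is an assumption based on -1 being the default.

```python
from pathlib import PurePosixPath
from typing import Dict


def effective_pin(path: str, pins: Dict[str, int]) -> int:
    """Return the rank a directory is effectively pinned to, or -1.

    Walks from the directory itself up to "/", returning the first explicit
    pin found; -1 entries are treated as "not set" and skipped.
    """
    p = PurePosixPath(path)
    for candidate in (p, *p.parents):
        rank = pins.get(str(candidate), -1)
        if rank != -1:
            return rank
    return -1  # nothing pinned on the way up: the balancer decides
```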

[cephadm@ceph-admin ceph-cluster]$ sefattr
-bash: sefattr: command not found
[cephadm@ceph-admin ceph-cluster]$ yum provides setfattr
Loaded plugins: fastestmirror
Repository epel is listed more than once in the configuration
Repository epel-debuginfo is listed more than once in the configuration
Repository epel-source is listed more than once in the configuration
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
attr-2.4.46-13.el7.x86_64 : Utilities for managing filesystem extended attributes
Repo : base
Matched from:
Filename : /usr/bin/setfattr
[cephadm@ceph-admin ceph-cluster]$

Note: this requires the setfattr command on the system; if it is missing, install the attr package.
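On CentOS 7 the package is installed with `yum install -y attr`. For tooling written in Python, the same attribute can be set through the standard `os.setxattr` call (Linux only). A minimal sketch, assuming the target is a directory on a mounted CephFS; `encode_pin` and `pin_dir` are hypothetical helper names, and `/mnt/cephfs/data` in the usage line is an example path.

```python
import os


def encode_pin(rank: int) -> bytes:
    """ceph.dir.pin takes the rank as a decimal string; -1 means 'not pinned'."""
    if rank < -1:
        raise ValueError("rank must be -1 or a non-negative integer")
    return str(rank).encode("ascii")


def pin_dir(path: str, rank: int) -> None:
    """Same effect as: setfattr -n ceph.dir.pin -v RANK PATH.

    Only meaningful on a CephFS mount; other file systems will typically
    reject the 'ceph.' attribute name with an OSError.
    """
    if not os.path.isdir(path):
        raise NotADirectoryError(path)
    os.setxattr(path, "ceph.dir.pin", encode_pin(rank))


# Usage (hypothetical mount point): pin_dir("/mnt/cephfs/data", 1)
```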

MDS failover mechanism

For redundancy, every CephFS should have some ceph-mds daemons in the Standby state waiting to take over failed ranks. CephFS provides four options that control how Standby MDS daemons behave:

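1. mds_standby_replay: a boolean. true means this MDS daemon continuously reads the metadata journal of a specific Up rank, so it holds a warm cache of that rank's metadata and speeds up failover when the rank fails. An Up rank can have only one replay daemon; any extra ones are automatically demoted to normal, non-replay standbys.

2. mds_standby_for_name: makes this MDS a standby only for the rank held by the MDS daemon with the given name.

3. mds_standby_for_rank: makes this MDS a standby only for the specified rank; it will not take over any other failed rank. When there are multiple CephFS file systems, it can be combined with the next option to specify which file system's rank it backs up.

4. mds_standby_for_fscid: works together with mds_standby_for_rank. If mds_standby_for_rank is also set, the daemon is a standby for that rank of the file system with the given fscid; if mds_standby_for_rank is not set, it is a standby for any rank of that fscid.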

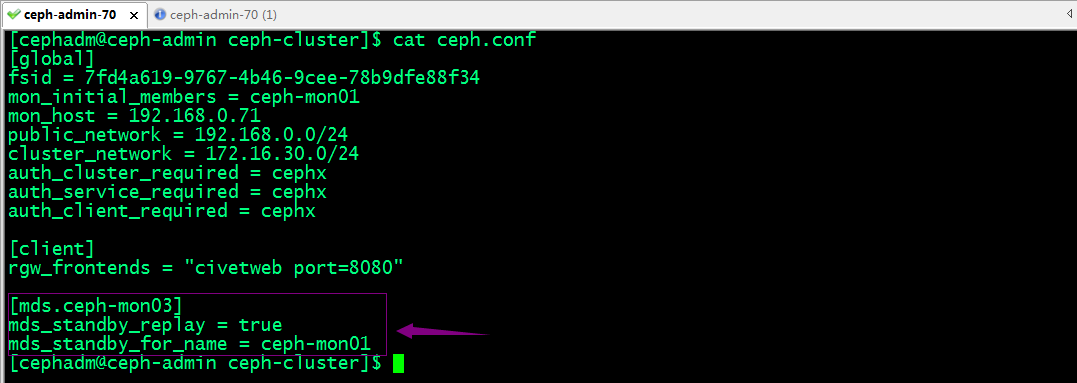

Configuring a redundant MDS

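Note: the configuration above makes the redundant MDS on ceph-mon03 a hot standby (standby-replay) for ceph-mon01: when ceph-mon01 fails, ceph-mon03 automatically takes over the rank that ceph-mon01 held.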
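The screenshot's exact contents are not reproduced here. Assuming it adds a `[mds.ceph-mon03]` section to ceph.conf in the ceph-cluster directory with the two options that match the behaviour described (follow ceph-mon01 by name, with replay enabled), the snippet can be generated with Python's `configparser` as below; `standby_replay_section` is a hypothetical helper. These `mds_standby_*` options belong to the Mimic release used here; in Nautilus and later they were removed, and standby-replay is enabled per file system with `ceph fs set <fsname> allow_standby_replay true`.

```python
import configparser
import io


def standby_replay_section(standby: str, follow: str) -> str:
    """Render a ceph.conf snippet that makes MDS `standby` a standby-replay
    follower of the MDS daemon named `follow` (Mimic-era option names)."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg[f"mds.{standby}"] = {
        "mds_standby_for_name": follow,  # only stand by for this daemon's rank
        "mds_standby_replay": "true",    # keep replaying its journal (warm cache)
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


print(standby_replay_section("ceph-mon03", "ceph-mon01"))
```

The printed section would be appended to ceph-cluster/ceph.conf and then pushed to the hosts as in the next step.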

Push the config to all cluster hosts

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy --overwrite-conf config push ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy --overwrite-conf config push ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : push

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f03332968c0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-mon01', 'ceph-mon02', 'ceph-mon03', 'ceph-mgr01', 'ceph-mgr02']

[ceph_deploy.cli][INFO ] func : <function config at 0x7f03334c5cf8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon01

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph-mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon02

[ceph-mon02][DEBUG ] connection detected need for sudo

[ceph-mon02][DEBUG ] connected to host: ceph-mon02

[ceph-mon02][DEBUG ] detect platform information from remote host

[ceph-mon02][DEBUG ] detect machine type

[ceph-mon02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mon03

[ceph-mon03][DEBUG ] connection detected need for sudo

[ceph-mon03][DEBUG ] connected to host: ceph-mon03

[ceph-mon03][DEBUG ] detect platform information from remote host

[ceph-mon03][DEBUG ] detect machine type

[ceph-mon03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr01

[ceph-mgr01][DEBUG ] connection detected need for sudo

[ceph-mgr01][DEBUG ] connected to host: ceph-mgr01

[ceph-mgr01][DEBUG ] detect platform information from remote host

[ceph-mgr01][DEBUG ] detect machine type

[ceph-mgr01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to ceph-mgr02

[ceph-mgr02][DEBUG ] connection detected need for sudo

[ceph-mgr02][DEBUG ] connected to host: ceph-mgr02

[ceph-mgr02][DEBUG ] detect platform information from remote host

[ceph-mgr02][DEBUG ] detect machine type

[ceph-mgr02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[cephadm@ceph-admin ceph-cluster]$

Stop the MDS on ceph-mon01 and see whether ceph-mon03 takes over

[cephadm@ceph-admin ceph-cluster]$ ceph fs status cephfs
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+--------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | active | ceph-mon01 | Reqs: 0 /s | 10 | 13 |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 65.3k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
| ceph-mon03 |
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$ ssh ceph-mon01 'systemctl stop ceph-mds@ceph-mon01.service'
Failed to stop ceph-mds@ceph-mon01.service: Interactive authentication required.
See system logs and 'systemctl status ceph-mds@ceph-mon01.service' for details.
[cephadm@ceph-admin ceph-cluster]$ ssh ceph-mon01 'sudo systemctl stop ceph-mds@ceph-mon01.service'
[cephadm@ceph-admin ceph-cluster]$ ceph fs status cephfs
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+--------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | rejoin | ceph-mon03 | | 0 | 3 |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 65.3k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$ ceph fs status cephfs
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+--------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | active | ceph-mon03 | Reqs: 0 /s | 10 | 13 |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 65.3k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$

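Note: after ceph-mon01 fails, ceph-mon03 automatically takes over the rank that ceph-mon01 held, passing briefly through the rejoin state before becoming active.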

Bring ceph-mon01 back

[cephadm@ceph-admin ceph-cluster]$ ceph fs status
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+--------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | active | ceph-mon03 | Reqs: 0 /s | 10 | 13 |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 65.3k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$ ssh ceph-mon01 'sudo systemctl start ceph-mds@ceph-mon01.service'
[cephadm@ceph-admin ceph-cluster]$ ceph fs status
cephfs - 0 clients
======
+------+--------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+--------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | active | ceph-mon03 | Reqs: 0 /s | 10 | 13 |
+------+--------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 65.3k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
| ceph-mon01 |
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$ ssh ceph-mon03 'sudo systemctl restart ceph-mds@ceph-mon03.service'
[cephadm@ceph-admin ceph-cluster]$ ceph fs status
cephfs - 0 clients
======
+------+----------------+------------+---------------+-------+-------+
| Rank | State | MDS | Activity | dns | inos |
+------+----------------+------------+---------------+-------+-------+
| 0 | active | ceph-mon02 | Reqs: 0 /s | 18 | 17 |
| 1 | active | ceph-mon01 | Reqs: 0 /s | 10 | 13 |
| 1-s | standby-replay | ceph-mon03 | Evts: 0 /s | 0 | 3 |
+------+----------------+------------+---------------+-------+-------+
+---------------------+----------+-------+-------+
| Pool | type | used | avail |
+---------------------+----------+-------+-------+
| cephfs-metadatapool | metadata | 65.3k | 280G |
| cephfs-datapool | data | 3391k | 280G |
+---------------------+----------+-------+-------+
+-------------+
| Standby MDS |
+-------------+
+-------------+
MDS version: ceph version 13.2.10 (564bdc4ae87418a232fc901524470e1a0f76d641) mimic (stable)
[cephadm@ceph-admin ceph-cluster]$

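Note: once ceph-mon01 is back, it does not pre-empt the current holder of the rank; it simply becomes a standby. When ceph-mon03 is then restarted (or fails), ceph-mon01 automatically takes the rank back, and ceph-mon03 returns as the standby-replay daemon for rank 1 (the 1-s row).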
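The takeover and no-preemption behaviour seen in these transcripts can be summarized in a toy Python model. `ToyFailover` is hypothetical and greatly simplified: on failure it prefers a standby-replay follower of the failed rank, otherwise any standby, and it never lets a recovered daemon pre-empt the current holder. It does not model the rejoin phase or a restarted follower re-attaching as standby-replay.

```python
from typing import Dict, List, Optional


class ToyFailover:
    """Toy model: holders maps rank -> daemon, standbys is the idle pool,
    replay_for maps a standby-replay daemon -> the rank it follows."""

    def __init__(self, holders: Dict[int, str], standbys: List[str],
                 replay_for: Optional[Dict[str, int]] = None) -> None:
        self.holders = dict(holders)
        self.standbys = list(standbys)
        self.replay_for = dict(replay_for or {})

    def fail(self, daemon: str) -> Optional[str]:
        """Daemon stops; returns the daemon that takes over its rank, if any.
        Raises StopIteration if the daemon is neither a holder nor a standby."""
        if daemon in self.standbys:
            self.standbys.remove(daemon)
            return None
        rank = next(r for r, d in self.holders.items() if d == daemon)
        del self.holders[rank]
        # Prefer a follower that has been replaying this rank's journal.
        followers = [s for s in self.standbys if self.replay_for.get(s) == rank]
        pool = followers or self.standbys
        if not pool:
            return None  # rank stays failed until some daemon is available
        successor = pool[0]
        self.standbys.remove(successor)
        self.replay_for.pop(successor, None)
        self.holders[rank] = successor
        return successor

    def recover(self, daemon: str) -> None:
        """A restarted daemon never pre-empts; it just joins the standby pool."""
        self.standbys.append(daemon)
```

Replaying this post's sequence: `ToyFailover({0: "ceph-mon02", 1: "ceph-mon01"}, ["ceph-mon03"], {"ceph-mon03": 1})`, then failing ceph-mon01 hands rank 1 to ceph-mon03, recovering ceph-mon01 leaves it on standby, and failing ceph-mon03 hands rank 1 back to ceph-mon01.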