SUSE Ceph 的 'MAX AVAIL' 和 數據平衡 – Storage 6

- 2019 年 10 月 3 日

- 筆記

1. 客戶環境

-

節點數量:4個存儲節點

-

OSD數量:每個節點10塊8GB磁碟,總共 40 塊OSD

-

Ceph 版本: Storage 6

-

使用類型: CephFS 文件

-

CephFS數據池: EC, 2+1

-

元數據池: 3 replication

-

客戶端:sles12sp3

2. 問題描述

客戶詢問為什麼突然少了那麼多存儲容量?

1) 客戶在存儲數據前掛載cephfs,磁碟容量顯示185T

# df -Th 10.109.205.61,10.109.205.62,10.109.205.63:/ 185T 50G 184T 1% /SES

2) 但在客戶存儲數據後,發現掛載的存儲容量少了8T,只有177T

# df -Th 10.109.205.61,10.109.205.62,10.109.205.63:/ 177T 50G 184T 1% /SES

3. 問題分析

從客戶獲取 “ceph df ”資訊進行比較

(1)未存儲數據前資訊

# ceph df RAW STORAGE: CLASS SIZE AVAIL USED RAW USED %RAW USED hdd 297 TiB 291 TiB 5.9 TiB 5.9 TiB 1.99 TOTAL 297 TiB 291 TiB 5.9 TiB 5.9 TiB 1.99 POOLS: POOL ID STORED OBJECTS USED %USED MAX AVAIL cephfs_data 1 0 B 0 0 B 0 184 TiB cephfs_metadata 2 6.7 KiB 60 3.8 MiB 0 92 TiB

(2)客戶存儲數據後資訊輸出

# ceph df RAW STORAGE: CLASS SIZE AVAIL USED RAW USED %RAW USED hdd 297 TiB 239 TiB 57 TiB 58 TiB 19.37 TOTAL 297 TiB 239 TiB 57 TiB 58 TiB 19.37 POOLS: POOL ID STORED OBJECTS USED %USED MAX AVAIL cephfs_data 1 34 TiB 9.75M 51 TiB 19.40 142 TiB cephfs_metadata 2 1.0 GiB 9.23K 2.9 GiB 0 71 TiB

我們發現客戶端掛載顯示的存儲容量 MAX AVAIL + STORED

(1)存儲數據前:185TB = 184 + 0

(2)存儲數據後:177TB = 142 + 34 = 176

(3)從中可以發現少掉 7TB 數據量是由 ‘MAX AVAIL’引起的變化

(4)SUSE 知識庫對 MAX AVAIL 計算公式如下:

[min(osd.avail for osd in OSD_up) - ( min(osd.avail for osd in OSD_up).total_size * (1 - mon_osd_full_ratio)) ]* len(osd.avail for osd in OSD_up) /pool.size()

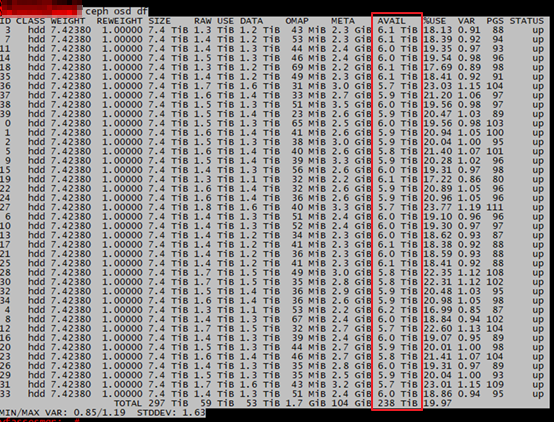

(5)命令 ceph osd df 輸出資訊

圖1

因此可以推算出:

(1)未存儲數據之前容量計算:

185 TB (Max avail) = min(osd.avail for osd in OSD_up) * num of OSD * 66.66% * mon_osd_full_ratio = 7.4 * 40 * 66.66% * 0.95= 195 TB * 0.95 =185

(2)存儲數據後容量計算

142 TB (MAX avail)= min(osd.avail for osd in OSD_up) * num of OSD * 66.66% * mon_osd_full_ratio = 5.7 * 40 *66.66% * 0.95 = 151 TB * 0.95 = 143

公式解釋

1) min(osd.avail for osd in OSD_up):從ceph osd df 中AVAIL一列選擇最小值。

- 未存儲數據時最小值是7.4TB

- 而存儲數據後最小值是5.7TB

2) num of OSD :表示集群中UP狀態的OSD數量,那客戶新集群OSD數量就40

3) 66.66% : 因為糾刪碼原因,2+1 的比例

4) mon_osd_full_ratio = 95%

When a Ceph Storage cluster gets close to its maximum capacity (specifies by the mon_osd_full_ratio parameter), Ceph prevents you from writing to or reading from Ceph OSDs as a safety measure to prevent data loss. Therefore, letting a production Ceph Storage cluster approach its full ratio is not a good practice, because it sacrifices high availability. The default full ratio is .95, or 95% of capacity. This a very aggressive setting for a test cluster with a small number of OSDs.

ceph daemon osd.2 config get mon_osd_full_ratio { "mon_osd_full_ratio": "0.950000" }

4. 解決方案

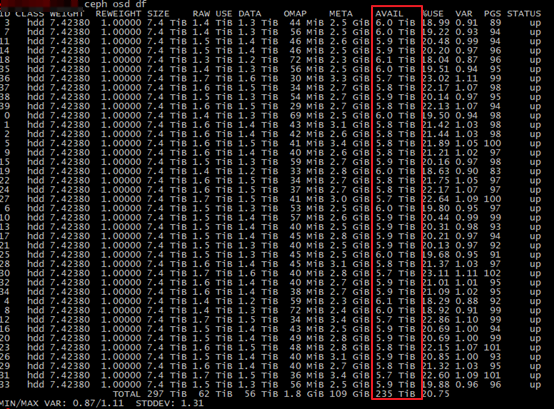

從上面公式分析,我們可以清楚的看到主要是 MAX AVAIL 的計算公式原因導致存儲容量的變化,這是淺層次的分析問題所在,其實在圖1中我們可以仔細的發現主要是OSD數據不均勻導致。

- 客戶詢問我如何才能讓數據平衡,我的回答是PG數量計算 和 Storage6 新功能自動平衡。

(1)開啟ceph 數據平衡

# ceph balancer on

# ceph balancer mode crush-compat

# ceph balancer status { "active": true, "plans": [], "mode": "crush-compat" }

(2)數據自動開始遷移

cluster: id: 8038197f-81a2-47ae-97d1-fb3c6b31ea94 health: HEALTH_OK services: mon: 3 daemons, quorum yfasses1,yfasses2,yfasses3 (age 5d) mgr: yfasses1(active, since 5d), standbys: yfasses2, yfasses3 mds: cephfs:2 {0=yfasses4=up:active,1=yfasses2=up:active} 1 up:standby osd: 40 osds: 40 up (since 5d), 40 in (since 5d); 145 remapped pgs data: pools: 2 pools, 1280 pgs objects: 10.40M objects, 36 TiB usage: 61 TiB used, 236 TiB / 297 TiB avail pgs: 1323622/31197615 objects misplaced (4.243%) 1135 active+clean 124 active+remapped+backfill_wait 21 active+remapped+backfilling io: client: 91 MiB/s rd, 125 MiB/s wr, 882 op/s rd, 676 op/s wr recovery: 1.0 GiB/s, 279 objects/s

(3)等遷移後基本所有的OSD數據保持差不多數據量

(4) 客戶端掛載顯示180TB

10.109.205.61,10.109.205.62,10.109.205.63:/ 180T 37T 143T 21% /SES