Pod Lifecycle

- July 4, 2021

- Notes

- Kubernetes, Linux Learning

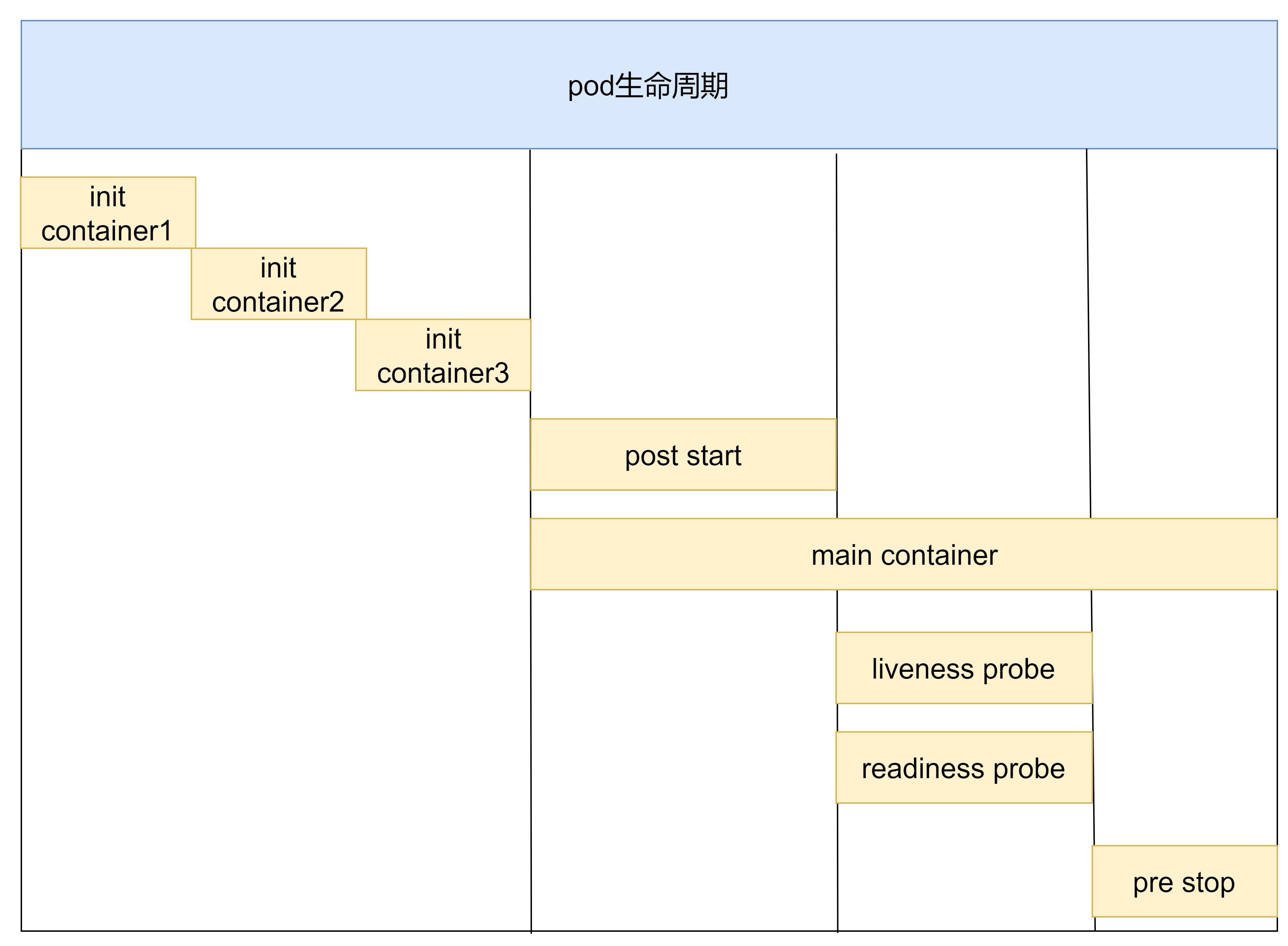


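The period from a pod object's creation to its termination is generally called the pod's lifecycle. It mainly consists of the following stages:

- Pod creation

- Running the init containers

- Running the main containers

- Post-start hook and pre-stop hook of each container

- Liveness probe and readiness probe of each container

- Pod termination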
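
As a rough map of where these stages show up in a manifest, the sketch below annotates a single pod spec. The pod name, commands, and probe paths are placeholders chosen for illustration and are not taken from a real deployment.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-overview            # hypothetical name
  namespace: dev
spec:
  terminationGracePeriodSeconds: 30   # pod termination: grace period (30s is the default)
  initContainers:                     # run one by one before the main containers
  - name: init-step
    image: busybox:1.30
    command: ['sh', '-c', 'echo preparing']
  containers:                         # main containers
  - name: main-container
    image: nginx:1.17.1
    lifecycle:
      postStart:                      # hook run right after the container is created
        exec:
          command: ['sh', '-c', 'echo started']
      preStop:                        # hook run before the container is stopped
        exec:
          command: ['/usr/sbin/nginx', '-s', 'quit']
    livenessProbe:                    # failing probe: the container is restarted
      httpGet:
        path: /
        port: 80
    readinessProbe:                   # failing probe: no traffic is routed to the pod
      httpGet:
        path: /
        port: 80
```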

Pod creation and termination

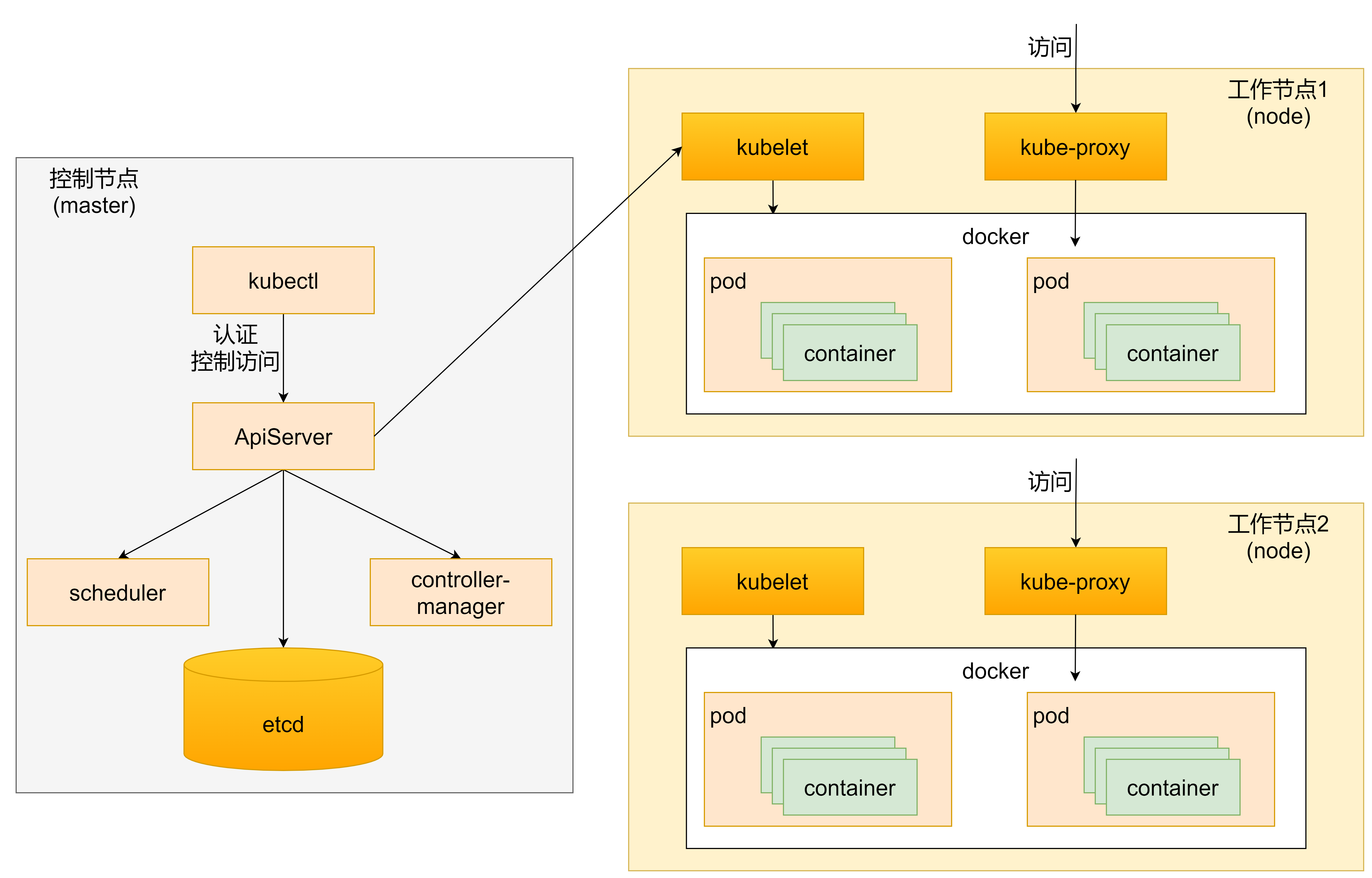

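Pod creation process

- The user submits the definition of the pod to be created to the apiserver through kubectl or another API client

- The apiserver generates the pod object, stores it in etcd, and returns a confirmation to the client

- The apiserver begins to reflect changes to the pod object in etcd; other components use the watch mechanism to track changes on the apiserver

- The scheduler notices that a new pod object needs to be placed, assigns a node to the pod, and writes the result back to the apiserver

- The kubelet on the selected node notices that a pod has been scheduled to it, calls Docker to start the containers, and reports the result back to the apiserver

- The apiserver stores the received pod status in etcd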
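
To make these hand-offs more concrete, the abridged sketch below shows the kind of fields that end up on the stored pod object: the scheduler's decision appears as `spec.nodeName`, and the kubelet's report appears under `status`. The values (node1, the IP address) are illustrative and mirror the examples later in this post; a real object contains many more fields.

```yaml
# Abridged view of a pod object after scheduling and startup,
# roughly what `kubectl get pod <name> -n dev -o yaml` prints.
# All values are illustrative.
spec:
  nodeName: node1            # recorded as a result of the scheduler's decision
status:
  phase: Running             # reported by the kubelet on node1
  podIP: 10.244.2.22
  conditions:
  - type: PodScheduled
    status: "True"
  - type: Ready
    status: "True"
```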

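Pod termination process

- The user sends a command to the apiserver to delete the pod object

- The pod object in the apiserver is updated with the grace period (30s by default) and the time beyond which the pod is considered dead

- The pod is marked as Terminating

- As soon as the kubelet sees the pod object enter the Terminating state, it starts the pod shutdown process

- When the endpoints controller observes the pod shutting down, it removes the pod from the endpoint lists of all Service resources that match it

- If the pod defines a preStop hook handler, the handler is run synchronously once the pod is marked as Terminating

- The container processes in the pod receive the stop signal (SIGTERM by default)

- When the grace period expires, any process still running in the pod receives the immediate-termination signal (SIGKILL)

- The kubelet asks the apiserver to set the grace period of this pod resource to 0, which completes the deletion; at this point the pod is no longer visible to the user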
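
The grace period in these steps is configurable per pod. The following sketch, with a hypothetical pod name and an arbitrary 60-second value, lengthens it and adds a preStop hook so that nginx can finish in-flight requests before the stop signal arrives.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-graceful-stop             # hypothetical name
  namespace: dev
spec:
  terminationGracePeriodSeconds: 60   # assumption: 60s instead of the 30s default
  containers:
  - name: main-container
    image: nginx:1.17.1
    lifecycle:
      preStop:                        # runs synchronously before the stop signal is sent
        exec:
          command: ['/usr/sbin/nginx', '-s', 'quit']
```

The time spent in the preStop hook counts against the grace period. The grace period can also be overridden at deletion time, for example with `kubectl delete pod pod-graceful-stop -n dev --grace-period=10`.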

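Init containers

Init containers run before the pod's main containers start and mainly handle preparatory work for the main containers. They have two defining characteristics:

- An init container must run to completion. If an init container fails, Kubernetes restarts it until it succeeds (unless the pod's restartPolicy is Never, in which case the pod is treated as failed)

- Init containers run in the order in which they are defined; the next one starts only after the previous one has succeeded

Init containers have many uses. The most common ones are:

- Providing utilities or custom code that the main container image does not include

- Because init containers start serially and run to completion before the application containers, they can delay the start of the application containers until their dependencies are satisfied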
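
The first use case can be sketched with a shared emptyDir volume: an init container writes a file that the main container's image does not ship, and the main container then serves it. The pod name, volume name, and page content are assumptions made up for this illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-init-content          # hypothetical name
  namespace: dev
spec:
  volumes:
  - name: web-content             # scratch volume shared by both containers
    emptyDir: {}
  initContainers:
  - name: prepare-content         # runs to completion before nginx starts
    image: busybox:1.30
    command: ['sh', '-c', 'echo "generated by init container" > /work/index.html']
    volumeMounts:
    - name: web-content
      mountPath: /work
  containers:
  - name: main-container
    image: nginx:1.17.1
    volumeMounts:
    - name: web-content           # nginx serves the file written by the init container
      mountPath: /usr/share/nginx/html
```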

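The following example simulates this requirement:

Suppose nginx runs in the main container, but the servers hosting MySQL and Redis must be reachable before nginx starts.

To keep the test simple, the addresses of the MySQL and Redis servers are fixed in advance.

Create pod-initcontainer.yaml with the following content: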

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-initcontainer
      namespace: dev
      labels:
        user: ayanami
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
      initContainers:
      - name: test-mysql
        image: busybox:1.30
        command: ['sh','-c','until ping 192.168.145.231 -c 1; do echo waiting for mysql...;sleep 2; done']
      - name: test-redis
        image: busybox:1.30
        command: ['sh','-c','until ping 192.168.145.232 -c 1; do echo waiting for redis...;sleep 2; done']

Apply the manifest

    [root@master ~]# vim pod-initcontainer.yaml
    [root@master ~]# kubectl create -f pod-initcontainer.yaml
    pod/pod-initcontainer created
    [root@master ~]# kubectl get pod pod-initcontainer -n dev
    NAME                READY   STATUS     RESTARTS   AGE
    pod-initcontainer   0/1     Init:0/2   0          20s

The pod stays in the Init state because neither address can be pinged yet.

Now add the IP addresses (note: ens32 is the name of the network interface; it may differ between machines and can be checked with ifconfig)

    [root@master ~]# ifconfig ens32:1 192.168.145.231 netmask 255.255.255.0 up
    [root@master ~]# ifconfig ens32:2 192.168.145.232 netmask 255.255.255.0 up
    [root@master ~]# kubectl get pod pod-initcontainer -n dev
    NAME                READY   STATUS    RESTARTS   AGE
    pod-initcontainer   1/1     Running   0          19m

The pod is now running.

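Hook functions

Hook functions let a container react to events in its own lifecycle and run user-specified code when the corresponding moment arrives.

Kubernetes provides two hooks, one after the main container starts and one before it stops:

- post start: runs after the container is created; if it fails, the container is killed and then restarted according to the pod's restart policy

- pre stop: runs before the container is terminated; the container is terminated once the hook completes, and the operation that deletes the container is blocked until then

Hook handlers can define their action in one of three ways:

- Exec: runs a command once inside the container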

      ......
        lifecycle:
          postStart:
            exec:
              command:
              - cat
              - /tmp/healthy
      ......

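- TCPSocket: attempts to connect to the specified socket on the current container (the `kubectl explain` output at the end of this post notes "TCP hooks not yet supported", so treat this form with caution)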

      ......
        lifecycle:
          postStart:
            tcpSocket:
              port: 8080
      ......

- HTTPGet: issues an HTTP request against a URL on the current container

      ......
        lifecycle:
          postStart:
            httpGet:
              path:         # URI path
              port:
              host:
              scheme: HTTP  # supported protocols: HTTP or HTTPS
      ......
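
With the blanks filled in, an HTTP hook might look like the fragment below. It is only a sketch: the `/shutdown` endpoint and port 8080 are hypothetical and assume the application exposes such a handler. A preStop hook is used here because the application is certain to be listening by the time it fires.

```yaml
# Fragment of a container spec; the endpoint and port are hypothetical.
lifecycle:
  preStop:
    httpGet:
      path: /shutdown     # URI path to request
      port: 8080          # container port to connect to
      scheme: HTTP        # HTTP (the default) or HTTPS
      # host is omitted, so the request goes to the pod's own IP
```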

The following example demonstrates the hooks in use.

Create pod-hook-exec.yaml with the following content:

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-hook-exec
      namespace: dev
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
        lifecycle:
          postStart:
            exec: # run a command when the container starts to replace nginx's default index page
              command: ["/bin/sh","-c","echo postStart... > /usr/share/nginx/html/index.html"]
          preStop: # stop the nginx service before the container stops
            exec:
              command: ["/usr/sbin/nginx","-s","quit"]

Apply the manifest

    [root@master ~]# vim pod-hook-exec.yaml
    [root@master ~]# kubectl create -f pod-hook-exec.yaml
    pod/pod-hook-exec created
    [root@master ~]# kubectl get pod pod-hook-exec -n dev -o wide
    NAME            READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
    pod-hook-exec   1/1     Running   0          43s   10.244.2.22   node1   <none>           <none>
    [root@master ~]# curl 10.244.2.22:80
    postStart...

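Container probes

Container probes check whether the application instance in a container is working properly and are a traditional mechanism for keeping a service available. If a probe shows that the instance is not in the expected state, Kubernetes takes the faulty instance out of service so that it no longer receives traffic. Kubernetes provides two kinds of probes for this:

- liveness probes: check whether the application instance is currently running properly; if not, Kubernetes restarts the container

- readiness probes: check whether the application instance can currently accept requests; if not, Kubernetes does not forward traffic to it

In short, livenessProbe decides whether to restart the container, and readinessProbe decides whether requests are forwarded to it.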
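
The demonstrations later in this post use liveness probes only, so here is a minimal sketch of a container that declares both kinds. The pod name and the choice of probing nginx on port 80 are assumptions for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-probes-both          # hypothetical name
  namespace: dev
spec:
  containers:
  - name: main-container
    image: nginx:1.17.1
    ports:
    - name: nginx-port
      containerPort: 80
    livenessProbe:               # on failure the kubelet restarts the container
      tcpSocket:
        port: 80
    readinessProbe:              # on failure the pod is removed from Service endpoints
      httpGet:
        path: /
        port: 80
```

While the readiness probe fails, `kubectl get pod` shows the pod as 0/1 in the READY column even though its status is Running.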

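Both probes currently support three probing methods:

- Exec: runs a command once inside the container; an exit code of 0 means the program is healthy, anything else means it is not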

      ......
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
      ......

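- TCPSocket: tries to open a connection to a port of the container; if the connection can be established the program is considered healthy, otherwise it is not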

      ......
        livenessProbe:
          tcpSocket:
            port: 8080
      ......

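- HTTPGet: requests a URL of the web application in the container; a returned status code between 200 and 399 means the program is healthy, anything else means it is not

      ......
        livenessProbe:
          httpGet:
            path:         # URI path
            port:
            host:
            scheme: HTTP  # supported protocols: HTTP or HTTPS
      ......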

The following demonstrations use liveness probes as the example:

Method 1: Exec

Create pod-liveness-exec.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-liveness-exec
      namespace: dev
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
        livenessProbe:
          exec:
            command: ["/bin/cat","/tmp/hello.txt"] # run a command that reads a file

Apply the manifest

    [root@master ~]# vim pod-liveness-exec.yaml
    [root@master ~]# kubectl create -f pod-liveness-exec.yaml
    pod/pod-liveness-exec created
    [root@master ~]# kubectl get pod pod-liveness-exec -n dev
    NAME                READY   STATUS    RESTARTS   AGE
    pod-liveness-exec   1/1     Running   1          102s

The pod has restarted once. Check the events for the error:

    [root@master ~]# kubectl describe pod pod-liveness-exec -n dev
      Type     Reason     Age                  From               Message
      ----     ------     ----                 ----               -------
      Normal   Scheduled  <unknown>            default-scheduler  Successfully assigned dev/pod-liveness-exec to node1
      Normal   Pulled     49s (x4 over 2m20s)  kubelet, node1     Container image "nginx:1.17.1" already present on machine
      Normal   Created    49s (x4 over 2m20s)  kubelet, node1     Created container main-container
      Normal   Started    49s (x4 over 2m20s)  kubelet, node1     Started container main-container
      Normal   Killing    49s (x3 over 109s)   kubelet, node1     Container main-container failed liveness probe, will be restarted
      Warning  Unhealthy  39s (x10 over 2m9s)  kubelet, node1     Liveness probe failed: /bin/cat: /tmp/hello.txt: No such file or directory

Modify the file so that the probe runs a command that succeeds

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-liveness-exec
      namespace: dev
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
        livenessProbe:
          exec:
            command: ["/bin/ls","/tmp/"] # run a command that lists a directory

Apply the manifest

    [root@master ~]# vim pod-liveness-exec.yaml
    [root@master ~]# kubectl delete -f pod-liveness-exec.yaml
    [root@master ~]# kubectl create -f pod-liveness-exec.yaml
    pod/pod-liveness-exec created
    [root@master ~]# kubectl get pod pod-liveness-exec -n dev
    NAME                READY   STATUS    RESTARTS   AGE
    pod-liveness-exec   1/1     Running   0          84s

This time the pod has not restarted.

Method 2: TCPSocket

Create pod-liveness-tcpsocket.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-liveness-tcpsocket
      namespace: dev
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
        livenessProbe:
          tcpSocket:
            port: 8080 # try to connect to port 8080

Apply the manifest

    [root@master ~]# vim pod-liveness-tcpsocket.yaml
    [root@master ~]# kubectl create -f pod-liveness-tcpsocket.yaml
    pod/pod-liveness-tcpsocket created
    [root@master ~]# kubectl get pod pod-liveness-tcpsocket -n dev
    NAME                     READY   STATUS    RESTARTS   AGE
    pod-liveness-tcpsocket   1/1     Running   1          29s

    [root@master ~]# kubectl describe pod pod-liveness-tcpsocket -n dev
    Events:
      Type     Reason     Age                  From               Message
      ----     ------     ----                 ----               -------
      Normal   Scheduled  <unknown>            default-scheduler  Successfully assigned dev/pod-liveness-tcpsocket to node1
      Normal   Pulled     43s (x4 over 2m10s)  kubelet, node1     Container image "nginx:1.17.1" already present on machine
      Normal   Created    43s (x4 over 2m10s)  kubelet, node1     Created container main-container
      Normal   Started    43s (x4 over 2m10s)  kubelet, node1     Started container main-container
      Normal   Killing    43s (x3 over 103s)   kubelet, node1     Container main-container failed liveness probe, will be restarted
      Warning  Unhealthy  33s (x10 over 2m3s)  kubelet, node1     Liveness probe failed: dial tcp 10.244.2.25:8080: connect: connection refused

Change the file so that the probe targets the port nginx actually listens on

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-liveness-tcpsocket
      namespace: dev
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
        livenessProbe:
          tcpSocket:
            port: 80 # try to connect to port 80

Apply the manifest again

    [root@master ~]# vim pod-liveness-tcpsocket.yaml
    [root@master ~]# kubectl delete -f pod-liveness-tcpsocket.yaml
    pod "pod-liveness-tcpsocket" deleted
    [root@master ~]# kubectl create -f pod-liveness-tcpsocket.yaml
    pod/pod-liveness-tcpsocket created
    [root@master ~]# kubectl get pod pod-liveness-tcpsocket -n dev
    NAME                     READY   STATUS    RESTARTS   AGE
    pod-liveness-tcpsocket   1/1     Running   0          18s

The pod no longer restarts, so the probe is passing.

Method 3: HTTPGet

Create pod-liveness-httpget.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-liveness-httpget
      namespace: dev
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
        livenessProbe:
          httpGet: # effectively requests http://<pod IP>:80/hello
            scheme: HTTP # supported protocols: HTTP or HTTPS
            port: 80
            path: /hello # URI path

Apply the manifest

    [root@master ~]# vim pod-liveness-httpget.yaml
    [root@master ~]# kubectl create -f pod-liveness-httpget.yaml
    pod/pod-liveness-httpget created
    [root@master ~]# kubectl get pod pod-liveness-httpget -n dev
    NAME                   READY   STATUS    RESTARTS   AGE
    pod-liveness-httpget   1/1     Running   1          75s
    [root@master ~]# kubectl describe pod pod-liveness-httpget -n dev
    Events:
      Type     Reason     Age                From               Message
      ----     ------     ----               ----               -------
      Normal   Scheduled  <unknown>          default-scheduler  Successfully assigned dev/pod-liveness-httpget to node2
      Normal   Pulled     18s (x3 over 74s)  kubelet, node2     Container image "nginx:1.17.1" already present on machine
      Normal   Created    18s (x3 over 74s)  kubelet, node2     Created container main-container
      Normal   Killing    18s (x2 over 48s)  kubelet, node2     Container main-container failed liveness probe, will be restarted
      Normal   Started    17s (x3 over 73s)  kubelet, node2     Started container main-container
      Warning  Unhealthy  8s (x7 over 68s)   kubelet, node2     Liveness probe failed: HTTP probe failed with statuscode: 404

The pod keeps restarting, and the event details show that the requested URL was not found (HTTP 404).

Modify the manifest

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-liveness-httpget
      namespace: dev
    spec:
      containers:
      - name: main-container
        image: nginx:1.17.1
        ports:
        - name: nginx-port
          containerPort: 80
        livenessProbe:
          httpGet: # effectively requests http://<pod IP>:80/
            scheme: HTTP # supported protocols: HTTP or HTTPS
            port: 80
            path: / # URI path

Apply the manifest again

    [root@master ~]# kubectl delete -f pod-liveness-httpget.yaml
    pod "pod-liveness-httpget" deleted
    [root@master ~]# kubectl create -f pod-liveness-httpget.yaml
    pod/pod-liveness-httpget created
    [root@master ~]# kubectl get pod pod-liveness-httpget -n dev
    NAME                   READY   STATUS    RESTARTS   AGE
    pod-liveness-httpget   1/1     Running   0          24s
    [root@master ~]# kubectl describe pod pod-liveness-httpget -n dev
    Events:
      Type    Reason     Age        From               Message
      ----    ------     ----       ----               -------
      Normal  Scheduled  <unknown>  default-scheduler  Successfully assigned dev/pod-liveness-httpget to node2
      Normal  Pulled     27s        kubelet, node2     Container image "nginx:1.17.1" already present on machine
      Normal  Created    27s        kubelet, node2     Created container main-container
      Normal  Started    27s        kubelet, node2     Started container main-container

There are no failed-probe events, so the configuration works.

Other settings

So far, liveness probes have been used to demonstrate the three probing methods. Looking at the sub-fields of livenessProbe, however, shows several other settings besides these three methods, which are covered together here.

    [root@master ~]# kubectl explain pod.spec.containers.livenessProbe
    KIND:     Pod
    VERSION:  v1

    RESOURCE: livenessProbe <Object>

    DESCRIPTION:
         Periodic probe of container liveness. Container will be restarted if the
         probe fails. Cannot be updated. More info:
         https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probes

         Probe describes a health check to be performed against a container to
         determine whether it is alive or ready to receive traffic.

    FIELDS:
       exec <Object>
         One and only one of the following should be specified. Exec specifies the
         action to take.

       failureThreshold <integer>
         Minimum consecutive failures for the probe to be considered failed after
         having succeeded. Defaults to 3. Minimum value is 1.

       httpGet <Object>
         HTTPGet specifies the http request to perform.

       initialDelaySeconds <integer>
         Number of seconds after the container has started before liveness probes
         are initiated. More info:
         https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probes

       periodSeconds <integer>
         How often (in seconds) to perform the probe. Default to 10 seconds. Minimum
         value is 1.

       successThreshold <integer>
         Minimum consecutive successes for the probe to be considered successful
         after having failed. Defaults to 1. Must be 1 for liveness and startup.
         Minimum value is 1.

       tcpSocket <Object>
         TCPSocket specifies an action involving a TCP port. TCP hooks not yet
         supported

       timeoutSeconds <integer>
         Number of seconds after which the probe times out. Defaults to 1 second.
         Minimum value is 1. More info:
         https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probes
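
Put together, the tuning fields listed in this output could be used as in the sketch below. The pod name is hypothetical and the numbers are arbitrary values picked for illustration, not recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-liveness-tuned       # hypothetical name
  namespace: dev
spec:
  containers:
  - name: main-container
    image: nginx:1.17.1
    ports:
    - name: nginx-port
      containerPort: 80
    livenessProbe:
      httpGet:
        scheme: HTTP
        port: 80
        path: /
      initialDelaySeconds: 30    # wait 30s after the container starts before the first probe
      periodSeconds: 10          # probe every 10s (the default)
      timeoutSeconds: 5          # a probe taking longer than 5s counts as a failure
      failureThreshold: 3        # restart after 3 consecutive failures (the default)
      successThreshold: 1        # must be 1 for liveness probes
```

With values like these, an unresponsive container is tolerated for a time on the order of failureThreshold × periodSeconds (about 30 seconds here) before the kubelet restarts it.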