Dissecting the Unreal Rendering System (04): The Deferred Rendering Pipeline

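- 4.1 Overview

- 4.2 Deferred Rendering Techniques

- 4.2.1 Introduction to Deferred Rendering

- 4.2.2 The Deferred Rendering Technique

- 4.2.3 Variants of Deferred Rendering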

- 4.2.3.1 Deferred Lighting(Light Pre-Pass)

- 4.2.3.2 Tile-Based Deferred Rendering (TBDR)

- 4.2.3.3 Clustered Deferred Rendering

- 4.2.3.4 Decoupled Deferred Shading

- 4.2.3.5 Visibility Buffer

- 4.2.3.6 Fine Pruned Tiled Light Lists

- 4.2.3.7 Deferred Attribute Interpolation Shading

- 4.2.3.8 Deferred Coarse Pixel Shading

- 4.2.3.9 Deferred Adaptive Compute Shading

- 4.2.4 Forward Rendering and Its Variants

- 4.2.5 Summary of Render Paths

- 4.3 The Deferred Shading Scene Renderer

- 4.4 Summary

- Special Notes

- References

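4.1 Overview

4.1.1 Overview

This article explains how UE's FDeferredShadingSceneRenderer is implemented and walks through the passes it runs. At its core is a key rendering technique, Deferred Shading, a render path whose steps and design differ from those of forward rendering.

Before turning to FDeferredShadingSceneRenderer, the article first covers deferred rendering, forward rendering, and their variants, which makes UE's implementation easier to approach. Specifically, it covers the following: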

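- Render paths (Render Path)

  - Forward rendering and its variants

  - Deferred rendering and its variants

- FDeferredShadingSceneRenderer (the deferred shading scene renderer)

  - InitViews

  - PrePass

  - BasePass

  - LightingPass

  - TranslucentPass

  - Postprocess

- Optimizations and the future of deferred rendering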

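4.1.2 Basic Concepts

The table below lists some of the basic rendering concepts used in this article:

| Concept | Abbreviation | Description |
|---|---|---|
| Render Target | RT | A texture buffer in GPU video memory that can be used as the target of color writes and blending. Also called a render texture. |
| Geometry Buffer | GBuffer | A special kind of Render Target that stores the geometric data used by deferred shading. |
| Rendering Path | - | The steps and techniques used to render scene objects to render targets. |
| Forward Shading | - | A ubiquitous, natively supported render path, simpler and more convenient than the deferred shading path. |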

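4.2 Deferred Rendering Techniques

4.2.1 Introduction to Deferred Rendering

Deferred Rendering (also called Deferred Shading) was first proposed by Michael Deering in the 1988 paper The triangle processor and normal vector shader: a VLSI system for high performance graphics. It is a screen-space shading technique and one of the most widely used render paths (Rendering Path). Its counterpart is forward rendering (Forward Shading).

Its core idea is to split scene drawing into two passes, a geometry pass and a lighting pass. Deferring the expensive lighting pass means lighting cost no longer scales with the product of object count and light count, which improves shading efficiency. Today's mainstream renderers and commercial engines support it broadly and thoroughly.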

4.2.2 The Deferred Rendering Technique

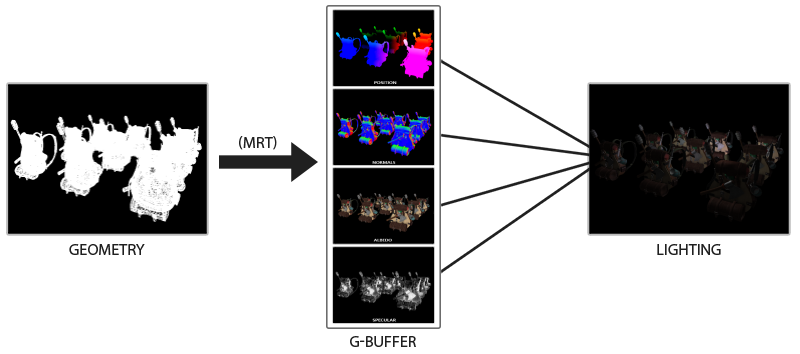

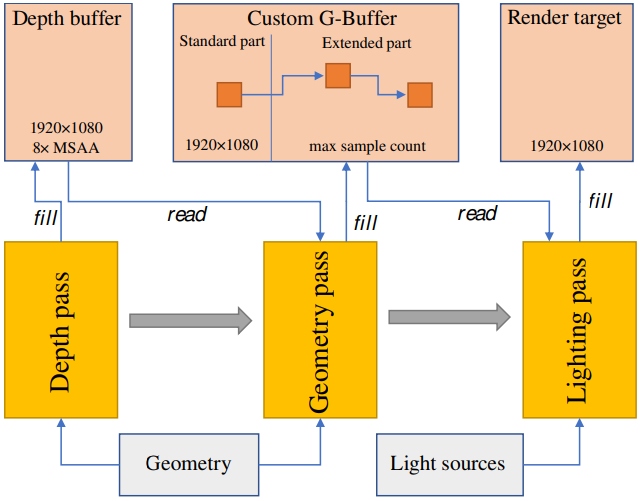

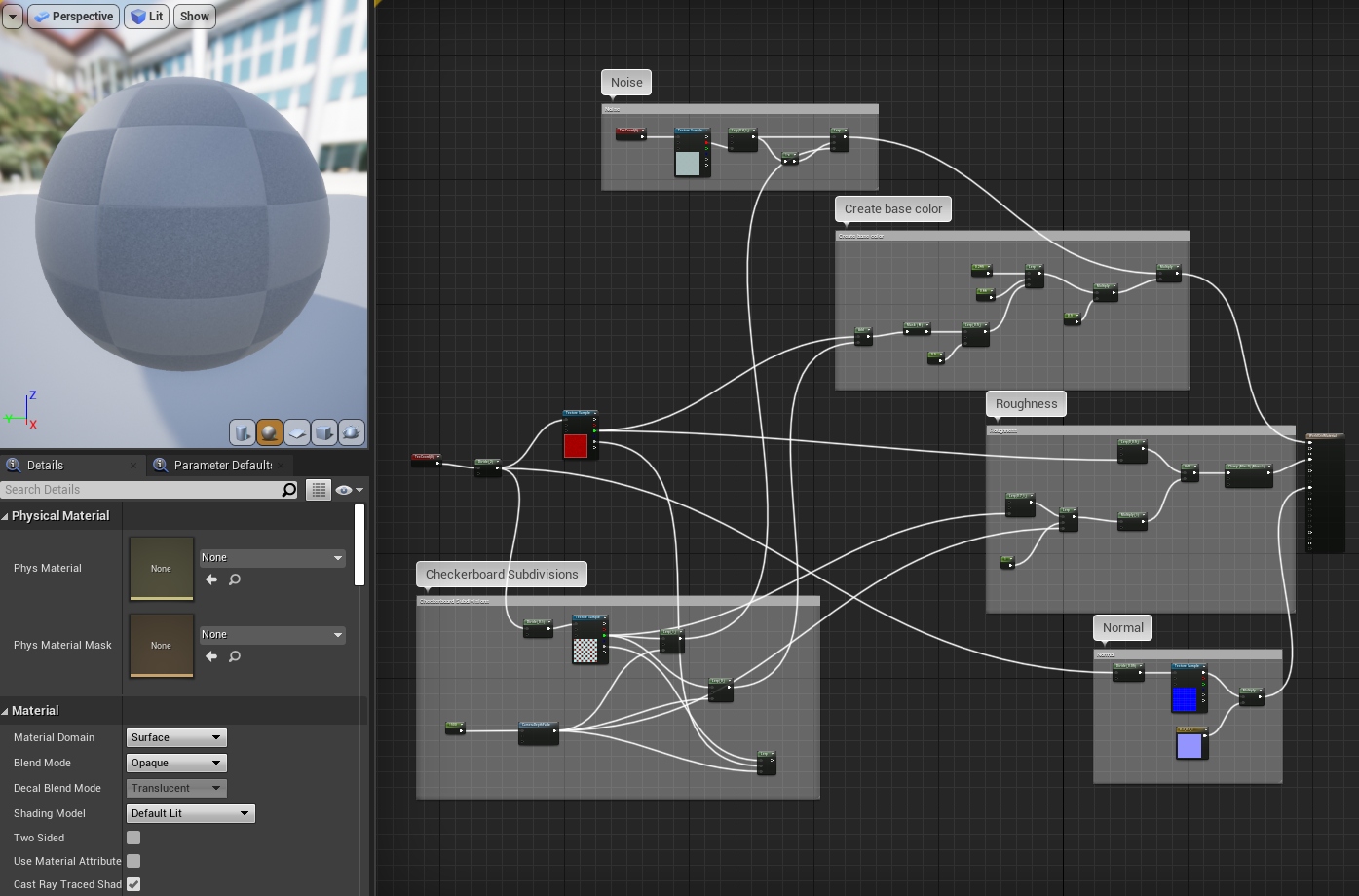

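Unlike forward rendering, deferred rendering has two main passes: the geometry pass (Geometry Pass, called Base Pass in UE) and the lighting pass (Lighting Pass). The process is shown in the figure below:

Deferred rendering has two passes: the first, the geometry pass, rasterizes the scene's object information into multiple GBuffers; the second, the lighting pass, uses the geometric information in the GBuffer to compute the lighting result for each pixel.

What each pass does:

- Geometry pass

This pass renders all opaque (Opaque) and Masked objects in the scene with unlit (Unlit) materials and writes their geometric information into the corresponding render targets (Render Target).

The geometric information includes:

1. Position (or depth, since position can be reconstructed from depth plus the screen-space uv).

2. Normal.

3. Material parameters (Diffuse Color, Emissive Color, Specular Scale, Specular Power, AO, Roughness, Shading Model, …).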
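The remark that position can be rebuilt from depth and the screen-space uv can be sketched as follows. This is a minimal illustration, not UE's code: it assumes a symmetric perspective projection, a linear view-space depth, a camera looking down +Z, and uv in [0,1] with v growing downward. `ReconstructViewPosition` and its parameters are hypothetical names.

```cpp
struct Vec3 { float x, y, z; };

// Rebuild a view-space position from a screen uv in [0,1]^2 and a linear
// view-space depth. Assumes a symmetric perspective projection whose camera
// looks down +Z (a convention chosen only for this sketch).
Vec3 ReconstructViewPosition(float u, float v, float linearDepth,
                             float tanHalfFovY, float aspect)
{
    // Map uv to normalized device coordinates in [-1, 1].
    float ndcX = u * 2.0f - 1.0f;
    float ndcY = 1.0f - v * 2.0f; // v grows downward on screen
    // Scale the NDC offsets by the frustum extent at the given depth.
    return { ndcX * tanHalfFovY * aspect * linearDepth,
             ndcY * tanHalfFovY * linearDepth,
             linearDepth };
}
```

Storing depth alone therefore saves the three channels a full position target would need, at the price of a few multiplications per pixel in the lighting pass.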

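Pseudocode for the geometry pass:

    void RenderBasePass()
    {
        SetupGBuffer(); // Set up the geometry buffers.

        // Iterate over the non-translucent objects in the scene.
        for each(Object in OpaqueAndMaskedObjectsInScene)
        {
            SetUnlitMaterial(Object);    // Bind the unlit material.
            DrawObjectToGBuffer(Object); // Write the object's geometric data to the GBuffer, normally via GPU rasterization.
        }
    }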

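After this pass, the GBuffer holds the data of these opaque objects, such as color, normal, depth, and AO (figure below):

The GBuffer data obtained after the geometry pass. Top left: position; top right: normal; bottom left: color (base color); bottom right: specular.

Note that the geometry pass performs no lighting. Lighting is later evaluated only for the surfaces that remain visible in the GBuffer, so occluded fragments are never lit and the redundant lighting work caused by overdraw (Overdraw) is avoided.

- Lighting pass

In the lighting pass, the GBuffer data produced by the geometry pass drives the lighting computation. The core logic iterates over as many pixels as the render resolution has, samples the GBuffer at each pixel's UV coordinate to obtain that pixel's geometric information, and computes lighting from it. Pseudocode: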

    void RenderLightingPass()
    {
        BindGBuffer();       // Bind the geometry buffers.
        SetupRenderTarget(); // Set up the render target.

        // Iterate over every pixel of the RT.
        for each(pixel in RenderTargetPixels)
        {
            // Fetch the GBuffer data.
            pixelData = GetPixelDataFromGBuffer(pixel.uv);

            // Reset the accumulated color.
            color = 0;

            // Iterate over all lights and accumulate each light's contribution.
            for each(light in Lights)
            {
                color += CalculateLightColor(light, pixelData);
            }

            // Write the color to the RT.
            WriteColorToRenderTarget(color);
        }
    }

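CalculateLightColor can use a shading or reflectance model such as Gouraud, Lambert, Phong, Blinn-Phong, BRDF, BTDF, or BSSRDF.

- Pros and cons of deferred rendering

Because the most expensive part, lighting, is deferred to a screen-space pass, its cost is decoupled from the number of objects in the scene and depends only on the light count and the Render Target size: the complexity is \(O(N_{light} \times W_{RT} \times H_{RT})\). Deferred rendering therefore copes well with complex scenes that contain many lights and often brings a large performance gain.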
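As a rough worked example with assumed numbers (a 1920×1080 render target and 100 lights, neither taken from the original), the lighting pass evaluates

\[
N_{light} \times W_{RT} \times H_{RT} = 100 \times 1920 \times 1080 = 207{,}360{,}000
\]

light contributions per frame, and this count stays the same whether the scene contains ten objects or ten thousand.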

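It also has drawbacks: an extra pass to draw the geometric information, a dependency on multiple render targets (MRT), higher CPU and GPU memory usage, higher bandwidth requirements, a limited range of material types, difficulty using hardware anti-aliasing such as MSAA, a somewhat blurry image, and so on. For simple scenes it may even bring no rendering performance gain at all.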

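4.2.3 Variants of Deferred Rendering

Deferred rendering can be optimized in different ways for different platforms and APIs, which has produced many variants. Some of them are described below: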

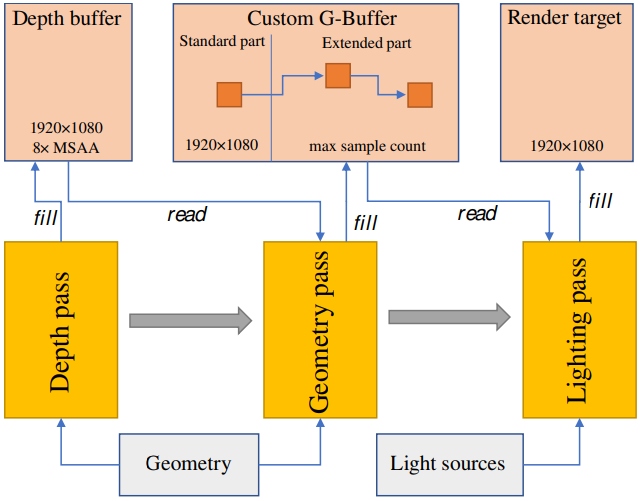

4.2.3.1 Deferred Lighting(Light Pre-Pass)

Deferred Lighting(延遲光照)又被稱為Light Pre-Pass,它和Deferred Shading的不同在於需要三個Pass:

1、第一個Pass叫Geometry Pass:只輸出每個像素光照計算所需的幾何屬性(法線、深度)到GBuffer中。

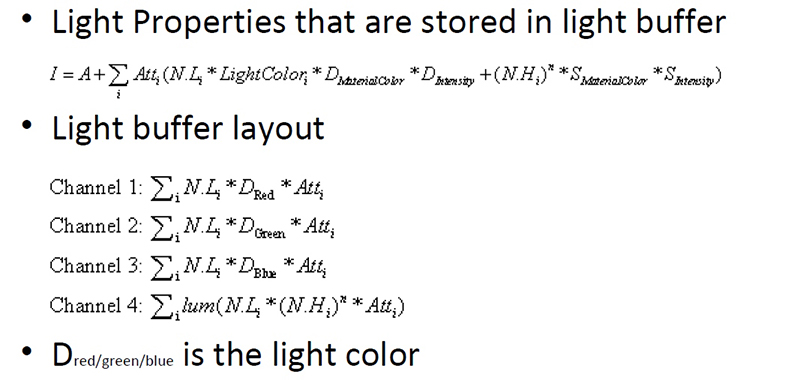

2、第二個Pass叫Lighting Pass:存儲光源屬性(如Normal*LightDir、LightColor、Specular)到LBuffer(Light Buffer,光源緩衝區)。

光源緩衝區存儲的光源數據和布局。

3、第三個Pass叫Secondary Geometry Pass:獲取GBuffer和LBuffer的數據,重建每個像素計算光照所需的數據,執行光照計算。

與Deferred Shading相比,Deferred lighting的優勢在於G-Buffer所需的尺寸急劇減少,允許更多的材質類型呈現,較好第支援MSAA等。劣勢是需要繪製場景兩次,增加了Draw Call。

另外,Deferred lighting還有個優化版本,做法與上面所述略有不同,具體參見文獻Light Pre-Pass。

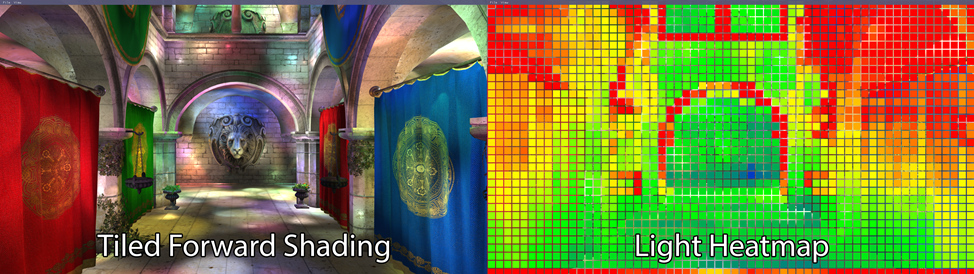

4.2.3.2 Tiled-Based Deferred Rendering(TBDR)

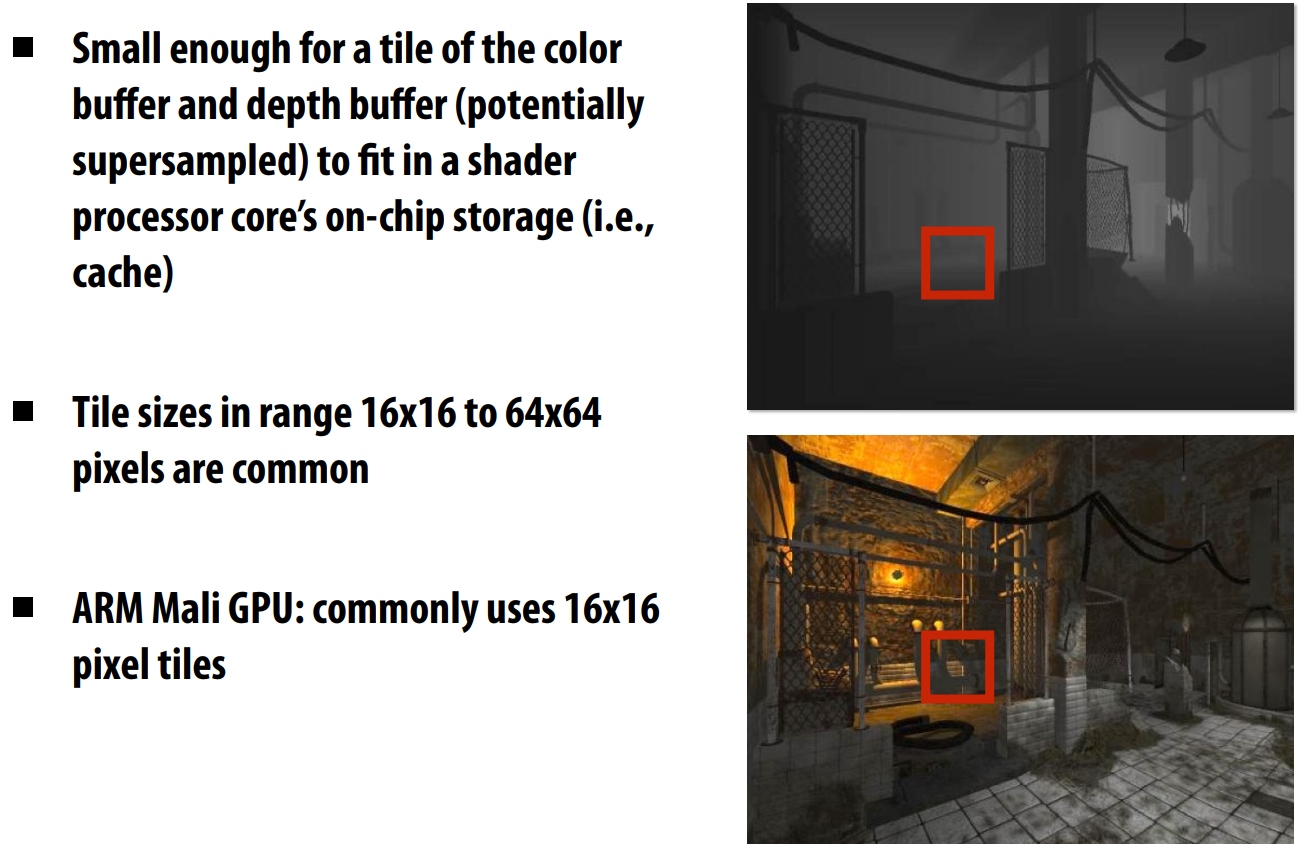

Tiled-Based Deferred Rendering譯名是基於瓦片的渲染,簡稱TBDR,它的核心思想在於將渲染紋理分成規則的一個個四邊形(稱為Tile),然後利用四邊形的包圍盒剔除該Tile內無用的光源,只保留有作用的光源列表,從而減少了實際光照計算中的無效光源的計算量。

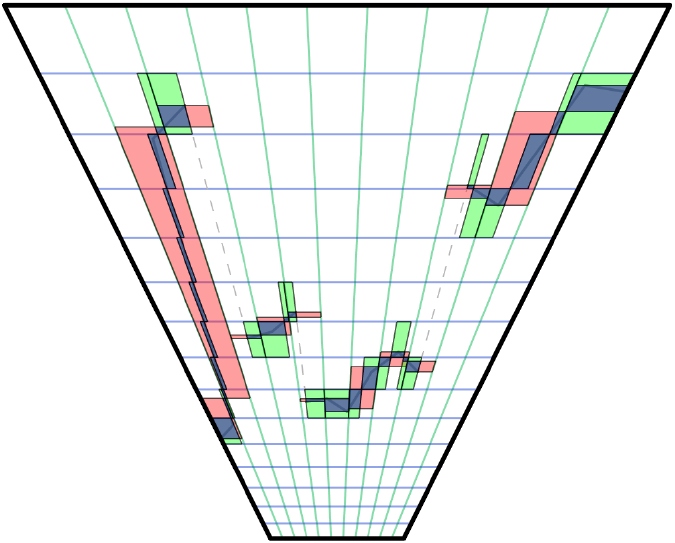

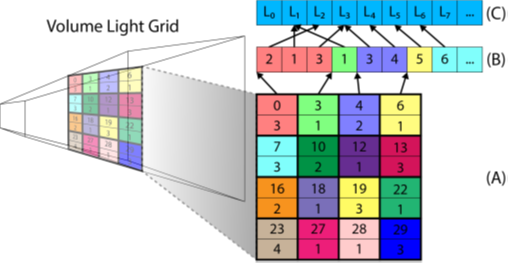

它的實現示意圖及具體的步驟如下:

1、將渲染紋理分成一個個均等面積的小塊(Tile)。參見上圖(b)。

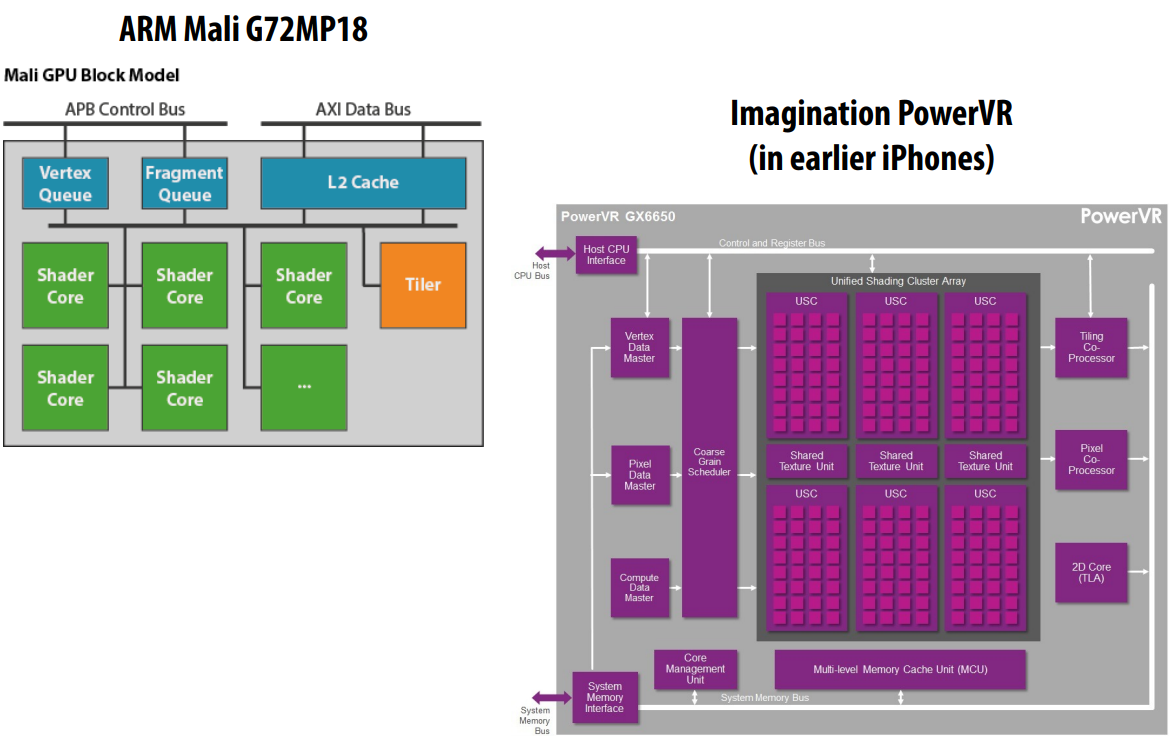

Tile沒有統一的固定大小,在不同的平台架構和渲染器中有所不同,不過一般是2的N次方,且長寬不一定相等,可以是16×16、32×32、64×64等等,不宜太小或太大,否則優化效果不明顯。PowerVR GPU通常取32×32,而ARM Mali GPU取16×16。

2、根據Tile內的Depth範圍計算出其Bounding Box。

TBDR中的每個Tile內的深度範圍可能不一樣,由此可得到不同大小的Bounding Box。

3、根據Tile的Bounding Box和Light的Bounding Box,執行求交。

除了無衰減的方向光,其它類型的光源都可以根據位置和衰減計算得到其Bounding Box。

4、摒棄不相交的Light,得到對Tile有作用的Light列表。參見上圖(c)。

5、遍歷所有Tile,獲取每個Tile的有作業的光源索引列表,計算該Tile內所有像素的光照結果。
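Steps 3 and 4 above can be sketched on the CPU as follows. This is a simplified illustration under stated assumptions: each local light is approximated by a bounding sphere, each Tile already has its AABB (step 2), and the names `Aabb`, `Light`, `Intersects`, and `CullLights` are hypothetical. A real implementation would typically run per Tile in a compute shader.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Aabb  { float min[3], max[3]; };   // tile bounds built from its depth range
struct Light { float pos[3], radius; };   // local light approximated by a sphere

// Sphere-vs-AABB overlap: clamp the sphere centre to the box and compare the
// squared distance to the squared radius.
bool Intersects(const Aabb& box, const Light& l)
{
    float d2 = 0.0f;
    for (int i = 0; i < 3; ++i) {
        float c = std::min(std::max(l.pos[i], box.min[i]), box.max[i]);
        d2 += (l.pos[i] - c) * (l.pos[i] - c);
    }
    return d2 <= l.radius * l.radius;
}

// Returns, per tile, the indices of the lights that can affect it.
std::vector<std::vector<int>> CullLights(const std::vector<Aabb>& tiles,
                                         const std::vector<Light>& lights)
{
    std::vector<std::vector<int>> lists(tiles.size());
    for (std::size_t t = 0; t < tiles.size(); ++t)
        for (std::size_t i = 0; i < lights.size(); ++i)
            if (Intersects(tiles[t], lights[i]))
                lists[t].push_back(static_cast<int>(i));
    return lists;
}
```

The lighting loop of step 5 then walks only `lists[t]` for the pixels of Tile `t` instead of every light in the scene.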

由於TBDR可以摒棄很多無作用的光源,能夠避免很多無效的光照計算,目前已被廣泛採用與運動端GPU架構中,形成了基於硬體加速的TBDR:

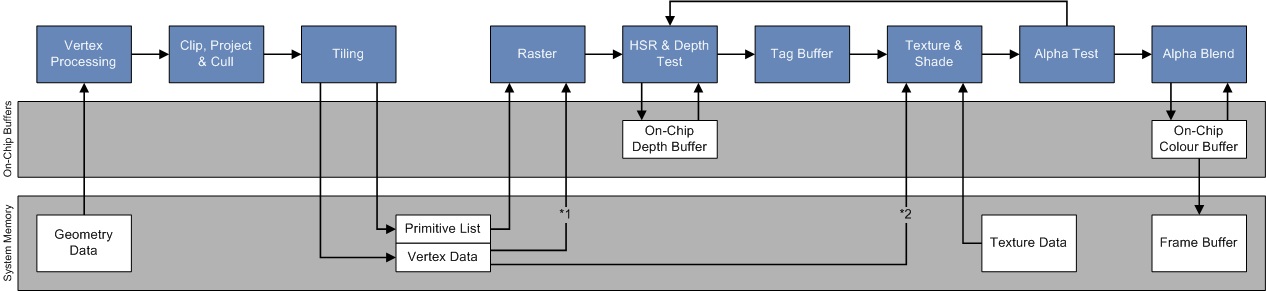

PowerVR的TBDR架構,和立即模式的架構相比,在裁剪之後光柵化之前增加了Tiling階段,增加了On-Chip Depth Buffer和Color Buffer,以更快地存取深度和顏色。

下圖是PowerVR Rogue家族的Series7XT系列GPU和的硬體架構示意圖:

4.2.3.3 Clustered Deferred Rendering

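Clustered Deferred Rendering differs from TBDR in that it subdivides depth at a finer granularity, avoiding the drop in light-culling efficiency that TBDR suffers when a Tile's depth range contains a large jump (with no valid pixels in between).

Its core idea is to split depth into a number of slices in some way, so that each cluster's bounding box is computed more precisely and lights are culled more accurately, avoiding the loss of culling efficiency at depth discontinuities.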
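One possible way to split depth is exponential slicing, sketched below. The text only says depth is subdivided "in some way", so the slicing scheme, the function name `DepthToSlice`, and its parameters are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cmath>

// Map a view-space depth to a cluster slice using exponential slicing, so
// near slices are thin and far slices are thick. The slicing scheme is an
// assumption of this sketch, not something the original prescribes.
int DepthToSlice(float z, float zNear, float zFar, int numSlices)
{
    float t = std::log(z / zNear) / std::log(zFar / zNear); // 0 at near, 1 at far
    int slice = static_cast<int>(std::floor(t * numSlices));
    return std::min(std::max(slice, 0), numSlices - 1);     // clamp to valid range
}
```

A pixel's cluster is then identified by its Tile coordinates plus this slice index, and lights are culled per cluster rather than per Tile.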

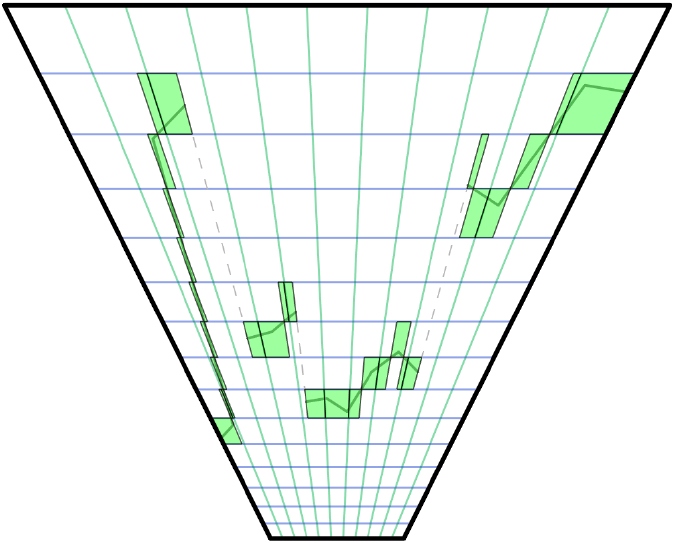

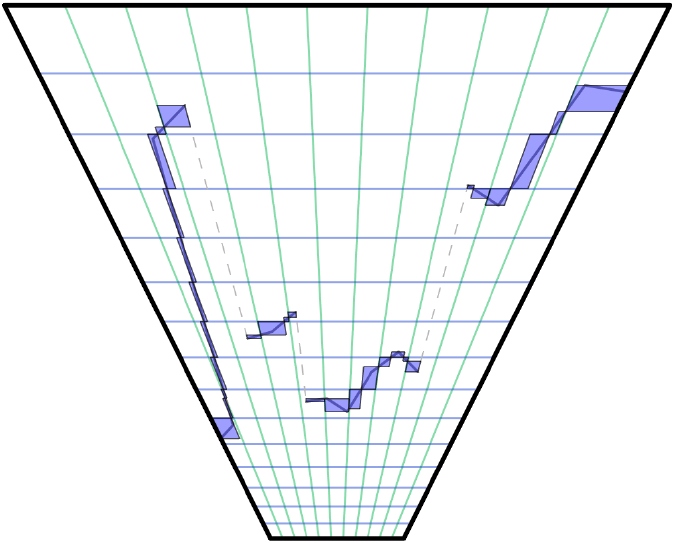

上圖的分簇方法被稱為隱式(Implicit)分簇法,實際上存在顯式(Explicit)分簇法,可以進一步精確深度細分,以實際的深度範圍計算每個族的包圍盒:

顯式(Explicit)的深度分簇更加精確地定位到每簇的包圍盒。

下圖是Tiled、Implicit、Explicit深度劃分法的對比圖:

紅色是Tiled分塊法,綠色是Implicit分簇法,藍色是Explicit分簇法。可知,Explicit分簇得到的包圍盒更精準,包圍盒更小,從而提升光源裁剪效率。

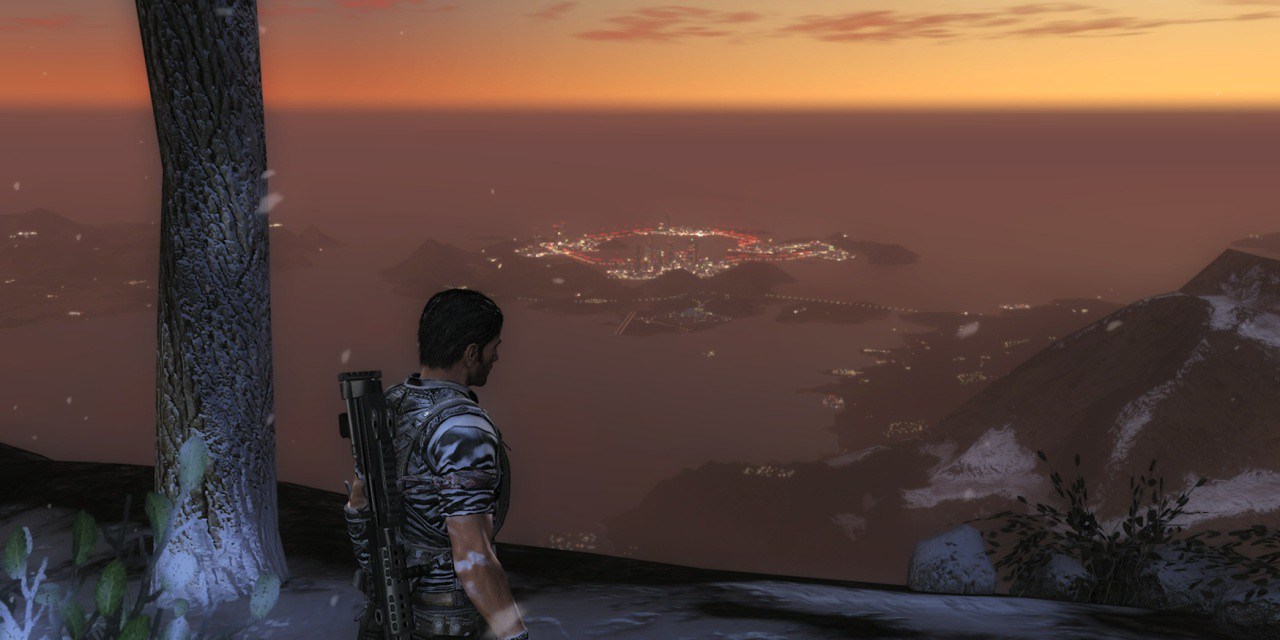

有了Clustered Deferred大法,媽媽再也不用擔心電腦在渲染如下畫面時卡頓和掉幀了O_O:

4.2.3.4 Decoupled Deferred Shading

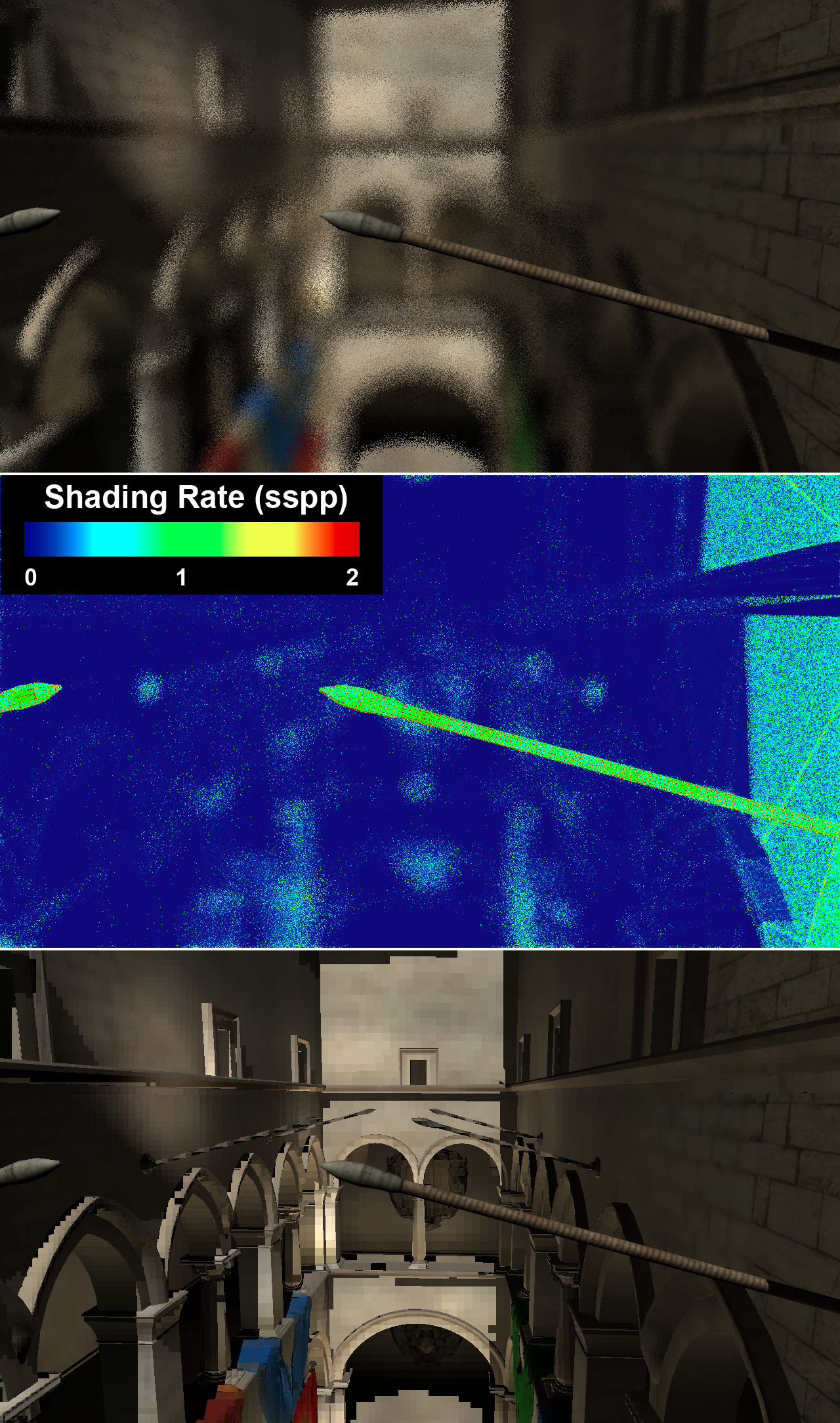

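Decoupled Deferred Shading is an optimized deferred rendering technique, first proposed by Gabor Liktor et al. in the paper Decoupled deferred shading for hardware rasterization. Its core idea is to add a compact geometry buffer that stores shading samples independently of visibility and, through a memoization cache (figure below), to cut computation as far as possible, raise shading reuse under stochastic rasterization, and lower the cost of AA. This improves shading efficiency and addresses the difficulty of using MSAA with deferred rendering.

Left: how the projection of a surface point on screen changes over time. Assuming t2-t0 is small enough, the projections land on the same point, so the earlier shading result can be reused. Right: how Decoupled Deferred Shading reuses earlier shading results through the memoization cache. Object coordinates are mapped to screen space; if the uv is the same, the result is fetched from the memoization cache instead of being computed by a fresh lighting evaluation.

Results with Decoupled Deferred Shading. Top: depth of field rendered with 4x supersampling; middle: shading rate per pixel (SSPP); bottom: the scene as captured from the camera.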

4.2.3.5 Visibility Buffer

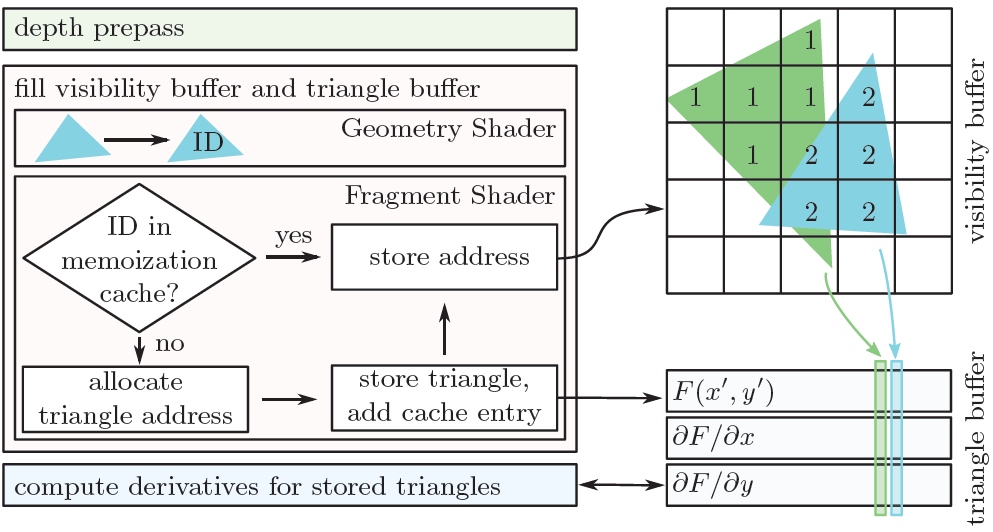

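Visibility Buffer is very similar to Deferred Texturing and is a bolder refinement of Deferred Lighting. The core idea: to shrink the GBuffer footprint (a large GBuffer means high bandwidth and high power cost), render a Visibility Buffer instead of a GBuffer. The Visibility Buffer stores only triangle and instance ids. With these, the shading stage reads the vertex attributes and texture data it actually needs from UAVs and bindless textures, and computes the mip-map level itself from the uv derivatives (figure below).

Comparison of the GBuffer and Visibility Buffer pipelines. In the latter, a Visibility stage replaces the former's Geometry Pass. This stage records only triangle and instance ids, which can be packed into a 4-byte buffer entry, greatly reducing video memory usage.

Although this method reduces buffer usage, it requires bindless texture support and is unfriendly to the GPU cache (triangle and instance ids jump between neighboring pixels, which hurts the cache's spatial locality).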
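Packing the two ids into 4 bytes can be sketched as below. The 8/24 bit split between instance id and triangle id is a hypothetical choice for illustration; the original only states that both ids fit in a 4-byte entry.

```cpp
#include <cstdint>

// Hypothetical split: 8 bits of instance id, 24 bits of triangle id.
constexpr uint32_t kTriangleBits = 24;
constexpr uint32_t kTriangleMask = (1u << kTriangleBits) - 1u;

// Pack both ids into one 32-bit visibility buffer entry.
uint32_t PackVisibility(uint32_t instanceId, uint32_t triangleId)
{
    return (instanceId << kTriangleBits) | (triangleId & kTriangleMask);
}

// Recover the ids in the shading stage.
uint32_t UnpackInstance(uint32_t packed) { return packed >> kTriangleBits; }
uint32_t UnpackTriangle(uint32_t packed) { return packed & kTriangleMask; }
```

With this layout a 1920×1080 visibility buffer costs 4 bytes per pixel, whatever the number of material attributes.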

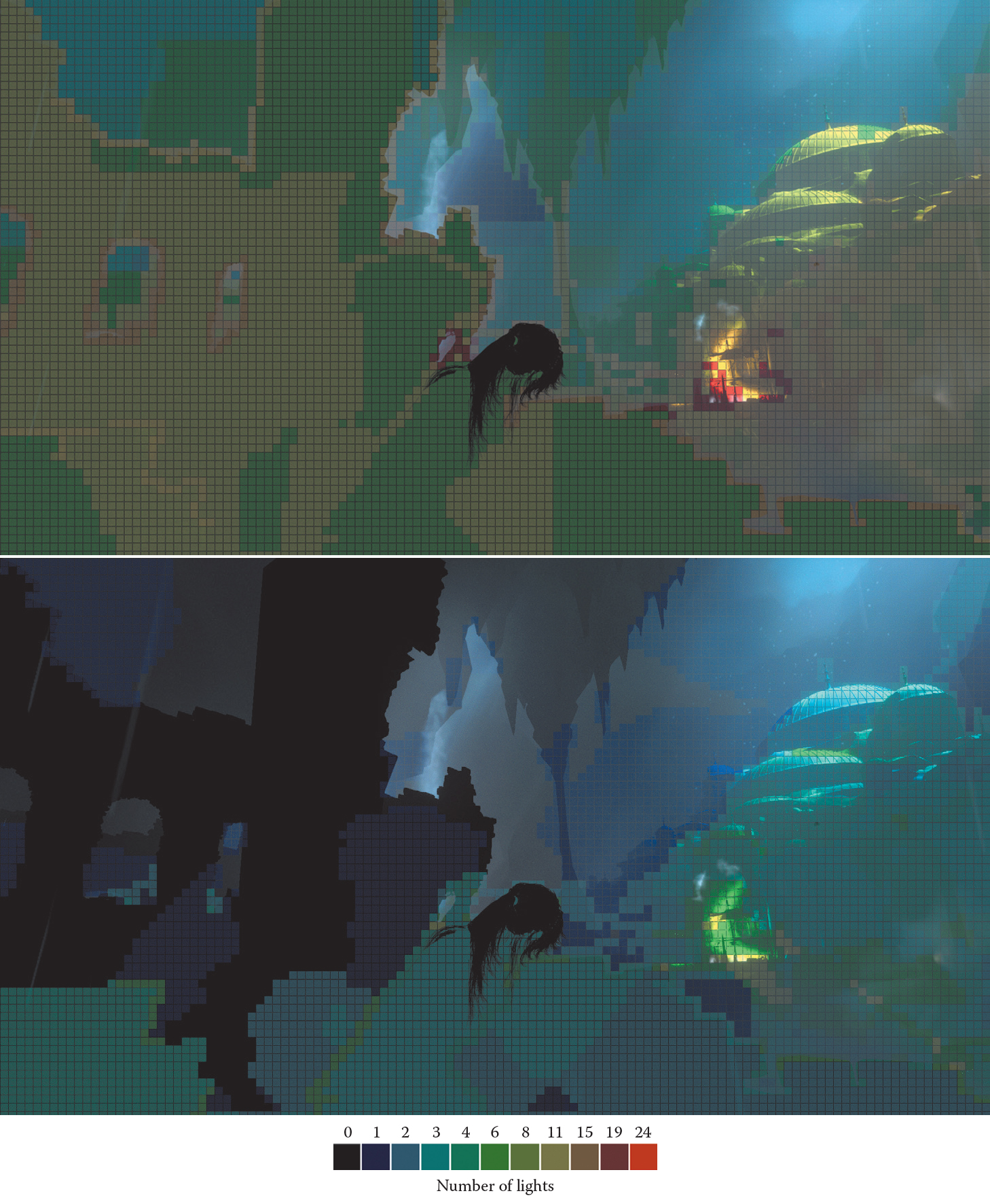

4.2.3.6 Fine Pruned Tiled Light Lists

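Fine Pruned Tiled Light Lists is an optimized form of Tiled Shading. Unlike conventional tiled rendering, it uses two passes to intersect objects and lights:

The first pass computes each light's screen-space AABB and tests it coarsely against the objects' AABBs;

The second pass tests each Tile precisely against the light's true shape (rather than its AABB).

How Fine Pruned Tiled Light Lists culls lights. The upper image shows the result of coarse culling, the lower image the result of precise culling. Coarse culling generally keeps more lights than precise culling, but it is fast. Precise culling then runs a more accurate intersection test on that result, discarding further non-intersecting lights so that far fewer lights reach the shading computation.

This enables Tiled Shading with irregularly shaped lights and can be implemented with Compute Shaders. It has been used in the console game Rise of the Tomb Raider.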

4.2.3.7 Deferred Attribute Interpolation Shading

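Like Visibility Buffer, Deferred Attribute Interpolation Shading addresses the high memory cost of a conventional GBuffer. Instead of storing GBuffer data, it stores triangle data and recomputes attributes by interpolation, reducing the memory cost of deferred rendering. It can also use a Visibility Buffer to discard redundant triangle data and reduce video memory usage further.

The Deferred Attribute Interpolation Shading algorithm. The first pass generates the Visibility Buffer. The second pass uses visibility to ensure that each pixel writes the data of only one triangle into the memoization cache, a buffer that maps triangle ids to actual addresses. The final pass computes the screen-space partial derivatives of each triangle for use in attribute interpolation.

This method can also compute low-frequency lighting such as GI separately to reduce shading cost, and it works well with MSAA.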

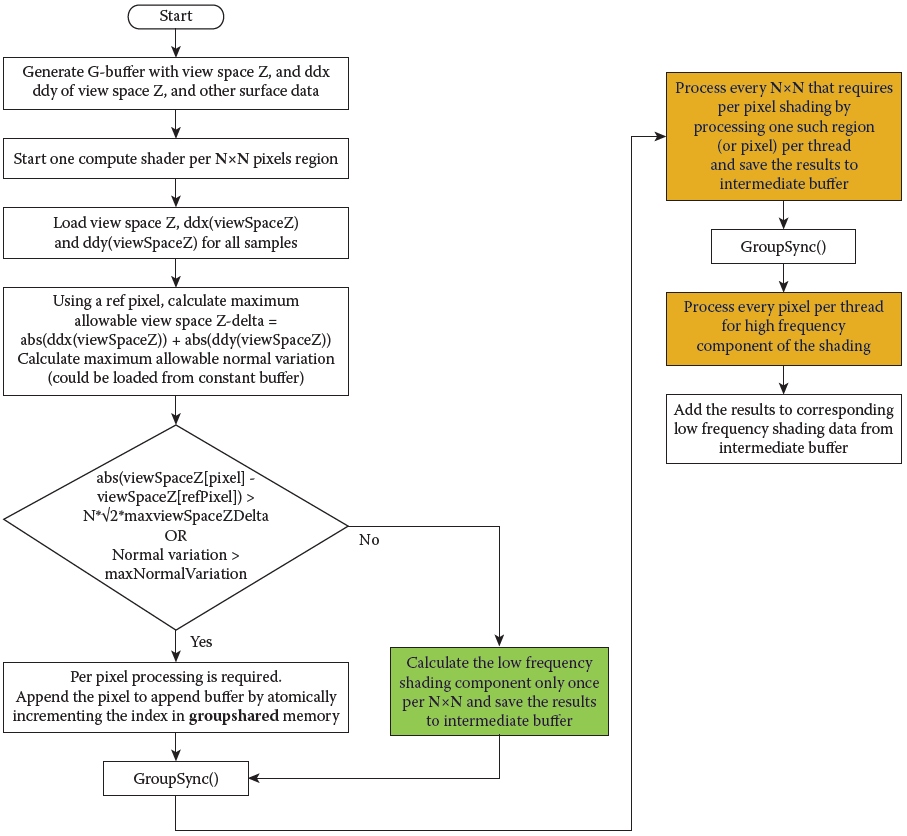

4.2.3.8 Deferred Coarse Pixel Shading

Deferred Coarse Pixel Shading的提出是為了解決傳統延遲渲染在螢幕空間的光照計算階段的高消耗問題,在生成GBuffer時,額外生成ddx和ddy,再利用Compute Shader對某個大小的分塊找到變化不明顯的區域,使用低頻著色(幾個像素共用一次計算,跟可變速率著色相似),從而提升著色效率,降低消耗。

Deferred Coarse Pixel Shading在Compute Shader渲染機制示意圖。關鍵步驟在於計算出ddx和ddy找到變化低的像素,降低著色頻率,提高復用率。

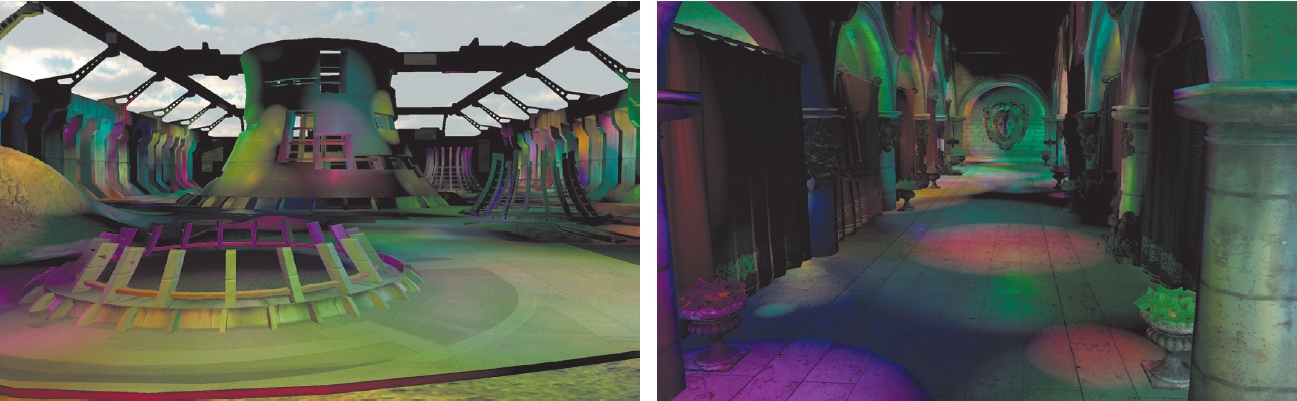

採用這種方法渲染後,在渲染如下兩個場景時,性能均得到較大的提升。

利用Deferred Coarse Pixel Shading渲染的兩個場景。左邊是Power Plant,右邊是Sponza。

具體的數據如下表:

Power Plant場景可以節省50%-60%,Sponza場景則處於25%-37%之間。

這種方法同樣適用於螢幕空間的後處理。

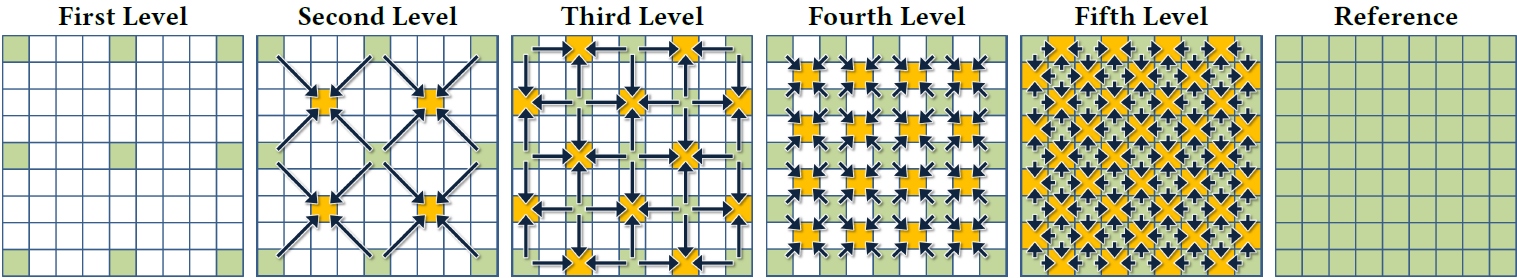

4.2.3.9 Deferred Adaptive Compute Shading

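The core idea of Deferred Adaptive Compute Shading is to divide screen pixels into pixel blocks of 5 Levels of different granularity, in order to decide whether a pixel is interpolated directly from the neighboring Level or shaded anew.

The 5 levels of Deferred Adaptive Compute Shading and how they interpolate. For the pixels newly added at each level (shown in yellow), the local variance of the frame buffer and GBuffer is estimated from the surrounding pixels of the previous level, in order to decide whether to interpolate from neighboring pixels or shade directly.

The algorithm proceeds as follows:

- Level 1: shade 1/16 of the pixels (because 4×4 blocks are used).

- For Levels 2 to 5, shade level by level with the following steps:

  - Compute the similarity (Similarity Criterion) of the 4 neighboring pixels from the previous Level.

  - If the Similarity Criterion is below a threshold, the pixel is considered similar enough to its neighbors and its result is obtained directly by interpolation.

  - Otherwise, the pixel differs too much from its neighbors and is shaded.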
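The per-pixel decision in Levels 2 to 5 can be sketched as follows. Using "max minus min" of the four neighbours as the Similarity Criterion, and a single scalar per pixel, are assumptions of this sketch; the original does not define the criterion, and `TryInterpolate` is a hypothetical name.

```cpp
#include <algorithm>

// Decide how to fill a newly added pixel from its four neighbours of the
// previous level. Returns true and writes the interpolated value when the
// neighbours are similar enough; returns false when the pixel must be shaded.
// "max - min" as the similarity criterion is an assumption of this sketch.
bool TryInterpolate(const float neighbours[4], float threshold, float& outValue)
{
    float lo = *std::min_element(neighbours, neighbours + 4);
    float hi = *std::max_element(neighbours, neighbours + 4);
    if (hi - lo >= threshold)
        return false;   // too different: shade this pixel
    outValue = (neighbours[0] + neighbours[1] + neighbours[2] + neighbours[3]) * 0.25f;
    return true;        // similar: reuse the interpolated value
}
```

Flat regions therefore cost one comparison and an average per pixel, while edges and detailed areas fall back to full shading.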

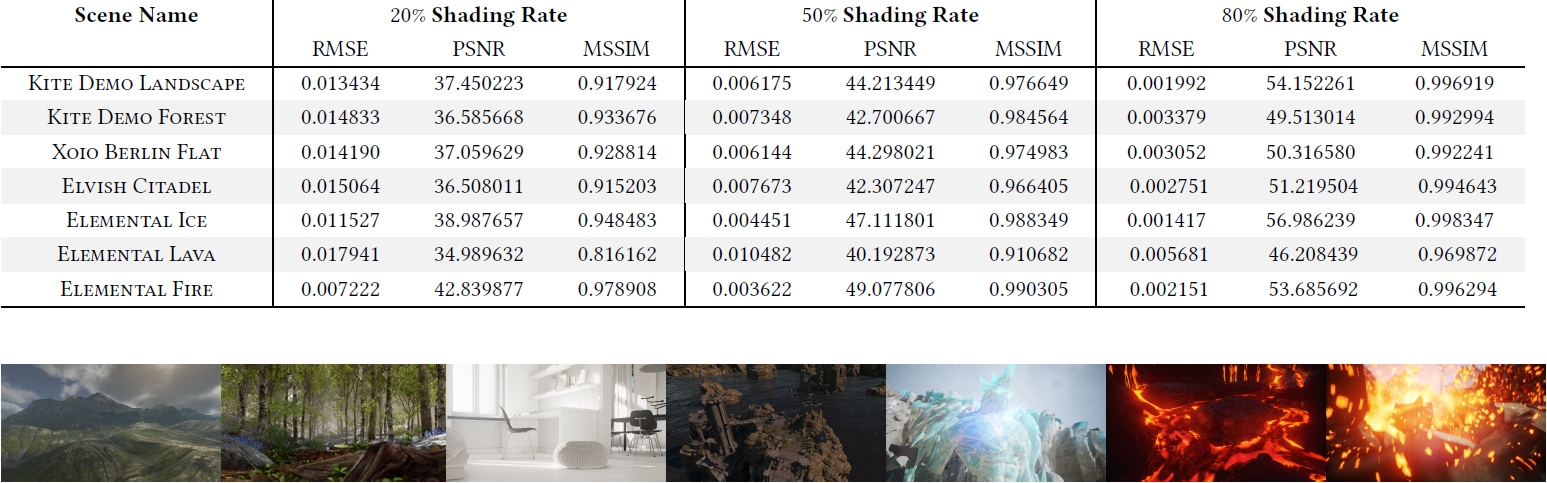

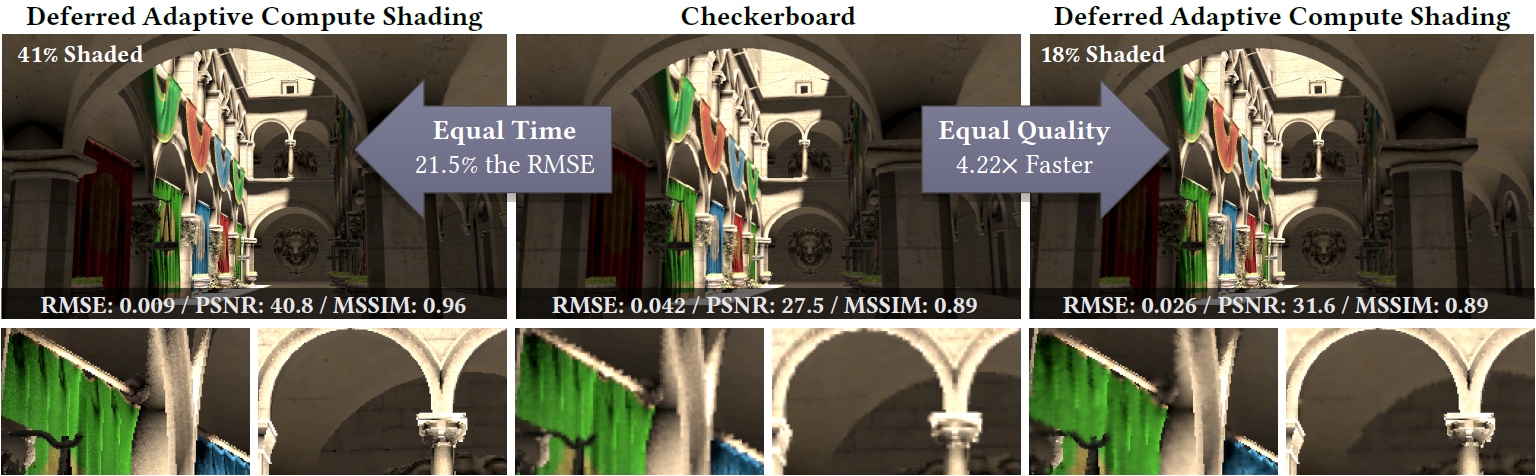

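When rendering various UE4 scenes, this method achieves good scores in root-mean-square error (RMSE), peak signal-to-noise ratio (PSNR), and mean structural similarity (MSSIM) (figure below).

When rendering several UE4 scenes, DACS achieves good image metrics at different shading rates.

Rendering the same scene and frame, compared with Checkerboard shading: in the same time budget, the RMSE of DACS is only 21.5% of Checkerboard's; at the same image quality (MSSIM), DACS is 4.22 times faster.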

4.2.4 Forward Rendering and Its Variants

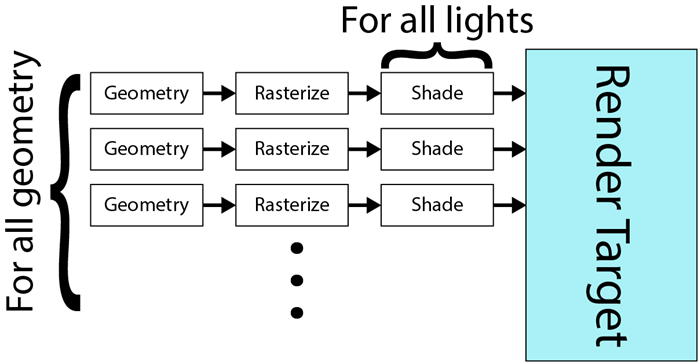

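4.2.4.1 Forward Rendering

Forward rendering (Forward Shading) is the simplest and most widely supported rendering technique. It iterates over every object in the scene, issues one draw call per object, and writes the result to the render target after rasterization by the render pipeline. Pseudocode:

    // Iterate over all objects in the scene.
    for each(object in ObjectsInScene)
    {
        color = 0;

        // Iterate over all lights and accumulate each light's contribution.
        for each(light in Lights)
        {
            color += CalculateLightColor(light, object);
        }

        // Write the color to the RT.
        WriteColorToRenderTarget(color);
    }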

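The rendering process is illustrated below:

Its time complexity is \(O(g\cdot f\cdot l)\), where:

- \(g\) is the number of objects in the scene.

- \(f\) is the number of shaded fragments.

- \(l\) is the number of lights in the scene.

This simple technique has been natively supported by hardware since GPUs first appeared and is very widely used. Its advantages: it is simple to implement, needs neither multiple passes nor MRT support, and works perfectly with MSAA.

It also has drawbacks: it cannot handle large, complex scenes with many lights, it performs much redundant computation, and it suffers from heavy overdraw.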

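4.2.4.2 Variants of Forward Rendering

- Forward+ Rendering

Forward+, also known as Tiled Forward Rendering, adds a light culling stage to raise the number of lights forward rendering can handle. It has 3 passes: depth prepass, light culling pass, shading pass.

The light culling pass resembles tiled deferred rendering: the screen is divided into Tiles, each Tile is intersected with the lights, and the lights that matter are written to the Tile's light list, reducing the number of lights in the shading stage.

Forward+ can produce false positives (False positives) at geometric boundaries because the Tile frustums are elongated; this can be mitigated with the separating axis theorem (SAT).

- Cluster Forward Rendering

Cluster Forward Rendering is similar to Cluster Deferred Rendering: screen space is divided into equal Tiles and depth is subdivided into clusters, so lights are culled at a finer granularity. The algorithm is similar and is not repeated here.

- Volume Tiled Forward Rendering

Volume Tiled Forward Rendering extends Tiled and Clustered forward rendering and aims to raise the number of lights a scene can support. The paper's author reports that scenes with up to 4 million lights can be rendered in real time at 30 FPS.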

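It consists of the following steps:

1. Initialization:

1.1 Compute the size of the Grid (Volume Tile). Given the Tile size \((t_x, t_y)\) and the screen resolution \((w, h)\), the number of screen subdivisions \((S_x, S_y)\) is:

\[
(S_x, S_y) = \left(\left\lceil \frac{w}{t_x} \right\rceil,\ \left\lceil \frac{h}{t_y} \right\rceil\right)
\]

The number of subdivisions along depth is:

\[
S_z = \left\lfloor \frac{\log\left(Z_{far}/Z_{near}\right)}{\log\left(1 + \frac{2\tan(\theta)}{S_y}\right)} \right\rfloor
\]

1.2 Compute the AABB of each Volume Tile. Referring to the figure below, the AABB bounds of the Tile with index \((i, j, k)\) are:

\[
\begin{eqnarray*}
k_{near} &=& Z_{near}\Bigg(1 + \frac{2\tan(\theta)}{S_y} \Bigg)^k \\
k_{far} &=& Z_{near}\Bigg(1 + \frac{2\tan(\theta)}{S_y} \Bigg)^{k+1} \\
p_{min} &=& (S_x\cdot i, \ S_y\cdot j) \\
p_{max} &=& \bigg(S_x\cdot (i+1), \ S_y\cdot (j+1)\bigg)
\end{eqnarray*}
\]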
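The grid sizing of step 1 can be sketched in code as below. This is an illustration only: it assumes \(\theta\) is half the vertical field of view, uses the ceiling and floor forms \(S_x = \lceil w/t_x \rceil\), \(S_y = \lceil h/t_y \rceil\), \(S_z = \lfloor \log(Z_{far}/Z_{near}) / \log(1 + 2\tan\theta/S_y) \rfloor\), and the names `GridDim`, `ComputeGridDim`, and `SliceNear` are hypothetical.

```cpp
#include <cmath>

struct GridDim { int sx, sy, sz; };

// tanHalfFovY is tan(theta); theta is assumed to be half the vertical FOV.
GridDim ComputeGridDim(int w, int h, int tx, int ty,
                       float zNear, float zFar, float tanHalfFovY)
{
    GridDim d;
    d.sx = (w + tx - 1) / tx;   // ceil(w / t_x) in integer arithmetic
    d.sy = (h + ty - 1) / ty;   // ceil(h / t_y)
    float ratio = 1.0f + 2.0f * tanHalfFovY / d.sy;
    d.sz = static_cast<int>(std::floor(std::log(zFar / zNear) / std::log(ratio)));
    return d;
}

// Near plane of depth slice k (k_near); the far plane is SliceNear(k + 1, ...).
float SliceNear(int k, float zNear, float tanHalfFovY, int sy)
{
    return zNear * std::pow(1.0f + 2.0f * tanHalfFovY / sy, static_cast<float>(k));
}
```

For a 1920×1080 target with 64×64 Tiles this gives a 30×17 screen grid, and each successive depth slice is thicker than the previous one by the constant factor \(1 + 2\tan\theta/S_y\).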

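2. Update:

2.1 Depth pre-pass. Records the depth of non-translucent objects only.

2.2 Mark the active Tiles.

2.3 Build and compact the Tile list.

2.4 Assign lights to Tiles. Each thread group processes one active Volume Tile, intersecting the Tile's AABB with every light in the scene (a BVH can reduce the number of tests) and recording the indices of intersecting lights in that Tile's light list (each Tile's light data is the start offset into the light list and the number of lights):

2.5 Shading. This stage does not differ notably from the methods described earlier.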
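The per-Tile light data described in step 2.4, a start offset plus a count into one shared index list, can be sketched as the following data structure. It is built serially on the CPU here for clarity; `TileLightRange`, `LightGrid`, and `BuildLightGrid` are hypothetical names, and a GPU version would typically reserve ranges with atomic counters.

```cpp
#include <cstdint>
#include <vector>

struct TileLightRange { uint32_t offset; uint32_t count; };

struct LightGrid {
    std::vector<TileLightRange> tiles;        // one entry per active volume tile
    std::vector<uint32_t>       lightIndices; // all per-tile lists, back to back
};

// Flatten per-tile light lists into one shared index list.
LightGrid BuildLightGrid(const std::vector<std::vector<uint32_t>>& perTile)
{
    LightGrid grid;
    for (const auto& list : perTile) {
        grid.tiles.push_back({ static_cast<uint32_t>(grid.lightIndices.size()),
                               static_cast<uint32_t>(list.size()) });
        grid.lightIndices.insert(grid.lightIndices.end(), list.begin(), list.end());
    }
    return grid;
}
```

During shading, a pixel looks up its Tile's range and loops over `lightIndices[offset]` to `lightIndices[offset + count - 1]`.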

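Volume-tile-based rendering can handle massive numbers of lights, but it suffers from a rising Draw Call count and from self-similar volume tiles (Self-Similar Volume Tiles: tiles near the camera are tiny while distant ones are very large).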

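4.2.5 Summary of Render Paths

4.2.5.1 Deferred Rendering vs Forward Rendering

The table below compares deferred rendering and forward rendering:

| | Forward rendering | Deferred rendering |
|---|---|---|
| Scene complexity | Simple | Complex |
| Supported light count | Few | Many |
| Time complexity | \(O(g\cdot f\cdot l)\) | \(O(f\cdot l)\) |
| Anti-aliasing | MSAA, FXAA, SMAA | TAA, SMAA |
| Video memory and bandwidth | Lower | Higher |
| Pass count | Few | Many |
| MRT | Not required | Required |
| Overdraw | Severe | Largely avoided |
| Material types | Many | Few |
| Image quality | Sharp, good anti-aliasing | Blurry, weaker anti-aliasing |
| Transparency | Opaque, Masked, and translucent objects | Opaque and Masked objects; translucent objects not supported |
| Screen resolution | Low | High |
| Hardware requirements | Low, covers nearly 100% of hardware | Higher, needs MRT support and Shader Model 3.0+ |
| Implementation complexity | Low | High |
| Post-processing | Cannot support effects that need normals, depth, and similar data | Supports effects that need normals, depth, and similar data, such as SSAO, SSR, SSGI |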

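A few additional notes:

- Deferred rendering does not work well with MSAA because an MSAA RT has to be resolved (its samples combined by weight into a final color) before lighting. Lighting can instead run directly on the unresolved samples, but that makes video memory and bandwidth usage balloon.

- The image from deferred rendering is blurrier than that from forward rendering because it goes through two discretization steps, the geometry pass and the lighting pass. Signal is lost twice, which affects the later reconstruction and increases the variance of the final image. On top of that, deferred rendering usually adopts TAA for anti-aliasing. TAA's core idea is to turn spatial sampling into temporal sampling, and temporal sampling is often imprecise and brings its own small problems, which increases the variance once more and can produce ghosting, moiré patterns, flickering, and a hazy image. This is why games made with UE, which enables deferred rendering by default, tend to look slightly less crisp.

  The blurriness can be mitigated with some post-processing (high-pass sharpening, tone mapping) and targeted AA techniques (SMAA, SSAA, DLSS), but in any case it is hard to match the image quality of forward rendering at the same cost.

- Deferred rendering does not support translucent objects, so a forward rendering step usually has to be added at the end specifically for them.

- At present (first half of 2021), deferred rendering is the preferred technique on PC and consoles, while forward rendering is the preferred technique on mobile devices. As time passes and hardware evolves, deferred rendering will likely spread to mobile platforms soon.

- As for material types, deferred rendering can write different shading models into the GBuffer as geometric data to extend the range of materials and lighting models, but this is still limited by the GBuffer's size and bandwidth: with 1 byte, up to 256 shading types can be supported.

  UE uses 4 bits to store the Shading Model ID, so it supports at most 16 shading models. The author once tried to extend UE's shading models and found that the other 4 bits of that byte were already in use, so the only option would be to allocate an extra GBuffer. But adding a whole GBuffer just for more material types is not worth it.

- On MSAA in deferred rendering, some papers (Multisample anti-aliasing in deferred rendering, Optimizing multisample anti-aliasing for deferred renderers) describe how to support MSAA in a deferred pipeline, along with sampling strategies, data structures, and bandwidth optimizations.

  The MSAA algorithm for deferred rendering proposed in Multisample anti-aliasing in deferred rendering. Its core idea is that the Geometry Pass stores, besides the standard GBuffer, extension data for the GBuffer that contains MSAA data only for the pixels that need multisampling, for use by the Lighting Pass during shading. This satisfies the needs of MSAA while keeping GBuffer usage down.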
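The 4-bit Shading Model ID limit mentioned in the notes above can be illustrated with a small packing sketch. The layout (id in the low nibble, four unrelated bits in the high nibble) and the names `PackShadingModel` and `UnpackShadingModel` are assumptions for illustration rather than UE's actual encoding code.

```cpp
#include <cstdint>

constexpr uint8_t kShadingModelMask = 0x0F; // low 4 bits: at most 16 shading models

// Pack a shading model id (low nibble) together with 4 unrelated bits
// (high nibble) into one byte. The layout is an illustrative assumption.
uint8_t PackShadingModel(uint8_t shadingModelId, uint8_t otherBits)
{
    return static_cast<uint8_t>((shadingModelId & kShadingModelMask) | (otherBits << 4));
}

uint8_t UnpackShadingModel(uint8_t packed) { return packed & kShadingModelMask; }
```

An id of 16 or more does not survive the round trip (17 comes back as 1), which is exactly why adding a seventeenth shading model would need extra storage.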

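4.2.5.2 Comparison of Deferred Rendering Variants

This subsection compares deferred rendering with its common variants:

| | Deferred | Tiled Deferred | Clustered Deferred |
|---|---|---|---|
| Light count | Somewhat many | Many | Very many |
| Bandwidth cost | Very high | High | Somewhat high |
| Tiling scheme | None | Screen space | Screen space + depth |
| Implementation complexity | Somewhat high | High | Very high |
| Suitable scenes | Many objects and many lights | Many objects and many local lights that do not overlap one another | Many objects and many non-overlapping local lights that are far apart in view space |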

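4.3 The Deferred Shading Scene Renderer

4.3.1 FSceneRenderer

FSceneRenderer is the base class of UE's scene renderers. It is the brain and engine of UE's rendering system and plays a pivotal role in it: it processes and renders the scene and generates the render commands for the RHI layer. Part of its definition, with annotations, follows: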

// Engine\Source\Runtime\Renderer\Private\SceneRendering.h

// Scene renderer.

class FSceneRenderer

{

public:

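FScene* Scene; // The scene being rendered.

FSceneViewFamily ViewFamily; // The view family being rendered (holds all views to render).

TArray<FViewInfo> Views; // The view instances to render.

FMeshElementCollector MeshCollector; // Mesh element collector.

FMeshElementCollector RayTracingCollector; // Ray tracing mesh element collector.

// Visible light info.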

TArray<FVisibleLightInfo,SceneRenderingAllocator> VisibleLightInfos;

// Shadow-related data.

TArray<FParallelMeshDrawCommandPass*, SceneRenderingAllocator> DispatchedShadowDepthPasses;

FSortedShadowMaps SortedShadowsForShadowDepthPass;

// Special flags.

bool bHasRequestedToggleFreeze;

bool bUsedPrecomputedVisibility;

// Names of the point lights that use whole-scene shadows (toggled via r.SupportPointLightWholeSceneShadows).

TArray<FName, SceneRenderingAllocator> UsedWholeScenePointLightNames;

// Platform feature level info.

ERHIFeatureLevel::Type FeatureLevel;

EShaderPlatform ShaderPlatform;

(......)

public:

FSceneRenderer(const FSceneViewFamily* InViewFamily,FHitProxyConsumer* HitProxyConsumer);

virtual ~FSceneRenderer();

// FSceneRenderer interface (note that some members are empty implementations or pure virtual).

// Rendering entry point.

virtual void Render(FRHICommandListImmediate& RHICmdList) = 0;

virtual void RenderHitProxies(FRHICommandListImmediate& RHICmdList) {}

// Creates a scene renderer instance.

static FSceneRenderer* CreateSceneRenderer(const FSceneViewFamily* InViewFamily, FHitProxyConsumer* HitProxyConsumer);

void PrepareViewRectsForRendering();

#if WITH_MGPU // Multi-GPU support

void ComputeViewGPUMasks(FRHIGPUMask RenderTargetGPUMask);

#endif

// Updates the result of the render target that each view renders to (cross-GPU transfer).

void DoCrossGPUTransfers(FRHICommandListImmediate& RHICmdList, FRHIGPUMask RenderTargetGPUMask);

// Occlusion query interface and data.

bool DoOcclusionQueries(ERHIFeatureLevel::Type InFeatureLevel) const;

void BeginOcclusionTests(FRHICommandListImmediate& RHICmdList, bool bRenderQueries);

static FGraphEventRef OcclusionSubmittedFence[FOcclusionQueryHelpers::MaxBufferedOcclusionFrames];

void FenceOcclusionTests(FRHICommandListImmediate& RHICmdList);

void WaitOcclusionTests(FRHICommandListImmediate& RHICmdList);

bool ShouldDumpMeshDrawCommandInstancingStats() const { return bDumpMeshDrawCommandInstancingStats; }

static FGlobalBoundShaderState OcclusionTestBoundShaderState;

static bool ShouldCompositeEditorPrimitives(const FViewInfo& View);

// Waits for the scene renderer's tasks to finish, cleans up, and finally deletes it.

static void WaitForTasksClearSnapshotsAndDeleteSceneRenderer(FRHICommandListImmediate& RHICmdList, FSceneRenderer* SceneRenderer, bool bWaitForTasks = true);

static void DelayWaitForTasksClearSnapshotsAndDeleteSceneRenderer(FRHICommandListImmediate& RHICmdList, FSceneRenderer* SceneRenderer);

// Other interfaces.

static FIntPoint ApplyResolutionFraction(...);

static FIntPoint QuantizeViewRectMin(const FIntPoint& ViewRectMin);

static FIntPoint GetDesiredInternalBufferSize(const FSceneViewFamily& ViewFamily);

static ISceneViewFamilyScreenPercentage* ForkScreenPercentageInterface(...);

static int32 GetRefractionQuality(const FSceneViewFamily& ViewFamily);

protected:

(......)

#if WITH_MGPU // Multi-GPU support

FRHIGPUMask AllViewsGPUMask;

FRHIGPUMask GetGPUMaskForShadow(FProjectedShadowInfo* ProjectedShadowInfo) const;

#endif

// ----Interfaces shared by all renderers----

// --Render flow and MeshPass interfaces--

void OnStartRender(FRHICommandListImmediate& RHICmdList);

void RenderFinish(FRHICommandListImmediate& RHICmdList);

void SetupMeshPass(FViewInfo& View, FExclusiveDepthStencil::Type BasePassDepthStencilAccess, FViewCommands& ViewCommands);

void GatherDynamicMeshElements(...);

void RenderDistortion(FRHICommandListImmediate& RHICmdList);

void InitFogConstants();

bool ShouldRenderTranslucency(ETranslucencyPass::Type TranslucencyPass) const;

void RenderCustomDepthPassAtLocation(FRHICommandListImmediate& RHICmdList, int32 Location);

void RenderCustomDepthPass(FRHICommandListImmediate& RHICmdList);

void RenderPlanarReflection(class FPlanarReflectionSceneProxy* ReflectionSceneProxy);

void InitSkyAtmosphereForViews(FRHICommandListImmediate& RHICmdList);

void RenderSkyAtmosphereLookUpTables(FRHICommandListImmediate& RHICmdList);

void RenderSkyAtmosphere(FRHICommandListImmediate& RHICmdList);

void RenderSkyAtmosphereEditorNotifications(FRHICommandListImmediate& RHICmdList);

// ---Shadow interfaces---

void InitDynamicShadows(FRHICommandListImmediate& RHICmdList, FGlobalDynamicIndexBuffer& DynamicIndexBuffer, FGlobalDynamicVertexBuffer& DynamicVertexBuffer, FGlobalDynamicReadBuffer& DynamicReadBuffer);

bool RenderShadowProjections(FRHICommandListImmediate& RHICmdList, const FLightSceneInfo* LightSceneInfo, IPooledRenderTarget* ScreenShadowMaskTexture, IPooledRenderTarget* ScreenShadowMaskSubPixelTexture, bool bProjectingForForwardShading, bool bMobileModulatedProjections, const struct FHairStrandsVisibilityViews* InHairVisibilityViews);

TRefCountPtr<FProjectedShadowInfo> GetCachedPreshadow(...);

void CreatePerObjectProjectedShadow(...);

void SetupInteractionShadows(...);

void AddViewDependentWholeSceneShadowsForView(...);

void AllocateShadowDepthTargets(FRHICommandListImmediate& RHICmdList);

void AllocatePerObjectShadowDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateCachedSpotlightShadowDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateCSMDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateRSMDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateOnePassPointLightDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

void AllocateTranslucentShadowDepthTargets(FRHICommandListImmediate& RHICmdList, ...);

bool CheckForProjectedShadows(const FLightSceneInfo* LightSceneInfo) const;

void GatherShadowPrimitives(...);

void RenderShadowDepthMaps(FRHICommandListImmediate& RHICmdList);

void RenderShadowDepthMapAtlases(FRHICommandListImmediate& RHICmdList);

void CreateWholeSceneProjectedShadow(FLightSceneInfo* LightSceneInfo, ...);

void UpdatePreshadowCache(FSceneRenderTargets& SceneContext);

void InitProjectedShadowVisibility(FRHICommandListImmediate& RHICmdList);

void GatherShadowDynamicMeshElements(FGlobalDynamicIndexBuffer& DynamicIndexBuffer, FGlobalDynamicVertexBuffer& DynamicVertexBuffer, FGlobalDynamicReadBuffer& DynamicReadBuffer);

// --Light interfaces--

static void GetLightNameForDrawEvent(const FLightSceneProxy* LightProxy, FString& LightNameWithLevel);

static void GatherSimpleLights(const FSceneViewFamily& ViewFamily, ...);

static void SplitSimpleLightsByView(const FSceneViewFamily& ViewFamily, ...);

// --Visibility interfaces--

void PreVisibilityFrameSetup(FRHICommandListImmediate& RHICmdList);

void ComputeViewVisibility(FRHICommandListImmediate& RHICmdList, ...);

void PostVisibilityFrameSetup(FILCUpdatePrimTaskData& OutILCTaskData);

// --Other interfaces--

void GammaCorrectToViewportRenderTarget(FRHICommandList& RHICmdList, const FViewInfo* View, float OverrideGamma);

FRHITexture* GetMultiViewSceneColor(const FSceneRenderTargets& SceneContext) const;

void UpdatePrimitiveIndirectLightingCacheBuffers();

bool ShouldRenderSkyAtmosphereEditorNotifications();

void ResolveSceneColor(FRHICommandList& RHICmdList);

(......)

};

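FSceneRenderer is created and initialized by FRendererModule::BeginRenderingViewFamily on the game thread and then handed to the render thread. The render thread calls FSceneRenderer::Render() and deletes the FSceneRenderer instance once rendering returns. In other words, an FSceneRenderer is created and destroyed every frame. The flow is sketched in the following illustrative code: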

    void UGameEngine::Tick( float DeltaSeconds, bool bIdleMode )
    {
        UGameEngine::RedrawViewports()
        {
            void FViewport::Draw( bool bShouldPresent)
            {
                void UGameViewportClient::Draw()
                {
                    // Compute the properties of the ViewFamily and its Views.
                    ULocalPlayer::CalcSceneView();

                    // Send the render command.
                    FRendererModule::BeginRenderingViewFamily(..., FSceneViewFamily* ViewFamily)
                    {
                        FScene* const Scene = ViewFamily->Scene->GetRenderScene();
                        World->SendAllEndOfFrameUpdates();

                        // Create the scene renderer.
                        FSceneRenderer* SceneRenderer = FSceneRenderer::CreateSceneRenderer(ViewFamily, ...);

                        // Send the draw-scene command to the render thread.
                        ENQUEUE_RENDER_COMMAND(FDrawSceneCommand)(
                            [SceneRenderer](FRHICommandListImmediate& RHICmdList)
                            {
                                RenderViewFamily_RenderThread(RHICmdList, SceneRenderer)
                                {
                                    (......)
                                    // Call the scene renderer's draw entry point.
                                    SceneRenderer->Render(RHICmdList);
                                    (......)
                                    // Wait for the SceneRenderer's tasks, then clean up and delete the instance.
                                    FSceneRenderer::WaitForTasksClearSnapshotsAndDeleteSceneRenderer(RHICmdList, SceneRenderer)
                                    {
                                        // Wait for render tasks to finish before deleting the instance.
                                        RHICmdList.ImmediateFlush(EImmediateFlushType::WaitForOutstandingTasksOnly);

                                        // Wait for all shadow passes to finish.
                                        for (int32 PassIndex = 0; PassIndex < SceneRenderer->DispatchedShadowDepthPasses.Num(); ++PassIndex)
                                        {
                                            SceneRenderer->DispatchedShadowDepthPasses[PassIndex]->WaitForTasksAndEmpty();
                                        }
                                        (......)
                                        // Delete the SceneRenderer instance.
                                        delete SceneRenderer;
                                        (......)
                                    }
                                }
                                FlushPendingDeleteRHIResources_RenderThread();
                            });
                    }
                }
            }
        }
    }
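The per-frame lifetime shown above (create on the game thread, render and delete on the render thread) can be modelled with a self-contained toy. Everything here is a stand-in: `SceneRenderer`, `RenderCommand`, and the two free functions are hypothetical, and a plain single-threaded queue replaces UE's render command queue and render thread.

```cpp
#include <functional>
#include <queue>

struct SceneRenderer {
    static int liveCount;                 // number of renderers currently alive
    SceneRenderer()  { ++liveCount; }
    ~SceneRenderer() { --liveCount; }
    void Render() {}                      // stand-in for FSceneRenderer::Render
};
int SceneRenderer::liveCount = 0;

using RenderCommand = std::function<void()>;

// "Game thread" side: create a renderer for this frame and queue its draw.
void BeginRenderingViewFamily(std::queue<RenderCommand>& renderQueue)
{
    SceneRenderer* renderer = new SceneRenderer();
    renderQueue.push([renderer]() {
        renderer->Render();
        delete renderer;                  // the renderer lives for one frame only
    });
}

// "Render thread" side: drain the queued commands in order.
void ExecuteRenderCommands(std::queue<RenderCommand>& renderQueue)
{
    while (!renderQueue.empty()) {
        renderQueue.front()();
        renderQueue.pop();
    }
}
```

Because ownership travels with the queued command, the creating side never touches the renderer again after enqueueing it, which mirrors why the real code can delete the instance on the render thread.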

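FSceneRenderer has two subclasses: FMobileSceneRenderer and FDeferredShadingSceneRenderer.

FMobileSceneRenderer is the scene renderer for mobile platforms and uses a forward rendering flow by default.

FDeferredShadingSceneRenderer, despite being named the deferred shading scene renderer, actually integrates both the forward and the deferred render paths, and is the default scene renderer on PC and console platforms (the author was also confused by this puzzling name at first).

The following sections focus on the deferred render path of FDeferredShadingSceneRenderer and explain the flow and mechanisms of UE's deferred rendering pipeline.

4.3.2 FDeferredShadingSceneRenderer

FDeferredShadingSceneRenderer is UE's scene renderer on PC and console platforms and implements the logic of the deferred render path. Part of its definition and declarations follows: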

// Engine\Source\Runtime\Renderer\Private\DeferredShadingRenderer.h

class FDeferredShadingSceneRenderer : public FSceneRenderer

{

public:

// EarlyZ related.

EDepthDrawingMode EarlyZPassMode;

bool bEarlyZPassMovable;

bool bDitheredLODTransitionsUseStencil;

int32 StencilLODMode = 0;

FComputeFenceRHIRef TranslucencyLightingVolumeClearEndFence;

// Constructor.

FDeferredShadingSceneRenderer(const FSceneViewFamily* InViewFamily,FHitProxyConsumer* HitProxyConsumer);

// Clear interfaces.

void ClearView(FRHICommandListImmediate& RHICmdList);

void ClearGBufferAtMaxZ(FRHICommandList& RHICmdList);

void ClearLPVs(FRHICommandListImmediate& RHICmdList);

void UpdateLPVs(FRHICommandListImmediate& RHICmdList);

// PrePass interfaces.

bool RenderPrePass(FRHICommandListImmediate& RHICmdList, TFunctionRef<void()> AfterTasksAreStarted);

bool RenderPrePassHMD(FRHICommandListImmediate& RHICmdList);

void RenderPrePassView(FRHICommandList& RHICmdList, ...);

bool RenderPrePassViewParallel(const FViewInfo& View, ...);

void PreRenderDitherFill(FRHIAsyncComputeCommandListImmediate& RHICmdList, ...);

void PreRenderDitherFill(FRHICommandListImmediate& RHICmdList, ...);

void RenderPrePassEditorPrimitives(FRHICommandList& RHICmdList, ...);

// BasePass interfaces.

bool RenderBasePass(FRHICommandListImmediate& RHICmdList, ...);

void RenderBasePassViewParallel(FViewInfo& View, ...);

bool RenderBasePassView(FRHICommandListImmediate& RHICmdList, ...);

// SkyPass interfaces.

void RenderSkyPassViewParallel(FRHICommandListImmediate& ParentCmdList, ...);

bool RenderSkyPassView(FRHICommandListImmediate& RHICmdList, ...);

(......)

// GBuffer & Texture

void CopySingleLayerWaterTextures(FRHICommandListImmediate& RHICmdList, ...);

static void BeginRenderingWaterGBuffer(FRHICommandList& RHICmdList, ...);

void FinishWaterGBufferPassAndResolve(FRHICommandListImmediate& RHICmdList, ...);

// Water rendering.

bool RenderSingleLayerWaterPass(FRHICommandListImmediate& RHICmdList, ...);

bool RenderSingleLayerWaterPassView(FRHICommandListImmediate& RHICmdList, ...);

void RenderSingleLayerWaterReflections(FRHICommandListImmediate& RHICmdList, ...);

// Render flow.

// Main rendering entry point.

virtual void Render(FRHICommandListImmediate& RHICmdList) override;

void RenderFinish(FRHICommandListImmediate& RHICmdList);

// Other rendering interfaces.

bool RenderHzb(FRHICommandListImmediate& RHICmdList);

void RenderOcclusion(FRHICommandListImmediate& RHICmdList);

void FinishOcclusion(FRHICommandListImmediate& RHICmdList);

virtual void RenderHitProxies(FRHICommandListImmediate& RHICmdList) override;

private:

// Static data (used to initialize the various passes).

static FGraphEventRef TranslucencyTimestampQuerySubmittedFence[FOcclusionQueryHelpers::MaxBufferedOcclusionFrames + 1];

static FGlobalDynamicIndexBuffer DynamicIndexBufferForInitViews;

static FGlobalDynamicIndexBuffer DynamicIndexBufferForInitShadows;

static FGlobalDynamicVertexBuffer DynamicVertexBufferForInitViews;

static FGlobalDynamicVertexBuffer DynamicVertexBufferForInitShadows;

static TGlobalResource<FGlobalDynamicReadBuffer> DynamicReadBufferForInitViews;

static TGlobalResource<FGlobalDynamicReadBuffer> DynamicReadBufferForInitShadows;

// Visibility interface.

void PreVisibilityFrameSetup(FRHICommandListImmediate& RHICmdList);

// View data initialization.

bool InitViews(FRHICommandListImmediate& RHICmdList, ...);

void InitViewsPossiblyAfterPrepass(FRHICommandListImmediate& RHICmdList, ...);

void SetupSceneReflectionCaptureBuffer(FRHICommandListImmediate& RHICmdList);

void UpdateTranslucencyTimersAndSeparateTranslucencyBufferSize(FRHICommandListImmediate& RHICmdList);

void CreateIndirectCapsuleShadows();

// Fog rendering.

bool RenderFog(FRHICommandListImmediate& RHICmdList, ...);

void RenderViewFog(FRHICommandList& RHICmdList, const FViewInfo& View, ...);

// Atmosphere, sky, indirect lighting, distance fields, ambient lighting.

void RenderAtmosphere(FRHICommandListImmediate& RHICmdList, ...);

void RenderDebugSkyAtmosphere(FRHICommandListImmediate& RHICmdList);

void RenderDiffuseIndirectAndAmbientOcclusion(FRHICommandListImmediate& RHICmdList);

void RenderDeferredReflectionsAndSkyLighting(FRHICommandListImmediate& RHICmdList, ...);

void RenderDeferredReflectionsAndSkyLightingHair(FRHICommandListImmediate& RHICmdList, ...);

void RenderDFAOAsIndirectShadowing(FRHICommandListImmediate& RHICmdList,...);

bool RenderDistanceFieldLighting(FRHICommandListImmediate& RHICmdList,...);

void RenderDistanceFieldAOScreenGrid(FRHICommandListImmediate& RHICmdList, ...);

void RenderMeshDistanceFieldVisualization(FRHICommandListImmediate& RHICmdList, ...);

// ----Tiled light interfaces----

// Computes the per-Tile data used to cull lights that do not affect a Tile. Only used by forward shading, clustered deferred shading, and translucency with Surface lighting enabled.

void ComputeLightGrid(FRHICommandListImmediate& RHICmdList, ...);

bool CanUseTiledDeferred() const;

bool ShouldUseTiledDeferred(int32 NumTiledDeferredLights) const;

bool ShouldUseClusteredDeferredShading() const;

bool AreClusteredLightsInLightGrid() const;

void AddClusteredDeferredShadingPass(FRHICommandListImmediate& RHICmdList, ...);

void RenderTiledDeferredLighting(FRHICommandListImmediate& RHICmdList, const TArray<FSortedLightSceneInfo, SceneRenderingAllocator>& SortedLights, ...);

void GatherAndSortLights(FSortedLightSetSceneInfo& OutSortedLights);

void RenderLights(FRHICommandListImmediate& RHICmdList, ...);

void RenderLightArrayForOverlapViewmode(FRHICommandListImmediate& RHICmdList, ...);

void RenderStationaryLightOverlap(FRHICommandListImmediate& RHICmdList);

// ----Light rendering (direct, indirect, ambient, static, volumetric)----

void RenderLight(FRHICommandList& RHICmdList, ...);

void RenderLightsForHair(FRHICommandListImmediate& RHICmdList, ...);

void RenderLightForHair(FRHICommandList& RHICmdList, ...);

void RenderSimpleLightsStandardDeferred(FRHICommandListImmediate& RHICmdList, ...);

void ClearTranslucentVolumeLighting(FRHICommandListImmediate& RHICmdListViewIndex, ...);

void InjectAmbientCubemapTranslucentVolumeLighting(FRHICommandList& RHICmdList, ...);

void ClearTranslucentVolumePerObjectShadowing(FRHICommandList& RHICmdList, const int32 ViewIndex);

void AccumulateTranslucentVolumeObjectShadowing(FRHICommandList& RHICmdList, ...);

void InjectTranslucentVolumeLighting(FRHICommandListImmediate& RHICmdList, ...);

void InjectTranslucentVolumeLightingArray(FRHICommandListImmediate& RHICmdList, ...);

void InjectSimpleTranslucentVolumeLightingArray(FRHICommandListImmediate& RHICmdList, ...);

void FilterTranslucentVolumeLighting(FRHICommandListImmediate& RHICmdList, ...);

// Light Function

bool RenderLightFunction(FRHICommandListImmediate& RHICmdList, ...);

bool RenderPreviewShadowsIndicator(FRHICommandListImmediate& RHICmdList, ...);

bool RenderLightFunctionForMaterial(FRHICommandListImmediate& RHICmdList, ...);

// Translucency pass.

void RenderViewTranslucency(FRHICommandListImmediate& RHICmdList, ...);

void RenderViewTranslucencyParallel(FRHICommandListImmediate& RHICmdList, ...);

void BeginTimingSeparateTranslucencyPass(FRHICommandListImmediate& RHICmdList, const FViewInfo& View);

void EndTimingSeparateTranslucencyPass(FRHICommandListImmediate& RHICmdList, const FViewInfo& View);

void BeginTimingSeparateTranslucencyModulatePass(FRHICommandListImmediate& RHICmdList, ...);

void EndTimingSeparateTranslucencyModulatePass(FRHICommandListImmediate& RHICmdList, ...);

void SetupDownsampledTranslucencyViewParameters(FRHICommandListImmediate& RHICmdList, ...);

void ConditionalResolveSceneColorForTranslucentMaterials(FRHICommandListImmediate& RHICmdList, ...);

void RenderTranslucency(FRHICommandListImmediate& RHICmdList, bool bDrawUnderwaterViews = false);

void RenderTranslucencyInner(FRHICommandListImmediate& RHICmdList, ...);

// Light shafts.

void RenderLightShaftOcclusion(FRHICommandListImmediate& RHICmdList, FLightShaftsOutput& Output);

void RenderLightShaftBloom(FRHICommandListImmediate& RHICmdList);

// Velocity buffer.

bool ShouldRenderVelocities() const;

void RenderVelocities(FRHICommandListImmediate& RHICmdList, ...);

void RenderVelocitiesInner(FRHICommandListImmediate& RHICmdList, ...);

// Other rendering interfaces.

bool RenderLightMapDensities(FRHICommandListImmediate& RHICmdList);

bool RenderDebugViewMode(FRHICommandListImmediate& RHICmdList);

void UpdateDownsampledDepthSurface(FRHICommandList& RHICmdList);

void DownsampleDepthSurface(FRHICommandList& RHICmdList, ...);

void CopyStencilToLightingChannelTexture(FRHICommandList& RHICmdList);

// ----Shadow rendering interfaces----

void CreatePerObjectProjectedShadow(...);

bool InjectReflectiveShadowMaps(FRHICommandListImmediate& RHICmdList, ...);

bool RenderCapsuleDirectShadows(FRHICommandListImmediate& RHICmdList, ...) const;

void SetupIndirectCapsuleShadows(FRHICommandListImmediate& RHICmdList, ...) const;

void RenderIndirectCapsuleShadows(FRHICommandListImmediate& RHICmdList, ...) const;

void RenderCapsuleShadowsForMovableSkylight(FRHICommandListImmediate& RHICmdList, ...) const;

bool RenderShadowProjections(FRHICommandListImmediate& RHICmdList, ...);

void RenderForwardShadingShadowProjections(FRHICommandListImmediate& RHICmdList, ...);

// Volumetric fog.

bool ShouldRenderVolumetricFog() const;

void SetupVolumetricFog();

void RenderLocalLightsForVolumetricFog(...);

void RenderLightFunctionForVolumetricFog(...);

void VoxelizeFogVolumePrimitives(...);

void ComputeVolumetricFog(FRHICommandListImmediate& RHICmdList);

void VisualizeVolumetricLightmap(FRHICommandListImmediate& RHICmdList);

// Reflections.

void RenderStandardDeferredImageBasedReflections(FRHICommandListImmediate& RHICmdList, ...);

bool HasDeferredPlanarReflections(const FViewInfo& View) const;

void RenderDeferredPlanarReflections(FRDGBuilder& GraphBuilder, ...);

bool ShouldDoReflectionEnvironment() const;

// Distance field AO and shadows.

bool ShouldRenderDistanceFieldAO() const;

bool ShouldPrepareForDistanceFieldShadows() const;

bool ShouldPrepareForDistanceFieldAO() const;

bool ShouldPrepareForDFInsetIndirectShadow() const;

bool ShouldPrepareDistanceFieldScene() const;

bool ShouldPrepareGlobalDistanceField() const;

bool ShouldPrepareHeightFieldScene() const;

void UpdateGlobalDistanceFieldObjectBuffers(FRHICommandListImmediate& RHICmdList);

void UpdateGlobalHeightFieldObjectBuffers(FRHICommandListImmediate& RHICmdList);

void AddOrRemoveSceneHeightFieldPrimitives(bool bSkipAdd = false);

void PrepareDistanceFieldScene(FRHICommandListImmediate& RHICmdList, bool bSplitDispatch);

void CopySceneCaptureComponentToTarget(FRHICommandListImmediate& RHICmdList);

void SetupImaginaryReflectionTextureParameters(...);

// Ray tracing interfaces

void RenderRayTracingDeferredReflections(...);

void RenderRayTracingShadows(...);

void RenderRayTracingStochasticRectLight(FRHICommandListImmediate& RHICmdList, ...);

void CompositeRayTracingSkyLight(FRHICommandListImmediate& RHICmdList, ...);

bool RenderRayTracingGlobalIllumination(FRDGBuilder& GraphBuilder, ...);

void RenderRayTracingGlobalIlluminationBruteForce(FRDGBuilder& GraphBuilder, ...);

void RayTracingGlobalIlluminationCreateGatherPoints(FRDGBuilder& GraphBuilder, ...);

void RenderRayTracingGlobalIlluminationFinalGather(FRDGBuilder& GraphBuilder, ...);

void RenderRayTracingAmbientOcclusion(FRDGBuilder& GraphBuilder, ...);

(......)

// Whether clustered light culling (lights placed in the light grid) is enabled

bool bClusteredShadingLightsInLightGrid;

};

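As shown above, FDeferredShadingSceneRenderer mainly exposes several groups of interfaces: mesh passes, lights, shadows, ray tracing, reflections, visibility, and so on. The most important one is FDeferredShadingSceneRenderer::Render. It is the renderer's main entry point, and the main flow and the calls to the other important interfaces all happen, directly or indirectly, inside it. Broken down, the logic of FDeferredShadingSceneRenderer::Render falls into the following main stages: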

| 階段 | 解析 |

|---|---|

| FScene::UpdateAllPrimitiveSceneInfos | 更新所有圖元的資訊到GPU,若啟用了GPUScene,將會用二維紋理或StructureBuffer來存儲圖元的資訊。 |

| FSceneRenderTargets::Allocate | 若有需要(解析度改變、API觸發),重新分配場景的渲染紋理,以保證足夠大的尺寸渲染對應的view。 |

| InitViews | 採用裁剪若干方式初始化圖元的可見性,設置可見的動態陰影,有必要時會對陰影平截頭體和世界求交(全場陰影和逐物體陰影)。 |

| PrePass / Depth only pass | 提前深度Pass,用來渲染不透明物體的深度。此Pass只會寫入深度而不會寫入顏色,寫入深度時有disabled、occlusion only、complete depths三種模式,視不同的平台和Feature Level決定。通常用來建立Hierarchical-Z,以便能夠開啟硬體的Early-Z技術,提升Base Pass的渲染效率。 |

| Base pass | 也就是前面章節所說的幾何通道。用來渲染不透明物體(Opaque和Masked材質)的幾何資訊,包含法線、深度、顏色、AO、粗糙度、金屬度等等。這些幾何資訊會寫入若干張GBuffer中。此階段不會計算動態光源的貢獻,但會計算Lightmap和天空光的貢獻。 |

| Issue Occlusion Queries / BeginOcclusionTests | 開啟遮擋渲染,此幀的渲染遮擋數據用於下一幀InitViews階段的遮擋剔除。此階段主要使用物體的包圍盒來渲染,也可能會打包相近物體的包圍盒以減少Draw Call。 |

| Lighting | 此階段也就是前面章節所說的光照通道,是標準延遲著色和分塊延遲著色的混合體。會計算開啟陰影的光源的陰影圖,也會計算每個燈光對螢幕空間像素的貢獻量,並累計到Scene Color中。此外,還會計算光源也對translucency lighting volumes的貢獻量。 |

| Fog | 在螢幕空間計算霧和大氣對不透明物體表面像素的影響。 |

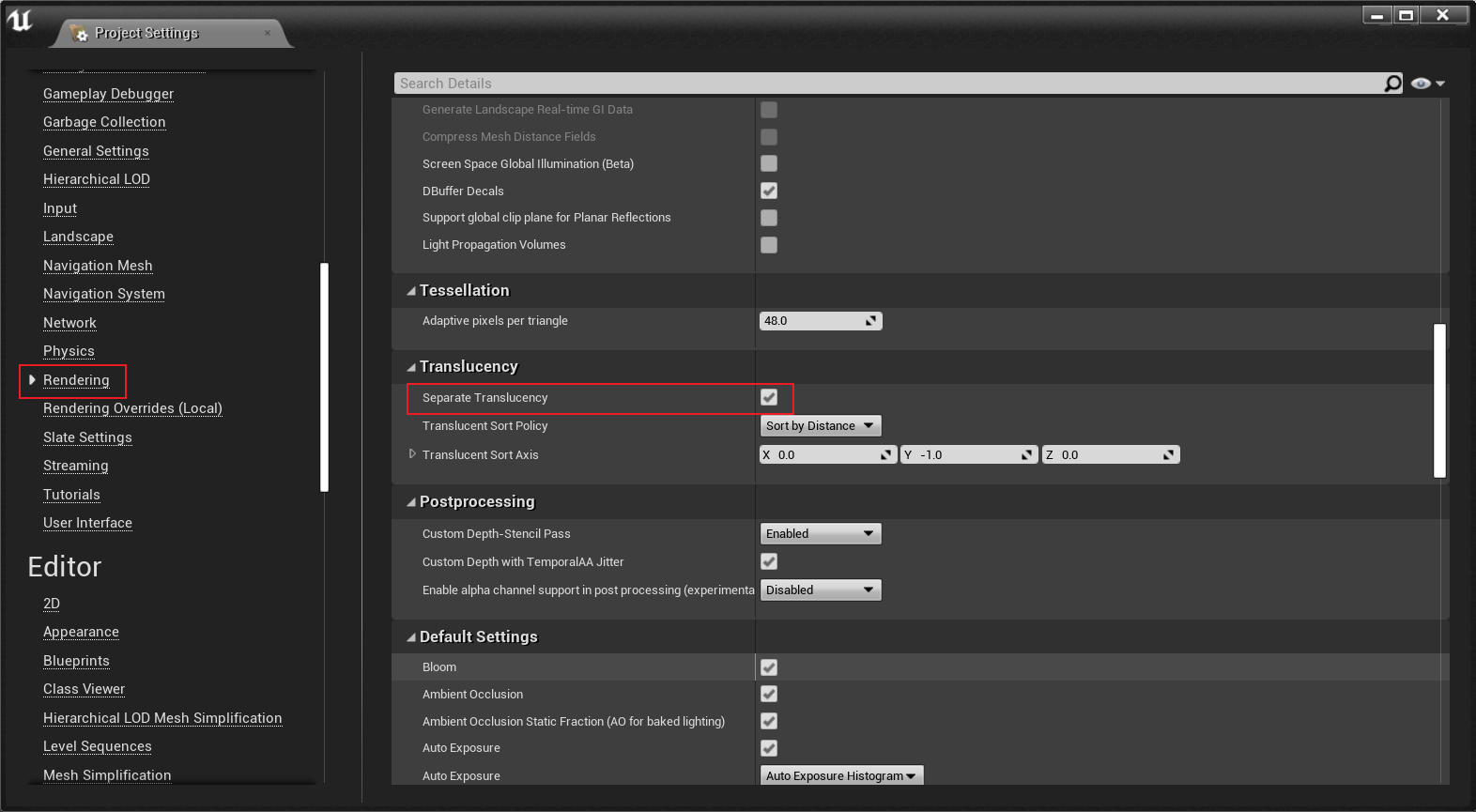

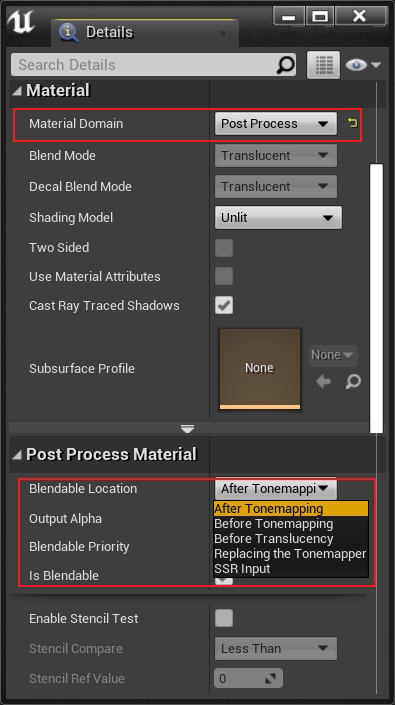

| Translucency | 渲染半透明物體階段。所有半透明物體由遠到近(視圖空間)逐個繪製到離屏渲染紋理(offscreen render target,程式碼中叫separate translucent render target)中,接著用單獨的pass以正確計算和混合光照結果。 |

| Post Processing | 後處理階段。包含了不需要GBuffer的Bloom、色調映射、Gamma校正等以及需要GBuffer的SSR、SSAO、SSGI等。此階段會將半透明的渲染紋理混合到最終的場景顏色中。 |

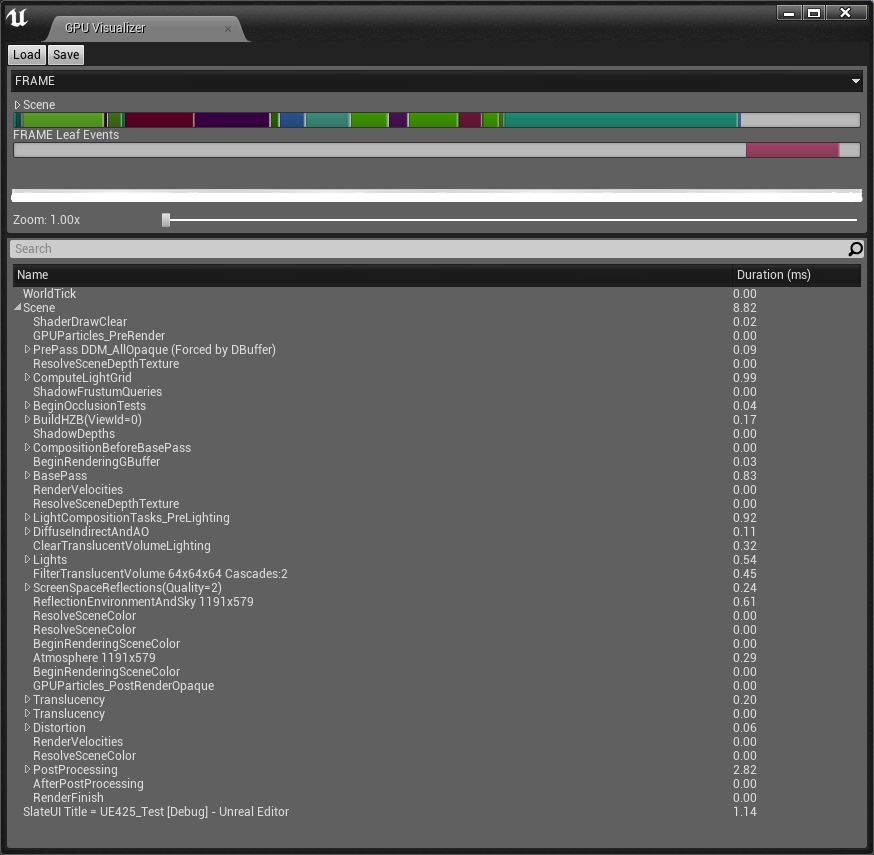

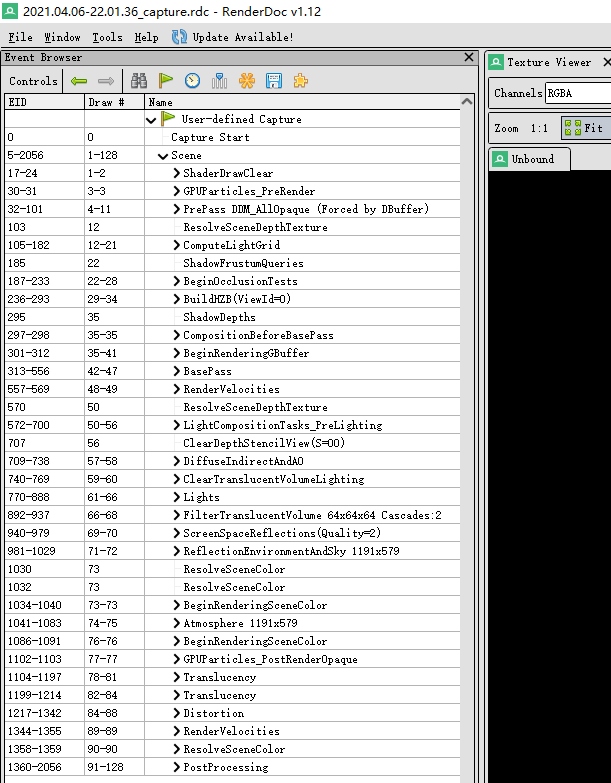

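The list above covers only part of the process. To see the detailed per-frame rendering steps of UE, capture a frame with RenderDoc or run the console command profilegpu.

The GPU Visualizer window that pops up after running the UE console command profilegpu clearly shows the steps of each frame's scene rendering and how long each one takes.

A UE frame captured with the rendering analysis tool RenderDoc.

The following sections analyze the rendering process and the related optimizations in the order listed above. The code under analysis is concentrated in the body of FDeferredShadingSceneRenderer::Render, which serves as the thread we follow to reach everything else involved in UE scene rendering.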

4.3.3 FScene::UpdateAllPrimitiveSceneInfos

FScene::UpdateAllPrimitiveSceneInfos is called on the first line of FDeferredShadingSceneRenderer::Render:

// Engine\Source\Runtime\Renderer\Private\DeferredShadingRenderer.cpp

void FDeferredShadingSceneRenderer::Render(FRHICommandListImmediate& RHICmdList)

{

Scene->UpdateAllPrimitiveSceneInfos(RHICmdList, true);

(......)

}

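The main job of FScene::UpdateAllPrimitiveSceneInfos is to remove, add, and update primitive data on the CPU side and to sync it to the GPU. On the GPU, primitive data can be stored in one of two ways:

- One Uniform Buffer per primitive. A shader that needs a primitive's data simply reads it from that primitive's Uniform Buffer. This layout is simple, easy to understand, and compatible with devices of every FeatureLevel, but it increases CPU-GPU traffic and lowers the GPU cache hit rate.

- A GPU Scene backed by a Texture2D or a StructuredBuffer, in which the data of all primitives is laid out in a regular pattern. A shader that needs a primitive's data reads it from the corresponding position in the GPU Scene. This requires SM5 support and is harder to implement and to understand, but it reduces CPU-GPU traffic, improves the GPU cache hit rate, and is a better fit for ray tracing and a GPU Driven Pipeline.

Although the two access paths differ, the shader code wraps them, so users do not need to know which kind of buffer is in use. They only need to write: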

GetPrimitiveData(PrimitiveId).xxx;

Here xxx is the name of a primitive attribute. The accessible attributes are the members of the following struct:

// Engine\Shaders\Private\SceneData.ush

struct FPrimitiveSceneData

{

float4x4 LocalToWorld;

float4 InvNonUniformScaleAndDeterminantSign;

float4 ObjectWorldPositionAndRadius;

float4x4 WorldToLocal;

float4x4 PreviousLocalToWorld;

float4x4 PreviousWorldToLocal;

float3 ActorWorldPosition;

float UseSingleSampleShadowFromStationaryLights;

float3 ObjectBounds;

float LpvBiasMultiplier;

float DecalReceiverMask;

float PerObjectGBufferData;

float UseVolumetricLightmapShadowFromStationaryLights;

float DrawsVelocity;

float4 ObjectOrientation;

float4 NonUniformScale;

float3 LocalObjectBoundsMin;

uint LightingChannelMask;

float3 LocalObjectBoundsMax;

uint LightmapDataIndex;

float3 PreSkinnedLocalBoundsMin;

int SingleCaptureIndex;

float3 PreSkinnedLocalBoundsMax;

uint OutputVelocity;

float4 CustomPrimitiveData[NUM_CUSTOM_PRIMITIVE_DATA];

};

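As this shows, each primitive exposes quite a lot of data, and the video memory it takes is considerable: about 576 bytes per primitive. If a scene contains 10,000 primitives (common in games), then, ignoring padding, this primitive data totals about 5.5 MB.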
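The 576-byte figure can be checked with a little arithmetic. The sketch below sums the members of the struct as listed above; it assumes that NUM_CUSTOM_PRIMITIVE_DATA is 9 float4 values, which is not stated in the listing but is the value that makes the total match the quoted size. The constant and function names are made up for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Byte sizes of the HLSL vector/matrix types used by FPrimitiveSceneData.
constexpr std::size_t kFloat4Bytes   = 16;
constexpr std::size_t kFloat4x4Bytes = 64;

// ASSUMPTION: NUM_CUSTOM_PRIMITIVE_DATA == 9 (not given in the listing above).
constexpr std::size_t kNumCustomPrimitiveData = 9;

// Size of one FPrimitiveSceneData entry, following the member list above.
constexpr std::size_t PrimitiveSceneDataBytes()
{
    return 4 * kFloat4x4Bytes                       // LocalToWorld, WorldToLocal, PreviousLocalToWorld, PreviousWorldToLocal
         + 11 * kFloat4Bytes                        // remaining members: 11 rows of four 32-bit scalars each
         + kNumCustomPrimitiveData * kFloat4Bytes;  // CustomPrimitiveData[]
}

// Total size for a scene with NumPrimitives entries, ignoring padding.
constexpr std::size_t TotalPrimitiveDataBytes(std::size_t NumPrimitives)
{
    return NumPrimitives * PrimitiveSceneDataBytes();
}

static_assert(PrimitiveSceneDataBytes() == 576, "36 float4 rows of 16 bytes each");
```

Under that assumption, TotalPrimitiveDataBytes(10000) is 5,760,000 bytes, roughly 5.49 MiB, which agrees with the figure of about 5.5 MB.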

Back to the C++ side, here is the definition of GPU Scene:

// Engine\Source\Runtime\Renderer\Private\ScenePrivate.h

class FGPUScene

{

public:

// Whether to update all primitive data. Usually used for debugging; it hurts performance at runtime.

bool bUpdateAllPrimitives;

// Indices of the primitives whose data needs updating.

TArray<int32> PrimitivesToUpdate;

// One bit per primitive; a set bit at an index means that primitive needs updating (and is also in PrimitivesToUpdate).

TBitArray<> PrimitivesMarkedToUpdate;

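// GPU-side storage for primitive data: either a StructuredBuffer or a Texture2D, of which only one is created and used. On mobile this is controlled by the Mobile.UseGPUSceneTexture console variable.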

FRWBufferStructured PrimitiveBuffer;

FTextureRWBuffer2D PrimitiveTexture;

// Upload buffers

FScatterUploadBuffer PrimitiveUploadBuffer;

FScatterUploadBuffer PrimitiveUploadViewBuffer;

// Lightmap data

FGrowOnlySpanAllocator LightmapDataAllocator;

FRWBufferStructured LightmapDataBuffer;

FScatterUploadBuffer LightmapUploadBuffer;

};

Returning to the topic at the start of this subsection, let's continue with what FScene::UpdateAllPrimitiveSceneInfos does:

// Engine\Source\Runtime\Renderer\Private\RendererScene.cpp

void FScene::UpdateAllPrimitiveSceneInfos(FRHICommandListImmediate& RHICmdList, bool bAsyncCreateLPIs)

{

TArray<FPrimitiveSceneInfo*> RemovedLocalPrimitiveSceneInfos(RemovedPrimitiveSceneInfos.Array());

RemovedLocalPrimitiveSceneInfos.Sort(FPrimitiveArraySortKey());

TArray<FPrimitiveSceneInfo*> AddedLocalPrimitiveSceneInfos(AddedPrimitiveSceneInfos.Array());

AddedLocalPrimitiveSceneInfos.Sort(FPrimitiveArraySortKey());

TSet<FPrimitiveSceneInfo*> DeletedSceneInfos;

DeletedSceneInfos.Reserve(RemovedLocalPrimitiveSceneInfos.Num());

(......)

// Handle primitive removal

{

(......)

while (RemovedLocalPrimitiveSceneInfos.Num())

{

// Find the start index of the trailing run of primitives that share the same proxy type

int StartIndex = RemovedLocalPrimitiveSceneInfos.Num() - 1;

SIZE_T InsertProxyHash = RemovedLocalPrimitiveSceneInfos[StartIndex]->Proxy->GetTypeHash();

while (StartIndex > 0 && RemovedLocalPrimitiveSceneInfos[StartIndex - 1]->Proxy->GetTypeHash() == InsertProxyHash)

{

StartIndex--;

}

(......)

{

SCOPED_NAMED_EVENT(FScene_SwapPrimitiveSceneInfos, FColor::Turquoise);

for (int CheckIndex = StartIndex; CheckIndex < RemovedLocalPrimitiveSceneInfos.Num(); CheckIndex++)

{

int SourceIndex = RemovedLocalPrimitiveSceneInfos[CheckIndex]->PackedIndex;

for (int TypeIndex = BroadIndex; TypeIndex < TypeOffsetTable.Num(); TypeIndex++)

{

FTypeOffsetTableEntry& NextEntry = TypeOffsetTable[TypeIndex];

int DestIndex = --NextEntry.Offset; //decrement and prepare swap

// Removal illustration: together with PrimitiveSceneProxies and TypeOffsetTable, this reduces how many elements are moved or swapped when removing.

// example swap chain of removing X

// PrimitiveSceneProxies[0,0,0,6,X,6,6,6,2,2,2,2,1,1,1,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,X,2,2,2,1,1,1,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,X,1,1,1,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,X,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,7,X,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,7,4,X,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,7,4,8,X]

if (DestIndex != SourceIndex)

{

checkfSlow(DestIndex > SourceIndex, TEXT("Corrupted Prefix Sum [%d, %d]"), DestIndex, SourceIndex);

Primitives[DestIndex]->PackedIndex = SourceIndex;

Primitives[SourceIndex]->PackedIndex = DestIndex;

TArraySwapElements(Primitives, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveTransforms, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveSceneProxies, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveBounds, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveFlagsCompact, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveVisibilityIds, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveOcclusionFlags, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveComponentIds, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveVirtualTextureFlags, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveVirtualTextureLod, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveOcclusionBounds, DestIndex, SourceIndex);

TBitArraySwapElements(PrimitivesNeedingStaticMeshUpdate, DestIndex, SourceIndex);

AddPrimitiveToUpdateGPU(*this, SourceIndex);

AddPrimitiveToUpdateGPU(*this, DestIndex);

SourceIndex = DestIndex;

}

}

}

}

const int PreviousOffset = BroadIndex > 0 ? TypeOffsetTable[BroadIndex - 1].Offset : 0;

const int CurrentOffset = TypeOffsetTable[BroadIndex].Offset;

if (CurrentOffset - PreviousOffset == 0)

{

// remove empty OffsetTable entries e.g.

// TypeOffsetTable[3,8,12,15,15,17,18]

// TypeOffsetTable[3,8,12,15,17,18]

TypeOffsetTable.RemoveAt(BroadIndex);

}

(......)

for (int RemoveIndex = StartIndex; RemoveIndex < RemovedLocalPrimitiveSceneInfos.Num(); RemoveIndex++)

{

int SourceIndex = RemovedLocalPrimitiveSceneInfos[RemoveIndex]->PackedIndex;

check(SourceIndex >= (Primitives.Num() - RemovedLocalPrimitiveSceneInfos.Num() + StartIndex));

Primitives.Pop();

PrimitiveTransforms.Pop();

PrimitiveSceneProxies.Pop();

PrimitiveBounds.Pop();

PrimitiveFlagsCompact.Pop();

PrimitiveVisibilityIds.Pop();

PrimitiveOcclusionFlags.Pop();

PrimitiveComponentIds.Pop();

PrimitiveVirtualTextureFlags.Pop();

PrimitiveVirtualTextureLod.Pop();

PrimitiveOcclusionBounds.Pop();

PrimitivesNeedingStaticMeshUpdate.RemoveAt(PrimitivesNeedingStaticMeshUpdate.Num() - 1);

}

CheckPrimitiveArrays();

for (int RemoveIndex = StartIndex; RemoveIndex < RemovedLocalPrimitiveSceneInfos.Num(); RemoveIndex++)

{

FPrimitiveSceneInfo* PrimitiveSceneInfo = RemovedLocalPrimitiveSceneInfos[RemoveIndex];

FScopeCycleCounter Context(PrimitiveSceneInfo->Proxy->GetStatId());

int32 PrimitiveIndex = PrimitiveSceneInfo->PackedIndex;

PrimitiveSceneInfo->PackedIndex = INDEX_NONE;

if (PrimitiveSceneInfo->Proxy->IsMovable())

{

// Remove primitive's motion blur information.

VelocityData.RemoveFromScene(PrimitiveSceneInfo->PrimitiveComponentId);

}

// Unlink the primitive from its shadow parent.

PrimitiveSceneInfo->UnlinkAttachmentGroup();

// Unlink the LOD parent info if valid

PrimitiveSceneInfo->UnlinkLODParentComponent();

// Flush virtual textures touched by primitive

PrimitiveSceneInfo->FlushRuntimeVirtualTexture();

// Remove the primitive from the scene.

PrimitiveSceneInfo->RemoveFromScene(true);

// Update the primitive that was swapped to this index

AddPrimitiveToUpdateGPU(*this, PrimitiveIndex);

DistanceFieldSceneData.RemovePrimitive(PrimitiveSceneInfo);

DeletedSceneInfos.Add(PrimitiveSceneInfo);

}

RemovedLocalPrimitiveSceneInfos.RemoveAt(StartIndex, RemovedLocalPrimitiveSceneInfos.Num() - StartIndex);

}

}

// Handle primitive addition

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(AddPrimitiveSceneInfos);

SCOPED_NAMED_EVENT(FScene_AddPrimitiveSceneInfos, FColor::Green);

SCOPE_CYCLE_COUNTER(STAT_AddScenePrimitiveRenderThreadTime);

if (AddedLocalPrimitiveSceneInfos.Num())

{

Primitives.Reserve(Primitives.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveTransforms.Reserve(PrimitiveTransforms.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveSceneProxies.Reserve(PrimitiveSceneProxies.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveBounds.Reserve(PrimitiveBounds.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveFlagsCompact.Reserve(PrimitiveFlagsCompact.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveVisibilityIds.Reserve(PrimitiveVisibilityIds.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveOcclusionFlags.Reserve(PrimitiveOcclusionFlags.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveComponentIds.Reserve(PrimitiveComponentIds.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveVirtualTextureFlags.Reserve(PrimitiveVirtualTextureFlags.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveVirtualTextureLod.Reserve(PrimitiveVirtualTextureLod.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitiveOcclusionBounds.Reserve(PrimitiveOcclusionBounds.Num() + AddedLocalPrimitiveSceneInfos.Num());

PrimitivesNeedingStaticMeshUpdate.Reserve(PrimitivesNeedingStaticMeshUpdate.Num() + AddedLocalPrimitiveSceneInfos.Num());

}

while (AddedLocalPrimitiveSceneInfos.Num())

{

int StartIndex = AddedLocalPrimitiveSceneInfos.Num() - 1;

SIZE_T InsertProxyHash = AddedLocalPrimitiveSceneInfos[StartIndex]->Proxy->GetTypeHash();

while (StartIndex > 0 && AddedLocalPrimitiveSceneInfos[StartIndex - 1]->Proxy->GetTypeHash() == InsertProxyHash)

{

StartIndex--;

}

for (int AddIndex = StartIndex; AddIndex < AddedLocalPrimitiveSceneInfos.Num(); AddIndex++)

{

FPrimitiveSceneInfo* PrimitiveSceneInfo = AddedLocalPrimitiveSceneInfos[AddIndex];

Primitives.Add(PrimitiveSceneInfo);

const FMatrix LocalToWorld = PrimitiveSceneInfo->Proxy->GetLocalToWorld();

PrimitiveTransforms.Add(LocalToWorld);

PrimitiveSceneProxies.Add(PrimitiveSceneInfo->Proxy);

PrimitiveBounds.AddUninitialized();

PrimitiveFlagsCompact.AddUninitialized();

PrimitiveVisibilityIds.AddUninitialized();

PrimitiveOcclusionFlags.AddUninitialized();

PrimitiveComponentIds.AddUninitialized();

PrimitiveVirtualTextureFlags.AddUninitialized();

PrimitiveVirtualTextureLod.AddUninitialized();

PrimitiveOcclusionBounds.AddUninitialized();

PrimitivesNeedingStaticMeshUpdate.Add(false);

const int SourceIndex = PrimitiveSceneProxies.Num() - 1;

PrimitiveSceneInfo->PackedIndex = SourceIndex;

AddPrimitiveToUpdateGPU(*this, SourceIndex);

}

bool EntryFound = false;

int BroadIndex = -1;

//broad phase search for a matching type

for (BroadIndex = TypeOffsetTable.Num() - 1; BroadIndex >= 0; BroadIndex--)

{

// example how the prefix sum of the tails could look like

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,2,1,1,1,7,4,8]

// TypeOffsetTable[3,8,12,15,16,17,18]

if (TypeOffsetTable[BroadIndex].PrimitiveSceneProxyType == InsertProxyHash)

{

EntryFound = true;

break;

}

}

//new type encountered

if (EntryFound == false)

{

BroadIndex = TypeOffsetTable.Num();

if (BroadIndex)

{

FTypeOffsetTableEntry Entry = TypeOffsetTable[BroadIndex - 1];

//adding to the end of the list and offset of the tail (will will be incremented once during the while loop)

TypeOffsetTable.Push(FTypeOffsetTableEntry(InsertProxyHash, Entry.Offset));

}

else

{

//starting with an empty list and offset zero (will will be incremented once during the while loop)

TypeOffsetTable.Push(FTypeOffsetTableEntry(InsertProxyHash, 0));

}

}

{

SCOPED_NAMED_EVENT(FScene_SwapPrimitiveSceneInfos, FColor::Turquoise);

for (int AddIndex = StartIndex; AddIndex < AddedLocalPrimitiveSceneInfos.Num(); AddIndex++)

{

int SourceIndex = AddedLocalPrimitiveSceneInfos[AddIndex]->PackedIndex;

for (int TypeIndex = BroadIndex; TypeIndex < TypeOffsetTable.Num(); TypeIndex++)

{

FTypeOffsetTableEntry& NextEntry = TypeOffsetTable[TypeIndex];

int DestIndex = NextEntry.Offset++; //prepare swap and increment

// example swap chain of inserting a type of 6 at the end

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,2,1,1,1,7,4,8,6]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,1,1,1,7,4,8,2]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,7,4,8,1]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,1,4,8,7]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,1,7,8,4]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,1,7,4,8]

if (DestIndex != SourceIndex)

{

checkfSlow(SourceIndex > DestIndex, TEXT("Corrupted Prefix Sum [%d, %d]"), SourceIndex, DestIndex);

Primitives[DestIndex]->PackedIndex = SourceIndex;

Primitives[SourceIndex]->PackedIndex = DestIndex;

TArraySwapElements(Primitives, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveTransforms, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveSceneProxies, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveBounds, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveFlagsCompact, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveVisibilityIds, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveOcclusionFlags, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveComponentIds, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveVirtualTextureFlags, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveVirtualTextureLod, DestIndex, SourceIndex);

TArraySwapElements(PrimitiveOcclusionBounds, DestIndex, SourceIndex);

TBitArraySwapElements(PrimitivesNeedingStaticMeshUpdate, DestIndex, SourceIndex);

AddPrimitiveToUpdateGPU(*this, DestIndex);

}

}

}

}

CheckPrimitiveArrays();

for (int AddIndex = StartIndex; AddIndex < AddedLocalPrimitiveSceneInfos.Num(); AddIndex++)

{

FPrimitiveSceneInfo* PrimitiveSceneInfo = AddedLocalPrimitiveSceneInfos[AddIndex];

FScopeCycleCounter Context(PrimitiveSceneInfo->Proxy->GetStatId());

int32 PrimitiveIndex = PrimitiveSceneInfo->PackedIndex;

// Add the primitive to its shadow parent's linked list of children.

// Note: must happen before AddToScene because AddToScene depends on LightingAttachmentRoot

PrimitiveSceneInfo->LinkAttachmentGroup();

}

{

SCOPED_NAMED_EVENT(FScene_AddPrimitiveSceneInfoToScene, FColor::Turquoise);

if (GIsEditor)

{

FPrimitiveSceneInfo::AddToScene(RHICmdList, this, TArrayView<FPrimitiveSceneInfo*>(&AddedLocalPrimitiveSceneInfos[StartIndex], AddedLocalPrimitiveSceneInfos.Num() - StartIndex), true);

}

else

{

const bool bAddToDrawLists = !(CVarDoLazyStaticMeshUpdate.GetValueOnRenderThread());

if (bAddToDrawLists)

{

FPrimitiveSceneInfo::AddToScene(RHICmdList, this, TArrayView<FPrimitiveSceneInfo*>(&AddedLocalPrimitiveSceneInfos[StartIndex], AddedLocalPrimitiveSceneInfos.Num() - StartIndex), true, true, bAsyncCreateLPIs);

}

else

{

FPrimitiveSceneInfo::AddToScene(RHICmdList, this, TArrayView<FPrimitiveSceneInfo*>(&AddedLocalPrimitiveSceneInfos[StartIndex], AddedLocalPrimitiveSceneInfos.Num() - StartIndex), true, false, bAsyncCreateLPIs);

for (int AddIndex = StartIndex; AddIndex < AddedLocalPrimitiveSceneInfos.Num(); AddIndex++)

{

FPrimitiveSceneInfo* PrimitiveSceneInfo = AddedLocalPrimitiveSceneInfos[AddIndex];

PrimitiveSceneInfo->BeginDeferredUpdateStaticMeshes();

}

}

}

}

for (int AddIndex = StartIndex; AddIndex < AddedLocalPrimitiveSceneInfos.Num(); AddIndex++)

{

FPrimitiveSceneInfo* PrimitiveSceneInfo = AddedLocalPrimitiveSceneInfos[AddIndex];

int32 PrimitiveIndex = PrimitiveSceneInfo->PackedIndex;

if (PrimitiveSceneInfo->Proxy->IsMovable() && GetFeatureLevel() > ERHIFeatureLevel::ES3_1)

{

// We must register the initial LocalToWorld with the velocity state.

// In the case of a moving component with MarkRenderStateDirty() called every frame, UpdateTransform will never happen.

VelocityData.UpdateTransform(PrimitiveSceneInfo, PrimitiveTransforms[PrimitiveIndex], PrimitiveTransforms[PrimitiveIndex]);

}

AddPrimitiveToUpdateGPU(*this, PrimitiveIndex);

bPathTracingNeedsInvalidation = true;

DistanceFieldSceneData.AddPrimitive(PrimitiveSceneInfo);

// Flush virtual textures touched by primitive

PrimitiveSceneInfo->FlushRuntimeVirtualTexture();

// Set LOD parent information if valid

PrimitiveSceneInfo->LinkLODParentComponent();

// Update scene LOD tree

SceneLODHierarchy.UpdateNodeSceneInfo(PrimitiveSceneInfo->PrimitiveComponentId, PrimitiveSceneInfo);

}

AddedLocalPrimitiveSceneInfos.RemoveAt(StartIndex, AddedLocalPrimitiveSceneInfos.Num() - StartIndex);

}

}

// Update primitive transforms

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(UpdatePrimitiveTransform);

SCOPED_NAMED_EVENT(FScene_AddPrimitiveSceneInfos, FColor::Yellow);

SCOPE_CYCLE_COUNTER(STAT_UpdatePrimitiveTransformRenderThreadTime);

TArray<FPrimitiveSceneInfo*> UpdatedSceneInfosWithStaticDrawListUpdate;

TArray<FPrimitiveSceneInfo*> UpdatedSceneInfosWithoutStaticDrawListUpdate;

UpdatedSceneInfosWithStaticDrawListUpdate.Reserve(UpdatedTransforms.Num());

UpdatedSceneInfosWithoutStaticDrawListUpdate.Reserve(UpdatedTransforms.Num());

for (const auto& Transform : UpdatedTransforms)

{

FPrimitiveSceneProxy* PrimitiveSceneProxy = Transform.Key;

if (DeletedSceneInfos.Contains(PrimitiveSceneProxy->GetPrimitiveSceneInfo()))

{

continue;

}

check(PrimitiveSceneProxy->GetPrimitiveSceneInfo()->PackedIndex != INDEX_NONE);

const FBoxSphereBounds& WorldBounds = Transform.Value.WorldBounds;

const FBoxSphereBounds& LocalBounds = Transform.Value.LocalBounds;

const FMatrix& LocalToWorld = Transform.Value.LocalToWorld;

const FVector& AttachmentRootPosition = Transform.Value.AttachmentRootPosition;

FScopeCycleCounter Context(PrimitiveSceneProxy->GetStatId());

FPrimitiveSceneInfo* PrimitiveSceneInfo = PrimitiveSceneProxy->GetPrimitiveSceneInfo();

const bool bUpdateStaticDrawLists = !PrimitiveSceneProxy->StaticElementsAlwaysUseProxyPrimitiveUniformBuffer();

if (bUpdateStaticDrawLists)

{

UpdatedSceneInfosWithStaticDrawListUpdate.Push(PrimitiveSceneInfo);

}

else

{

UpdatedSceneInfosWithoutStaticDrawListUpdate.Push(PrimitiveSceneInfo);

}

PrimitiveSceneInfo->FlushRuntimeVirtualTexture();

// Remove the primitive from the scene at its old location

// (note that the octree update relies on the bounds not being modified yet).

PrimitiveSceneInfo->RemoveFromScene(bUpdateStaticDrawLists);

if (PrimitiveSceneInfo->Proxy->IsMovable() && GetFeatureLevel() > ERHIFeatureLevel::ES3_1)

{

VelocityData.UpdateTransform(PrimitiveSceneInfo, LocalToWorld, PrimitiveSceneProxy->GetLocalToWorld());

}

// Update the primitive transform.

PrimitiveSceneProxy->SetTransform(LocalToWorld, WorldBounds, LocalBounds, AttachmentRootPosition);

PrimitiveTransforms[PrimitiveSceneInfo->PackedIndex] = LocalToWorld;

if (!RHISupportsVolumeTextures(GetFeatureLevel())

&& (PrimitiveSceneProxy->IsMovable() || PrimitiveSceneProxy->NeedsUnbuiltPreviewLighting() || PrimitiveSceneProxy->GetLightmapType() == ELightmapType::ForceVolumetric))

{

PrimitiveSceneInfo->MarkIndirectLightingCacheBufferDirty();

}

AddPrimitiveToUpdateGPU(*this, PrimitiveSceneInfo->PackedIndex);

DistanceFieldSceneData.UpdatePrimitive(PrimitiveSceneInfo);

// If the primitive has static mesh elements, it should have returned true from ShouldRecreateProxyOnUpdateTransform!

check(!(bUpdateStaticDrawLists && PrimitiveSceneInfo->StaticMeshes.Num()));

}

// Re-add the primitive to the scene with the new transform.

if (UpdatedSceneInfosWithStaticDrawListUpdate.Num() > 0)

{

FPrimitiveSceneInfo::AddToScene(RHICmdList, this, UpdatedSceneInfosWithStaticDrawListUpdate, true, true, bAsyncCreateLPIs);

}

if (UpdatedSceneInfosWithoutStaticDrawListUpdate.Num() > 0)

{

FPrimitiveSceneInfo::AddToScene(RHICmdList, this, UpdatedSceneInfosWithoutStaticDrawListUpdate, false, true, bAsyncCreateLPIs);

for (FPrimitiveSceneInfo* PrimitiveSceneInfo : UpdatedSceneInfosWithoutStaticDrawListUpdate)

{

PrimitiveSceneInfo->FlushRuntimeVirtualTexture();

}

}

if (AsyncCreateLightPrimitiveInteractionsTask && AsyncCreateLightPrimitiveInteractionsTask->GetTask().HasPendingPrimitives())

{

check(GAsyncCreateLightPrimitiveInteractions);

AsyncCreateLightPrimitiveInteractionsTask->StartBackgroundTask();

}

for (const auto& Transform : OverridenPreviousTransforms)

{

FPrimitiveSceneInfo* PrimitiveSceneInfo = Transform.Key;

VelocityData.OverridePreviousTransform(PrimitiveSceneInfo->PrimitiveComponentId, Transform.Value);

}

}

(......)

}

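In summary, FScene::UpdateAllPrimitiveSceneInfos removes and adds primitives and updates all primitive data, including transforms, custom data, distance field data, and so on.

Interestingly, removal and addition rely on the type-sorted PrimitiveSceneProxies array together with TypeOffsetTable, which reduces the number of element moves or swaps. It is a neat trick. The comments in the code already show the swap process clearly:

// Removal: the removed element is swapped to the end of its own type's run, then past each following type's run, until it reaches the end of the list.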

// PrimitiveSceneProxies[0,0,0,6,X,6,6,6,2,2,2,2,1,1,1,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,X,2,2,2,1,1,1,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,X,1,1,1,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,X,7,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,7,X,4,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,7,4,X,8]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,1,1,1,7,4,8,X]

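// Addition: the new element is first appended to the end of the list, then swapped into the slot right after its own type's run; each displaced element is in turn moved to the end of its own run.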

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,2,2,2,2,1,1,1,7,4,8,6]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,1,1,1,7,4,8,2]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,7,4,8,1]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,1,4,8,7]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,1,7,8,4]

// PrimitiveSceneProxies[0,0,0,6,6,6,6,6,6,2,2,2,2,1,1,1,7,4,8]
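The swap chains above can be reproduced with a small stand-alone container. This is a simplified sketch, not the engine code: `TypeGroupedArray`, `TypeEntry`, `Add` and `RemoveAt` are hypothetical names, each item is reduced to its type id, and only one array is kept instead of the many parallel arrays that FScene swaps together. The point it illustrates is that each operation costs at most one swap per type bucket instead of shifting every following element.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// One bucket: a type id plus the index one past the last item of that type
// (a prefix sum of bucket sizes, like TypeOffsetTable).
struct TypeEntry { int Type; int Offset; };

struct TypeGroupedArray
{
    std::vector<int> Items;        // stand-in for PrimitiveSceneProxies (type id only)
    std::vector<TypeEntry> Table;  // stand-in for TypeOffsetTable

    std::size_t FindBucket(int Type) const
    {
        std::size_t Index = 0;
        while (Index < Table.size() && Table[Index].Type != Type) { ++Index; }
        return Index;              // == Table.size() when the type is unknown
    }

    void Add(int Type)
    {
        Items.push_back(Type);     // append first, then swap into place
        const int SourceIndex = static_cast<int>(Items.size()) - 1;
        const std::size_t Broad = FindBucket(Type);
        if (Broad == Table.size()) // new type: empty bucket at the tail
        {
            Table.push_back({Type, Table.empty() ? 0 : Table.back().Offset});
        }
        for (std::size_t i = Broad; i < Table.size(); ++i)
        {
            const int DestIndex = Table[i].Offset++;   // grow this bucket by one slot
            if (DestIndex != SourceIndex) { std::swap(Items[DestIndex], Items[SourceIndex]); }
        }
    }

    void RemoveAt(int SourceIndex)
    {
        assert(SourceIndex >= 0 && SourceIndex < static_cast<int>(Items.size()));
        const std::size_t Broad = FindBucket(Items[SourceIndex]);
        for (std::size_t i = Broad; i < Table.size(); ++i)
        {
            const int DestIndex = --Table[i].Offset;   // shrink this bucket by one slot
            if (DestIndex != SourceIndex)
            {
                std::swap(Items[DestIndex], Items[SourceIndex]);
                SourceIndex = DestIndex;               // the removed item keeps moving right
            }
        }
        Items.pop_back();          // the removed item is now the last element
        const int Previous = Broad > 0 ? Table[Broad - 1].Offset : 0;
        if (Table[Broad].Offset == Previous)           // drop a bucket that became empty
        {
            Table.erase(Table.begin() + static_cast<std::ptrdiff_t>(Broad));
        }
    }
};
```

For example, adding the types 0,0,0,6,6,2,1 and then another 6 leaves the items grouped as 0,0,0,6,6,6,2,1 after two swaps, without shifting the tail of the array.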

In addition, the index of every primitive that was added, removed, or modified is passed to AddPrimitiveToUpdateGPU, which puts it on the pending-update list:

// Engine\Source\Runtime\Renderer\Private\GPUScene.cpp

void AddPrimitiveToUpdateGPU(FScene& Scene, int32 PrimitiveId)

{

if (UseGPUScene(GMaxRHIShaderPlatform, Scene.GetFeatureLevel()))

{

if (PrimitiveId + 1 > Scene.GPUScene.PrimitivesMarkedToUpdate.Num())

{

const int32 NewSize = Align(PrimitiveId + 1, 64);

Scene.GPUScene.PrimitivesMarkedToUpdate.Add(0, NewSize - Scene.GPUScene.PrimitivesMarkedToUpdate.Num());

}

// Add the primitive index to PrimitivesToUpdate and set its bit in PrimitivesMarkedToUpdate

if (!Scene.GPUScene.PrimitivesMarkedToUpdate[PrimitiveId])

{

Scene.GPUScene.PrimitivesToUpdate.Add(PrimitiveId);

Scene.GPUScene.PrimitivesMarkedToUpdate[PrimitiveId] = true;

}

}

}
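The pattern used by AddPrimitiveToUpdateGPU, a list of dirty indices plus a bit per index to reject duplicates, can be sketched in isolation. The version below is a minimal illustration using standard containers instead of TArray and TBitArray; `DirtyPrimitiveSet`, `MarkDirty` and `AlignUp` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Round Value up to the next multiple of Alignment (Alignment > 0).
inline std::size_t AlignUp(std::size_t Value, std::size_t Alignment)
{
    return (Value + Alignment - 1) / Alignment * Alignment;
}

struct DirtyPrimitiveSet
{
    std::vector<std::int32_t> ToUpdate;  // plays the role of PrimitivesToUpdate
    std::vector<bool> Marked;            // plays the role of PrimitivesMarkedToUpdate

    void MarkDirty(std::int32_t PrimitiveId)
    {
        assert(PrimitiveId >= 0);
        const std::size_t Needed = static_cast<std::size_t>(PrimitiveId) + 1;
        if (Needed > Marked.size())
        {
            // Grow the bit array in steps of 64 bits, as the listing above does.
            Marked.resize(AlignUp(Needed, 64), false);
        }
        // The bit makes the duplicate check O(1), so an index is queued at most once.
        if (!Marked[static_cast<std::size_t>(PrimitiveId)])
        {
            ToUpdate.push_back(PrimitiveId);
            Marked[static_cast<std::size_t>(PrimitiveId)] = true;
        }
    }
};
```

Marking the same primitive several times in one frame (for instance once per swap during removal) therefore queues a single upload for it.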

Once GPUScene's PrimitivesToUpdate and PrimitivesMarkedToUpdate have collected the indices of every primitive that needs updating, the data is synced to the GPU after InitViews in FDeferredShadingSceneRenderer::Render:

void FDeferredShadingSceneRenderer::Render(FRHICommandListImmediate& RHICmdList)

{

// Update the GPUScene data (CPU side)

Scene->UpdateAllPrimitiveSceneInfos(RHICmdList, true);

(......)

// Initialize the view data

bDoInitViewAftersPrepass = InitViews(RHICmdList, BasePassDepthStencilAccess, ILCTaskData, UpdateViewCustomDataEvents);

(......)

// Sync the CPU-side GPUScene data to the GPU.

UpdateGPUScene(RHICmdList, *Scene);

(......)

}

Step into UpdateGPUScene to see the actual sync logic:

// Engine\Source\Runtime\Renderer\Private\GPUScene.cpp

void UpdateGPUScene(FRHICommandListImmediate& RHICmdList, FScene& Scene)

{

// Dispatch to the implementation that matches the GPUScene storage type.

if (GPUSceneUseTexture2D(Scene.GetShaderPlatform()))

{

UpdateGPUSceneInternal<FTextureRWBuffer2D>(RHICmdList, Scene);

}

else

{

UpdateGPUSceneInternal<FRWBufferStructured>(RHICmdList, Scene);

}

}

// ResourceType is the template parameter: either FTextureRWBuffer2D or FRWBufferStructured.

template<typename ResourceType>

void UpdateGPUSceneInternal(FRHICommandListImmediate& RHICmdList, FScene& Scene)

{

if (UseGPUScene(GMaxRHIShaderPlatform, Scene.GetFeatureLevel()))

{

// Should all primitive data be synced?

if (GGPUSceneUploadEveryFrame || Scene.GPUScene.bUpdateAllPrimitives)

{

for (int32 Index : Scene.GPUScene.PrimitivesToUpdate)

{

Scene.GPUScene.PrimitivesMarkedToUpdate[Index] = false;

}

Scene.GPUScene.PrimitivesToUpdate.Reset();

for (int32 i = 0; i < Scene.Primitives.Num(); i++)

{

Scene.GPUScene.PrimitivesToUpdate.Add(i);

}

Scene.GPUScene.bUpdateAllPrimitives = false;

}

bool bResizedPrimitiveData = false;

bool bResizedLightmapData = false;

// Get the GPU mirror resource: GPUScene.PrimitiveBuffer or GPUScene.PrimitiveTexture

ResourceType* MirrorResourceGPU = GetMirrorGPU<ResourceType>(Scene);

// Grow the resource if it is too small. Growth policy: at least 256 elements, rounded up to a power of two.

{

const uint32 SizeReserve = FMath::RoundUpToPowerOfTwo( FMath::Max( Scene.Primitives.Num(), 256 ) );

// If the resource is too small, a new one is created and the old data is copied into it.

bResizedPrimitiveData = ResizeResourceIfNeeded(RHICmdList, *MirrorResourceGPU, SizeReserve * sizeof(FPrimitiveSceneShaderData::Data), TEXT("PrimitiveData"));

}

{

const uint32 SizeReserve = FMath::RoundUpToPowerOfTwo( FMath::Max( Scene.GPUScene.LightmapDataAllocator.GetMaxSize(), 256 ) );

bResizedLightmapData = ResizeResourceIfNeeded(RHICmdList, Scene.GPUScene.LightmapDataBuffer, SizeReserve * sizeof(FLightmapSceneShaderData::Data), TEXT("LightmapData"));

}

const int32 NumPrimitiveDataUploads = Scene.GPUScene.PrimitivesToUpdate.Num();

int32 NumLightmapDataUploads = 0;

if (NumPrimitiveDataUploads > 0)

{

// Gather all data to update into PrimitiveUploadBuffer. A buffer has a maximum size, so a single upload is limited too; anything beyond that limit is uploaded in batches.

const int32 MaxPrimitivesUploads = GetMaxPrimitivesUpdate(NumPrimitiveDataUploads, FPrimitiveSceneShaderData::PrimitiveDataStrideInFloat4s);

for (int32 PrimitiveOffset = 0; PrimitiveOffset < NumPrimitiveDataUploads; PrimitiveOffset += MaxPrimitivesUploads)

{

SCOPED_DRAW_EVENTF(RHICmdList, UpdateGPUScene, TEXT("UpdateGPUScene PrimitivesToUpdate and Offset = %u %u"), NumPrimitiveDataUploads, PrimitiveOffset);

Scene.GPUScene.PrimitiveUploadBuffer.Init(MaxPrimitivesUploads, sizeof(FPrimitiveSceneShaderData::Data), true, TEXT("PrimitiveUploadBuffer"));

// Within one batch of at most the maximum size, fill the primitive data into PrimitiveUploadBuffer one primitive at a time.

for (int32 IndexUpdate = 0; (IndexUpdate < MaxPrimitivesUploads) && ((IndexUpdate + PrimitiveOffset) < NumPrimitiveDataUploads); ++IndexUpdate)

{

int32 Index = Scene.GPUScene.PrimitivesToUpdate[IndexUpdate + PrimitiveOffset];

// PrimitivesToUpdate may contain a stale out of bounds index, as we don't remove update request on primitive removal from scene.

if (Index < Scene.PrimitiveSceneProxies.Num())

{

FPrimitiveSceneProxy* RESTRICT PrimitiveSceneProxy = Scene.PrimitiveSceneProxies[Index];

NumLightmapDataUploads += PrimitiveSceneProxy->GetPrimitiveSceneInfo()->GetNumLightmapDataEntries();

FPrimitiveSceneShaderData PrimitiveSceneData(PrimitiveSceneProxy);

Scene.GPUScene.PrimitiveUploadBuffer.Add(Index, &PrimitiveSceneData.Data[0]);

}

Scene.GPUScene.PrimitivesMarkedToUpdate[Index] = false;

}

// Transition the UAV to the compute pipeline so the GPU can copy the data during upload.

if (bResizedPrimitiveData)

{

RHICmdList.TransitionResource(EResourceTransitionAccess::ERWBarrier, EResourceTransitionPipeline::EComputeToCompute, MirrorResourceGPU->UAV);

}

else

{

RHICmdList.TransitionResource(EResourceTransitionAccess::EWritable, EResourceTransitionPipeline::EGfxToCompute, MirrorResourceGPU->UAV);

}

// Upload the data to the GPU.

{

Scene.GPUScene.PrimitiveUploadBuffer.ResourceUploadTo(RHICmdList, *MirrorResourceGPU, true);

}

}

// Transition the UAV to the graphics pipeline so shaders can read it when rendering objects.

RHICmdList.TransitionResource(EResourceTransitionAccess::EReadable, EResourceTransitionPipeline::EComputeToGfx, MirrorResourceGPU->UAV);

}

// Validate the MirrorResourceGPU data against the CPU-side data (slow GPU readback, for debugging).

if (GGPUSceneValidatePrimitiveBuffer && (Scene.GPUScene.PrimitiveBuffer.NumBytes > 0 || Scene.GPUScene.PrimitiveTexture.NumBytes > 0))

{

//UE_LOG(LogRenderer, Warning, TEXT("r.GPUSceneValidatePrimitiveBuffer enabled, doing slow readback from GPU"));

uint32 Stride = 0;

FPrimitiveSceneShaderData* InstanceBufferCopy = (FPrimitiveSceneShaderData*)(FPrimitiveSceneShaderData*)LockResource(*MirrorResourceGPU, Stride);

const int32 TotalNumberPrimitives = Scene.PrimitiveSceneProxies.Num();

int32 MaxPrimitivesUploads = GetMaxPrimitivesUpdate(TotalNumberPrimitives, FPrimitiveSceneShaderData::PrimitiveDataStrideInFloat4s);

for (int32 IndexOffset = 0; IndexOffset < TotalNumberPrimitives; IndexOffset += MaxPrimitivesUploads)

{

for (int32 Index = 0; (Index < MaxPrimitivesUploads) && ((Index + IndexOffset) < TotalNumberPrimitives); ++Index)

{

FPrimitiveSceneShaderData PrimitiveSceneData(Scene.PrimitiveSceneProxies[Index + IndexOffset]);

for (int i = 0; i < FPrimitiveSceneShaderData::PrimitiveDataStrideInFloat4s; i++)

{

check(PrimitiveSceneData.Data[i] == InstanceBufferCopy[Index].Data[i]);

}

}

InstanceBufferCopy += Stride / sizeof(FPrimitiveSceneShaderData);

}

UnlockResourceGPUScene(*MirrorResourceGPU);

}

(......)

}

}

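A few points about the code above are worth noting:

- To avoid recreating GPU resources every time they turn out to be slightly too small, GPU Scene grows (reserves) its resources with a simple policy: the size is never less than 256 and is rounded up to the next power of two, so the capacity effectively doubles. This is somewhat similar to how std::vector grows.

- UE's default RHI is modeled on DirectX 11. When transitioning resources, EResourceTransitionPipeline only distinguishes two pipelines, Gfx and Compute, and ignores the Copy type of DirectX 12:

// Engine\Source\Runtime\RHI\Public\RHI.h

enum class EResourceTransitionPipeline

{

EGfxToCompute,

EComputeToGfx,

EGfxToGfx,

EComputeToCompute,

};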
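The growth policy described above (at least 256, rounded up to a power of two) can be illustrated with a minimal standalone sketch. The helper names `RoundUpToPowerOfTwo` and `ComputeReservedCount` below are hypothetical and written in plain C++ for illustration; they are not the engine's own functions, and the unit being counted (entries, elements, bytes) is left abstract.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helper: smallest power of two that is >= Value (returns 1 for 0).
inline std::uint32_t RoundUpToPowerOfTwo(std::uint32_t Value)
{
    // Guard against overflow: the loop below cannot represent 2^32.
    assert(Value <= 0x80000000u);
    std::uint32_t Result = 1;
    while (Result < Value)
    {
        Result <<= 1;
    }
    return Result;
}

// Hypothetical helper: how many entries to reserve for a required count.
// The result is never below 256 and is always a power of two, so a buffer
// that keeps growing is recreated only when the requirement crosses a
// power-of-two boundary.
inline std::uint32_t ComputeReservedCount(std::uint32_t RequiredCount)
{
    constexpr std::uint32_t MinReservedCount = 256;
    return RoundUpToPowerOfTwo(std::max(RequiredCount, MinReservedCount));
}
```

With this policy, a scene that grows from 257 to 512 required entries keeps the same reserved size of 512, so no resource recreation is triggered in that range.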

4.3.4 InitViews

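InitViews runs immediately after the GPU Scene update. It handles a large amount of important rendering logic: visibility determination, collecting scene primitive data and flags, creating visible mesh commands, initializing the data each pass needs for rendering, and so on. The code is as follows: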

// Engine\Source\Runtime\Renderer\Private\SceneVisibility.cpp

bool FDeferredShadingSceneRenderer::InitViews(FRHICommandListImmediate& RHICmdList, FExclusiveDepthStencil::Type BasePassDepthStencilAccess, struct FILCUpdatePrimTaskData& ILCTaskData, FGraphEventArray& UpdateViewCustomDataEvents)

{

// Pre-visibility frame setup.

PreVisibilityFrameSetup(RHICmdList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Initialize the FX system.

{

if (Scene->FXSystem && Scene->FXSystem->RequiresEarlyViewUniformBuffer() && Views.IsValidIndex(0))

{

// Make sure the first view's RHI resources have been initialized.

Views[0].InitRHIResources();

Scene->FXSystem->PostInitViews(RHICmdList, Views[0].ViewUniformBuffer, Views[0].AllowGPUParticleUpdate() && !ViewFamily.EngineShowFlags.HitProxies);

}

}

// Create the visible mesh commands, one set per view.

FViewVisibleCommandsPerView ViewCommandsPerView;

ViewCommandsPerView.SetNum(Views.Num());

// Compute view visibility.

ComputeViewVisibility(RHICmdList, BasePassDepthStencilAccess, ViewCommandsPerView, DynamicIndexBufferForInitViews, DynamicVertexBufferForInitViews, DynamicReadBufferForInitViews);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Indirect capsule shadows.

CreateIndirectCapsuleShadows();

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Initialize the sky atmosphere.

if (ShouldRenderSkyAtmosphere(Scene, ViewFamily.EngineShowFlags))

{

InitSkyAtmosphereForViews(RHICmdList);

}

// Post-visibility frame setup.

PostVisibilityFrameSetup(ILCTaskData);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

(......)

// Whether part of the view initialization is deferred until after the PrePass. This is decided by GDoInitViewsLightingAfterPrepass, which can be set with the console variable r.DoInitViewsLightingAfterPrepass.

bool bDoInitViewAftersPrepass = !!GDoInitViewsLightingAfterPrepass;

if (!bDoInitViewAftersPrepass)

{

InitViewsPossiblyAfterPrepass(RHICmdList, ILCTaskData, UpdateViewCustomDataEvents);

}

{

// Initialize the uniform buffers and RHI resources of every view.

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

if (View.ViewState)

{

if (!View.ViewState->ForwardLightingResources)

{

View.ViewState->ForwardLightingResources.Reset(new FForwardLightingViewResources());

}

View.ForwardLightingResources = View.ViewState->ForwardLightingResources.Get();

}

else

{

View.ForwardLightingResourcesStorage.Reset(new FForwardLightingViewResources());

View.ForwardLightingResources = View.ForwardLightingResourcesStorage.Get();

}

#if RHI_RAYTRACING

View.IESLightProfileResource = View.ViewState ? &View.ViewState->IESLightProfileResources : nullptr;

#endif

// Set the pre-exposure before initializing the constant buffers.

if (View.ViewState)

{

View.ViewState->UpdatePreExposure(View);

}

// Initialize the view's RHI resources.

View.InitRHIResources();

}

}

// Volumetric fog.

SetupVolumetricFog();

// Broadcast the start-of-render event.

OnStartRender(RHICmdList);

return bDoInitViewAftersPrepass;

}
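The return value of InitViews only makes sense together with its caller. The following standalone sketch shows the control-flow pattern: the lighting-related part of view initialization runs either inside InitViews or after the PrePass, depending on one flag. `FFrameSketch` and its members are hypothetical stand-ins, and the caller logic in `Render` is an assumption made for illustration; it is not shown in the listing above.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for the renderer; it only records the stage order.
struct FFrameSketch
{
    // Plays the role of GDoInitViewsLightingAfterPrepass.
    bool bDeferLightingInit = false;
    std::vector<std::string> Stages;

    void InitViewsPossiblyAfterPrepass() { Stages.push_back("InitViewsLighting"); }

    // Same shape as InitViews above: run the deferrable part immediately
    // unless deferral was requested, then report the decision to the caller.
    bool InitViews()
    {
        Stages.push_back("InitViews");
        if (!bDeferLightingInit)
        {
            InitViewsPossiblyAfterPrepass();
        }
        return bDeferLightingInit;
    }

    void RenderPrePass() { Stages.push_back("PrePass"); }

    // Assumed caller: finishes the deferred initialization after the PrePass.
    void Render()
    {
        const bool bDoInitViewsAfterPrepass = InitViews();
        RenderPrePass();
        if (bDoInitViewsAfterPrepass)
        {
            InitViewsPossiblyAfterPrepass();
        }
    }
};
```

Either way the deferrable step runs exactly once per frame; the flag only changes whether it happens before or after the PrePass.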

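InitViews itself does not appear to contain much logic. In fact, most of the logic is spread across the important functions it calls. Start with PreVisibilityFrameSetup: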

// Engine\Source\Runtime\Renderer\Private\SceneVisibility.cpp

void FDeferredShadingSceneRenderer::PreVisibilityFrameSetup(FRHICommandListImmediate& RHICmdList)

{

// Possible stencil dither optimization approach

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

View.bAllowStencilDither = bDitheredLODTransitionsUseStencil;

}

FSceneRenderer::PreVisibilityFrameSetup(RHICmdList);

}

void FSceneRenderer::PreVisibilityFrameSetup(FRHICommandListImmediate& RHICmdList)

{

// Notify the RHI that scene rendering has begun.

RHICmdList.BeginScene();

{

static auto CVar = IConsoleManager::Get().FindConsoleVariable(TEXT("r.DoLazyStaticMeshUpdate"));

const bool DoLazyStaticMeshUpdate = (CVar->GetInt() && !GIsEditor);

// Lazy static mesh update.

if (DoLazyStaticMeshUpdate)

{

QUICK_SCOPE_CYCLE_COUNTER(STAT_PreVisibilityFrameSetup_EvictionForLazyStaticMeshUpdate);

static int32 RollingRemoveIndex = 0;

static int32 RollingPassShrinkIndex = 0;

if (RollingRemoveIndex >= Scene->Primitives.Num())

{

RollingRemoveIndex = 0;

RollingPassShrinkIndex++;

if (RollingPassShrinkIndex >= UE_ARRAY_COUNT(Scene->CachedDrawLists))

{

RollingPassShrinkIndex = 0;

}

// Periodically shrink the SparseArray containing cached mesh draw commands which we are causing to be regenerated with UpdateStaticMeshes

Scene->CachedDrawLists[RollingPassShrinkIndex].MeshDrawCommands.Shrink();

}

const int32 NumRemovedPerFrame = 10;

TArray<FPrimitiveSceneInfo*, TInlineAllocator<10>> SceneInfos;

for (int32 NumRemoved = 0; NumRemoved < NumRemovedPerFrame && RollingRemoveIndex < Scene->Primitives.Num(); NumRemoved++, RollingRemoveIndex++)

{

SceneInfos.Add(Scene->Primitives[RollingRemoveIndex]);

}

FPrimitiveSceneInfo::UpdateStaticMeshes(RHICmdList, Scene, SceneInfos, false);

}

}

// Transition the GPU skin cache.

RunGPUSkinCacheTransition(RHICmdList, Scene, EGPUSkinCacheTransition::FrameSetup);

// Initialize Groom hair strands.

if (IsHairStrandsEnable(Scene->GetShaderPlatform()) && Views.Num() > 0)

{

const EWorldType::Type WorldType = Views[0].Family->Scene->GetWorld()->WorldType;

const FShaderDrawDebugData* ShaderDrawData = &Views[0].ShaderDrawData;

auto ShaderMap = GetGlobalShaderMap(FeatureLevel);

RunHairStrandsInterpolation(RHICmdList, WorldType, Scene->GetGPUSkinCache(), ShaderDrawData, ShaderMap, EHairStrandsInterpolationType::SimulationStrands, nullptr);

}

// FX system.

if (Scene->FXSystem && Views.IsValidIndex(0))

{

Scene->FXSystem->PreInitViews(RHICmdList, Views[0].AllowGPUParticleUpdate() && !ViewFamily.EngineShowFlags.HitProxies);

}

(......)

// Set up motion blur parameters (this also covers the TAA handling).

for(int32 ViewIndex = 0;ViewIndex < Views.Num();ViewIndex++)

{

FViewInfo& View = Views[ViewIndex];

FSceneViewState* ViewState = View.ViewState;

check(View.VerifyMembersChecks());

// Once per render increment the occlusion frame counter.

if (ViewState)

{

ViewState->OcclusionFrameCounter++;

}

// HighResScreenshot should get best results so we don't do the occlusion optimization based on the former frame

extern bool GIsHighResScreenshot;

const bool bIsHitTesting = ViewFamily.EngineShowFlags.HitProxies;

// Don't test occlusion queries in collision viewmode as they can be bigger then the rendering bounds.

const bool bCollisionView = ViewFamily.EngineShowFlags.CollisionVisibility || ViewFamily.EngineShowFlags.CollisionPawn;

if (GIsHighResScreenshot || !DoOcclusionQueries(FeatureLevel) || bIsHitTesting || bCollisionView)

{

View.bDisableQuerySubmissions = true;

View.bIgnoreExistingQueries = true;

}

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

// set up the screen area for occlusion

float NumPossiblePixels = SceneContext.UseDownsizedOcclusionQueries() && IsValidRef(SceneContext.GetSmallDepthSurface()) ?

(float)View.ViewRect.Width() / SceneContext.GetSmallColorDepthDownsampleFactor() * (float)View.ViewRect.Height() / SceneContext.GetSmallColorDepthDownsampleFactor() :

View.ViewRect.Width() * View.ViewRect.Height();

View.OneOverNumPossiblePixels = NumPossiblePixels > 0.0 ? 1.0f / NumPossiblePixels : 0.0f;

// Still need no jitter to be set for temporal feedback on SSR (it is enabled even when temporal AA is off).

check(View.TemporalJitterPixels.X == 0.0f);

check(View.TemporalJitterPixels.Y == 0.0f);

// Cache the projection matrix before AA is applied

View.ViewMatrices.SaveProjectionNoAAMatrix();

if (ViewState)

{

check(View.bStatePrevViewInfoIsReadOnly);

View.bStatePrevViewInfoIsReadOnly = ViewFamily.bWorldIsPaused || ViewFamily.EngineShowFlags.HitProxies || bFreezeTemporalHistories;

ViewState->SetupDistanceFieldTemporalOffset(ViewFamily);

if (!View.bStatePrevViewInfoIsReadOnly && !bFreezeTemporalSequences)

{

ViewState->FrameIndex++;

}

if (View.OverrideFrameIndexValue.IsSet())

{

ViewState->FrameIndex = View.OverrideFrameIndexValue.GetValue();

}

}

// Subpixel jitter for temporal AA

int32 CVarTemporalAASamplesValue = CVarTemporalAASamples.GetValueOnRenderThread();

bool bTemporalUpsampling = View.PrimaryScreenPercentageMethod == EPrimaryScreenPercentageMethod::TemporalUpscale;

// Apply a sub pixel offset to the view.

if (View.AntiAliasingMethod == AAM_TemporalAA && ViewState && (CVarTemporalAASamplesValue > 0 || bTemporalUpsampling) && View.bAllowTemporalJitter)

{

float EffectivePrimaryResolutionFraction = float(View.ViewRect.Width()) / float(View.GetSecondaryViewRectSize().X);

// Compute number of TAA samples.

int32 TemporalAASamples = CVarTemporalAASamplesValue;

{

if (Scene->GetFeatureLevel() < ERHIFeatureLevel::SM5)

{

// Only support 2 samples for mobile temporal AA.

TemporalAASamples = 2;

}

else if (bTemporalUpsampling)

{