[Cats vs. Dogs Dataset] Using a learning-rate decay strategy and testing while training

- March 12, 2020

- Notes

Dataset download:

Link: https://pan.baidu.com/s/1l1AnBgkAAEhh0vI5_loWKw

Extraction code: 2xq4

Creating the dataset: https://www.cnblogs.com/xiximayou/p/12398285.html

Reading the dataset: https://www.cnblogs.com/xiximayou/p/12422827.html

Training: https://www.cnblogs.com/xiximayou/p/12448300.html

Saving the model and resuming training: https://www.cnblogs.com/xiximayou/p/12452624.html

Loading the saved model and testing: https://www.cnblogs.com/xiximayou/p/12459499.html

Splitting off a validation set and validating while training: https://www.cnblogs.com/xiximayou/p/12464738.html

The relationship between epoch, batch size and step: https://www.cnblogs.com/xiximayou/p/12405485.html

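A suitable learning rate is crucial for training a network. If the learning rate is too large, the parameters overshoot and bounce back and forth around the optimum, and training may fail to converge at all. If it is too small, the network converges slowly. A common practice is to start training with a relatively large learning rate and keep decaying it as training proceeds. There are many ways to decay the learning rate; here we only use simple ones.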
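To make this trade-off concrete, here is a toy sketch that is not part of the project code: plain gradient descent on the assumed objective f(x) = x², whose gradient is 2x. The function name `gradient_descent` and the chosen learning rates are illustrative only.

```python
def gradient_descent(lr, x0=1.0, steps=10):
    """Run plain gradient descent on f(x) = x**2 (gradient 2x); return all iterates.

    Each update is x <- x - lr * 2x = (1 - 2*lr) * x, so:
      lr < 0.5      -> x shrinks steadily toward the minimum at 0
      0.5 < lr < 1  -> x flips sign every step (oscillates) while shrinking
      lr > 1        -> |x| grows every step (diverges)
    """
    xs = [x0]
    x = x0
    for _ in range(steps):
        x = x - lr * 2 * x
        xs.append(x)
    return xs


for lr in (0.001, 0.1, 0.9, 1.1):
    xs = gradient_descent(lr)
    print(f"lr={lr}: x after 10 steps = {xs[-1]:.4g}")
```

With lr=0.001, x barely moves after 10 steps (too slow); lr=0.1 heads steadily toward 0; lr=0.9 oscillates around the optimum; lr=1.1 diverges.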

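The previous post split off a validation set. In this one we test while training, and save two checkpoints: the model from the last training epoch and the model with the highest test accuracy.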

First, the learning-rate decay strategy. Two options are shown here:

```python
# Option 1: StepLR
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=80, gamma=0.1)
# Option 2: MultiStepLR
scheduler = optim.lr_scheduler.MultiStepLR(optimizer, milestones=[80, 160], gamma=0.1)
```

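The first option multiplies the learning rate by 0.1 every 80 epochs.

The second option multiplies the learning rate by 0.1 at epoch 80 and again at epoch 160.

With the second option, for example, if the learning rate in epoch 1 is 0.1, then epochs 1 to 80 use 0.1, epochs 81 to 160 use 0.1 × 0.1 = 0.01, and epoch 161 onward uses 0.01 × 0.1 = 0.001.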
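The two schedules can be checked without a GPU. The sketch below is a pure-Python approximation of the closed-form learning rate of StepLR and MultiStepLR, assuming (as in this series) that `scheduler.step()` is called once at the end of every epoch, so during 1-indexed epoch e the scheduler has been stepped e - 1 times. The helper names `step_lr` and `multistep_lr` are hypothetical, not PyTorch API.

```python
from bisect import bisect_right


def step_lr(base_lr, epoch, step_size, gamma):
    """LR in effect during 1-indexed `epoch` for a StepLR-style schedule."""
    steps_done = epoch - 1  # scheduler.step() calls made before this epoch
    return base_lr * gamma ** (steps_done // step_size)


def multistep_lr(base_lr, epoch, milestones, gamma):
    """LR in effect during 1-indexed `epoch` for a MultiStepLR-style schedule.

    `milestones` must be sorted in increasing order.
    """
    steps_done = epoch - 1
    # Number of milestones already reached decides how many times gamma was applied
    return base_lr * gamma ** bisect_right(milestones, steps_done)


for epoch in (1, 80, 81, 160, 161, 241):
    print(epoch, step_lr(0.1, epoch, 80, 0.1), multistep_lr(0.1, epoch, [80, 160], 0.1))
```

The last row shows the practical difference: StepLR keeps decaying every 80 epochs (0.0001 at epoch 241), while MultiStepLR stops at 0.001 after its last milestone.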

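As a rule of thumb, the learning rate is decayed at roughly 1/3 and 2/3 of the total number of epochs: with 200 epochs, for example, around epochs 70 and 140. The best choice still depends on the situation.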
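A tiny hypothetical helper (`third_milestones` is not from the original code) that computes the 1/3 and 2/3 milestones with integer division. For 200 epochs it gives 66 and 133, which the rule of thumb above rounds to about 70 and 140; for 6 epochs it gives 2 and 4, the milestones used in main.py below.

```python
def third_milestones(num_epochs):
    """Milestones at roughly 1/3 and 2/3 of training, using integer division."""
    return [num_epochs // 3, 2 * num_epochs // 3]


print(third_milestones(200))
print(third_milestones(6))
```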

Next, we add the learning-rate decay strategy to main.py:

main.py

```python
import sys
# Make the project folder on Google Drive importable (Colab)
sys.path.append("/content/drive/My Drive/colab notebooks")

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision

from utils import rdata
from model import resnet
import train

# Fix random seeds for reproducibility
np.random.seed(0)
torch.manual_seed(0)
torch.cuda.manual_seed_all(0)
torch.backends.cudnn.deterministic = True
# benchmark=True lets cuDNN pick algorithms by timing, which can vary between runs;
# disable it when reproducibility matters
torch.backends.cudnn.benchmark = False

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

batch_size = 128
train_loader, val_loader, test_loader = rdata.load_dataset(batch_size)

model = torchvision.models.resnet18(pretrained=False)
# Replace the final fully connected layer with a 2-class (cat/dog) head
model.fc = nn.Linear(model.fc.in_features, 2, bias=False)
model.cuda()

# Number of training epochs
num_epochs = 6
# Initial learning rate
learning_rate = 0.1
# Loss function
criterion = nn.CrossEntropyLoss()
# Optimizer: for simplicity, SGD with momentum
optimizer = optim.SGD(params=model.parameters(), lr=learning_rate,
                      momentum=0.9, weight_decay=1e-4)
# Multiply the learning rate by 0.1 at milestones 2 and 4
scheduler = optim.lr_scheduler.MultiStepLR(optimizer, [2, 4], 0.1)

print("Training set size:", len(train_loader.dataset))
# print("Validation set size:", len(val_loader.dataset))
print("Test set size:", len(test_loader.dataset))


def main():
    trainer = train.Trainer(criterion, optimizer, model)
    trainer.loop(num_epochs, train_loader, val_loader, test_loader, scheduler)


if __name__ == "__main__":
    main()
```

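Here we train for only 6 epochs, with milestones at 2 and 4. Because `scheduler.step()` runs at the end of each epoch, epochs 1 and 2 use 0.1, epochs 3 and 4 use 0.01, and epochs 5 and 6 use 0.001.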

train.py

```python
import os

import torch


class Trainer:
    def __init__(self, criterion, optimizer, model):
        self.criterion = criterion
        self.optimizer = optimizer
        self.model = model

    def get_lr(self):
        # Return the current learning rate (of the first parameter group)
        for param_group in self.optimizer.param_groups:
            return param_group['lr']

    def loop(self, num_epochs, train_loader, val_loader, test_loader, scheduler=None, acc1=0.0):
        # acc1: best test accuracy so far (pass the saved value when resuming)
        self.acc1 = acc1
        for epoch in range(1, num_epochs + 1):
            lr = self.get_lr()
            print("epoch:{},lr:{}".format(epoch, lr))
            self.train(train_loader, epoch, num_epochs)
            # self.val(val_loader, epoch, num_epochs)
            self.test(test_loader, epoch, num_epochs)
            # Since PyTorch 1.1.0: step the scheduler after optimizer.step(), once per epoch
            if scheduler is not None:
                scheduler.step()

    def train(self, dataloader, epoch, num_epochs):
        self.model.train()
        with torch.enable_grad():
            self._iteration_train(dataloader, epoch, num_epochs)

    def val(self, dataloader, epoch, num_epochs):
        self.model.eval()
        with torch.no_grad():
            self._iteration_val(dataloader, epoch, num_epochs)

    def test(self, dataloader, epoch, num_epochs):
        self.model.eval()
        with torch.no_grad():
            self._iteration_test(dataloader, epoch, num_epochs)

    def _iteration_train(self, dataloader, epoch, num_epochs):
        total_step = len(dataloader)
        tot_loss = 0.0
        correct = 0
        for i, (images, labels) in enumerate(dataloader):
            images = images.cuda()
            labels = labels.cuda()
            # Forward pass
            outputs = self.model(images)
            _, preds = torch.max(outputs.data, 1)
            loss = self.criterion(outputs, labels)
            # Backward pass and parameter update
            self.optimizer.zero_grad()
            loss.backward()
            self.optimizer.step()
            # loss is a batch mean, so epoch_loss below is about (mean loss / batch size)
            tot_loss += loss.data
            correct += torch.sum(preds == labels.data).to(torch.float32)
            if (i + 1) % 2 == 0:
                print('Epoch: [{}/{}], Step: [{}/{}], Loss: {:.4f}'
                      .format(epoch, num_epochs, i + 1, total_step, loss.item()))
        ### Epoch info ###
        epoch_loss = tot_loss / len(dataloader.dataset)
        print('train loss: {:.4f}'.format(epoch_loss))
        epoch_acc = correct / len(dataloader.dataset)
        print('train acc: {:.4f}'.format(epoch_acc))
        # Save the model after the last epoch so training can be resumed
        if epoch == num_epochs:
            state = {
                'model': self.model.state_dict(),
                'optimizer': self.optimizer.state_dict(),
                'epoch': epoch,
                'train_loss': epoch_loss,
                'train_acc': epoch_acc,
            }
            save_path = "/content/drive/My Drive/colab notebooks/output/"
            torch.save(state, os.path.join(save_path, "resnet18_final.t7"))

    def _iteration_val(self, dataloader, epoch, num_epochs):
        total_step = len(dataloader)
        tot_loss = 0.0
        correct = 0
        for i, (images, labels) in enumerate(dataloader):
            images = images.cuda()
            labels = labels.cuda()
            # Forward pass only
            outputs = self.model(images)
            _, preds = torch.max(outputs.data, 1)
            loss = self.criterion(outputs, labels)
            tot_loss += loss.data
            correct += torch.sum(preds == labels.data).to(torch.float32)
            if (i + 1) % 2 == 0:
                print('Epoch: [{}/{}], Step: [{}/{}], Loss: {:.4f}'
                      .format(epoch, num_epochs, i + 1, total_step, loss.item()))
        ### Epoch info ###
        epoch_loss = tot_loss / len(dataloader.dataset)
        print('val loss: {:.4f}'.format(epoch_loss))
        epoch_acc = correct / len(dataloader.dataset)
        print('val acc: {:.4f}'.format(epoch_acc))

    def _iteration_test(self, dataloader, epoch, num_epochs):
        total_step = len(dataloader)
        tot_loss = 0.0
        correct = 0
        for i, (images, labels) in enumerate(dataloader):
            images = images.cuda()
            labels = labels.cuda()
            # Forward pass only
            outputs = self.model(images)
            _, preds = torch.max(outputs.data, 1)
            loss = self.criterion(outputs, labels)
            tot_loss += loss.data
            correct += torch.sum(preds == labels.data).to(torch.float32)
            if (i + 1) % 2 == 0:
                print('Epoch: [{}/{}], Step: [{}/{}], Loss: {:.4f}'
                      .format(epoch, num_epochs, i + 1, total_step, loss.item()))
        ### Epoch info ###
        epoch_loss = tot_loss / len(dataloader.dataset)
        print('test loss: {:.4f}'.format(epoch_loss))
        epoch_acc = correct / len(dataloader.dataset)
        print('test acc: {:.4f}'.format(epoch_acc))
        # Save the model whenever the test accuracy beats the best so far
        if epoch_acc > self.acc1:
            self.acc1 = epoch_acc
            state = {
                "model": self.model.state_dict(),
                "optimizer": self.optimizer.state_dict(),
                "epoch": epoch,
                "epoch_loss": epoch_loss,
                "epoch_acc": epoch_acc,
                "acc1": self.acc1,
            }
            save_path = "/content/drive/My Drive/colab notebooks/output/"
            print("Best test accuracy so far at epoch {}: {}".format(epoch, epoch_acc))
            torch.save(state, os.path.join(save_path, "resnet18_best.t7"))
```

First, we added `test()` and `_iteration_test()` for testing.
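One caveat about the logged losses: `nn.CrossEntropyLoss()` returns the mean loss over a batch by default, and the `_iteration_*` methods add up these batch means and then divide by the number of samples. The printed loss is therefore roughly the per-sample loss divided by the batch size (with a batch size of 128, a mean cross-entropy of about 0.7 would print as about 0.0055). A minimal pure-Python sketch of a size-weighted average, where `epoch_mean_loss` and `article_style_loss` are hypothetical helpers and each batch is described by an assumed `(batch_mean_loss, batch_size)` pair:

```python
def epoch_mean_loss(batches):
    """Average per-sample loss over an epoch.

    `batches` holds (batch_mean_loss, batch_size) pairs, e.g. collected as
    (loss.item(), images.size(0)) inside the loop. Weighting each batch mean
    by its size also handles a smaller final batch correctly.
    """
    total = 0.0
    n = 0
    for mean_loss, size in batches:
        total += mean_loss * size  # undo the per-batch averaging
        n += size
    return total / n


def article_style_loss(batches):
    """What the Trainer computes: sum of batch means divided by the sample count."""
    return sum(m for m, _ in batches) / sum(s for _, s in batches)


batches = [(0.7, 128), (0.7, 128), (0.7, 44)]
print(epoch_mean_loss(batches), article_style_loss(batches))
```

Inside the PyTorch loop, the equivalent change would be accumulating `loss.item() * images.size(0)` instead of `loss.data`; the numbers in the log further down were produced by the unweighted version.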

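One thing to watch out for: if `scheduler.step()` is called before `optimizer.step()`, PyTorch emits this warning: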

UserWarning: Detected call of `lr_scheduler.step()` before `optimizer.step()`. In PyTorch 1.1.0 and later, you should call them in the opposite order: `optimizer.step()` before `lr_scheduler.step()`. Failure to do this will result in PyTorch skipping the first value of the learning rate schedule.

In other words, the loop should look like this:

```python
scheduler = ...
for epoch in range(100):
    train(...)
    validate(...)
    scheduler.step()
```

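So in PyTorch 1.1.0 and later, `scheduler.step()` has to come last, after the optimizer updates of the epoch. We also defined `get_lr()` to read the current learning rate and print it at the start of each epoch. In addition, we save the model from the last training epoch, so that training can be resumed, and the model with the highest test accuracy, for later use.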
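The "keep the best checkpoint" rule in `_iteration_test` can be simulated without any model: save whenever this epoch's test accuracy is strictly greater than the best so far. The helper `best_checkpoint_epochs` is hypothetical; the accuracies in the usage line are the rounded per-epoch test accuracies from the log below.

```python
def best_checkpoint_epochs(test_accs, best_acc=0.0):
    """Return the 1-indexed epochs at which a 'best' checkpoint would be saved.

    `best_acc` plays the role of Trainer.loop's `acc1` argument, so a resumed
    run only saves once it beats the previously stored best accuracy.
    """
    saved = []
    for epoch, acc in enumerate(test_accs, start=1):
        if acc > best_acc:
            best_acc = acc
            saved.append(epoch)
    return saved


print(best_checkpoint_epochs([0.5402, 0.5478, 0.6198, 0.6291, 0.6257, 0.6295]))
```

Epoch 5 is skipped because its accuracy (0.6257) is below the best so far (0.6291), which matches the missing "best" line for that epoch in the log.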

The final output is shown below, with the per-step lines omitted:

```
Training set size: 18255
Test set size: 4750
epoch:1,lr:0.1
train loss: 0.0086
train acc: 0.5235
test loss: 0.0055
test acc: 0.5402
Best test accuracy so far at epoch 1: 0.5402105450630188
epoch:2,lr:0.1
train loss: 0.0054
train acc: 0.5562
test loss: 0.0055
test acc: 0.5478
Best test accuracy so far at epoch 2: 0.547789454460144
epoch:3,lr:0.010000000000000002
train loss: 0.0052
train acc: 0.6098
test loss: 0.0053
test acc: 0.6198
Best test accuracy so far at epoch 3: 0.6197894811630249
epoch:4,lr:0.010000000000000002
train loss: 0.0051
train acc: 0.6150
test loss: 0.0051
test acc: 0.6291
Best test accuracy so far at epoch 4: 0.6290526390075684
epoch:5,lr:0.0010000000000000002
train loss: 0.0051
train acc: 0.6222
test loss: 0.0052
test acc: 0.6257
epoch:6,lr:0.0010000000000000002
train loss: 0.0051
train acc: 0.6224
test loss: 0.0052
test acc: 0.6295
Best test accuracy so far at epoch 6: 0.6294736862182617
```

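Interestingly, the decayed learning rate is not printed as a clean 0.01 or 0.001 but with a stray trailing digit (0.010000000000000002). This is ordinary floating-point rounding: 0.1 has no exact binary representation, so each multiplication by 0.1 carries a tiny error. To print the lr or the accuracy with a fixed number of decimal places, use a format spec of the form `{:.nf}`, where n is the number of digits, e.g. `{:.4f}`.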
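A quick check in plain Python, outside PyTorch, reproducing the trailing digit and the fix with a format spec. The accuracy value is taken from the log above; the variable names are illustrative.

```python
lr = 0.1
decayed = lr * 0.1  # one decay step with gamma = 0.1

print(decayed)                    # 0.010000000000000002
print("{:.4f}".format(decayed))   # 0.0100
print("epoch:{},lr:{:.4f},acc:{:.4f}".format(3, decayed, 0.6294736862182617))
```

Changing the `print` in `Trainer.loop` to `"epoch:{},lr:{:.4f}".format(epoch, lr)` would make the log read `lr:0.0100` instead.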

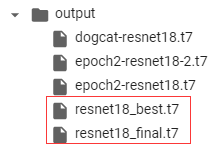

Finally, let's check the saved models:

Both checkpoints are indeed there.

Next post: visualizing the training and testing process.