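Istio (Part 13): A Hands-On Istio Case Study with Online Boutique

1. Module Overview

In this module, we deploy a microservices application called Online Boutique and use it to try out different Istio features.

Online Boutique is a cloud-native microservices demo application made up of 10 microservices. It is a web-based e-commerce application in which users can browse items, add them to a shopping cart, and purchase them.

2. System Environment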

| Server OS version | Docker version | Kubernetes (k8s) cluster version | Istio version | CPU architecture |
|---|---|---|---|---|
| CentOS Linux release 7.4.1708 (Core) | Docker version 20.10.12 | v1.21.9 | Istio 1.14 | x86_64 |

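3. Creating the Kubernetes (k8s) Cluster

3.1 Creating the Kubernetes (k8s) Cluster

We need a working Kubernetes cluster. For how to install and deploy one, see the blog post "Installing and Deploying a Kubernetes (k8s) Cluster on CentOS 7": https://www.cnblogs.com/renshengdezheli/p/16686769.html

3.2 Kubernetes Cluster Environment

Kubernetes cluster architecture: k8scloude1 is the master node; k8scloude2 and k8scloude3 are worker nodes.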

| Server | OS version | CPU architecture | Processes | Role |
|---|---|---|---|---|
| k8scloude1/192.168.110.130 | CentOS Linux release 7.4.1708 (Core) | x86_64 | docker,kube-apiserver,etcd,kube-scheduler,kube-controller-manager,kubelet,kube-proxy,coredns,calico | k8s master node |
| k8scloude2/192.168.110.129 | CentOS Linux release 7.4.1708 (Core) | x86_64 | docker,kubelet,kube-proxy,calico | k8s worker node |
| k8scloude3/192.168.110.128 | CentOS Linux release 7.4.1708 (Core) | x86_64 | docker,kubelet,kube-proxy,calico | k8s worker node |

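4. Installing Istio

4.1 Installing Istio

The latest Istio release is 1.15. Because our Kubernetes cluster runs 1.21.9, we pick a release that supports that version and install Istio 1.14.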

[root@k8scloude1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8scloude1 Ready control-plane,master 288d v1.21.9

k8scloude2 Ready <none> 288d v1.21.9

k8scloude3 Ready <none> 288d v1.21.9

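We install Istio's demo configuration profile because it includes all the core components and enables tracing and logging, which makes it convenient for exploring the different Istio features.

For a detailed Istio installation walkthrough, see the blog post "Istio (Part 2): Installing and Deploying Istio 1.14 on a Kubernetes (k8s) Cluster": https://www.cnblogs.com/renshengdezheli/p/16836404.html

Alternatively, you can install Istio on the Kubernetes cluster with the GetMesh CLI as follows.

Download the GetMesh CLI:

curl -sL https://istio.tetratelabs.io/getmesh/install.sh | bash

Install Istio:

getmesh istioctl install --set profile=demo

Once Istio is installed, create a namespace named online-boutique; the new project is deployed in that namespace. Label the namespace with istio-injection=enabled to turn on automatic sidecar injection.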

# Create the online-boutique namespace

[root@k8scloude1 ~]# kubectl create ns online-boutique

namespace/online-boutique created

# Switch to the namespace

[root@k8scloude1 ~]# kubens online-boutique

Context "kubernetes-admin@kubernetes" modified.

Active namespace is "online-boutique".

# Enable automatic sidecar injection for the online-boutique namespace

[root@k8scloude1 ~]# kubectl label ns online-boutique istio-injection=enabled

namespace/online-boutique labeled

[root@k8scloude1 ~]# kubectl get ns -l istio-injection --show-labels

NAME STATUS AGE LABELS

online-boutique Active 16m istio-injection=enabled,kubernetes.io/metadata.name=online-boutique

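5. Deploying the Online Boutique Application

5.1 Deploying the Online Boutique Application

With the cluster and Istio ready, we can clone the Online Boutique repository. The Istio and Kubernetes versions are as follows: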

[root@k8scloude1 ~]# istioctl version

client version: 1.14.3

control plane version: 1.14.3

data plane version: 1.14.3 (1 proxies)

[root@k8scloude1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8scloude1 Ready control-plane,master 283d v1.21.9

k8scloude2 Ready <none> 283d v1.21.9

k8scloude3 Ready <none> 283d v1.21.9

Clone the repository with git:

# Install git

[root@k8scloude1 ~]# yum -y install git

# Check the git version

[root@k8scloude1 ~]# git version

git version 1.8.3.1

# Create the online-boutique directory to hold the project

[root@k8scloude1 ~]# mkdir online-boutique

[root@k8scloude1 ~]# cd online-boutique/

[root@k8scloude1 online-boutique]# pwd

/root/online-boutique

# Clone the code with git

[root@k8scloude1 online-boutique]# git clone https://github.com/GoogleCloudPlatform/microservices-demo.git

Cloning into 'microservices-demo'...

remote: Enumerating objects: 8195, done.

remote: Counting objects: 100% (332/332), done.

remote: Compressing objects: 100% (167/167), done.

remote: Total 8195 (delta 226), reused 241 (delta 161), pack-reused 7863

Receiving objects: 100% (8195/8195), 30.55 MiB | 154.00 KiB/s, done.

Resolving deltas: 100% (5823/5823), done.

[root@k8scloude1 online-boutique]# ls

microservices-demo

Go to the microservices-demo directory. The main installation files are istio-manifests.yaml and kubernetes-manifests.yaml, both under release/.

[root@k8scloude1 online-boutique]# cd microservices-demo/

[root@k8scloude1 microservices-demo]# ls

cloudbuild.yaml CODEOWNERS docs istio-manifests kustomize pb release SECURITY.md src

CODE_OF_CONDUCT.md CONTRIBUTING.md hack kubernetes-manifests LICENSE README.md renovate.json skaffold.yaml terraform

[root@k8scloude1 microservices-demo]# cd release/

[root@k8scloude1 release]# ls

istio-manifests.yaml kubernetes-manifests.yaml

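Check which images are required; you can pull them in advance on the worker nodes of the k8s cluster.

For ways to download gcr.io images, see the blog post "Easily Downloading k8s.gcr.io, gcr.io, and quay.io Images": https://www.cnblogs.com/renshengdezheli/p/16814395.html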

[root@k8scloude1 release]# ls

istio-manifests.yaml kubernetes-manifests.yaml

[root@k8scloude1 release]# vim kubernetes-manifests.yaml

# The project needs 13 images; the gcr.io prefix marks images hosted on Google's registry

[root@k8scloude1 release]# grep image kubernetes-manifests.yaml

image: gcr.io/google-samples/microservices-demo/emailservice:v0.4.0

image: gcr.io/google-samples/microservices-demo/checkoutservice:v0.4.0

image: gcr.io/google-samples/microservices-demo/recommendationservice:v0.4.0

image: gcr.io/google-samples/microservices-demo/frontend:v0.4.0

image: gcr.io/google-samples/microservices-demo/paymentservice:v0.4.0

image: gcr.io/google-samples/microservices-demo/productcatalogservice:v0.4.0

image: gcr.io/google-samples/microservices-demo/cartservice:v0.4.0

image: busybox:latest

image: gcr.io/google-samples/microservices-demo/loadgenerator:v0.4.0

image: gcr.io/google-samples/microservices-demo/currencyservice:v0.4.0

image: gcr.io/google-samples/microservices-demo/shippingservice:v0.4.0

image: redis:alpine

image: gcr.io/google-samples/microservices-demo/adservice:v0.4.0

[root@k8scloude1 release]# grep image kubernetes-manifests.yaml | uniq | wc -l

13
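
A small aside on the count above: `uniq` only collapses adjacent duplicate lines, so the result is exact here only because every image line is different. The following is a minimal sketch of a count that also holds when duplicates are scattered through the manifest. The helper name `count_images` and the sample lines are illustrative assumptions, not part of the original walkthrough.

```shell
# Hypothetical helper: count distinct image references in manifest text read from stdin.
count_images() {
  # Keep only "image:" lines, de-duplicate them regardless of position, then count.
  n=$(grep -E '^[[:space:]]*image:' | sort -u | wc -l)
  # Arithmetic expansion strips the padding that some wc builds add.
  echo $((n))
}

# Sample input with a repeated, non-adjacent image line.
printf '%s\n' \
  'image: redis:alpine' \
  'image: busybox:latest' \
  'image: redis:alpine' | count_images   # prints 2
```

Against the real file this would be run as `count_images < kubernetes-manifests.yaml`.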

# Pull the images in advance on the k8s worker nodes, using k8scloude2 as an example

# Replace gcr.io with gcr.lank8s.cn; for example, gcr.io/google-samples/microservices-demo/emailservice:v0.4.0 becomes gcr.lank8s.cn/google-samples/microservices-demo/emailservice:v0.4.0

[root@k8scloude2 ~]# docker pull gcr.lank8s.cn/google-samples/microservices-demo/emailservice:v0.4.0

# ... pull the remaining images the same way ...

[root@k8scloude2 ~]# docker pull gcr.lank8s.cn/google-samples/microservices-demo/adservice:v0.4.0

# After the images are pulled, use sed to change gcr.io to gcr.lank8s.cn in kubernetes-manifests.yaml

[root@k8scloude1 release]# sed -i 's/gcr.io/gcr.lank8s.cn/' kubernetes-manifests.yaml

# All gcr.io image references in kubernetes-manifests.yaml have now been rewritten

[root@k8scloude1 release]# grep image kubernetes-manifests.yaml

image: gcr.lank8s.cn/google-samples/microservices-demo/emailservice:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/checkoutservice:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/recommendationservice:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/frontend:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/paymentservice:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/productcatalogservice:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/cartservice:v0.4.0

image: busybox:latest

image: gcr.lank8s.cn/google-samples/microservices-demo/loadgenerator:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/currencyservice:v0.4.0

image: gcr.lank8s.cn/google-samples/microservices-demo/shippingservice:v0.4.0

image: redis:alpine

image: gcr.lank8s.cn/google-samples/microservices-demo/adservice:v0.4.0
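
The sed expression used above works for this manifest, but its unescaped dot matches any character and it is not tied to image lines. As a hedged sketch, a slightly stricter variant could look like the following; `rewrite_registry` is a hypothetical helper name, and the mirror host is the one used in this post.

```shell
# Hypothetical helper: rewrite the registry host only inside "image:" references.
rewrite_registry() {
  # \. matches a literal dot; anchoring on "image:" leaves other text untouched.
  sed -E 's#(image:[[:space:]]*)gcr\.io/#\1gcr.lank8s.cn/#'
}

# Try it on two sample lines: only the gcr.io one should change.
printf '%s\n' \
  'image: gcr.io/google-samples/microservices-demo/frontend:v0.4.0' \
  'image: redis:alpine' | rewrite_registry
```

To apply it to the real file, one option is `rewrite_registry < kubernetes-manifests.yaml > kubernetes-manifests.mirror.yaml`, which keeps the original manifest intact for comparison.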

# istio-manifests.yaml contains no image references

[root@k8scloude1 release]# vim istio-manifests.yaml

[root@k8scloude1 release]# grep image istio-manifests.yaml

Create the Kubernetes resources:

[root@k8scloude1 release]# pwd

/root/online-boutique/microservices-demo/release

[root@k8scloude1 release]# ls

istio-manifests.yaml kubernetes-manifests.yaml

# Create the k8s resources in the online-boutique namespace

[root@k8scloude1 release]# kubectl apply -f /root/online-boutique/microservices-demo/release/kubernetes-manifests.yaml -n online-boutique

Check that all Pods are running:

[root@k8scloude1 release]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

adservice-9c6d67f96-txrsb 2/2 Running 0 85s 10.244.112.151 k8scloude2 <none> <none>

cartservice-6d7544dc98-86p9c 2/2 Running 0 86s 10.244.251.228 k8scloude3 <none> <none>

checkoutservice-5ff49769d4-5p2cn 2/2 Running 0 86s 10.244.112.148 k8scloude2 <none> <none>

currencyservice-5f56dd7456-lxjnz 2/2 Running 0 85s 10.244.251.241 k8scloude3 <none> <none>

emailservice-677bbb77d8-8ndsp 2/2 Running 0 86s 10.244.112.156 k8scloude2 <none> <none>

frontend-7d65884948-hnmh6 2/2 Running 0 86s 10.244.112.154 k8scloude2 <none> <none>

loadgenerator-77ffcbd84d-hhh2w 2/2 Running 0 85s 10.244.112.147 k8scloude2 <none> <none>

paymentservice-88f465d9d-nfxnc 2/2 Running 0 86s 10.244.112.149 k8scloude2 <none> <none>

productcatalogservice-8496676498-6zpfk 2/2 Running 0 86s 10.244.112.143 k8scloude2 <none> <none>

recommendationservice-555cdc5c84-j5w8f 2/2 Running 0 86s 10.244.251.227 k8scloude3 <none> <none>

redis-cart-6f65887b5d-42b8m 2/2 Running 0 85s 10.244.251.236 k8scloude3 <none> <none>

shippingservice-6ff94bd6-tm6d2 2/2 Running 0 85s 10.244.251.242 k8scloude3 <none> <none>
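
READY 2/2 in the listing above means that both the application container and the injected sidecar are ready. Instead of reading the column by eye, a small filter can flag any Pod whose containers are not all ready. This is only a sketch: `unready_pods` is a hypothetical helper, and the second sample row is made up for illustration.

```shell
# Hypothetical helper: print the names of Pods whose READY column is not n/n.
unready_pods() {
  # Skip the header row, split READY (for example "2/2") on "/", and compare both halves.
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Sample input in the shape of `kubectl get pod` output.
printf '%s\n' \
  'NAME READY STATUS RESTARTS AGE' \
  'frontend-7d65884948-hnmh6 2/2 Running 0 86s' \
  'demo-pod-not-ready 1/2 Running 0 86s' | unready_pods   # prints demo-pod-not-ready
```

On a live cluster the same filter would be fed with `kubectl get pod -n online-boutique | unready_pods`; empty output means every Pod is fully ready.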

Create the Istio resources:

[root@k8scloude1 microservices-demo]# pwd

/root/online-boutique/microservices-demo

[root@k8scloude1 microservices-demo]# ls istio-manifests/

allow-egress-googleapis.yaml frontend-gateway.yaml frontend.yaml

[root@k8scloude1 microservices-demo]# kubectl apply -f ./istio-manifests

serviceentry.networking.istio.io/allow-egress-googleapis created

serviceentry.networking.istio.io/allow-egress-google-metadata created

gateway.networking.istio.io/frontend-gateway created

virtualservice.networking.istio.io/frontend-ingress created

virtualservice.networking.istio.io/frontend created

With everything deployed, we can get the ingress gateway's IP address and open the frontend service:

[root@k8scloude1 microservices-demo]# INGRESS_HOST="$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"

[root@k8scloude1 microservices-demo]# echo "$INGRESS_HOST"

192.168.110.190

[root@k8scloude1 microservices-demo]# kubectl get service -n istio-system istio-ingressgateway -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

istio-ingressgateway LoadBalancer 10.107.131.65 192.168.110.190 15021:30093/TCP,80:32126/TCP,443:30293/TCP,31400:30628/TCP,15443:30966/TCP 27d app=istio-ingressgateway,istio=ingressgateway

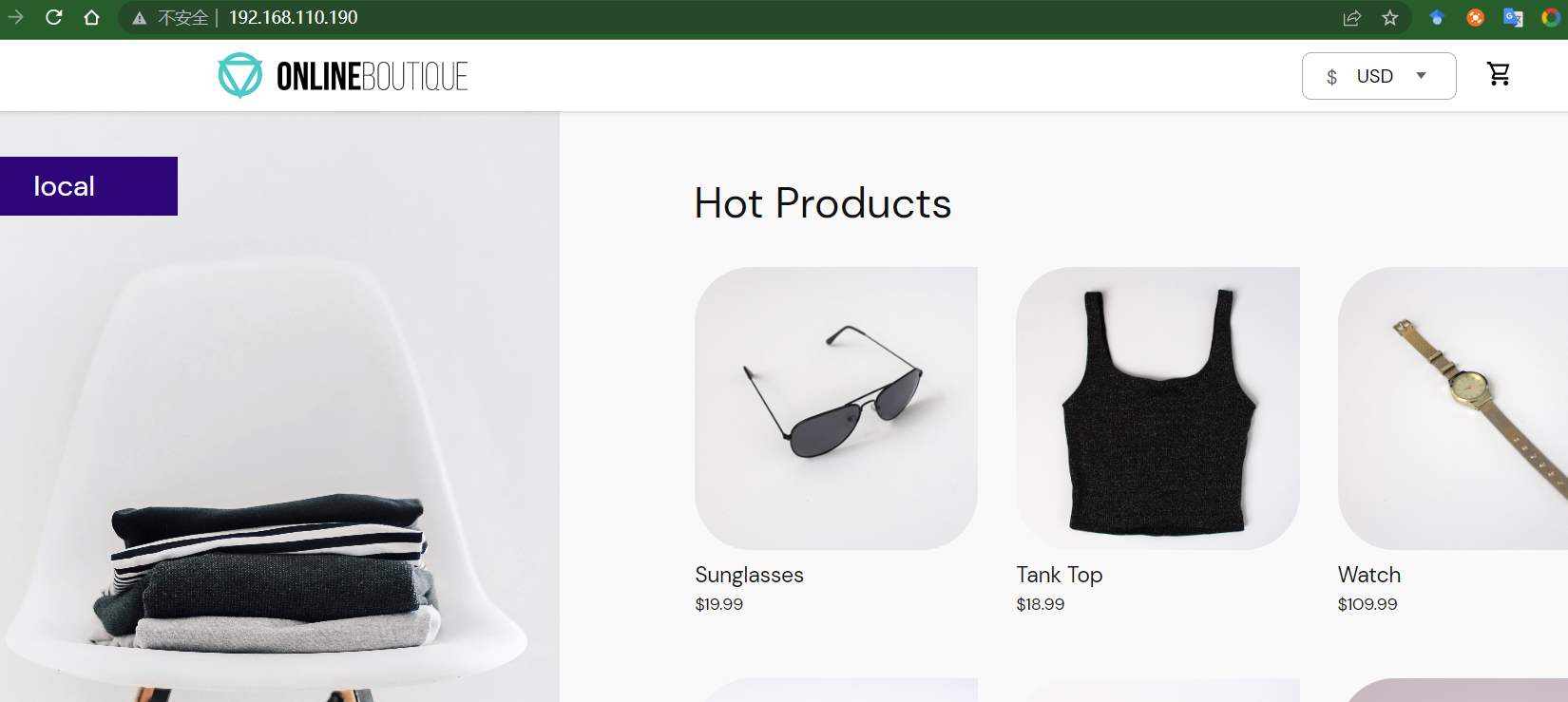

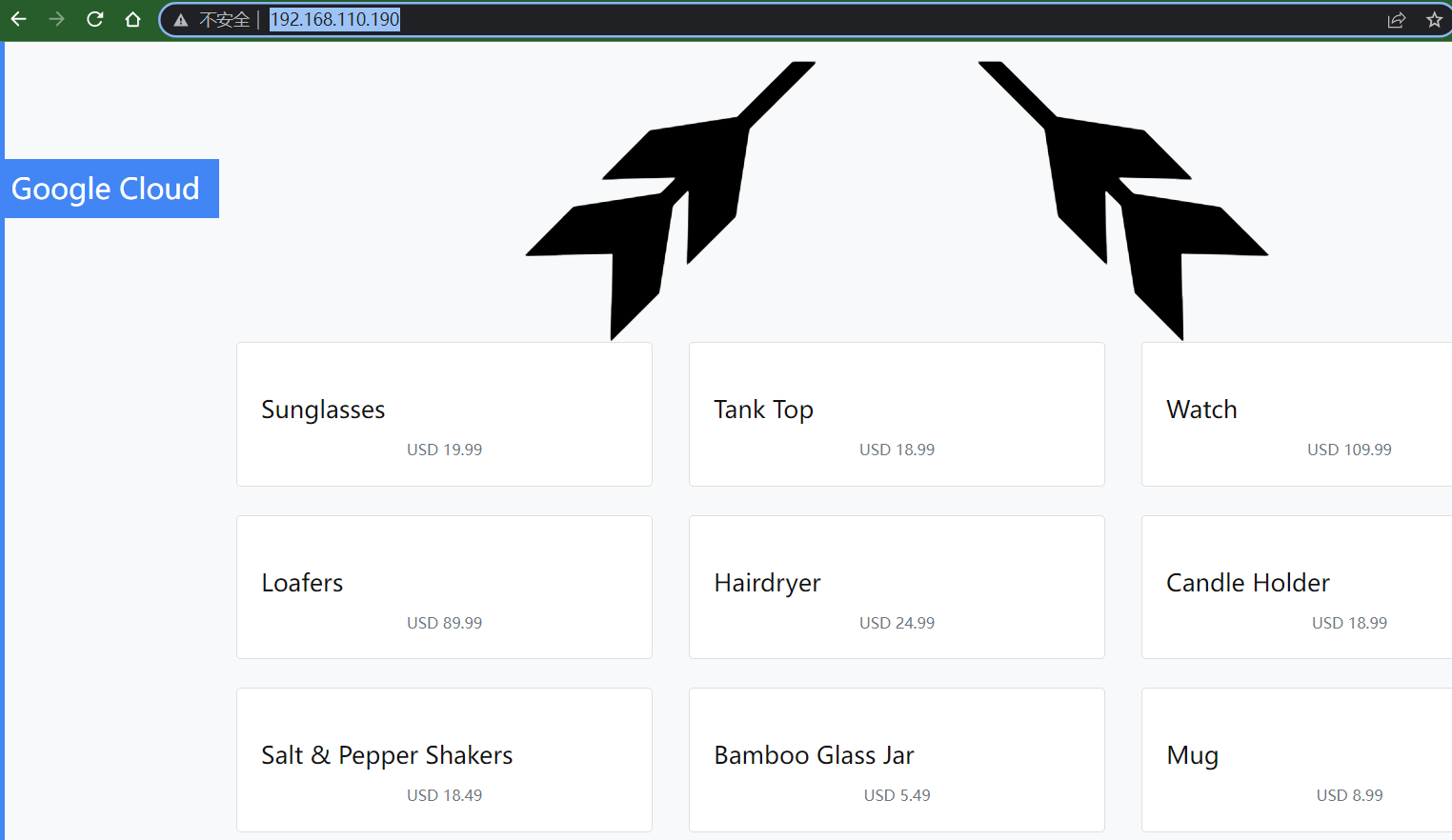

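Open INGRESS_HOST in a browser and you will see the frontend service. Visiting http://192.168.110.190/ shows the page in the screenshot.

The last thing to do is delete the frontend-external service. frontend-external is a LoadBalancer service that exposes the frontend. Since we are using Istio's ingress gateway, we no longer need this LoadBalancer service.

To delete the frontend-external service, run: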

[root@k8scloude1 ~]# kubectl get svc | grep frontend-external

frontend-external LoadBalancer 10.102.0.207 192.168.110.191 80:30173/TCP 4d15h

[root@k8scloude1 ~]# kubectl delete svc frontend-external

service "frontend-external" deleted

[root@k8scloude1 ~]# kubectl get svc | grep frontend-external

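The Online Boutique manifests also include a load generator that sends requests to all the services, which lets us simulate traffic to the site.

6. Deploying the Observability Tools

6.1 Deploying the Observability Tools

Next, we deploy the observability, distributed tracing, and data visualization tools. Use either of the two methods below.

For more detailed installation instructions for Prometheus, Grafana, Kiali, and Zipkin, see the blog post "Istio (Part 3): Service Mesh Observability with Prometheus, Grafana, Zipkin, and Kiali": https://www.cnblogs.com/renshengdezheli/p/16836943.html

# Method 1: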

[root@k8scloude1 ~]# kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.14/samples/addons/prometheus.yaml

[root@k8scloude1 ~]# kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.14/samples/addons/grafana.yaml

[root@k8scloude1 ~]# kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.14/samples/addons/kiali.yaml

[root@k8scloude1 ~]# kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.14/samples/addons/extras/zipkin.yaml

# Method 2: download the Istio release package istio-1.14.3-linux-amd64.tar.gz and install the add-ons from it

[root@k8scloude1 ~]# ls istio* -d

istio-1.14.3 istio-1.14.3-linux-amd64.tar.gz

[root@k8scloude1 ~]# cd istio-1.14.3/samples/addons/

[root@k8scloude1 addons]# pwd

/root/istio-1.14.3/samples/addons

[root@k8scloude1 addons]# ls

extras grafana.yaml jaeger.yaml kiali.yaml prometheus.yaml README.md

[root@k8scloude1 addons]# kubectl apply -f prometheus.yaml

[root@k8scloude1 addons]# kubectl apply -f grafana.yaml

[root@k8scloude1 addons]# kubectl apply -f kiali.yaml

[root@k8scloude1 addons]# ls extras/

prometheus-operator.yaml prometheus_vm_tls.yaml prometheus_vm.yaml zipkin.yaml

[root@k8scloude1 addons]# kubectl apply -f extras/zipkin.yaml

If you see the following error while installing Kiali, re-run the commands above:

No matches for kind "MonitoringDashboard" in version "monitoring.kiali.io/v1alpha1"

Prometheus, Grafana, Kiali, and Zipkin are installed in the istio-system namespace. We can open the Kiali UI with getmesh istioctl dashboard kiali.

Here we open the Kiali UI another way:

# Prometheus, Grafana, Kiali, and Zipkin are installed in the istio-system namespace

[root@k8scloude1 addons]# kubectl get pod -n istio-system

NAME READY STATUS RESTARTS AGE

grafana-6c5dc6df7c-cnc9w 1/1 Running 2 27h

istio-egressgateway-58949b7c84-k7v6f 1/1 Running 8 10d

istio-ingressgateway-75bc568988-69k8j 1/1 Running 6 3d21h

istiod-84d979766b-kz5sd 1/1 Running 14 10d

kiali-5db6985fb5-8t77v 1/1 Running 0 3m25s

prometheus-699b7cc575-dx6rp 2/2 Running 8 2d21h

zipkin-6cd5d58bcc-hxngj 1/1 Running 1 17h

# The kiali service is of type ClusterIP, so it cannot be reached from outside the cluster

[root@k8scloude1 addons]# kubectl get service -n istio-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana NodePort 10.100.151.232 <none> 3000:31092/TCP 27h

istio-egressgateway ClusterIP 10.102.56.241 <none> 80/TCP,443/TCP 10d

istio-ingressgateway LoadBalancer 10.107.131.65 192.168.110.190 15021:30093/TCP,80:32126/TCP,443:30293/TCP,31400:30628/TCP,15443:30966/TCP 10d

istiod ClusterIP 10.103.37.59 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 10d

kiali ClusterIP 10.109.42.120 <none> 20001/TCP,9090/TCP 7m42s

prometheus NodePort 10.101.141.187 <none> 9090:31755/TCP 2d21h

tracing ClusterIP 10.101.30.10 <none> 80/TCP 17h

zipkin NodePort 10.104.85.78 <none> 9411:30350/TCP 17h

# Change the kiali service type to NodePort so that it can be reached from outside the cluster

# Just change type: ClusterIP to type: NodePort

[root@k8scloude1 addons]# kubectl edit service kiali -n istio-system

service/kiali edited

# The kiali service is now of type NodePort; enter <node IP>:30754 in a browser to reach the Kiali web UI

[root@k8scloude1 addons]# kubectl get service -n istio-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana NodePort 10.100.151.232 <none> 3000:31092/TCP 27h

istio-egressgateway ClusterIP 10.102.56.241 <none> 80/TCP,443/TCP 10d

istio-ingressgateway LoadBalancer 10.107.131.65 192.168.110.190 15021:30093/TCP,80:32126/TCP,443:30293/TCP,31400:30628/TCP,15443:30966/TCP 10d

istiod ClusterIP 10.103.37.59 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 10d

kiali NodePort 10.109.42.120 <none> 20001:30754/TCP,9090:31573/TCP 8m42s

prometheus NodePort 10.101.141.187 <none> 9090:31755/TCP 2d21h

tracing ClusterIP 10.101.30.10 <none> 80/TCP 17h

zipkin NodePort 10.104.85.78 <none> 9411:30350/TCP 17h
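
The interactive `kubectl edit` step above can also be done non-interactively with `kubectl patch`, which is easier to repeat or script. The sketch below wraps that command in a hypothetical helper, `expose_as_nodeport`; the `KUBECTL` variable is likewise an assumption, added so the command can be previewed with `KUBECTL=echo` before it touches the cluster.

```shell
# KUBECTL defaults to the real binary; set KUBECTL=echo to preview the command instead.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: switch a Service to type NodePort.
# $1: service name, $2: namespace
expose_as_nodeport() {
  "$KUBECTL" patch service "$1" -n "$2" -p '{"spec":{"type":"NodePort"}}'
}

# Example call (not executed here):
#   expose_as_nodeport kiali istio-system
```

As with the manual edit, Kubernetes assigns the node port itself, so check `kubectl get service kiali -n istio-system` afterwards to see which port was chosen.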

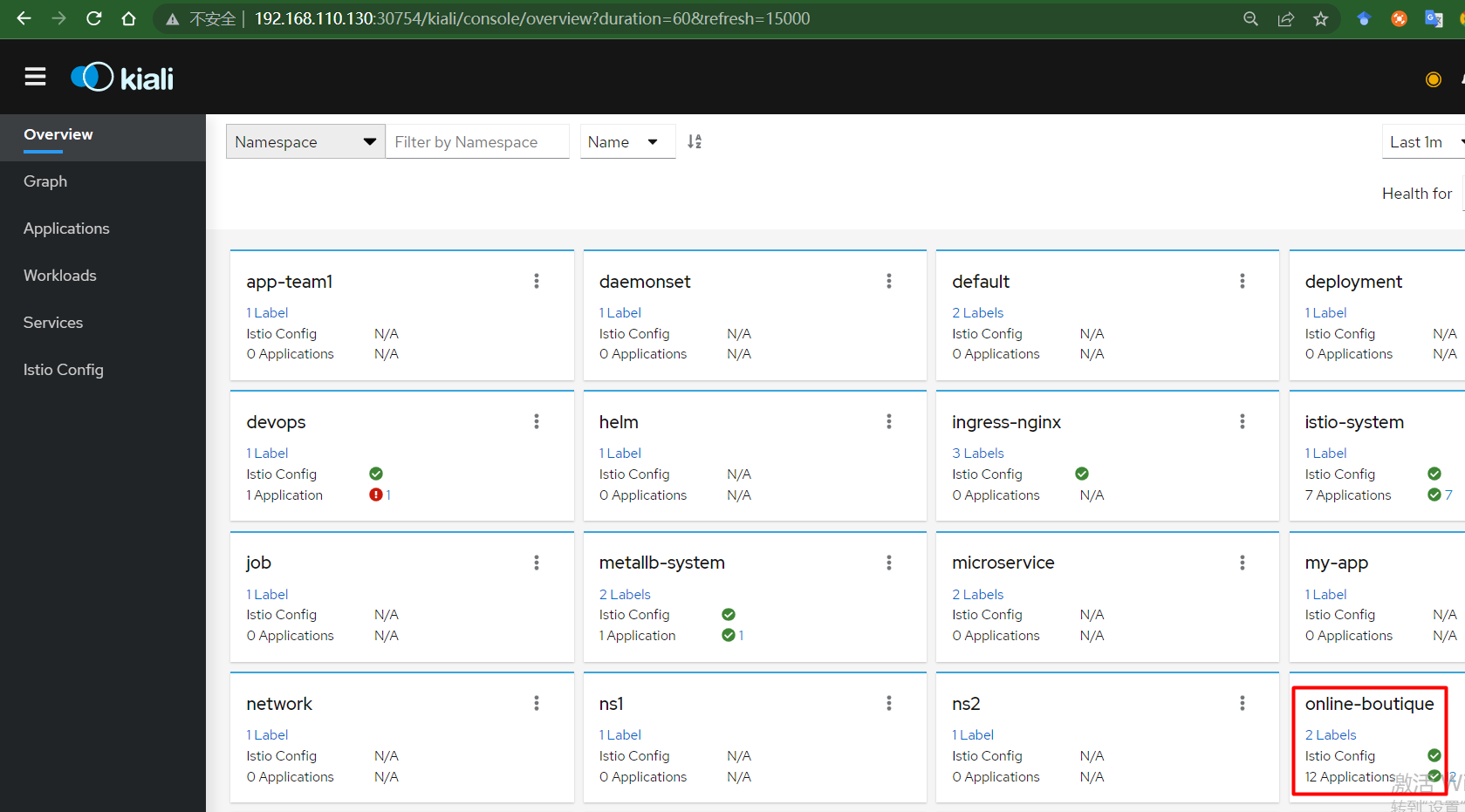

k8scloude1's address is 192.168.110.130, so we can open http://192.168.110.130:30754 in a browser to enter Kiali. The Kiali home page looks like this:

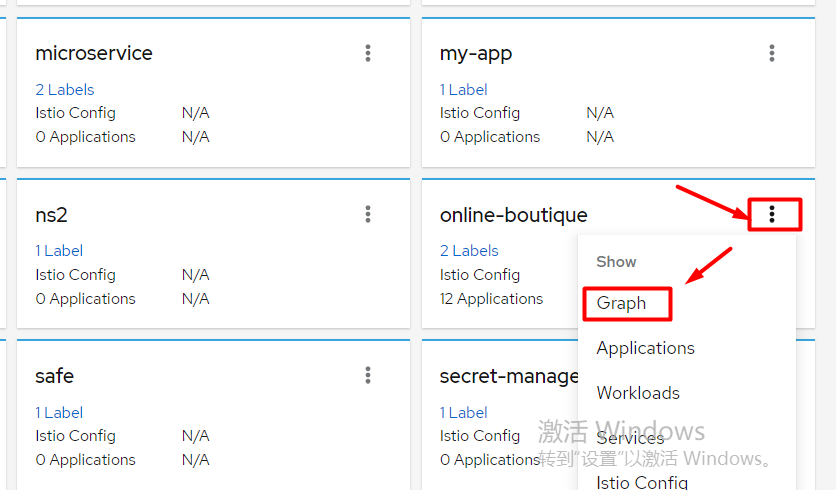

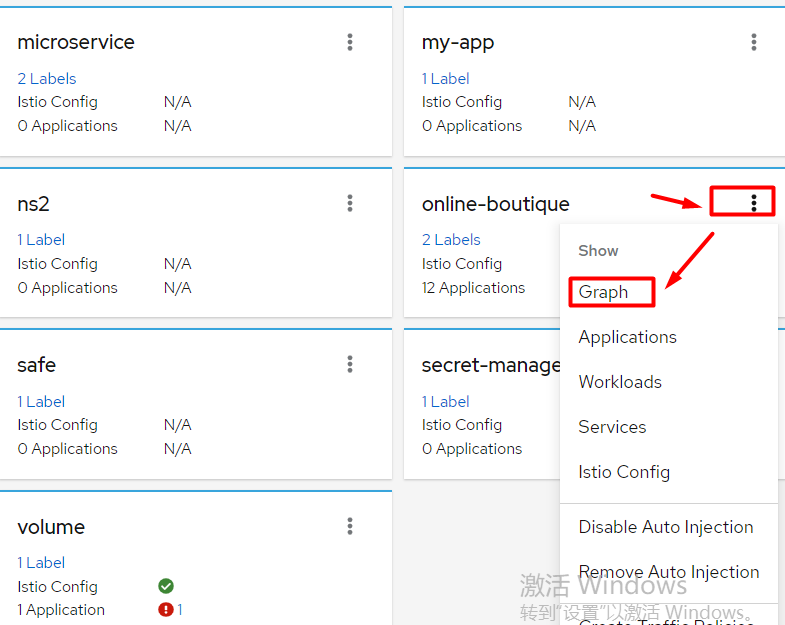

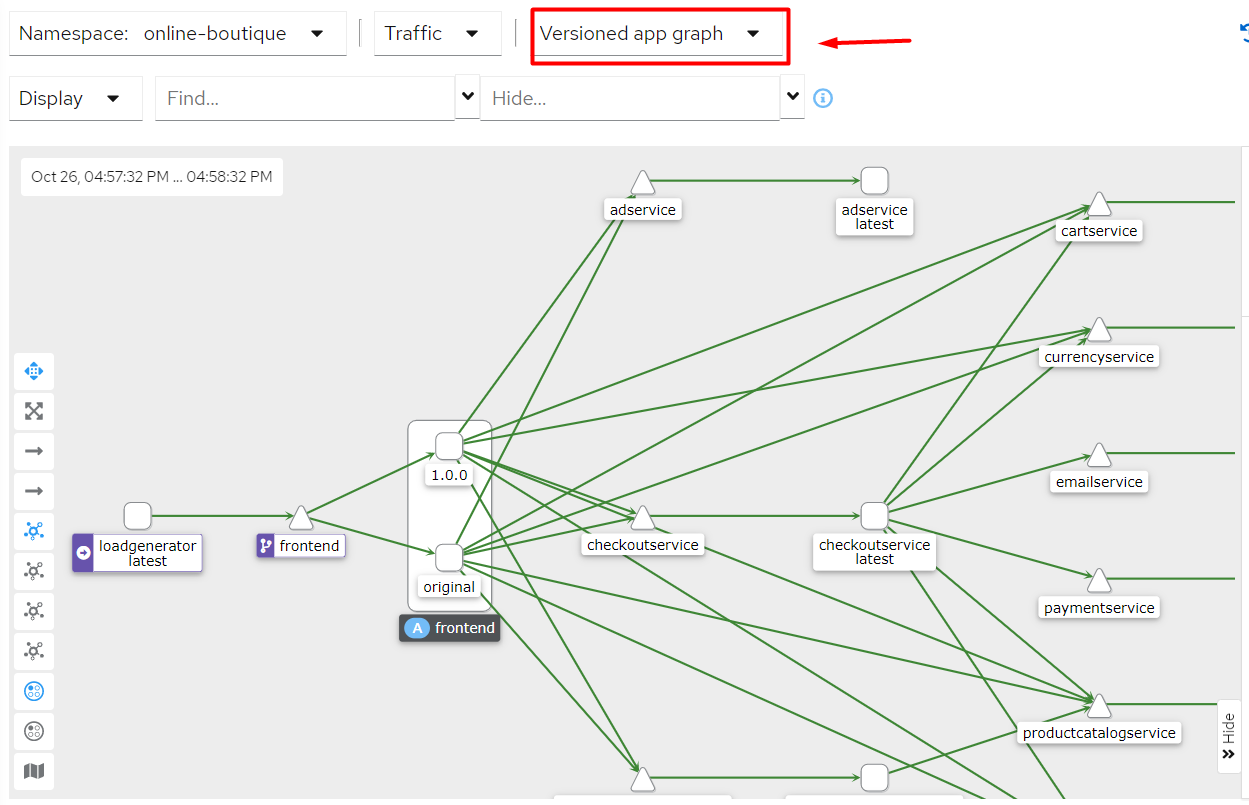

In the online-boutique namespace, click Graph to view the service topology.

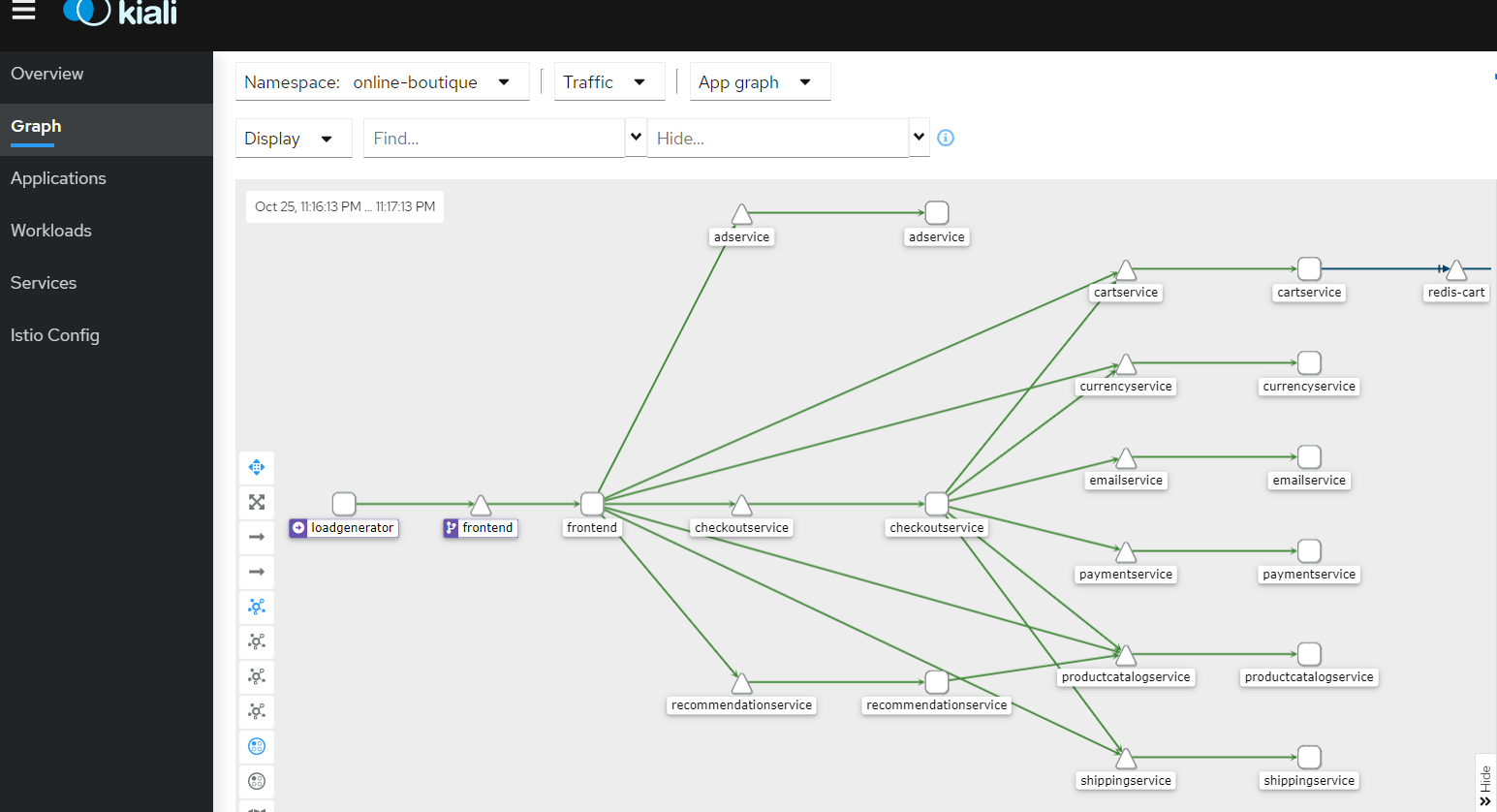

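Here is what the Boutique graph looks like in Kiali:

The graph shows the service topology and visualizes how the services communicate. It also shows inbound and outbound metrics, as well as traces when Jaeger and Grafana (if installed) are connected. The colors in the graph represent the health of the service mesh: red or orange nodes may need attention. The color of an edge between components represents the health of the requests between them. The node shape indicates the component type, such as service, workload, or app.

7. Traffic Routing

7.1 Traffic Routing

We have built a new Docker image that uses a different header from the currently running frontend service. Let's see how to deploy the required resources and route a percentage of the traffic to the different frontend version.

Before creating any resources, let's delete the existing frontend deployment (kubectl delete deploy frontend).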

[root@k8scloude1 ~]# kubectl get deploy | grep frontend

frontend 1/1 1 1 4d21h

[root@k8scloude1 ~]# kubectl delete deploy frontend

deployment.apps "frontend" deleted

[root@k8scloude1 ~]# kubectl get deploy | grep frontend

Recreate the frontend Deployment. It keeps the name frontend, but now carries a version label set to original. The YAML file is as follows:

[root@k8scloude1 ~]# vim frontend-original.yaml

[root@k8scloude1 ~]# cat frontend-original.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  selector:
    matchLabels:
      app: frontend
      version: original
  template:
    metadata:
      labels:
        app: frontend
        version: original
      annotations:
        sidecar.istio.io/rewriteAppHTTPProbers: "true"
    spec:
      containers:
        - name: server
          image: gcr.lank8s.cn/google-samples/microservices-demo/frontend:v0.2.1
          ports:
          - containerPort: 8080
          readinessProbe:
            initialDelaySeconds: 10
            httpGet:
              path: "/_healthz"
              port: 8080
              httpHeaders:
              - name: "Cookie"
                value: "shop_session-id=x-readiness-probe"
          livenessProbe:
            initialDelaySeconds: 10
            httpGet:
              path: "/_healthz"
              port: 8080
              httpHeaders:
              - name: "Cookie"
                value: "shop_session-id=x-liveness-probe"
          env:
          - name: PORT
            value: "8080"
          - name: PRODUCT_CATALOG_SERVICE_ADDR
            value: "productcatalogservice:3550"
          - name: CURRENCY_SERVICE_ADDR
            value: "currencyservice:7000"
          - name: CART_SERVICE_ADDR
            value: "cartservice:7070"
          - name: RECOMMENDATION_SERVICE_ADDR
            value: "recommendationservice:8080"
          - name: SHIPPING_SERVICE_ADDR
            value: "shippingservice:50051"
          - name: CHECKOUT_SERVICE_ADDR
            value: "checkoutservice:5050"
          - name: AD_SERVICE_ADDR
            value: "adservice:9555"
          - name: ENV_PLATFORM
            value: "gcp"
          resources:
            requests:
              cpu: 100m
              memory: 64Mi
            limits:
              cpu: 200m
              memory: 128Mi

Create the Deployment:

[root@k8scloude1 ~]# kubectl apply -f frontend-original.yaml

deployment.apps/frontend created

# The Deployment was created successfully

[root@k8scloude1 ~]# kubectl get deploy | grep frontend

frontend 1/1 1 1 43s

# The Pod is running normally as well

[root@k8scloude1 ~]# kubectl get pod | grep frontend

frontend-ff47c5568-qnzpt 2/2 Running 0 105s

Now we are ready to create a DestinationRule that defines two versions of the frontend: the existing one (original) and the new one (v1).

[root@k8scloude1 ~]# vim frontend-dr.yaml

[root@k8scloude1 ~]# cat frontend-dr.yaml

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: frontend
spec:
  host: frontend.online-boutique.svc.cluster.local
  subsets:
    - name: original
      labels:
        version: original
    - name: v1
      labels:
        version: 1.0.0

Create the DestinationRule:

[root@k8scloude1 ~]# kubectl apply -f frontend-dr.yaml

destinationrule.networking.istio.io/frontend created

[root@k8scloude1 ~]# kubectl get destinationrule

NAME HOST AGE

frontend frontend.online-boutique.svc.cluster.local 12s

Next, we update the VirtualService and specify the subset that all traffic should be routed to. In this case, we route all traffic to the original version of the frontend.

[root@k8scloude1 ~]# vim frontend-vs.yaml

[root@k8scloude1 ~]# cat frontend-vs.yaml

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: frontend-ingress
spec:
  hosts:
    - '*'
  gateways:
    - frontend-gateway
  http:
  - route:
    - destination:
        host: frontend.online-boutique.svc.cluster.local
        port:
          number: 80
        subset: original

Update the VirtualService resource:

[root@k8scloude1 ~]# kubectl apply -f frontend-vs.yaml

virtualservice.networking.istio.io/frontend-ingress created

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.default.svc.cluster.local"] 5d14h

frontend-ingress ["frontend-gateway"] ["*"] 14s

# Change the hosts of the frontend VirtualService to frontend.online-boutique.svc.cluster.local

[root@k8scloude1 ~]# kubectl edit virtualservice frontend

virtualservice.networking.istio.io/frontend edited

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 5d14h

frontend-ingress ["frontend-gateway"] ["*"] 3m24s

Now that the VirtualService is configured to route all incoming traffic to the original subset, we can safely create the new frontend deployment.

[root@k8scloude1 ~]# vim frontend-v1.yaml

[root@k8scloude1 ~]# cat frontend-v1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend-v1
spec:
  selector:
    matchLabels:
      app: frontend
      version: 1.0.0
  template:
    metadata:
      labels:
        app: frontend
        version: 1.0.0
      annotations:
        sidecar.istio.io/rewriteAppHTTPProbers: "true"
    spec:
      containers:
        - name: server
          image: gcr.lank8s.cn/tetratelabs/boutique-frontend:1.0.0
          ports:
          - containerPort: 8080
          readinessProbe:
            initialDelaySeconds: 10
            httpGet:
              path: "/_healthz"
              port: 8080
              httpHeaders:
              - name: "Cookie"
                value: "shop_session-id=x-readiness-probe"
          livenessProbe:
            initialDelaySeconds: 10
            httpGet:
              path: "/_healthz"
              port: 8080
              httpHeaders:
              - name: "Cookie"
                value: "shop_session-id=x-liveness-probe"
          env:
          - name: PORT
            value: "8080"
          - name: PRODUCT_CATALOG_SERVICE_ADDR
            value: "productcatalogservice:3550"
          - name: CURRENCY_SERVICE_ADDR
            value: "currencyservice:7000"
          - name: CART_SERVICE_ADDR
            value: "cartservice:7070"
          - name: RECOMMENDATION_SERVICE_ADDR
            value: "recommendationservice:8080"
          - name: SHIPPING_SERVICE_ADDR
            value: "shippingservice:50051"
          - name: CHECKOUT_SERVICE_ADDR
            value: "checkoutservice:5050"
          - name: AD_SERVICE_ADDR
            value: "adservice:9555"
          - name: ENV_PLATFORM
            value: "gcp"
          resources:
            requests:
              cpu: 100m
              memory: 64Mi
            limits:
              cpu: 200m
              memory: 128Mi

Create the frontend-v1 Deployment:

[root@k8scloude1 ~]# kubectl apply -f frontend-v1.yaml

deployment.apps/frontend-v1 created

# The Deployment is running normally

[root@k8scloude1 ~]# kubectl get deploy | grep frontend-v1

frontend-v1 1/1 1 1 54s

# The Pod is running normally

[root@k8scloude1 ~]# kubectl get pod | grep frontend-v1

frontend-v1-6457cb648d-fgmkk 2/2 Running 0 70s

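If we open INGRESS_HOST in a browser, we still see the original version of the frontend. Opening http://192.168.110.190/ in a browser shows this frontend:

Let's update the weights in the VirtualService and start routing 30% of the traffic to the v1 subset.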

[root@k8scloude1 ~]# vim frontend-30.yaml

[root@k8scloude1 ~]# cat frontend-30.yaml

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: frontend-ingress
spec:
  hosts:
    - '*'
  gateways:
    - frontend-gateway
  http:
  - route:
    - destination:
        host: frontend.online-boutique.svc.cluster.local
        port:
          number: 80
        subset: original
      weight: 70
    - destination:
        host: frontend.online-boutique.svc.cluster.local
        port:
          number: 80
        subset: v1
      weight: 30

Update the VirtualService:

[root@k8scloude1 ~]# kubectl apply -f frontend-30.yaml

virtualservice.networking.istio.io/frontend-ingress configured

[root@k8scloude1 ~]# kubectl get virtualservices

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 5d14h

frontend-ingress ["frontend-gateway"] ["*"] 20m
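
To make the 70/30 split concrete, here is a toy model of weighted routing. It is not how Envoy is implemented; it only illustrates that, over many requests, roughly 70 out of every 100 land on the original subset and 30 on v1. The function name `pick_subset` and the idea of feeding it a number from 0 to 99 in place of a per-request random draw are assumptions made for this sketch.

```shell
# Hypothetical model: map a draw in 0..99 to a subset using the weights 70 and 30.
pick_subset() {
  if [ "$1" -lt 70 ]; then echo original; else echo v1; fi
}

# Walk through every possible draw once and tally the results.
count_original=0
count_v1=0
roll=0
while [ "$roll" -lt 100 ]; do
  case "$(pick_subset "$roll")" in
    original) count_original=$((count_original + 1)) ;;
    v1)       count_v1=$((count_v1 + 1)) ;;
  esac
  roll=$((roll + 1))
done
echo "original=$count_original v1=$count_v1"   # prints original=70 v1=30
```

In the real mesh the choice is made independently per request, so a handful of browser refreshes will not show an exact 70/30 pattern; the ratio only emerges over a larger number of requests.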

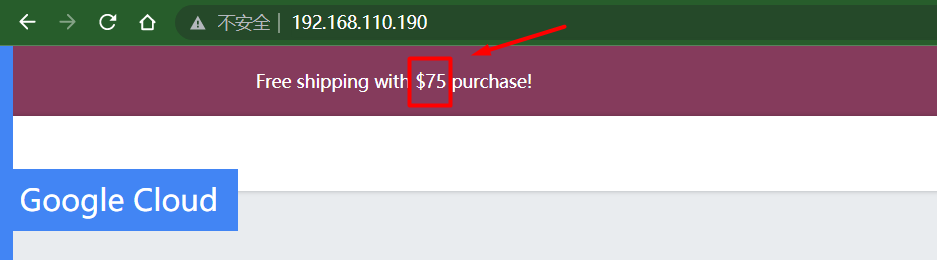

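Visit http://192.168.110.190/ in a browser to view the frontend. If we refresh the page a few times, we notice the updated header from frontend v1. The header usually shows $75, as below: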

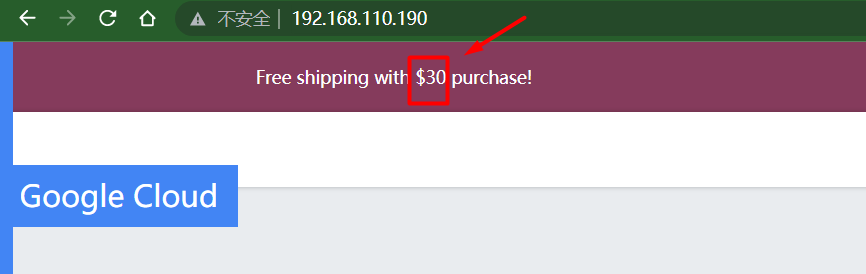

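After a few more refreshes, the page shows $30, as below:

We can open http://192.168.110.130:30754 in a browser to enter Kiali and view the service topology: select the online-boutique namespace and open Graph.

The service topology is shown below; notice that two versions of the frontend are running:

8. Fault Injection

8.1 Fault Injection

We will introduce a 5-second delay for the recommendation service. Envoy will inject the delay into 50% of the requests.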

[root@k8scloude1 ~]# vim recommendation-delay.yaml

[root@k8scloude1 ~]# cat recommendation-delay.yaml

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: recommendationservice
spec:
  hosts:
  - recommendationservice.online-boutique.svc.cluster.local
  http:
  - route:
      - destination:
          host: recommendationservice.online-boutique.svc.cluster.local
    fault:
      delay:
        percentage:
          value: 50
        fixedDelay: 5s

Save the YAML above as recommendation-delay.yaml, then create the VirtualService with kubectl apply -f recommendation-delay.yaml.

[root@k8scloude1 ~]# kubectl apply -f recommendation-delay.yaml

virtualservice.networking.istio.io/recommendationservice created

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 6d13h

frontend-ingress ["frontend-gateway"] ["*"] 23h

recommendationservice ["recommendationservice.online-boutique.svc.cluster.local"] 7s
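
As a quick back-of-the-envelope check on what this fault rule does to latency: if 50% of requests receive a fixed 5-second delay and the rest receive none, the average added delay per request is 2.5 seconds. The helper below, `mean_injected_delay_ms`, is a hypothetical name used only for this arithmetic sketch.

```shell
# Hypothetical helper: average injected delay per request, in milliseconds.
# $1: percentage of requests delayed (0..100), $2: fixed delay in milliseconds
mean_injected_delay_ms() {
  echo $(( $1 * $2 / 100 ))
}

mean_injected_delay_ms 50 5000   # prints 2500
```

The average hides the actual shape of the traffic: individual requests are either undelayed or delayed by the full 5 seconds, which is why the product page loads either immediately or after a noticeable pause.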

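We can open INGRESS_HOST http://192.168.110.190/ in a browser and click one of the products. The recommendation service's results are displayed at the bottom of the page in the "Other Products You Might Like" section. If we refresh the page a few times, we notice that it either loads immediately or loads after a delay. That delay comes from the 5-second delay we injected.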

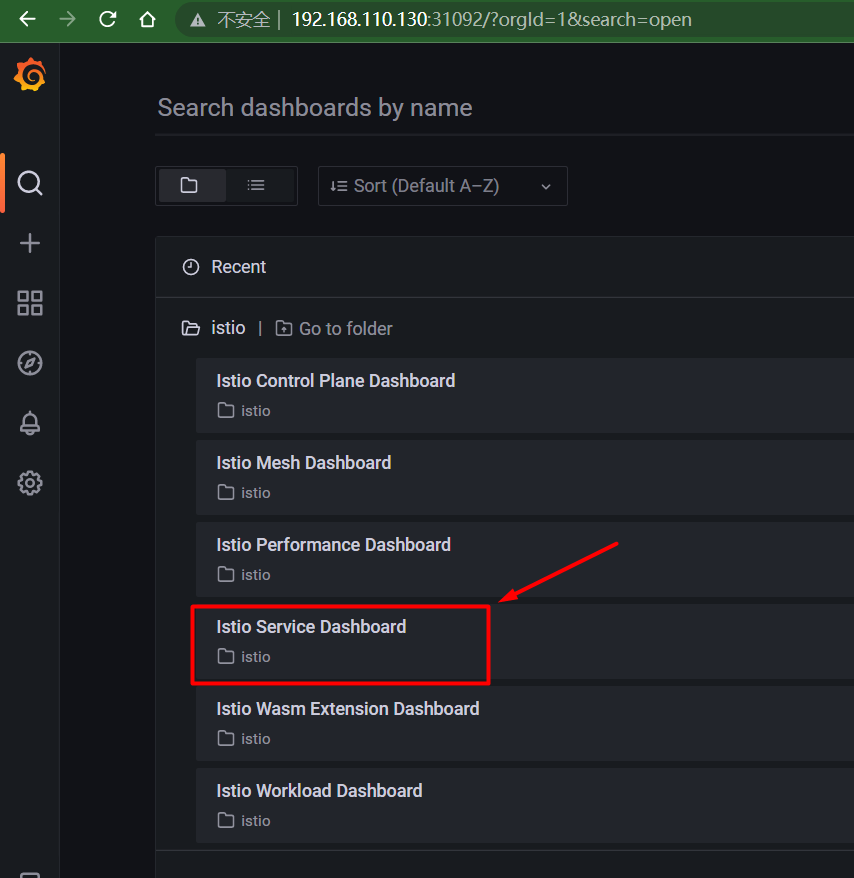

We can open Grafana (getmesh istioctl dash grafana) and the Istio Service Dashboard, or open the Grafana UI as follows:

# Look up Grafana's port

[root@k8scloude1 ~]# kubectl get svc -n istio-system | grep grafana

grafana NodePort 10.100.151.232 <none> 3000:31092/TCP 24d

Open http://192.168.110.130:31092/ to reach the Grafana UI. Click istio-service-dashboard to open the Istio Service Dashboard.

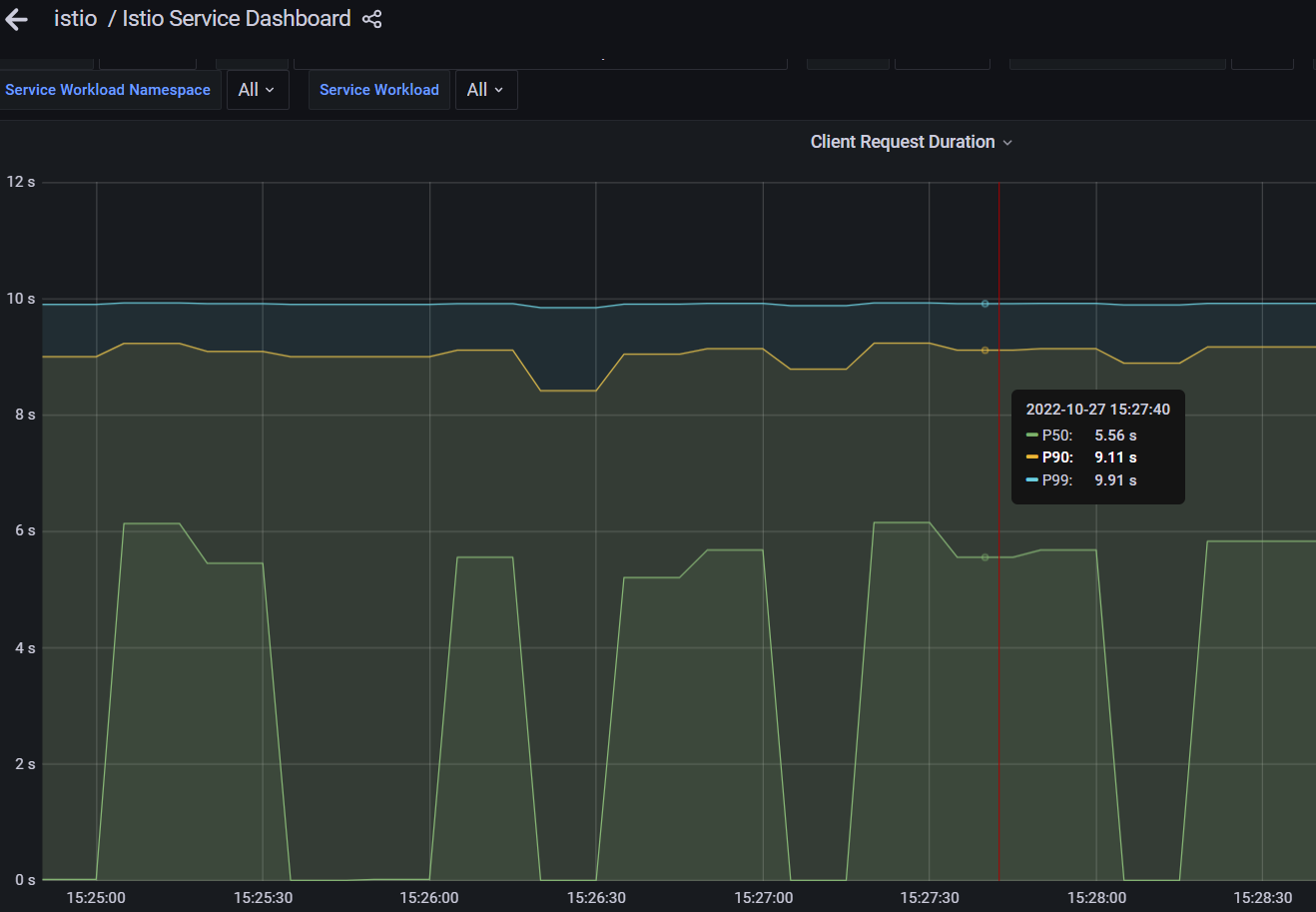

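Make sure to select recommendationservice from the service list and source from the Reporter drop-down, then look at Client Request Duration, which shows the delay, as in the figure:

Click View to enlarge the Client Request Duration chart.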

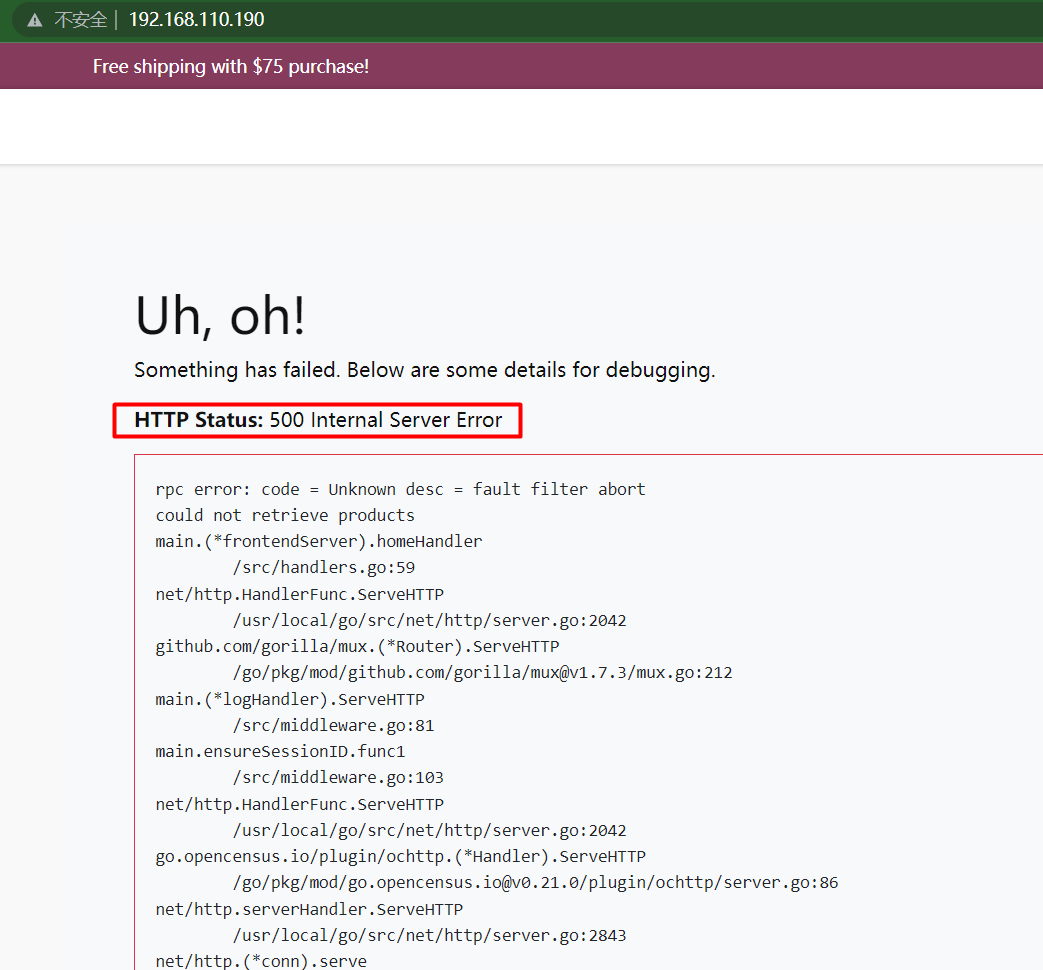

Similarly, we can inject an abort. In the example below, we inject an HTTP 500 for 50% of the requests sent to the product catalog service.

[root@k8scloude1 ~]# vim productcatalogservice-abort.yaml

[root@k8scloude1 ~]# cat productcatalogservice-abort.yaml

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: productcatalogservice
spec:
  hosts:
  - productcatalogservice.online-boutique.svc.cluster.local
  http:
  - route:
      - destination:
          host: productcatalogservice.online-boutique.svc.cluster.local
    fault:
      abort:
        percentage:
          value: 50
        httpStatus: 500

Create the VirtualService:

[root@k8scloude1 ~]# kubectl apply -f productcatalogservice-abort.yaml

virtualservice.networking.istio.io/productcatalogservice created

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 6d13h

frontend-ingress ["frontend-gateway"] ["*"] 23h

productcatalogservice ["productcatalogservice.online-boutique.svc.cluster.local"] 8s

recommendationservice ["recommendationservice.online-boutique.svc.cluster.local"] 36m

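If we refresh the product page a few times, we should get an error message like the one in the figure.

Note that the error message says the failure was caused by a fault filter abort. If we open Grafana (getmesh istioctl dash grafana), we also notice the errors reported in the graphs.

Delete the productcatalogservice VirtualService: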

[root@k8scloude1 ~]# kubectl delete virtualservice productcatalogservice

virtualservice.networking.istio.io "productcatalogservice" deleted

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 6d14h

frontend-ingress ["frontend-gateway"] ["*"] 23h

recommendationservice ["recommendationservice.online-boutique.svc.cluster.local"] 44m

9. Resiliency

9.1 Resiliency

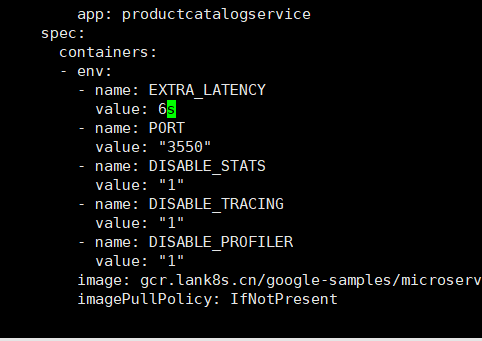

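To demonstrate the resiliency features, we add an environment variable named EXTRA_LATENCY to the product catalog service Deployment. This variable injects an extra sleep on every call to the service.

Edit the product catalog service Deployment by running kubectl edit deploy productcatalogservice.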

[root@k8scloude1 ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

adservice 1/1 1 1 6d14h

cartservice 1/1 1 1 6d14h

checkoutservice 1/1 1 1 6d14h

currencyservice 1/1 1 1 6d14h

emailservice 1/1 1 1 6d14h

frontend 1/1 1 1 24h

frontend-v1 1/1 1 1 28h

loadgenerator 1/1 1 1 6d14h

paymentservice 1/1 1 1 6d14h

productcatalogservice 1/1 1 1 6d14h

recommendationservice 1/1 1 1 6d14h

redis-cart 1/1 1 1 6d14h

shippingservice 1/1 1 1 6d14h

[root@k8scloude1 ~]# kubectl edit deploy productcatalogservice

deployment.apps/productcatalogservice edited

This opens an editor. Scroll to the section with the environment variables and add the EXTRA_LATENCY environment variable.

...
    spec:
      containers:
      - env:
        - name: EXTRA_LATENCY
          value: 6s
...

Save and exit the editor.
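
The same change can be made without opening an editor by using `kubectl set env`, which also makes it easy to remove the variable again once the experiment is over. The sketch below assumes the current namespace is online-boutique, as set earlier with kubens. The helper name `set_extra_latency` and the `KUBECTL` preview variable are illustrative assumptions.

```shell
# KUBECTL defaults to the real binary; set KUBECTL=echo to preview the command instead.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: set or remove EXTRA_LATENCY on the productcatalogservice Deployment.
# $1: latency value such as 6s; pass an empty string to remove the variable.
set_extra_latency() {
  if [ -n "$1" ]; then
    "$KUBECTL" set env deployment/productcatalogservice "EXTRA_LATENCY=$1"
  else
    # A trailing dash tells kubectl set env to unset the variable.
    "$KUBECTL" set env deployment/productcatalogservice EXTRA_LATENCY-
  fi
}

# Example calls (not executed here):
#   set_extra_latency 6s    # add the 6-second sleep
#   set_extra_latency ""    # remove it again
```

Either way, changing the Pod template triggers a rollout, so the new latency only takes effect once the replacement Pod is running.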

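If we refresh http://192.168.110.190/, we find that the page takes 6 seconds to load (because of the latency we injected).

Let's add a 2-second timeout for the product catalog service.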

[root@k8scloude1 ~]# vim productcatalogservice-timeout.yaml

[root@k8scloude1 ~]# cat productcatalogservice-timeout.yaml

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: productcatalogservice
spec:
  hosts:
  - productcatalogservice.online-boutique.svc.cluster.local
  http:
  - route:
    - destination:
        host: productcatalogservice.online-boutique.svc.cluster.local
    timeout: 2s

Create the VirtualService:

[root@k8scloude1 ~]# kubectl apply -f productcatalogservice-timeout.yaml

virtualservice.networking.istio.io/productcatalogservice created

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 6d14h

frontend-ingress ["frontend-gateway"] ["*"] 24h

productcatalogservice ["productcatalogservice.online-boutique.svc.cluster.local"] 10s

recommendationservice ["recommendationservice.online-boutique.svc.cluster.local"] 76m

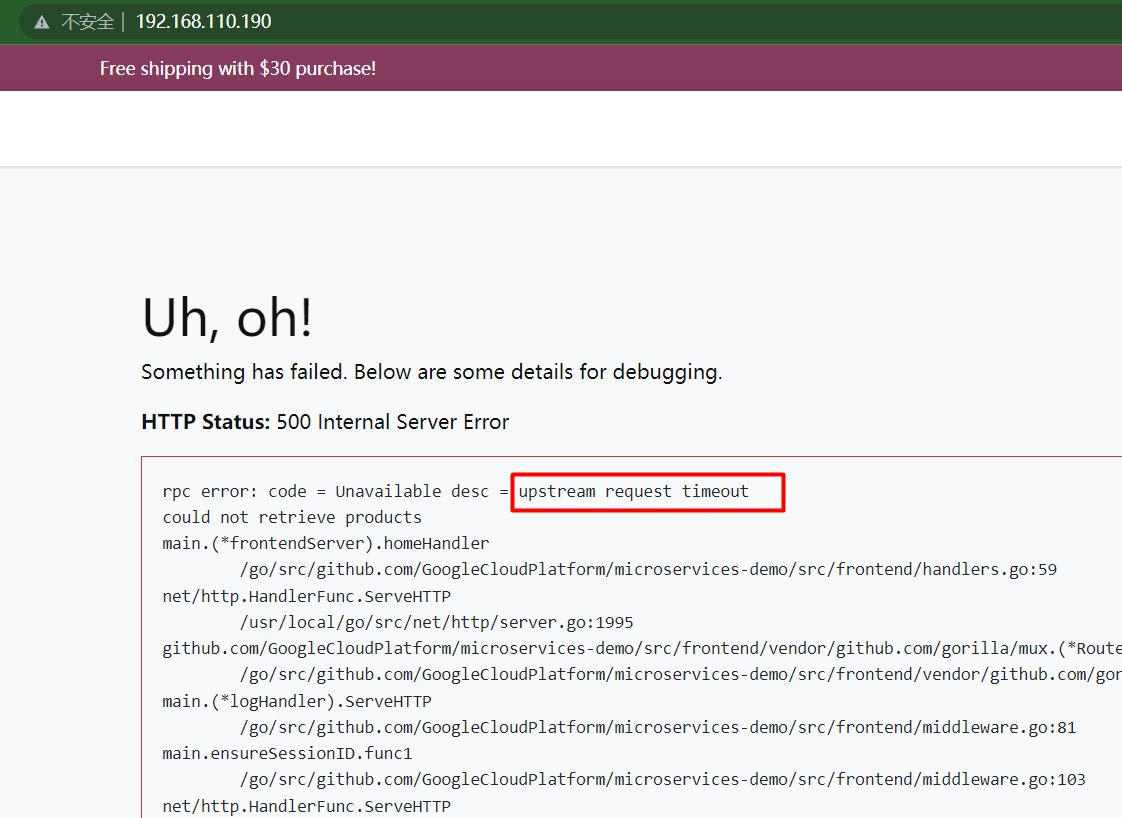

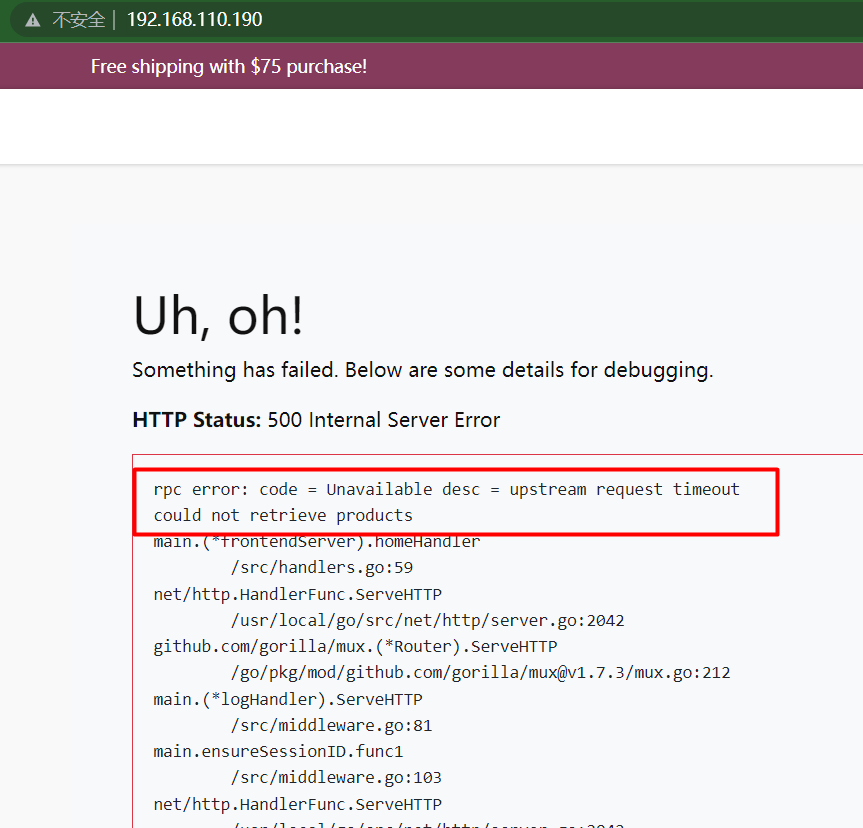

If we refresh http://192.168.110.190/, we notice an error message:

rpc error: code = Unavailable desc = upstream request timeout

could not retrieve products

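The error indicates that the request to the product catalog service timed out. The reason is that we modified the service to add 6 seconds of latency and set the timeout to 2 seconds.

Let's define a retry policy with three attempts and a per-try timeout of 1 second.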

[root@k8scloude1 ~]# vim productcatalogservice-retry.yaml

[root@k8scloude1 ~]# cat productcatalogservice-retry.yaml

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: productcatalogservice
spec:
  hosts:
  - productcatalogservice.online-boutique.svc.cluster.local
  http:
  - route:
    - destination:
        host: productcatalogservice.online-boutique.svc.cluster.local
    retries:
      attempts: 3
      perTryTimeout: 1s

[root@k8scloude1 ~]# kubectl apply -f productcatalogservice-retry.yaml

virtualservice.networking.istio.io/productcatalogservice configured

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 6d14h

frontend-ingress ["frontend-gateway"] ["*"] 24h

productcatalogservice ["productcatalogservice.online-boutique.svc.cluster.local"] 10m

recommendationservice ["recommendationservice.online-boutique.svc.cluster.local"] 86m

Because we left the extra latency in the product catalog service Deployment, we still see errors.
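
Why retries cannot rescue this request can be seen with a little arithmetic. The sketch below is a simplified model, not Envoy's actual algorithm: it assumes every try takes the same time, ignores the back-off interval between retries and any overall route timeout, and follows Istio's VirtualService reference in treating `attempts` as the number of retries made on top of the initial request. The helper name `retry_outcome` is hypothetical.

```shell
# Hypothetical model of a retry policy.
# $1: service latency in ms, $2: perTryTimeout in ms, $3: number of retries (attempts)
retry_outcome() {
  latency_ms=$1
  per_try_ms=$2
  retries=$3
  if [ "$latency_ms" -le "$per_try_ms" ]; then
    # The first try completes within the per-try timeout.
    echo "ok after ${latency_ms}ms"
  else
    # Every try hits the per-try timeout: one initial request plus the retries.
    echo "failed after $(( (retries + 1) * per_try_ms ))ms"
  fi
}

retry_outcome 6000 1000 3   # prints: failed after 4000ms
retry_outcome 500 1000 3    # prints: ok after 500ms
```

With a constant 6-second sleep in the service, each try is cut off at 1 second, so retrying only repeats the same timeout. Retries help with intermittent failures, not with a backend that is uniformly slower than the per-try timeout.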

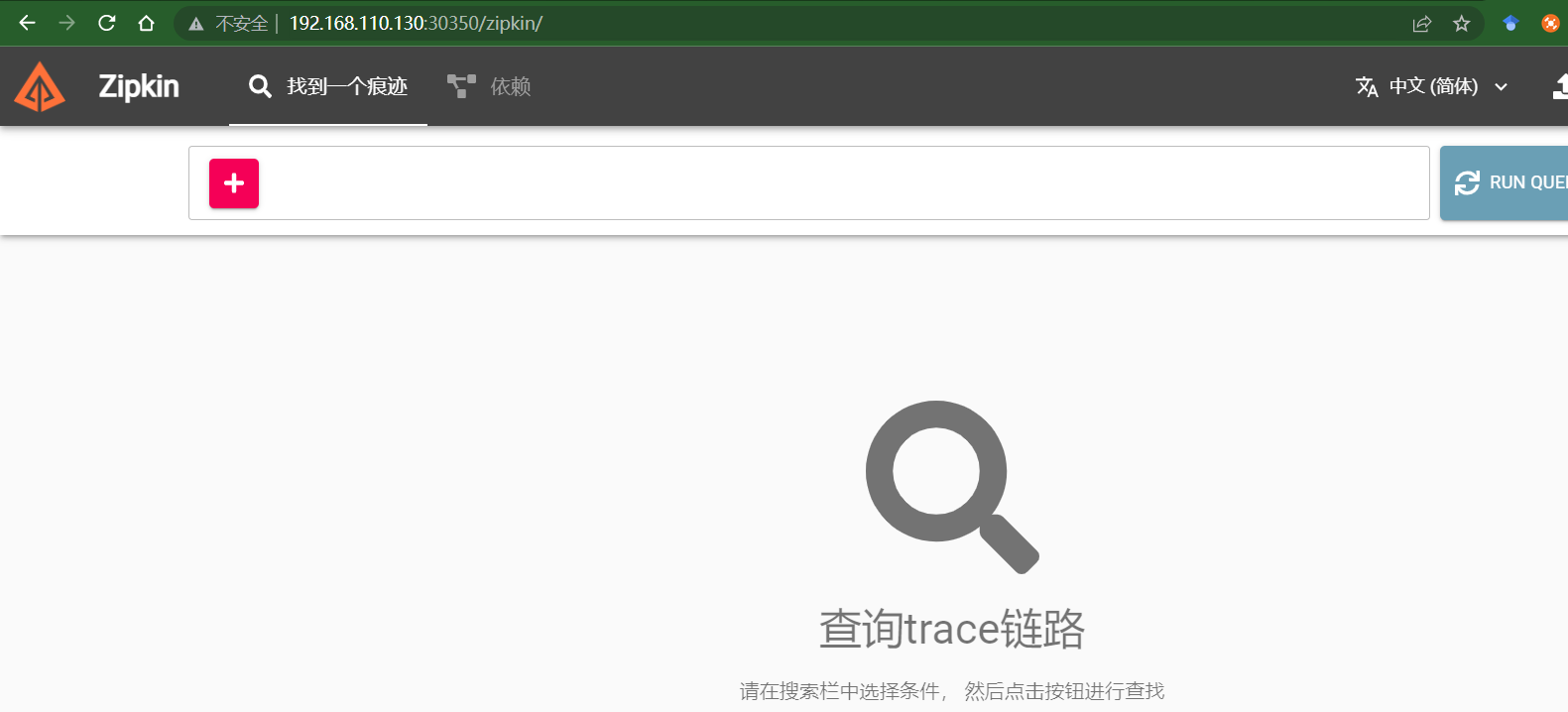

Let's open the traces in Zipkin to see the retry policy in action. Use getmesh istioctl dash zipkin to open the Zipkin dashboard, or open the Zipkin UI as follows:

# Zipkin's port is 30350

[root@k8scloude1 ~]# kubectl get svc -n istio-system | grep zipkin

zipkin NodePort 10.104.85.78 <none> 9411:30350/TCP 23d

Enter http://192.168.110.130:30350/ in a browser to open the Zipkin UI.

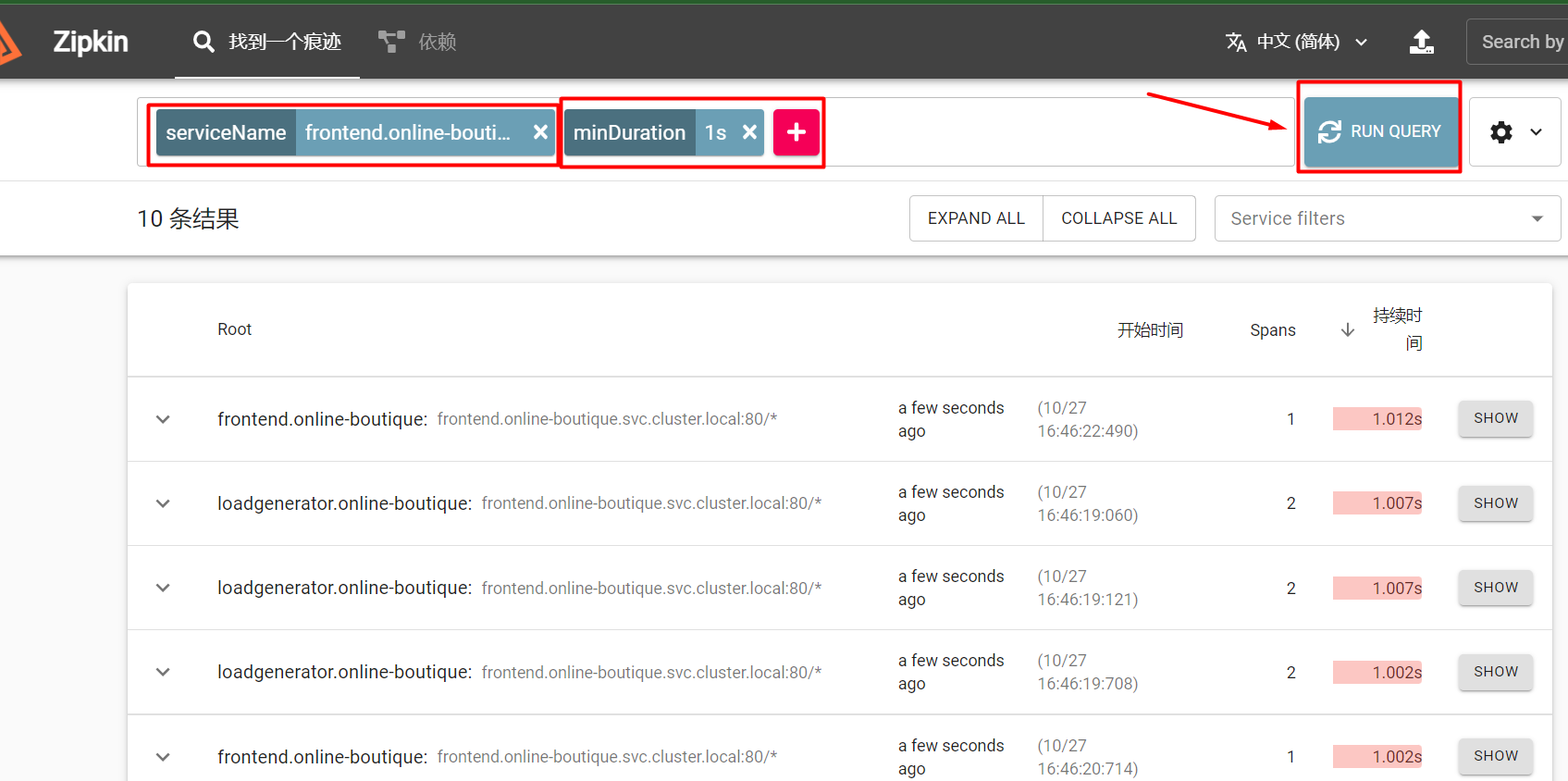

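Click the + button and select serviceName and frontend.online-boutique. To get only responses that took at least one second (that is our perTryTimeout), select minDuration and enter 1s in the text box. Click the RUN QUERY button to show all matching traces.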

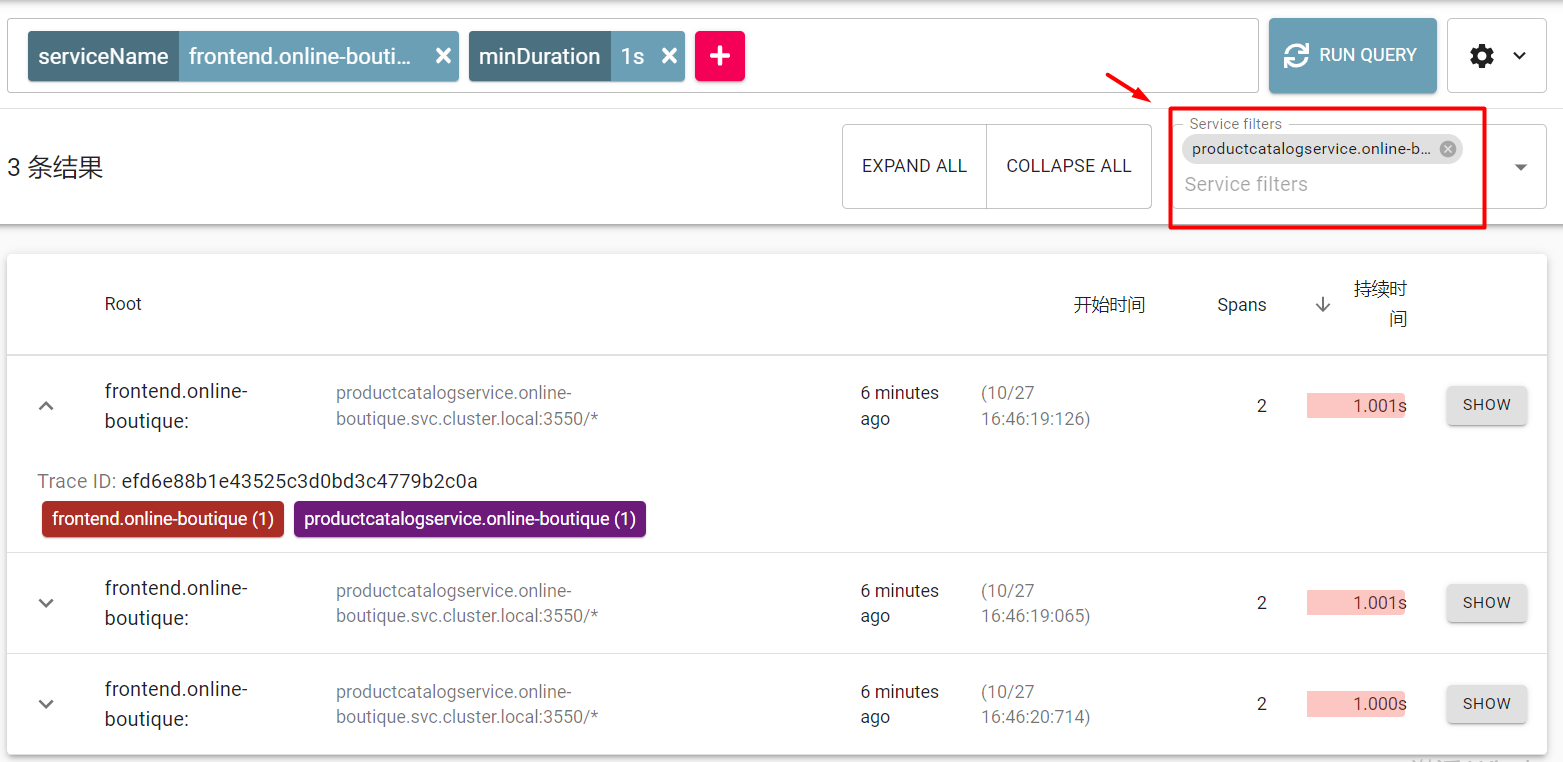

Click the Filter button and select productCatalogService.online-boutique from the drop-down. You should see traces that took 1 second. These traces correspond to the perTryTimeout we defined earlier.

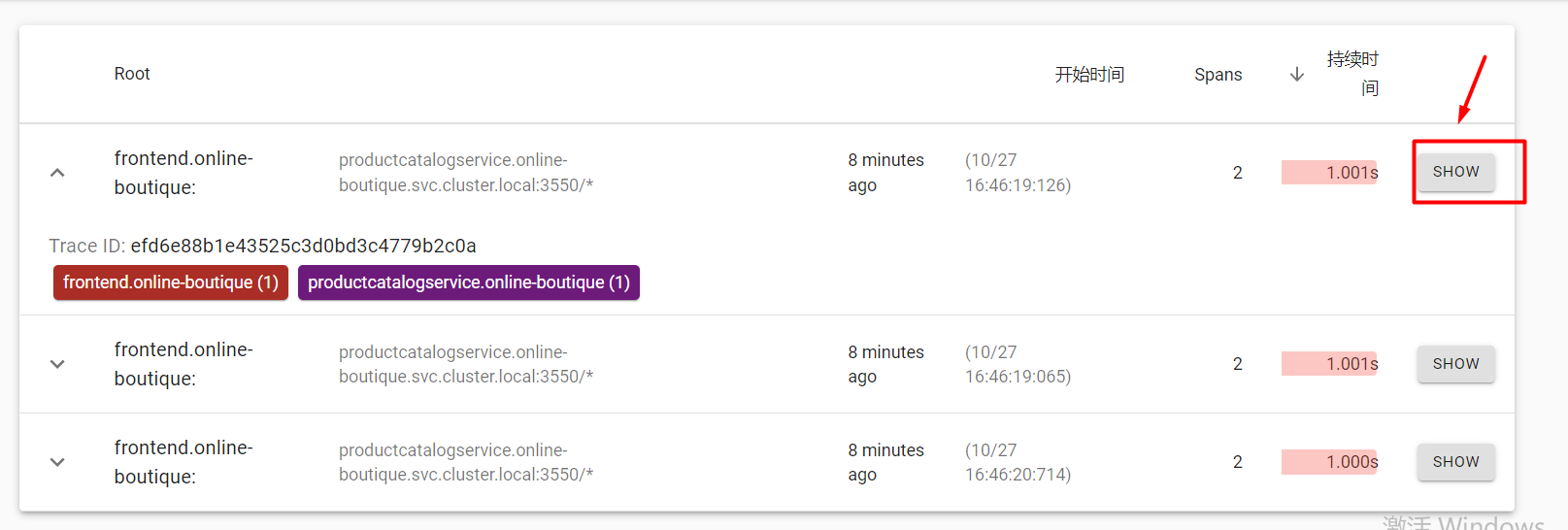

Click SHOW.

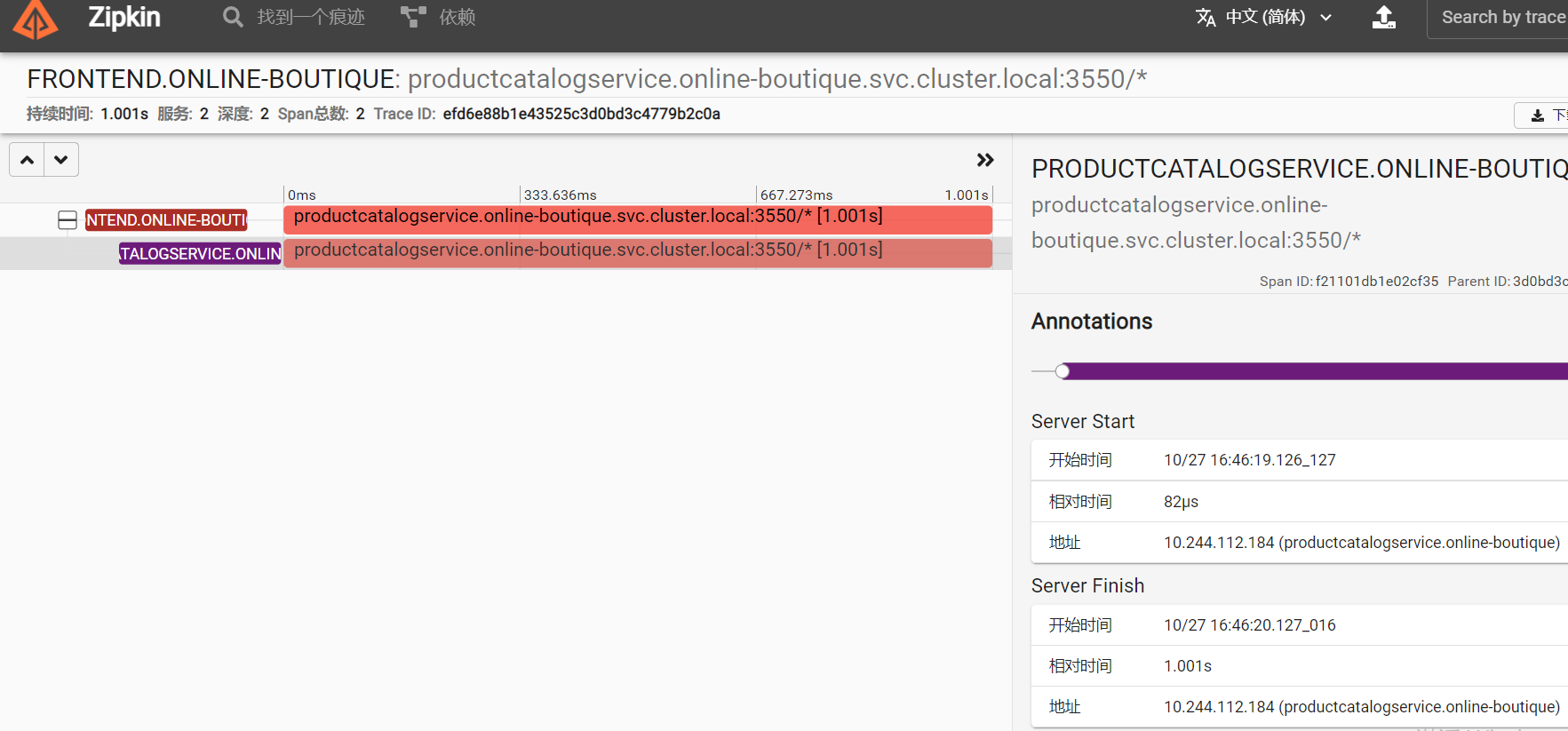

The details are as follows:

Run kubectl delete vs productcatalogservice to delete the VirtualService.

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 6d15h

frontend-ingress ["frontend-gateway"] ["*"] 24h

productcatalogservice ["productcatalogservice.online-boutique.svc.cluster.local"] 37m

recommendationservice ["recommendationservice.online-boutique.svc.cluster.local"] 113m

[root@k8scloude1 ~]# kubectl delete virtualservice productcatalogservice

virtualservice.networking.istio.io "productcatalogservice" deleted

[root@k8scloude1 ~]# kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

frontend ["frontend.online-boutique.svc.cluster.local"] 6d15h

frontend-ingress ["frontend-gateway"] ["*"] 24h

recommendationservice ["recommendationservice.online-boutique.svc.cluster.local"] 114m