MongoDB sharding

Overview

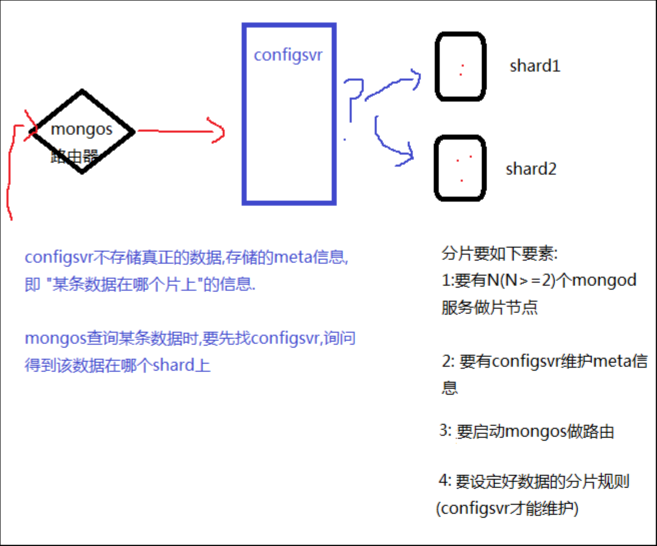

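1. On 3 separate servers, run mongod instances on ports 27017, 27018 and 27019, and make them replicas of one another so that they form 3 replica sets.
2. On each of the 3 servers, also run a config server on port 27020.
3. Start mongos: `./bin/mongos --port 30000 --configdb 192.168.1.201:27020,192.168.1.202:27020,192.168.1.203:27020`
4. Connect to the router: `./bin/mongo --port 30000`
5. Add the replica sets as shards: `sh.addShard('192.168.1.201:27017');`, `sh.addShard('192.168.1.202:27017');`, `sh.addShard('192.168.1.203:27017');`. When the shard is a replica set, the `setname/server:port` form listed by `sh.help()` is also accepted.
6. Enable sharding on the database: `sh.enableSharding(databaseName);`
7. Shard the collection: `sh.shardCollection('dbName.collectionName',{field:1});`

Here `field` is a field of the collection. The system uses its value to decide which shard a document belongs on. This field is called the shard key.

MongoDB does not scatter documents across shards one by one in a perfectly even way. Instead, a group of documents forms a chunk, and chunks are first placed on one shard. When the chunk count on one shard differs enough from another shard's (for example by 3 or more; the exact threshold depends on the MongoDB version and the total number of chunks), chunks are moved from the fuller shard to the other one. Balance between shards is maintained in units of chunks.

Q: Why are there only 2 chunks after inserting 100,000 documents?
A: Because chunks are large (64 MB by default). The size can be changed through the `chunksize` value in the config database.

Q: Data goes to one shard first, and chunks are moved only once the distribution becomes unbalanced. As the data grows, chunks will therefore keep moving back and forth between the shard instances. What problem does that cause?
A: More I/O between the servers.

Follow-up Q: Can I define a rule instead, where every N documents form one chunk, M chunks are allocated in advance, and those M chunks are placed on different shards up front, so that later data goes straight into its pre-allocated chunk and nothing moves back and forth?
A: Yes, by manually pre-splitting. Taking the shop.user collection as an example:

1. `sh.shardCollection('shop.user',{userid:1});` shards the user collection on userid.
2. `for(var i=1;i<=40;i++) { sh.splitAt('shop.user',{userid:i*1000}) }` splits the collection at 1K, 2K, ..., 40K in advance. The chunks are still empty, and they are moved evenly across the shards.
3. Insert user documents through mongos. Each document lands in its pre-allocated chunk, so chunks no longer move back and forth.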
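The shard-key routing described above can be sketched in plain JavaScript. This is a simplified simulation, not MongoDB code: `findChunkIndex` and `chunkRange` are hypothetical helpers, and `-Infinity`/`Infinity` stand in for `$minKey`/`$maxKey`. Each chunk is assumed to cover a half-open range [min, max), which matches how the chunk ranges printed by `sh.status()` behave later in this walkthrough.

```javascript
// Hypothetical helpers (not a MongoDB API) that model range-based chunk routing.
// A sorted list of split points divides the key space into chunks; chunk i
// covers [min, max), and -Infinity / Infinity stand in for $minKey / $maxKey.

// Return the index of the chunk that owns `key` (0 .. splitPoints.length).
function findChunkIndex(splitPoints, key) {
  // Binary search for the first split point strictly greater than key.
  let lo = 0;
  let hi = splitPoints.length;
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (splitPoints[mid] <= key) {
      lo = mid + 1;
    } else {
      hi = mid;
    }
  }
  return lo;
}

// Return the [min, max) bounds of chunk `index`.
function chunkRange(splitPoints, index) {
  const min = index === 0 ? -Infinity : splitPoints[index - 1];
  const max = index === splitPoints.length ? Infinity : splitPoints[index];
  return { min, max };
}

// Split points from the shop.user example: 1000, 2000, ..., 40000.
const userSplits = Array.from({ length: 40 }, (_, i) => (i + 1) * 1000);

console.log(findChunkIndex(userSplits, 999));  // 0, i.e. [$minKey, 1000)
console.log(findChunkIndex(userSplits, 1000)); // 1, i.e. [1000, 2000)
console.log(chunkRange(userSplits, findChunkIndex(userSplits, 40000))); // { min: 40000, max: Infinity }
```

A binary search keeps the lookup logarithmic in the number of split points. A key equal to a split point belongs to the chunk that starts there, which is why userid 1000 lands in chunk 1 rather than chunk 0.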

Sharding

Deploying a sharded MongoDB cluster

The demo below runs everything on a single machine (10.0.0.11). A replica-set configuration object was prepared first:

    var rsconf = {
        _id: 'rs2',
        members: [
            {_id: 0, host: '10.0.0.11:27017'},
            {_id: 1, host: '10.0.0.11:27018'},
            {_id: 2, host: '10.0.0.11:27020'}
        ]
    }

It is not used in the rest of this walkthrough: the two shards below are started as standalone mongod instances, and port 27020 is taken by the config server.

Start two shard instances and one config server:

    mongod --dbpath /mongodb/m17 --logpath /mongodb/mlog/m17.log --fork --port 27017 --smallfiles
    mongod --dbpath /mongodb/m18 --logpath /mongodb/mlog/m18.log --fork --port 27018 --smallfiles
    mongod --dbpath /mongodb/m20 --logpath /mongodb/mlog/m20.log --fork --port 27020 --configsvr

A config server is required to store the cluster metadata, and it is started with the `--configsvr` option. Then start mongos, which must be told where the config server is through `--configdb`:

    mongos --logpath /mongodb/mlog/m30.log --port 30000 --configdb 10.0.0.11:27020 --fork

The actual session:

    [mongod@mcw01 ~]$ mongod --dbpath /mongodb/m17 --logpath /mongodb/mlog/m17.log --fork --port 27017 --smallfiles
    about to fork child process, waiting until server is ready for connections.
    forked process: 18608
    child process started successfully, parent exiting
    [mongod@mcw01 ~]$ mongod --dbpath /mongodb/m18 --logpath /mongodb/mlog/m18.log --fork --port 27018 --smallfiles
    about to fork child process, waiting until server is ready for connections.
    forked process: 18627
    child process started successfully, parent exiting
    [mongod@mcw01 ~]$ mongod --dbpath /mongodb/m20 --logpath /mongodb/mlog/m20.log --fork --port 27020 --configsvr
    about to fork child process, waiting until server is ready for connections.
    forked process: 18646
    child process started successfully, parent exiting
    [mongod@mcw01 ~]$ mongos --logpath /mongodb/mlog/m30.log --port 30000 --configdb 10.0.0.11:27020 --fork
    2022-03-05T00:26:41.452+0800 W SHARDING [main] Running a sharded cluster with fewer than 3 config servers should only be done for testing purposes and is not recommended for production.
    about to fork child process, waiting until server is ready for connections.
    forked process: 18667
    child process started successfully, parent exiting
    [mongod@mcw01 ~]$ ps -ef|grep -v grep |grep mongo
    root     16595 16566  0 Mar04 pts/0    00:00:00 su - mongod
    mongod   16596 16595  0 Mar04 pts/0    00:00:03 -bash
    root     17669 17593  0 Mar04 pts/1    00:00:00 su - mongod
    mongod   17670 17669  0 Mar04 pts/1    00:00:00 -bash
    root     17735 17715  0 Mar04 pts/2    00:00:00 su - mongod
    mongod   17736 17735  0 Mar04 pts/2    00:00:00 -bash
    mongod   18608     1  0 00:26 ?        00:00:03 mongod --dbpath /mongodb/m17 --logpath /mongodb/mlog/m17.log --fork --port 27017 --smallfiles
    mongod   18627     1  0 00:26 ?        00:00:03 mongod --dbpath /mongodb/m18 --logpath /mongodb/mlog/m18.log --fork --port 27018 --smallfiles
    mongod   18646     1  0 00:26 ?        00:00:04 mongod --dbpath /mongodb/m20 --logpath /mongodb/mlog/m20.log --fork --port 27020 --configsvr
    mongod   18667     1  0 00:26 ?        00:00:01 mongos --logpath /mongodb/mlog/m30.log --port 30000 --configdb 10.0.0.11:27020 --fork
    mongod   18698 16596  0 00:36 pts/0    00:00:00 ps -ef
    [mongod@mcw01 ~]$

At this point the config server and mongos are linked, but neither has anything to do with the two mongod shards yet. Next, connect to mongos and add the two shards (shard nodes):

    [mongod@mcw01 ~]$ mongo --port 30000
    MongoDB shell version: 3.2.8
    connecting to: 127.0.0.1:30000/test
    mongos> show dbs;
    config  0.000GB
    mongos> use config;
    switched to db config
    mongos> show tables;    # list the collections mongos keeps in the config database
    chunks
    lockpings
    locks
    mongos
    settings
    shards
    tags
    version
    mongos>
    mongos> bye
    [mongod@mcw01 ~]$ mongo --port 30000
    MongoDB shell version: 3.2.8
    connecting to: 127.0.0.1:30000/test
    mongos> sh.addShard('10.0.0.11:27017');    # add the two shards
    { "shardAdded" : "shard0000", "ok" : 1 }
    mongos> sh.addShard('10.0.0.11:27018');
    { "shardAdded" : "shard0001", "ok" : 1 }
    mongos> sh.status()    # check the shard status
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }    # both shards are listed,
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }    # i.e. they are now registered in the config server
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          No recent migrations
      databases:
    mongos>
    mongos> use test
    switched to db test
    mongos> db.stu.insert({name:'poly'});    # insert four documents through mongos; they can be queried back
    WriteResult({ "nInserted" : 1 })
    mongos> db.stu.insert({name:'lily'});
    WriteResult({ "nInserted" : 1 })
    mongos> db.stu.insert({name:'hmm'});
    WriteResult({ "nInserted" : 1 })
    mongos> db.stu.insert({name:'lucy'});
    WriteResult({ "nInserted" : 1 })
    mongos> db.stu.find();
    { "_id" : ObjectId("6222427bc425e356ae71d452"), "name" : "poly" }
    { "_id" : ObjectId("62224282c425e356ae71d453"), "name" : "lily" }
    { "_id" : ObjectId("62224287c425e356ae71d454"), "name" : "hmm" }
    { "_id" : ObjectId("6222428dc425e356ae71d455"), "name" : "lucy" }
    mongos>

The documents are now visible on 27017:

    [mongod@mcw01 ~]$ mongo --port 27017
    .......
    > show dbs;
    local  0.000GB
    test   0.000GB
    > use test;
    switched to db test
    > db.stu.find();
    { "_id" : ObjectId("6222427bc425e356ae71d452"), "name" : "poly" }
    { "_id" : ObjectId("62224282c425e356ae71d453"), "name" : "lily" }
    { "_id" : ObjectId("62224287c425e356ae71d454"), "name" : "hmm" }
    { "_id" : ObjectId("6222428dc425e356ae71d455"), "name" : "lucy" }
    >

but not on 27018:

    [mongod@mcw01 ~]$ mongo --port 27018
    .......
    > show dbs;
    local  0.000GB
    >

No sharding rule has been defined for this data. Check the sharding status in mongos:

    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          No recent migrations
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
    mongos>

As shown above, the test database has `"partitioned" : false`: sharding is not enabled for it, so all of its data is stored on its primary shard, shard0000.

Enabling sharding on a database

In the example below, shop is a database that does not exist yet. After sharding is enabled on it, `sh.status()` shows `"partitioned" : true` with shard0001 as its primary shard. The setup is not complete yet, though, because no collection in it has been sharded.

    mongos> show dbs;
    config  0.000GB
    test    0.000GB
    mongos> sh.enable
    sh.enableBalancing(  sh.enableSharding(
    mongos> sh.enableSharding('shop');
    { "ok" : 1 }
    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          No recent migrations
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
    mongos>

Choosing which collection in the database to shard, and which field to shard it on

    mongos> sh.shardCollection('shop.goods',{goods_id:1});
    { "collectionsharded" : "shop.goods", "ok" : 1 }
    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          No recent migrations
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
          shop.goods                          # the goods collection in shop is now sharded
            shard key: { "goods_id" : 1 }     # this field is the shard key
            unique: false
            balancing: true
            chunks:
              shard0001  1                    # the single chunk starts out on shard0001
            { "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 0)
    mongos>

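Insert a batch of documents as below. Almost all of them end up on shard0001, because the chunk size is still the default, which is large (64 MB).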

    for(var i=1;i<=10000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf'}) };

The session:

    [mongod@mcw01 ~]$ mongo --port 30000
    MongoDB shell version: 3.2.8
    connecting to: 127.0.0.1:30000/test
    mongos> use shop;
    switched to db shop
    mongos> for(var i=1;i<=10000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf'}) };
    WriteResult({ "nInserted" : 1 })
    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          1 : Success
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
          shop.goods
            shard key: { "goods_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0000  1
              shard0001  2
            { "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : 2 } on : shard0000 Timestamp(2, 0)
            { "goods_id" : 2 } -->> { "goods_id" : 12 } on : shard0001 Timestamp(2, 1)
            { "goods_id" : 12 } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 3)
    mongos>
    mongos> db.goods.find().count();
    10000
    mongos>

Only one document is on 27017:

    [mongod@mcw01 ~]$ mongo --port 27017
    > use shop
    switched to db shop
    > show tables;
    goods
    > db.goods.find().count();
    1
    > db.goods.find();
    { "_id" : ObjectId("622252d8b541d8768347746e"), "goods_id" : 1, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }
    >

27018, the second shard, holds all the rest, so the data is unevenly distributed. This matches the chunk ranges shown above: every goods_id from 2 upward is on shard0001.

    [mongod@mcw01 ~]$ mongo --port 27018
    > use shop;
    switched to db shop
    > db.goods.find().count();
    9999
    > db.goods.find().skip(9996);
    { "_id" : ObjectId("622252e3b541d87683479b7b"), "goods_id" : 9998, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }
    { "_id" : ObjectId("622252e3b541d87683479b7c"), "goods_id" : 9999, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }
    { "_id" : ObjectId("622252e3b541d87683479b7d"), "goods_id" : 10000, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }
    >

Changing the chunk size

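    mongos> use config;    # on mongos, switch to the config database first
    switched to db config
    mongos> show tables;
    changelog
    chunks
    collections
    databases
    lockpings
    locks
    mongos
    settings
    shards
    tags
    version
    mongos> db.settings.find();    # the chunk size is stored in settings; the default is 64 (MB)
    { "_id" : "chunksize", "value" : NumberLong(64) }
    mongos> db.settings.find();
    { "_id" : "chunksize", "value" : NumberLong(64) }
    mongos> db.settings.save({_id:'chunksize'},{$set:{value: 1}});    # wrong: save() does not take $set-style update syntax
    WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })
    mongos> db.settings.find();    # the document was replaced by {_id:'chunksize'} and lost its value
    { "_id" : "chunksize" }
    mongos> db.settings.save({ "_id" : "chunksize", "value" : NumberLong(64) });
    WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })
    mongos> db.settings.save({ "_id" : "chunksize", "value" : NumberLong(1) });    # set the chunk size to 1 MB
    WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })
    mongos> db.settings.find();    # confirm the change
    { "_id" : "chunksize", "value" : NumberLong(1) }
    mongos>

`save()` replaces the whole document, so it must be given the complete document (`_id` together with `value`). Its second argument is not applied as an update, which is why the first attempt wiped out `value`. To change a single field with `$set`, use `update()` instead, e.g. `db.settings.update({_id:'chunksize'},{$set:{value:1}})`.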
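A rough back-of-the-envelope calculation shows why a 64 MB chunk size produced so few chunks, and why 1 MB produces many more. `approxDocsPerChunk` is a hypothetical helper, and the 100-byte document size in the example is an assumed, illustrative value. In practice you would read the average size from the `avgObjSize` field of `db.goods.stats()`. Real auto-splitting is heuristic, so actual chunk boundaries will not match this estimate exactly.

```javascript
// Hypothetical helper (not a MongoDB API): rough number of documents that fit
// in one chunk of `chunkSizeMB`, given the average document size in bytes.
// Real auto-splits are triggered heuristically, so treat this as an estimate.
function approxDocsPerChunk(chunkSizeMB, avgObjSizeBytes) {
  if (chunkSizeMB <= 0 || avgObjSizeBytes <= 0) {
    throw new RangeError("sizes must be positive");
  }
  return Math.floor((chunkSizeMB * 1024 * 1024) / avgObjSizeBytes);
}

// Assumed 100-byte documents (illustrative, not measured in this walkthrough):
console.log(approxDocsPerChunk(64, 100)); // 671088
console.log(approxDocsPerChunk(1, 100));  // 10485
```

Under that assumption, a single 64 MB chunk could hold hundreds of thousands of small documents, which is consistent with 100,000 inserts producing only a couple of chunks.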

Next, insert 150,000 documents and see how they are distributed under the sharding rule.

    for(var i=1;i<=150000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf fsff'}) };

The previous goods collection had already been dropped, and its sharding rule was dropped with it, so the rule has to be created again:

    mongos> use shop;
    switched to db shop
    mongos> db.goods.drop();
    false
    mongos> for(var i=1;i<=150000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf fsff'}) };
    WriteResult({ "nInserted" : 1 })
    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          1 : Success
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
    mongos> sh.shardCollection('shop.goods',{goods_id:1});
    {
      "proposedKey" : { "goods_id" : 1 },
      "curIndexes" : [ { "v" : 1, "key" : { "_id" : 1 }, "name" : "_id_", "ns" : "shop.goods" } ],
      "ok" : 0,
      "errmsg" : "please create an index that starts with the shard key before sharding."
    }
    mongos>

Because the data was inserted before the collection was sharded, `shardCollection` refuses: a non-empty collection needs an index that starts with the shard key (for example `db.goods.createIndex({goods_id:1})`) before it can be sharded. For an empty collection, `shardCollection` creates that index itself. Here the collection is simply dropped, the sharding rule is recreated, and then the data is inserted:

    mongos> db.goods.drop();
    true
    mongos> sh.shardCollection('shop.goods',{goods_id:1});
    { "collectionsharded" : "shop.goods", "ok" : 1 }
    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          1 : Success
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
          shop.goods
            shard key: { "goods_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0001  1
            { "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 0)

After reinserting the data, one shard has 7 chunks and the other has 20, which is somewhat uneven. Manual pre-splitting works better.

    mongos> for(var i=1;i<=150000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf fsff'}) };
    WriteResult({ "nInserted" : 1 })
    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          8 : Success
          13 : Failed with error 'aborted', from shard0001 to shard0000
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
          shop.goods
            shard key: { "goods_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0000  7
              shard0001  20
            too many chunks to print, use verbose if you want to force print
    mongos>

Following the hint, after a few attempts the detailed chunk layout was printed with:

    mongos> sh.status({verbose:1});
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        { "_id" : "mcw01:30000", "ping" : ISODate("2022-03-04T18:32:36.132Z"), "up" : NumberLong(7555), "waiting" : true, "mongoVersion" : "3.2.8" }
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          8 : Success
          29 : Failed with error 'aborted', from shard0001 to shard0000
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
          shop.goods
            shard key: { "goods_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0000  7
              shard0001  20
            { "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : 2 } on : shard0000 Timestamp(8, 1)
            { "goods_id" : 2 } -->> { "goods_id" : 12 } on : shard0001 Timestamp(7, 1)
            { "goods_id" : 12 } -->> { "goods_id" : 5473 } on : shard0001 Timestamp(2, 2)
            { "goods_id" : 5473 } -->> { "goods_id" : 12733 } on : shard0001 Timestamp(2, 3)
            { "goods_id" : 12733 } -->> { "goods_id" : 18194 } on : shard0000 Timestamp(3, 2)
            { "goods_id" : 18194 } -->> { "goods_id" : 23785 } on : shard0000 Timestamp(3, 3)
            { "goods_id" : 23785 } -->> { "goods_id" : 29246 } on : shard0001 Timestamp(4, 2)
            { "goods_id" : 29246 } -->> { "goods_id" : 34731 } on : shard0001 Timestamp(4, 3)
            { "goods_id" : 34731 } -->> { "goods_id" : 40192 } on : shard0000 Timestamp(5, 2)
            { "goods_id" : 40192 } -->> { "goods_id" : 45913 } on : shard0000 Timestamp(5, 3)
            { "goods_id" : 45913 } -->> { "goods_id" : 51374 } on : shard0001 Timestamp(6, 2)
            { "goods_id" : 51374 } -->> { "goods_id" : 57694 } on : shard0001 Timestamp(6, 3)
            { "goods_id" : 57694 } -->> { "goods_id" : 63155 } on : shard0000 Timestamp(7, 2)
            { "goods_id" : 63155 } -->> { "goods_id" : 69367 } on : shard0000 Timestamp(7, 3)
            { "goods_id" : 69367 } -->> { "goods_id" : 74828 } on : shard0001 Timestamp(8, 2)
            { "goods_id" : 74828 } -->> { "goods_id" : 81170 } on : shard0001 Timestamp(8, 3)
            { "goods_id" : 81170 } -->> { "goods_id" : 86631 } on : shard0001 Timestamp(8, 5)
            { "goods_id" : 86631 } -->> { "goods_id" : 93462 } on : shard0001 Timestamp(8, 6)
            { "goods_id" : 93462 } -->> { "goods_id" : 98923 } on : shard0001 Timestamp(8, 8)
            { "goods_id" : 98923 } -->> { "goods_id" : 106012 } on : shard0001 Timestamp(8, 9)
            { "goods_id" : 106012 } -->> { "goods_id" : 111473 } on : shard0001 Timestamp(8, 11)
            { "goods_id" : 111473 } -->> { "goods_id" : 118412 } on : shard0001 Timestamp(8, 12)
            { "goods_id" : 118412 } -->> { "goods_id" : 123873 } on : shard0001 Timestamp(8, 14)
            { "goods_id" : 123873 } -->> { "goods_id" : 130255 } on : shard0001 Timestamp(8, 15)
            { "goods_id" : 130255 } -->> { "goods_id" : 135716 } on : shard0001 Timestamp(8, 17)
            { "goods_id" : 135716 } -->> { "goods_id" : 142058 } on : shard0001 Timestamp(8, 18)
            { "goods_id" : 142058 } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(8, 19)
    mongos>

Checking again a day later, the chunks are fairly evenly split between the two shards (13 and 14). This shows that the balancer does keep balancing automatically in the background, but not quickly: it takes time.

    [mongod@mcw01 ~]$ mongo --port 30000
    MongoDB shell version: 3.2.8
    connecting to: 127.0.0.1:30000/test
    mongos> sh.status()
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          14 : Success
          65 : Failed with error 'aborted', from shard0001 to shard0000
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
          shop.goods
            shard key: { "goods_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0000  13
              shard0001  14
            too many chunks to print, use verbose if you want to force print
    mongos>
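The end state of 13 and 14 chunks can be reproduced with a simplified model of a chunk-count balancer. The rules below are an assumption based on the MongoDB 3.2-era documentation, not code taken from MongoDB. A balancing round starts only when the gap between the fullest and emptiest shard reaches a threshold that depends on the total chunk count (2 below 20 chunks, 4 below 80, otherwise 8). Once a round starts, chunks move one at a time until the gap drops below 2. Newer MongoDB versions balance by data size instead, and the "aborted" migration failures seen above are not modelled. `migrationThreshold` and `simulateBalancing` are hypothetical names.

```javascript
// Simplified model of chunk-count balancing (assumed MongoDB 3.2-era rules):
// - start only if (max chunks - min chunks) >= threshold(total chunks);
// - then move one chunk at a time from the fullest to the emptiest shard
//   until the difference is below 2.
// Failed migrations are not modelled.
function migrationThreshold(totalChunks) {
  if (totalChunks < 20) return 2;
  if (totalChunks < 80) return 4;
  return 8;
}

function simulateBalancing(chunkCounts) {
  const counts = { ...chunkCounts };
  const shards = Object.keys(counts);
  const total = shards.reduce((sum, s) => sum + counts[s], 0);
  // Difference between the fullest and the emptiest shard.
  const spread = () => {
    const values = shards.map((s) => counts[s]);
    return Math.max(...values) - Math.min(...values);
  };
  let moves = 0;
  if (spread() < migrationThreshold(total)) {
    return { counts, moves }; // below the threshold: no round starts
  }
  while (spread() >= 2) {
    const from = shards.reduce((a, b) => (counts[b] > counts[a] ? b : a));
    const to = shards.reduce((a, b) => (counts[b] < counts[a] ? b : a));
    counts[from] -= 1;
    counts[to] += 1;
    moves += 1;
  }
  return { counts, moves };
}

console.log(simulateBalancing({ shard0000: 7, shard0001: 20 }));
// { counts: { shard0000: 13, shard0001: 14 }, moves: 6 }
```

Under the same model, starting from 0 and 41 chunks gives 20 moves and a 20/21 split. That matches the shop.user layout shown in the pre-splitting section, and it is consistent with the success count in the migration results rising from 14 to 34.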

Manual pre-splitting

Sharding commands

    for(var i=1;i<=40;i++){sh.splitAt('shop.user',{userid:i*1000})}

This splits the user collection in the shop database at every userid that is a multiple of 1000. Each split point creates a new chunk. The sharding helpers available in the shell are listed by `sh.help()`:

    mongos> sh.help();
        sh.addShard( host )                       server:port OR setname/server:port
        sh.enableSharding(dbname)                 enables sharding on the database dbname
        sh.shardCollection(fullName,key,unique)   shards the collection
        sh.splitFind(fullName,find)               splits the chunk that find is in at the median
        sh.splitAt(fullName,middle)               splits the chunk that middle is in at middle
        sh.moveChunk(fullName,find,to)            move the chunk where 'find' is to 'to' (name of shard)
        sh.setBalancerState( <bool on or not> )   turns the balancer on or off true=on, false=off
        sh.getBalancerState()                     return true if enabled
        sh.isBalancerRunning()                    return true if the balancer has work in progress on any mongos
        sh.disableBalancing(coll)                 disable balancing on one collection
        sh.enableBalancing(coll)                  re-enable balancing on one collection
        sh.addShardTag(shard,tag)                 adds the tag to the shard
        sh.removeShardTag(shard,tag)              removes the tag from the shard
        sh.addTagRange(fullName,min,max,tag)      tags the specified range of the given collection
        sh.removeTagRange(fullName,min,max,tag)   removes the tagged range of the given collection
        sh.status()                               prints a general overview of the cluster
    mongos>

Pre-splitting

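Shard the user collection with userid as the shard key. Suppose we expect 40 million users within a year. Each of the two shards would then take 20 million, divided into 20 chunks of 1 million documents each. Here we simulate this at small scale with about 40 chunks of 1,000 documents each. Pre-splitting like this is done with the split command:

    mongos> use shop
    switched to db shop
    mongos> sh.shardCollection('shop.user',{userid:1})
    { "collectionsharded" : "shop.user", "ok" : 1 }
    mongos> # split shop.user at every userid that is a multiple of 1000, creating a new chunk each time
    mongos> for(var i=1;i<=40;i++){sh.splitAt('shop.user',{userid:i*1000})}
    { "ok" : 1 }
    mongos>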
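The capacity arithmetic above (40 million users, 2 shards, 20 chunks per shard) can be turned into split points with a small planning sketch. `planPreSplit` is a hypothetical helper, not part of MongoDB. It assumes integer shard keys that grow from 1, and it splits at multiples of `docsPerChunk`, as the `sh.splitAt` loop does. The generated strings use the real `sh.splitAt(fullName, middle)` helper listed by `sh.help()`.

```javascript
// Hypothetical planning helper (not part of MongoDB). Assumes integer shard
// keys growing from 1 and splits at multiples of docsPerChunk, like the
// sh.splitAt loop in the text.
function planPreSplit(expectedDocs, shardCount, chunksPerShard) {
  const totalChunks = shardCount * chunksPerShard;
  const docsPerChunk = Math.ceil(expectedDocs / totalChunks);
  // N chunks need N - 1 split points.
  const splitPoints = [];
  for (let i = 1; i < totalChunks; i++) {
    splitPoints.push(i * docsPerChunk);
  }
  return { totalChunks, docsPerChunk, splitPoints };
}

// 40 million users, 2 shards, 20 chunks per shard.
const plan = planPreSplit(40000000, 2, 20);
console.log(plan.totalChunks, plan.docsPerChunk, plan.splitPoints.length); // 40 1000000 39

// Turn the plan into mongo shell commands that could be run on mongos.
const commands = plan.splitPoints.map((p) => `sh.splitAt('shop.user', {userid: ${p}})`);
console.log(commands[0]); // sh.splitAt('shop.user', {userid: 1000000})
```

The plan yields 40 chunks from 39 split points. The small-scale demo calls `sh.splitAt` 40 times instead, so it ends up with 41 chunks. The extra chunk is [40000, $maxKey), which catches any userid beyond the planned range.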

Checking the result of pre-splitting

Look at the user collection. Once it has settled at 20 and 21 chunks, chunks are no longer migrated back and forth because of data imbalance, which would otherwise drive up the machines' I/O.

    mongos> sh.status();
    --- Sharding Status ---
      sharding version: { "_id" : 1, "minCompatibleVersion" : 5, "currentVersion" : 6, "clusterId" : ObjectId("62223dc1dd5791b451d9b441") }
      shards:
        { "_id" : "shard0000", "host" : "10.0.0.11:27017" }
        { "_id" : "shard0001", "host" : "10.0.0.11:27018" }
      active mongoses:
        "3.2.8" : 1
      balancer:
        Currently enabled: yes
        Currently running: no
        Failed balancer rounds in last 5 attempts: 0
        Migration Results for the last 24 hours:
          34 : Success
          65 : Failed with error 'aborted', from shard0001 to shard0000
      databases:
        { "_id" : "test", "primary" : "shard0000", "partitioned" : false }
        { "_id" : "shop", "primary" : "shard0001", "partitioned" : true }
          shop.goods
            shard key: { "goods_id" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0000  13
              shard0001  14
            too many chunks to print, use verbose if you want to force print
          shop.user
            shard key: { "userid" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0000  20
              shard0001  21
            too many chunks to print, use verbose if you want to force print
    mongos>

The detailed view shows how the collection is split. No data has been inserted yet, but because we know roughly how much data to expect, the userid ranges have already been assigned to shards. When data is inserted, each document is stored in the chunk whose range covers its userid.

    mongos> sh.status({verbose:1});
    --- Sharding Status ---
    .............
          shop.user
            shard key: { "userid" : 1 }
            unique: false
            balancing: true
            chunks:
              shard0000  20
              shard0001  21
            { "userid" : { "$minKey" : 1 } } -->> { "userid" : 1000 } on : shard0000 Timestamp(2, 0)
            { "userid" : 1000 } -->> { "userid" : 2000 } on : shard0000 Timestamp(3, 0)
            { "userid" : 2000 } -->> { "userid" : 3000 } on : shard0000 Timestamp(4, 0)
            { "userid" : 3000 } -->> { "userid" : 4000 } on : shard0000 Timestamp(5, 0)
            { "userid" : 4000 } -->> { "userid" : 5000 } on : shard0000 Timestamp(6, 0)
            { "userid" : 5000 } -->> { "userid" : 6000 } on : shard0000 Timestamp(7, 0)
            { "userid" : 6000 } -->> { "userid" : 7000 } on : shard0000 Timestamp(8, 0)
            { "userid" : 7000 } -->> { "userid" : 8000 } on : shard0000 Timestamp(9, 0)
            { "userid" : 8000 } -->> { "userid" : 9000 } on : shard0000 Timestamp(10, 0)
            { "userid" : 9000 } -->> { "userid" : 10000 } on : shard0000 Timestamp(11, 0)
            { "userid" : 10000 } -->> { "userid" : 11000 } on : shard0000 Timestamp(12, 0)
            { "userid" : 11000 } -->> { "userid" : 12000 } on : shard0000 Timestamp(13, 0)
            { "userid" : 12000 } -->> { "userid" : 13000 } on : shard0000 Timestamp(14, 0)
            { "userid" : 13000 } -->> { "userid" : 14000 } on : shard0000 Timestamp(15, 0)
            { "userid" : 14000 } -->> { "userid" : 15000 } on : shard0000 Timestamp(16, 0)
            { "userid" : 15000 } -->> { "userid" : 16000 } on : shard0000 Timestamp(17, 0)
            { "userid" : 16000 } -->> { "userid" : 17000 } on : shard0000 Timestamp(18, 0)
            { "userid" : 17000 } -->> { "userid" : 18000 } on : shard0000 Timestamp(19, 0)
            { "userid" : 18000 } -->> { "userid" : 19000 } on : shard0000 Timestamp(20, 0)
            { "userid" : 19000 } -->> { "userid" : 20000 } on : shard0000 Timestamp(21, 0)
            { "userid" : 20000 } -->> { "userid" : 21000 } on : shard0001 Timestamp(21, 1)
            { "userid" : 21000 } -->> { "userid" : 22000 } on : shard0001 Timestamp(1, 43)
            { "userid" : 22000 } -->> { "userid" : 23000 } on : shard0001 Timestamp(1, 45)
            { "userid" : 23000 } -->> { "userid" : 24000 } on : shard0001 Timestamp(1, 47)
            { "userid" : 24000 } -->> { "userid" : 25000 } on : shard0001 Timestamp(1, 49)
            { "userid" : 25000 } -->> { "userid" : 26000 } on : shard0001 Timestamp(1, 51)
            { "userid" : 26000 } -->> { "userid" : 27000 } on : shard0001 Timestamp(1, 53)
            { "userid" : 27000 } -->> { "userid" : 28000 } on : shard0001 Timestamp(1, 55)
            { "userid" : 28000 } -->> { "userid" : 29000 } on : shard0001 Timestamp(1, 57)
            { "userid" : 29000 } -->> { "userid" : 30000 } on : shard0001 Timestamp(1, 59)
            { "userid" : 30000 } -->> { "userid" : 31000 } on : shard0001 Timestamp(1, 61)
            { "userid" : 31000 } -->> { "userid" : 32000 } on : shard0001 Timestamp(1, 63)
            { "userid" : 32000 } -->> { "userid" : 33000 } on : shard0001 Timestamp(1, 65)
            { "userid" : 33000 } -->> { "userid" : 34000 } on : shard0001 Timestamp(1, 67)
            { "userid" : 34000 } -->> { "userid" : 35000 } on : shard0001 Timestamp(1, 69)
            { "userid" : 35000 } -->> { "userid" : 36000 } on : shard0001 Timestamp(1, 71)
            { "userid" : 36000 } -->> { "userid" : 37000 } on : shard0001 Timestamp(1, 73)
            { "userid" : 37000 } -->> { "userid" : 38000 } on : shard0001 Timestamp(1, 75)
            { "userid" : 38000 } -->> { "userid" : 39000 } on : shard0001 Timestamp(1, 77)
            { "userid" : 39000 } -->> { "userid" : 40000 } on : shard0001 Timestamp(1, 79)
            { "userid" : 40000 } -->> { "userid" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 80)
    mongos>

Inserting data: how manual pre-splitting keeps data from being copied back and forth between nodes

When the chunks are getting close to full, deal with it early. Otherwise adding a new shard later triggers massive data movement, and the resulting I/O load can bring servers down.

Insert data through mongos:

    mongos> for(var i=1;i<=40000;i++){db.user.insert({userid:i,name:'xiao ma guo he'})};
    WriteResult({ "nInserted" : 1 })
    mongos>

27017 holds 19,999 documents, userid 1 to 19999. According to the chunk details above, shard0000 owns everything below 20000, and each range includes its lower bound but excludes its upper bound. userid 20000 is therefore on shard0001. shard0001 holds 20000 to 39999 (20,000 documents) plus 40000 from the last chunk, [40000, $maxKey), for 20,001 documents in total. Pre-splitting gives a relatively stable layout. It also avoids the performance cost of copying data back and forth between nodes when inserts make the distribution uneven.

    [mongod@mcw01 ~]$ mongo --port 27017
    > use shop
    switched to db shop
    > db.user.find().count();
    19999
    > db.user.find().skip(19997);
    { "_id" : ObjectId("6222d91f69eed283bf054e96"), "userid" : 19998, "name" : "xiao ma guo he" }
    { "_id" : ObjectId("6222d91f69eed283bf054e97"), "userid" : 19999, "name" : "xiao ma guo he" }
    >
    [mongod@mcw01 ~]$ mongo --port 27018
    > use shop
    switched to db shop
    > db.user.find().count();
    20001
    > db.user.find().skip(19999);
    { "_id" : ObjectId("6222d93669eed283bf059cb7"), "userid" : 39999, "name" : "xiao ma guo he" }
    { "_id" : ObjectId("6222d93669eed283bf059cb8"), "userid" : 40000, "name" : "xiao ma guo he" }
    > db.user.find().limit(1);
    { "_id" : ObjectId("6222d91f69eed283bf054e98"), "userid" : 20000, "name" : "xiao ma guo he" }
    >
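The per-shard counts above can be double-checked with a small calculation over half-open chunk ranges. `countPerShard` is a hypothetical helper. In it, `null` stands in for `$minKey`/`$maxKey`, and the keys are assumed to be the consecutive integers 1..n that the insert loops in this walkthrough produce. Merging adjacent chunks that sit on the same shard does not change the counts, so the shop.user layout can be collapsed to two ranges. The same function also reproduces the 1 / 9,999 split seen earlier for shop.goods.

```javascript
// Hypothetical helper: count how many of the integer keys 1..n fall on each
// shard, given chunks as half-open ranges [min, max). null stands in for
// $minKey (as min) or $maxKey (as max).
function countPerShard(chunks, n) {
  const counts = {};
  for (const { min, max, shard } of chunks) {
    const lo = Math.max(min === null ? 1 : min, 1);             // first key in range
    const hi = Math.min(max === null ? n + 1 : max, n + 1);     // one past the last key
    counts[shard] = (counts[shard] || 0) + Math.max(0, hi - lo);
  }
  return counts;
}

// shop.user collapsed: [$minKey, 20000) on shard0000, [20000, $maxKey) on shard0001.
console.log(countPerShard([
  { min: null, max: 20000, shard: "shard0000" },
  { min: 20000, max: null, shard: "shard0001" },
], 40000));
// { shard0000: 19999, shard0001: 20001 }

// shop.goods after 10,000 inserts, using the three chunks printed earlier.
console.log(countPerShard([
  { min: null, max: 2, shard: "shard0000" },
  { min: 2, max: 12, shard: "shard0001" },
  { min: 12, max: null, shard: "shard0001" },
], 10000));
// { shard0000: 1, shard0001: 9999 }
```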