Facial Expression Recognition with a Convolutional Neural Network in PyTorch (Detailed Steps)

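Contents

I. Project Background

II. Data Processing

1. Separating Labels and Features

2. Data Visualization

3. Training and Validation Sets

III. Model Construction

IV. Model Training

V. Complete Code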

I. Project Background

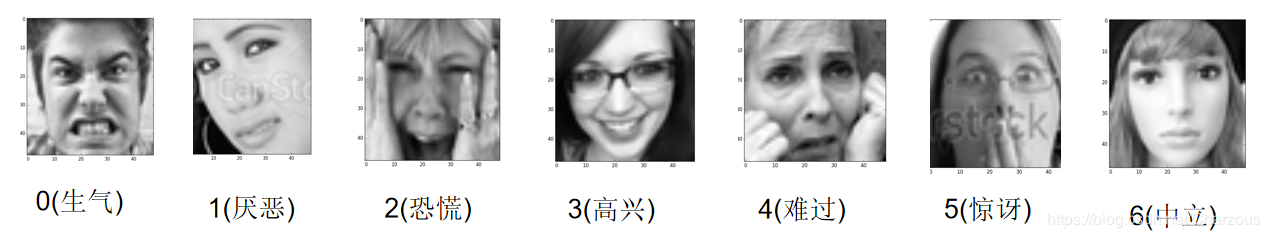

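The dataset cnn_train.csv contains the label and feature of each image of a human facial expression. Facial expression recognition is treated here as a classification problem with 7 classes.

The label takes one of 7 expression types:

There are 28,709 labels in total, which means the dataset contains 28,709 expression images.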

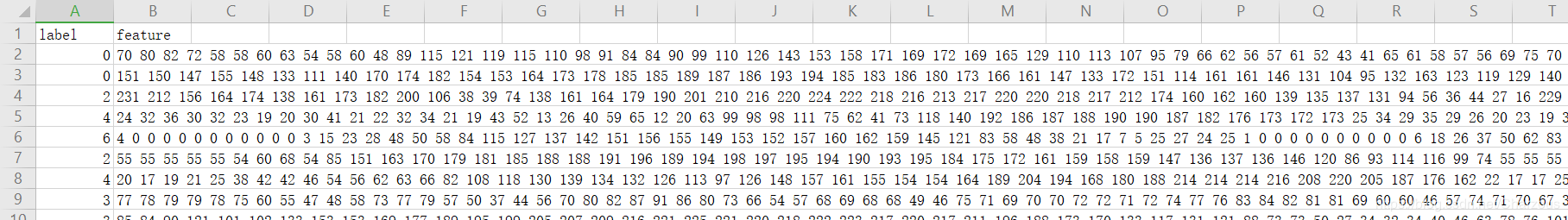

Each row is one expression image of 48×48 = 2304 pixels, that is, 48×48 grayscale intensity values (0 is black, 255 is white).
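To make the row layout concrete, here is a minimal sketch in plain Python that turns one flattened pixel string into a 48×48 grid. The sample string is synthetic (made up for illustration, not taken from cnn_train.csv), the helper name `row_to_grid` is hypothetical, and the sketch assumes the pixel values are separated by whitespace.

```python
# Sketch: reshape one flattened row of 2304 grayscale values into 48 rows of 48.
SIDE = 48


def row_to_grid(feature: str, side: int = SIDE) -> list[list[int]]:
    """Split a whitespace-separated pixel string into a side x side grid."""
    pixels = [int(v) for v in feature.split()]
    if len(pixels) != side * side:
        raise ValueError(f"expected {side * side} pixels, got {len(pixels)}")
    # Row r of the image is the r-th consecutive slice of `side` values.
    return [pixels[r * side:(r + 1) * side] for r in range(side)]


# Synthetic example row: values cycle through 0..255 (not real data).
sample_feature = " ".join(str(i % 256) for i in range(SIDE * SIDE))
grid = row_to_grid(sample_feature)
print(len(grid), len(grid[0]))  # 48 48
print(grid[1][0])               # 48: row 1, column 0 is element 48 of the flat row
```

The same row-major convention is what a NumPy `reshape((48, 48))` applies.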

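II. Data Processing

1. Separating Labels and Features

To make the dataset easier to read later on, this step processes the original data and saves the labels and the features separately as cnn_label.csv and cnn_data.csv.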

```python
# cnn_feature_label.py: separate the labels from the pixel data
import pandas as pd

path = 'cnn_train.csv'  # path to the original data

# Read the data
df = pd.read_csv(path)
# Extract the label column
df_y = df[['label']]
# Extract the feature (pixel) column
df_x = df[['feature']]
# Write the labels to cnn_label.csv
df_y.to_csv('cnn_label.csv', index=False, header=False)
# Write the feature data to cnn_data.csv
df_x.to_csv('cnn_data.csv', index=False, header=False)
```

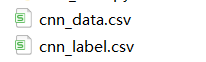

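Running the script generates the two result files, cnn_label.csv and cnn_data.csv.

2. Data Visualization

With the labels separated, the next step processes the features further: the 2304 pixel values in each data row are assembled into a 48×48 expression image.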

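```python
# face_view.py: data visualization
import os

import cv2
import numpy as np

# Folder where the images are stored
path = './face'
os.makedirs(path, exist_ok=True)  # cv2.imwrite does not create missing folders

# Read the pixel data (one row of 2304 values per image)
data = np.loadtxt('cnn_data.csv')

# Process the data row by row
for i in range(data.shape[0]):
    # Reshape to 48x48 and convert to 8-bit so it can be saved as a JPEG
    face_array = data[i, :].reshape((48, 48)).astype(np.uint8)
    # Write the image as <row index>.jpg
    cv2.imwrite(os.path.join(path, '{}.jpg'.format(i)), face_array)
```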

This code writes 28,709 expression images, so it takes a little while to run.

The result looks like this:

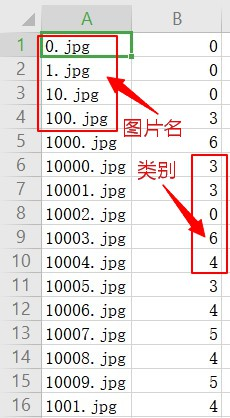

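3. Training and Validation Sets

Step 1: to train the model, we need to split the data into a training set and a validation set. There are 28,709 images in total; I use the first 24,000 as the training set and the rest as the validation set. Create the folders cnn_train and cnn_val, put 0.jpg through 23999.jpg into cnn_train, and put the remaining images into cnn_val.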
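The split can also be scripted instead of being done by hand. The following is a minimal sketch, assuming the images are named `<index>.jpg` as produced in the visualization step; the function name `split_images` is hypothetical, and it copies the files rather than moving them so the source folder stays intact. The demo runs on a throwaway directory with empty placeholder files.

```python
import os
import shutil
import tempfile


def split_images(src_dir, train_dir, val_dir, n_train):
    """Copy <index>.jpg files: indices below n_train go to train_dir, the rest to val_dir."""
    os.makedirs(train_dir, exist_ok=True)
    os.makedirs(val_dir, exist_ok=True)
    counts = {"train": 0, "val": 0}
    for name in os.listdir(src_dir):
        stem, ext = os.path.splitext(name)
        if ext != ".jpg" or not stem.isdigit():
            continue  # skip anything that is not an <index>.jpg file
        if int(stem) < n_train:
            shutil.copy(os.path.join(src_dir, name), train_dir)
            counts["train"] += 1
        else:
            shutil.copy(os.path.join(src_dir, name), val_dir)
            counts["val"] += 1
    return counts


# Demo: 10 empty placeholder files, split 8 / 2.
with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "face")
    os.makedirs(src)
    for i in range(10):
        open(os.path.join(src, f"{i}.jpg"), "w").close()
    demo_counts = split_images(
        src, os.path.join(tmp, "cnn_train"), os.path.join(tmp, "cnn_val"), n_train=8
    )
    print(demo_counts)  # {'train': 8, 'val': 2}
```

For the split described above, the call would use `n_train=24000` with the folder of generated images as `src_dir`.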

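Step 2: record which class each image belongs to in a dataset.csv file, running the labeling task once for the training folder and once for the validation folder created above.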

```python
# cnn_picture_label.py: label each expression image with its class
import os

import pandas as pd


def data_label(path):
    # Read the label file
    df_label = pd.read_csv('cnn_label.csv', header=None)
    # List all files in the folder
    files_dir = os.listdir(path)
    path_list = []   # image file names
    label_list = []  # label of each image
    for file_dir in files_dir:
        # For each image, record its file name and look up its label
        # using the index encoded in the file name
        name, ext = os.path.splitext(file_dir)
        if ext == '.jpg':
            path_list.append(file_dir)
            label_list.append(df_label.iat[int(name), 0])

    # Write both lists to dataset.csv inside the folder
    df = pd.DataFrame({'path': path_list, 'label': label_list})
    df.to_csv(os.path.join(path, 'dataset.csv'), index=False, header=False)


def main():
    # Folder paths (adjust to your own machine)
    train_path = 'D:\\PyCharm_Project\\deep learning\\model\\cnn_train'
    val_path = 'D:\\PyCharm_Project\\deep learning\\model\\cnn_val'
    data_label(train_path)
    data_label(val_path)


if __name__ == '__main__':
    main()
```
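The key idea in this step is that the label is looked up by the index in the file name, not by the position of the file in the directory listing, since `os.listdir` returns names in arbitrary order. A small pure-Python sketch of that lookup follows; the label values and the listing are invented for illustration, and `labels_for_files` is a hypothetical helper.

```python
import os


def labels_for_files(file_names, labels):
    """Pair each <index>.jpg with labels[index], ignoring other files."""
    pairs = []
    for name in file_names:
        stem, ext = os.path.splitext(name)
        if ext == ".jpg":
            pairs.append((name, labels[int(stem)]))
    return pairs


all_labels = [3, 0, 6, 2, 4]                          # hypothetical labels for images 0..4
listing = ["4.jpg", "0.jpg", "dataset.csv", "2.jpg"]  # arbitrary order, like os.listdir
print(labels_for_files(listing, all_labels))
# [('4.jpg', 4), ('0.jpg', 3), ('2.jpg', 6)]
```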

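When this is done, each of the two folders contains a dataset.csv file.

Step 3: override the Dataset class. It is the base class PyTorch uses for loading datasets, and its source code is shown below. We need to subclass it in order to load the image dataset prepared above.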

```python
import bisect
import warnings

from torch._utils import _accumulate
from torch import randperm


class Dataset(object):
    r"""An abstract class representing a :class:`Dataset`.

    All datasets that represent a map from keys to data samples should subclass
    it. All subclasses should overwrite :meth:`__getitem__`, supporting fetching a
    data sample for a given key. Subclasses could also optionally overwrite
    :meth:`__len__`, which is expected to return the size of the dataset by many
    :class:`~torch.utils.data.Sampler` implementations and the default options
    of :class:`~torch.utils.data.DataLoader`.

    .. note::
      :class:`~torch.utils.data.DataLoader` by default constructs a index
      sampler that yields integral indices. To make it work with a map-style
      dataset with non-integral indices/keys, a custom sampler must be provided.
    """

    def __getitem__(self, index):
        raise NotImplementedError

    def __add__(self, other):
        return ConcatDataset([self, other])

    # No `def __len__(self)` default?
    # See NOTE [ Lack of Default `__len__` in Python Abstract Base Classes ]
    # in pytorch/torch/utils/data/sampler.py
```

The overridden version is shown below; the custom class is named FaceDataset:

```python
import os

import cv2
import numpy as np
import pandas as pd
import torch
import torch.utils.data as data


class FaceDataset(data.Dataset):
    # Initialization
    def __init__(self, root):
        super(FaceDataset, self).__init__()
        self.root = root
        # dataset.csv has two columns: image file name and label
        csv_path = os.path.join(root, 'dataset.csv')
        df_path = pd.read_csv(csv_path, header=None, usecols=[0])
        df_label = pd.read_csv(csv_path, header=None, usecols=[1])
        self.path = np.array(df_path)[:, 0]
        self.label = np.array(df_label)[:, 0]

    # Read one image; item is the index
    def __getitem__(self, item):
        # The image must be a tensor for training; the label may stay a NumPy value
        face = cv2.imread(os.path.join(self.root, self.path[item]))
        # Convert to a single-channel grayscale image
        face_gray = cv2.cvtColor(face, cv2.COLOR_BGR2GRAY)
        # Histogram equalization
        face_hist = cv2.equalizeHist(face_gray)
        # Pixel normalization. The image read is 48x48, while nn.Conv2d expects
        # input of shape (batch_size, channels, height, width). The image has one
        # channel, so reshape 48x48 to 1x48x48 and scale the values to [0, 1].
        face_normalized = face_hist.reshape(1, 48, 48) / 255.0
        face_tensor = torch.from_numpy(face_normalized)
        face_tensor = face_tensor.type('torch.FloatTensor')
        label = self.label[item]
        return face_tensor, label

    # Number of samples in the dataset
    def __len__(self):
        return self.path.shape[0]
```

This completes the dataset loading. Next, we use this class to feed data to the model for training.
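For readers curious about what the preprocessing inside `__getitem__` does to the pixel values, here is a simplified pure-Python sketch of histogram equalization followed by scaling to [0, 1]. It uses the classic cumulative-histogram formula and is only an approximation of the idea; `cv2.equalizeHist` may differ in rounding details. The function names and the tiny "image" are made up for illustration.

```python
def equalize_hist(pixels, levels=256):
    """Map gray levels through the normalized cumulative histogram (CDF)."""
    if not pixels:
        return []
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)  # CDF value at the darkest level present
    total = len(pixels)
    if total == cdf_min:                     # constant image: nothing to stretch
        return list(pixels)
    scale = (levels - 1) / (total - cdf_min)
    lut = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [lut[p] for p in pixels]


def normalize(pixels):
    """Scale 8-bit intensities to the range [0, 1]."""
    return [p / 255.0 for p in pixels]


low_contrast = [100, 100, 101, 102, 102, 103]  # hypothetical flat gray patch
equalized = equalize_hist(low_contrast)
print(equalized)  # [0, 0, 64, 191, 191, 255]
print(min(normalize(equalized)), max(normalize(equalized)))  # 0.0 1.0
```

The narrow range 100 to 103 is stretched across the full 0 to 255 range, which is why equalization helps with faces photographed under uneven lighting.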

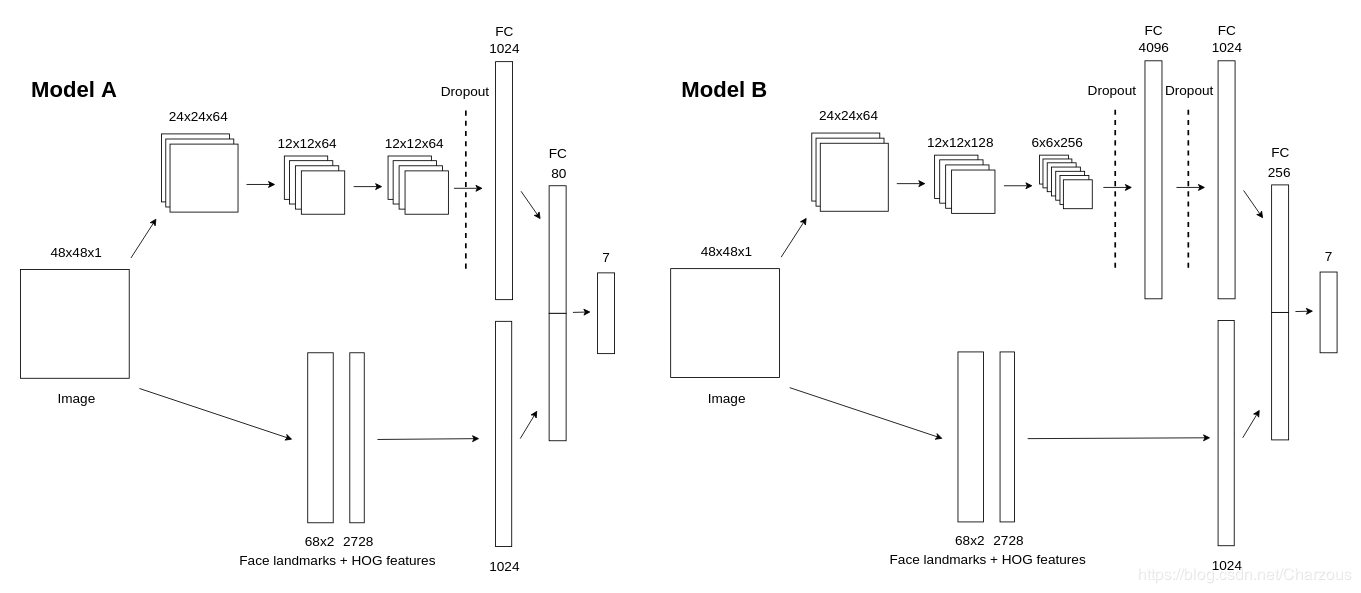

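This is the model structure from an open-source facial expression recognition project on GitHub. We build the network following model B and use RReLU (randomized rectified linear unit) as the activation function. The convolutional neural network is defined as follows: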

```python
import torch.nn as nn


class FaceCNN(nn.Module):
    # Define the network structure
    def __init__(self):
        super(FaceCNN, self).__init__()

        # First convolution + pooling block
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=64, kernel_size=3, stride=1, padding=1),  # convolution
            nn.BatchNorm2d(num_features=64),  # batch normalization
            nn.RReLU(inplace=True),  # activation
            nn.MaxPool2d(kernel_size=2, stride=2),  # max pooling, output: (batch_size, 64, 24, 24)
        )

        # Second convolution + pooling block
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(num_features=128),
            nn.RReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),  # output: (batch_size, 128, 12, 12)
        )

        # Third convolution + pooling block
        self.conv3 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(num_features=256),
            nn.RReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),  # output: (batch_size, 256, 6, 6)
        )

        # Parameter initialization (gaussian_weights_init is defined in the complete code)
        self.conv1.apply(gaussian_weights_init)
        self.conv2.apply(gaussian_weights_init)
        self.conv3.apply(gaussian_weights_init)

        # Fully connected layers
        self.fc = nn.Sequential(
            nn.Dropout(p=0.2),
            nn.Linear(in_features=256 * 6 * 6, out_features=4096),
            nn.RReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(in_features=4096, out_features=1024),
            nn.RReLU(inplace=True),
            nn.Linear(in_features=1024, out_features=256),
            nn.RReLU(inplace=True),
            nn.Linear(in_features=256, out_features=7),
        )

    # Forward pass
    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        # Flatten to (batch_size, 256 * 6 * 6)
        x = x.view(x.shape[0], -1)
        y = self.fc(x)
        return y
```

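Parameter notes:

in_channels is the number of input channels; out_channels is the number of output channels (that is, the number of convolution kernels); kernel_size is the kernel size; stride is the step size; padding is the number of rows and columns of zeros added symmetrically on each side.

First convolution block: input (batch_size, 1, 48, 48), output (batch_size, 64, 24, 24)

Second convolution block: input (batch_size, 64, 24, 24), output (batch_size, 128, 12, 12)

Third convolution block: input (batch_size, 128, 12, 12), output (batch_size, 256, 6, 6)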
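These shapes can be checked by hand with the standard output-size formula for convolution and pooling, `out = floor((in + 2 * padding - kernel) / stride) + 1`. The sketch below applies it to the three blocks in plain Python; the helper names `conv_out` and `pool_out` are hypothetical.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel, stride):
    """Spatial output size of a pooling layer without padding."""
    return (size - kernel) // stride + 1


size = 48                   # input images are 48x48
channels = [64, 128, 256]   # out_channels of the three blocks
shapes = []
for out_channels in channels:
    size = conv_out(size, kernel=3, stride=1, padding=1)  # 3x3 conv with padding 1 keeps the size
    size = pool_out(size, kernel=2, stride=2)             # 2x2 max pooling halves it
    shapes.append((out_channels, size, size))

print(shapes)  # [(64, 24, 24), (128, 12, 12), (256, 6, 6)]
flat_features = channels[-1] * size * size
print(flat_features)  # 9216, the in_features of the first Linear layer (256 * 6 * 6)
```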

IV. Model Training

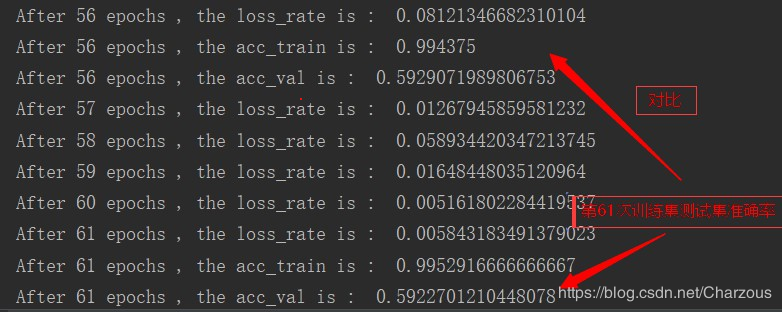

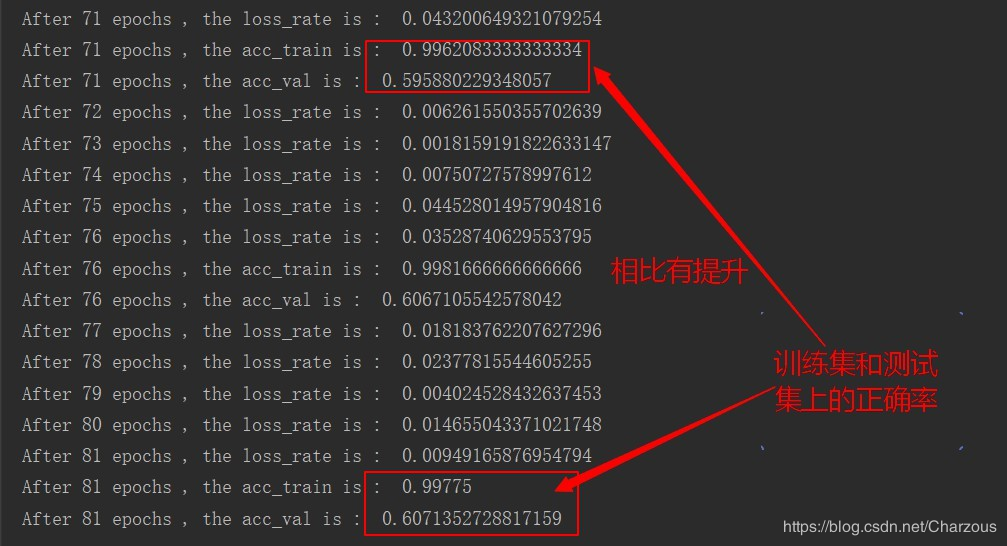

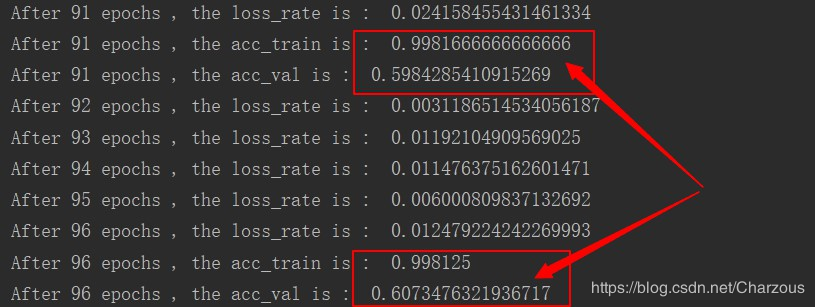

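The loss function is cross-entropy and the optimizer is stochastic gradient descent (SGD), where weight_decay is the regularization coefficient. The loss is printed after every epoch and the accuracy every 5 epochs.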
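As a rough illustration of what the cross-entropy loss measures for a single sample, the sketch below computes `-log(softmax(logits)[target])` in plain Python. The logits are invented numbers, and `cross_entropy` here is a hypothetical helper, not the PyTorch implementation; `nn.CrossEntropyLoss` additionally averages this quantity over the batch by default.

```python
import math


def cross_entropy(logits, target):
    """Negative log-probability of the target class under softmax(logits)."""
    m = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# An untrained 7-class model that scores every class equally.
uniform = cross_entropy([0.0] * 7, target=3)
print(round(uniform, 4))  # 1.9459, which is ln(7)

# A model that is confident and correct has a loss close to zero.
confident = cross_entropy([0.0, 0.0, 0.0, 10.0, 0.0, 0.0, 0.0], target=3)
print(confident < 0.001)  # True
```

A first-epoch loss near ln(7) ≈ 1.95 is therefore what one would expect before the network has learned anything.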

```python
import torch.nn as nn
import torch.optim as optim
import torch.utils.data as data


# FaceCNN and validate are defined in the complete code
def train(train_dataset, val_dataset, batch_size, epochs, learning_rate, wt_decay):
    # Load the data and split it into batches
    train_loader = data.DataLoader(train_dataset, batch_size)
    # Build the model
    model = FaceCNN()
    # Loss function
    loss_function = nn.CrossEntropyLoss()
    # Optimizer
    optimizer = optim.SGD(model.parameters(), lr=learning_rate, weight_decay=wt_decay)
    # Train epoch by epoch
    for epoch in range(epochs):
        # Holds the loss of the most recent batch
        loss_rate = 0
        # scheduler.step()  # learning-rate decay
        model.train()  # training mode
        for images, labels in train_loader:
            # Reset the gradients
            optimizer.zero_grad()
            # Forward pass
            output = model(images)
            # Compute the loss
            loss_rate = loss_function(output, labels)
            # Backpropagate the loss
            loss_rate.backward()
            # Update the parameters
            optimizer.step()

        # Print the loss of the last batch in each epoch
        print('After {} epochs , the loss_rate is : '.format(epoch + 1), loss_rate.item())
        if epoch % 5 == 0:
            model.eval()  # evaluation mode
            acc_train = validate(model, train_dataset, batch_size)
            acc_val = validate(model, val_dataset, batch_size)
            print('After {} epochs , the acc_train is : '.format(epoch + 1), acc_train)
            print('After {} epochs , the acc_val is : '.format(epoch + 1), acc_val)

    return model
```
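The training function leaves a learning-rate decay step commented out. As a sketch of what a step schedule would do if it were enabled, the plain-Python function below multiplies the learning rate by `gamma` every `step_size` epochs. The values `step_size=10` and `gamma=0.8` are taken from the commented-out scheduler line in the complete code and `0.1` is the learning rate used in `main()`; the function `step_lr` itself is a hypothetical stand-in, not the PyTorch scheduler.

```python
def step_lr(initial_lr, epoch, step_size=10, gamma=0.8):
    """Learning rate after `epoch` completed epochs under a step decay schedule."""
    return initial_lr * gamma ** (epoch // step_size)


for epoch in (0, 9, 10, 25, 99):
    print(epoch, round(step_lr(0.1, epoch), 5))
```

With these values the rate stays at 0.1 for the first ten epochs, drops to 0.08 at epoch 10, and has shrunk to roughly 0.013 by the last of 100 epochs.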

V. Complete Code

```python
"""
CNN_face.py: facial expression recognition with a convolutional neural network (PyTorch)
"""
import os

import torch
import torch.utils.data as data
import torch.nn as nn
import torch.optim as optim
import numpy as np
import pandas as pd
import cv2


# Parameter initialization
def gaussian_weights_init(m):
    classname = m.__class__.__name__
    # str.find returns -1 when the substring is absent,
    # so != -1 means the class name contains 'Conv'
    if classname.find('Conv') != -1:
        m.weight.data.normal_(0.0, 0.04)


# Accuracy of the model on a dataset
def validate(model, dataset, batch_size):
    val_loader = data.DataLoader(dataset, batch_size)
    result, num = 0.0, 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for images, labels in val_loader:
            pred = model(images)
            pred = np.argmax(pred.numpy(), axis=1)
            labels = labels.numpy()
            result += np.sum((pred == labels))
            num += len(images)
    acc = result / num
    return acc


class FaceDataset(data.Dataset):
    # Initialization
    def __init__(self, root):
        super(FaceDataset, self).__init__()
        self.root = root
        # dataset.csv has two columns: image file name and label
        csv_path = os.path.join(root, 'dataset.csv')
        df_path = pd.read_csv(csv_path, header=None, usecols=[0])
        df_label = pd.read_csv(csv_path, header=None, usecols=[1])
        self.path = np.array(df_path)[:, 0]
        self.label = np.array(df_label)[:, 0]

    # Read one image; item is the index
    def __getitem__(self, item):
        # The image must be a tensor for training; the label may stay a NumPy value
        face = cv2.imread(os.path.join(self.root, self.path[item]))
        # Convert to a single-channel grayscale image
        face_gray = cv2.cvtColor(face, cv2.COLOR_BGR2GRAY)
        # Histogram equalization
        face_hist = cv2.equalizeHist(face_gray)
        # Pixel normalization. The image read is 48x48, while nn.Conv2d expects
        # input of shape (batch_size, channels, height, width). The image has one
        # channel, so reshape 48x48 to 1x48x48 and scale the values to [0, 1].
        face_normalized = face_hist.reshape(1, 48, 48) / 255.0
        face_tensor = torch.from_numpy(face_normalized)
        face_tensor = face_tensor.type('torch.FloatTensor')
        label = self.label[item]
        return face_tensor, label

    # Number of samples in the dataset
    def __len__(self):
        return self.path.shape[0]


class FaceCNN(nn.Module):
    # Define the network structure
    def __init__(self):
        super(FaceCNN, self).__init__()

        # First convolution + pooling block
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=64, kernel_size=3, stride=1, padding=1),  # convolution
            nn.BatchNorm2d(num_features=64),  # batch normalization
            nn.RReLU(inplace=True),  # activation
            nn.MaxPool2d(kernel_size=2, stride=2),  # max pooling
        )

        # Second convolution + pooling block
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(num_features=128),
            nn.RReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        # Third convolution + pooling block
        self.conv3 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(num_features=256),
            nn.RReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )

        # Parameter initialization
        self.conv1.apply(gaussian_weights_init)
        self.conv2.apply(gaussian_weights_init)
        self.conv3.apply(gaussian_weights_init)

        # Fully connected layers
        self.fc = nn.Sequential(
            nn.Dropout(p=0.2),
            nn.Linear(in_features=256 * 6 * 6, out_features=4096),
            nn.RReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(in_features=4096, out_features=1024),
            nn.RReLU(inplace=True),
            nn.Linear(in_features=1024, out_features=256),
            nn.RReLU(inplace=True),
            nn.Linear(in_features=256, out_features=7),
        )

    # Forward pass
    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        # Flatten to (batch_size, 256 * 6 * 6)
        x = x.view(x.shape[0], -1)
        y = self.fc(x)
        return y


def train(train_dataset, val_dataset, batch_size, epochs, learning_rate, wt_decay):
    # Load the data and split it into batches
    train_loader = data.DataLoader(train_dataset, batch_size)
    # Build the model
    model = FaceCNN()
    # Loss function
    loss_function = nn.CrossEntropyLoss()
    # Optimizer
    optimizer = optim.SGD(model.parameters(), lr=learning_rate, weight_decay=wt_decay)
    # Learning-rate decay
    # scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.8)
    # Train epoch by epoch
    for epoch in range(epochs):
        # Holds the loss of the most recent batch
        loss_rate = 0
        # scheduler.step()  # learning-rate decay
        model.train()  # training mode
        for images, labels in train_loader:
            # Reset the gradients
            optimizer.zero_grad()
            # Forward pass
            output = model(images)
            # Compute the loss
            loss_rate = loss_function(output, labels)
            # Backpropagate the loss
            loss_rate.backward()
            # Update the parameters
            optimizer.step()

        # Print the loss of the last batch in each epoch
        print('After {} epochs , the loss_rate is : '.format(epoch + 1), loss_rate.item())
        if epoch % 5 == 0:
            model.eval()  # evaluation mode
            acc_train = validate(model, train_dataset, batch_size)
            acc_val = validate(model, val_dataset, batch_size)
            print('After {} epochs , the acc_train is : '.format(epoch + 1), acc_train)
            print('After {} epochs , the acc_val is : '.format(epoch + 1), acc_val)

    return model


def main():
    # Instantiate the datasets (raw strings keep the Windows backslashes literal)
    train_dataset = FaceDataset(root=r'D:\PyCharm_Project\deep learning\model\cnn_train')
    val_dataset = FaceDataset(root=r'D:\PyCharm_Project\deep learning\model\cnn_val')
    # The hyperparameters can be chosen freely
    model = train(train_dataset, val_dataset, batch_size=128, epochs=100, learning_rate=0.1, wt_decay=0)
    # Save the model
    torch.save(model, 'model_net.pkl')


if __name__ == '__main__':
    main()
```
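The accuracy reported by `validate` is simply the fraction of samples whose highest-scoring class equals the label. A tiny pure-Python sketch of the same computation follows; the scores and labels are invented, only three classes are used to keep it short, and the helper names are hypothetical.

```python
def argmax(values):
    """Index of the largest value (the predicted class)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(batch_scores, batch_labels):
    """Fraction of samples whose predicted class matches the label."""
    correct = sum(argmax(s) == y for s, y in zip(batch_scores, batch_labels))
    return correct / len(batch_labels)


scores = [
    [0.1, 2.0, -1.0],  # predicted class 1
    [1.5, 0.2, 0.3],   # predicted class 0
    [0.0, 0.1, 0.9],   # predicted class 2
    [0.3, 0.2, 0.1],   # predicted class 0
]
labels = [1, 0, 1, 0]
print(accuracy(scores, labels))  # 0.75
```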

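The code above takes a long time to run. For now I can only run it on a CPU, which is slow and short on compute: training 100 epochs took me about a day. The training time depends on the machine, and running on a GPU would be much faster.

Here are excerpts from the training results:

The results show that by around epoch 60 the model reaches over 99% accuracy on the training set but only about 60% on the validation set, which is a clear case of overfitting. The parameters can be tuned further, and regularization or similar methods can be used to reduce overfitting. In addition, the last few dozen epochs bring very little improvement, and the cause still needs to be found.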
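The run above used `wt_decay=0`, that is, no L2 regularization. As a minimal sketch of how a non-zero `weight_decay` would change a plain SGD update (no momentum), the weight-decay term adds `weight_decay * w` to each gradient, pulling every weight toward zero on each step. The numbers are illustrative and `sgd_step` is a hypothetical helper, not the PyTorch optimizer.

```python
def sgd_step(weights, grads, lr, weight_decay=0.0):
    """One plain SGD update with an L2 penalty folded into the gradient."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


weights = [1.0, -2.0]
grads = [0.0, 0.0]  # zero gradient isolates the effect of weight decay

plain = sgd_step(weights, grads, lr=0.1)
decayed = sgd_step(weights, grads, lr=0.1, weight_decay=0.01)
print(plain)    # [1.0, -2.0]: nothing changes without weight decay
print(decayed)  # approximately [0.999, -1.998]: each weight shrinks slightly
```

Whether a particular value of `weight_decay` actually closes the gap between training and validation accuracy here would have to be checked experimentally.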

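I am still learning this process. The above is what I have achieved so far, and I hope to optimize the model further and improve its accuracy.

Summary:

I have been studying machine learning and deep learning for a while, mostly the theory taught by Professor Hung-yi Lee, without having actually trained a model myself. This post records my first learning project; it has many shortcomings, and I need to keep practicing. The problems I currently face are: 1. I can follow the basic theory, but I have not yet mastered formula derivation and model selection. 2. I lack hands-on experience in training a model (code implementation), so I need to work through practical projects next.

References:

Machine Learning, Hung-yi Lee (2019), video lectures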

//ntumlta2019.github.io/ml-web-hw3/

//www.cnblogs.com/HL-space/p/10888556.html

//github.com/amineHorseman/facial-expression-recognition-using-cnn

————————————————

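This is my CSDN blog link; feel free to get in touch:

Copyright notice: This is an original article by CSDN blogger "Charzous", licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.

Original link: //blog.csdn.net/Charzous/article/details/107452464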