基於模板匹配的車牌識別

基於模板匹配的車牌識別

1、項目準備

# 導入所需模塊

import cv2

from matplotlib import pyplot as plt

import os

import numpy as np

# 定義必要函數

# 顯示圖片

def cv_show(name,img):

cv2.imshow(name,img)

cv2.waitKey()

cv2.destroyAllWindows()

# plt顯示彩色圖片

def plt_show0(img):

b,g,r = cv2.split(img)

img = cv2.merge([r, g, b])

plt.imshow(img)

plt.show()

# plt顯示灰度圖片

def plt_show(img):

plt.imshow(img,cmap='gray')

plt.show()

# 圖像去噪灰度處理

def gray_guss(image):

image = cv2.GaussianBlur(image, (3, 3), 0)

gray_image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

return gray_image

# 讀取待檢測圖片

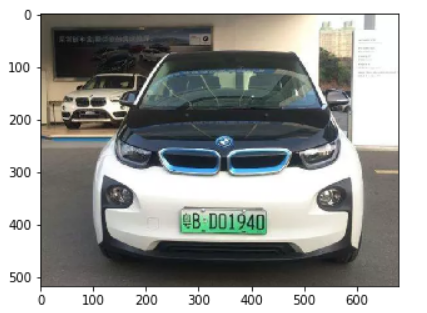

origin_image = cv2.imread('./image/test2.png')

plt_show0(origin_image)

2、提取車牌位置圖片

# 提取車牌部分圖片

def get_carLicense_img(image):

gray_image = gray_guss(image)

Sobel_x = cv2.Sobel(gray_image, cv2.CV_16S, 1, 0)

absX = cv2.convertScaleAbs(Sobel_x)

image = absX

ret, image = cv2.threshold(image, 0, 255, cv2.THRESH_OTSU)

kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (17, 5))

image = cv2.morphologyEx(image, cv2.MORPH_CLOSE, kernelX,iterations = 3)

kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (20, 1))

kernelY = cv2.getStructuringElement(cv2.MORPH_RECT, (1, 19))

image = cv2.dilate(image, kernelX)

image = cv2.erode(image, kernelX)

image = cv2.erode(image, kernelY)

image = cv2.dilate(image, kernelY)

image = cv2.medianBlur(image, 15)

contours, hierarchy = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

for item in contours:

rect = cv2.boundingRect(item)

x = rect[0]

y = rect[1]

weight = rect[2]

height = rect[3]

if (weight > (height * 3)) and (weight < (height * 4)):

image = origin_image[y:y + height, x:x + weight]

return image

image = origin_image.copy()

carLicense_image = get_carLicense_img(image)

plt_show0(carLicense_image)

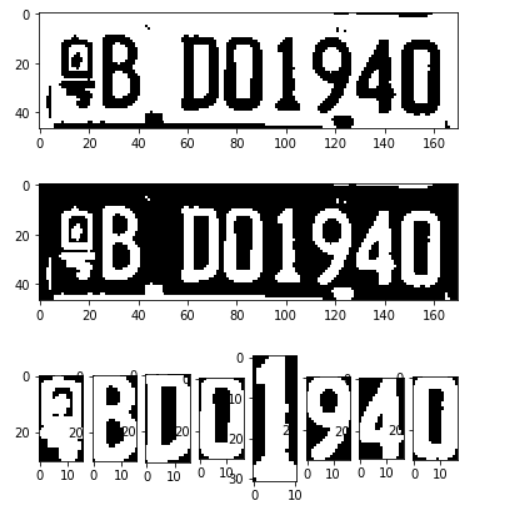

3、車牌字符分割

def carLicense_spilte(image):

gray_image = gray_guss(image)

ret, image = cv2.threshold(gray_image, 0, 255, cv2.THRESH_OTSU)

plt_show(image)

# 計算二值圖像黑白點的個數,處理綠牌照問題,讓車牌號碼始終為白色

area_white = 0

area_black = 0

height, width = image.shape

for i in range(height):

for j in range(width):

if image[i, j] == 255:

area_white += 1

else:

area_black += 1

if area_white>area_black:

ret, image = cv2.threshold(image, 0, 255, cv2.THRESH_OTSU | cv2.THRESH_BINARY_INV)

plt_show(image)

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (2, 2))

image = cv2.dilate(image, kernel)

contours, hierarchy = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

words = []

word_images = []

for item in contours:

word = []

rect = cv2.boundingRect(item)

x = rect[0]

y = rect[1]

weight = rect[2]

height = rect[3]

word.append(x)

word.append(y)

word.append(weight)

word.append(height)

words.append(word)

words = sorted(words,key=lambda s:s[0],reverse=False)

i = 0

for word in words:

if (word[3] > (word[2] * 1.8)) and (word[3] < (word[2] * 3.5)):

i = i+1

splite_image = image[word[1]:word[1] + word[3], word[0]:word[0] + word[2]]

word_images.append(splite_image)

return word_images

image = carLicense_image.copy()

word_images = carLicense_spilte(image)

# 綠牌要改為8,藍牌為7,顯示所用

for i,j in enumerate(word_images):

plt.subplot(1,8,i+1)

plt.imshow(word_images[i],cmap='gray')

plt.show()

4、模板匹配

# 準備模板

template = ['0','1','2','3','4','5','6','7','8','9',

'A','B','C','D','E','F','G','H','J','K','L','M','N','P','Q','R','S','T','U','V','W','X','Y','Z',

'藏','川','鄂','甘','贛','貴','桂','黑','滬','吉','冀','津','晉','京','遼','魯','蒙','閩','寧',

'青','瓊','陝','蘇','皖','湘','新','渝','豫','粵','雲','浙']

# 讀取一個文件夾下的所有圖片,輸入參數是文件名,返迴文件地址列表

def read_directory(directory_name):

referImg_list = []

for filename in os.listdir(directory_name):

referImg_list.append(directory_name + "/" + filename)

return referImg_list

# 中文模板列表(只匹配車牌的第一個字符)

def get_chinese_words_list():

chinese_words_list = []

for i in range(34,64):

c_word = read_directory('./refer1/'+ template[i])

chinese_words_list.append(c_word)

return chinese_words_list

chinese_words_list = get_chinese_words_list()

# 英文模板列表(只匹配車牌的第二個字符)

def get_eng_words_list():

eng_words_list = []

for i in range(10,34):

e_word = read_directory('./refer1/'+ template[i])

eng_words_list.append(e_word)

return eng_words_list

eng_words_list = get_eng_words_list()

# 英文數字模板列表(匹配車牌後面的字符)

def get_eng_num_words_list():

eng_num_words_list = []

for i in range(0,34):

word = read_directory('./refer1/'+ template[i])

eng_num_words_list.append(word)

return eng_num_words_list

eng_num_words_list = get_eng_num_words_list()

# 讀取一個模板地址與圖片進行匹配,返回得分

def template_score(template,image):

template_img=cv2.imdecode(np.fromfile(template,dtype=np.uint8),1)

template_img = cv2.cvtColor(template_img, cv2.COLOR_RGB2GRAY)

ret, template_img = cv2.threshold(template_img, 0, 255, cv2.THRESH_OTSU)

# height, width = template_img.shape

# image_ = image.copy()

# image_ = cv2.resize(image_, (width, height))

image_ = image.copy()

height, width = image_.shape

template_img = cv2.resize(template_img, (width, height))

result = cv2.matchTemplate(image_, template_img, cv2.TM_CCOEFF)

return result[0][0]

def template_matching(word_images):

results = []

for index,word_image in enumerate(word_images):

if index==0:

best_score = []

for chinese_words in chinese_words_list:

score = []

for chinese_word in chinese_words:

result = template_score(chinese_word,word_image)

score.append(result)

best_score.append(max(score))

i = best_score.index(max(best_score))

# print(template[34+i])

r = template[34+i]

results.append(r)

continue

if index==1:

best_score = []

for eng_word_list in eng_words_list:

score = []

for eng_word in eng_word_list:

result = template_score(eng_word,word_image)

score.append(result)

best_score.append(max(score))

i = best_score.index(max(best_score))

# print(template[10+i])

r = template[10+i]

results.append(r)

continue

else:

best_score = []

for eng_num_word_list in eng_num_words_list:

score = []

for eng_num_word in eng_num_word_list:

result = template_score(eng_num_word,word_image)

score.append(result)

best_score.append(max(score))

i = best_score.index(max(best_score))

# print(template[i])

r = template[i]

results.append(r)

continue

return results

word_images_ = word_images.copy()

result = template_matching(word_images_)

result

# ['粵', 'B', 'D', '0', '1', '9', '4', '0']

5、結果渲染

height,weight = origin_image.shape[0:2]

print(height)

print(weight)

# 517

# 680

# 中文無法顯示(百度可解決)

image = origin_image.copy()

cv2.rectangle(image, (int(0.2*weight), int(0.75*height)), (int(weight*0.8), int(height*0.95)), (0, 255, 0), 5)

cv2.putText(image, "".join(result), (int(0.2*weight)+30, int(0.75*height)+80), cv2.FONT_HERSHEY_COMPLEX, 2, (0, 255, 0), 10)

plt_show0(image)

6、總結

- 項目的容錯性還不夠高,圖片比較模糊的時候識別不到車牌,還待提高

- 識別速度不夠快

- 模板匹配算法太依賴模板的質量

- 使用大量的模板可以提高精度,但是速度下降嚴重

- 還有很多待優化的地方,比如一張圖中有多個車牌等

- 我認為整個項目最難的地方是車牌位置的提取

- 項目還是很不錯的一個對OpenCV圖像處理的課題項目

- 自己獨立完成,收穫挺大的

- 作者:曾強

- 日期:2020-4-13

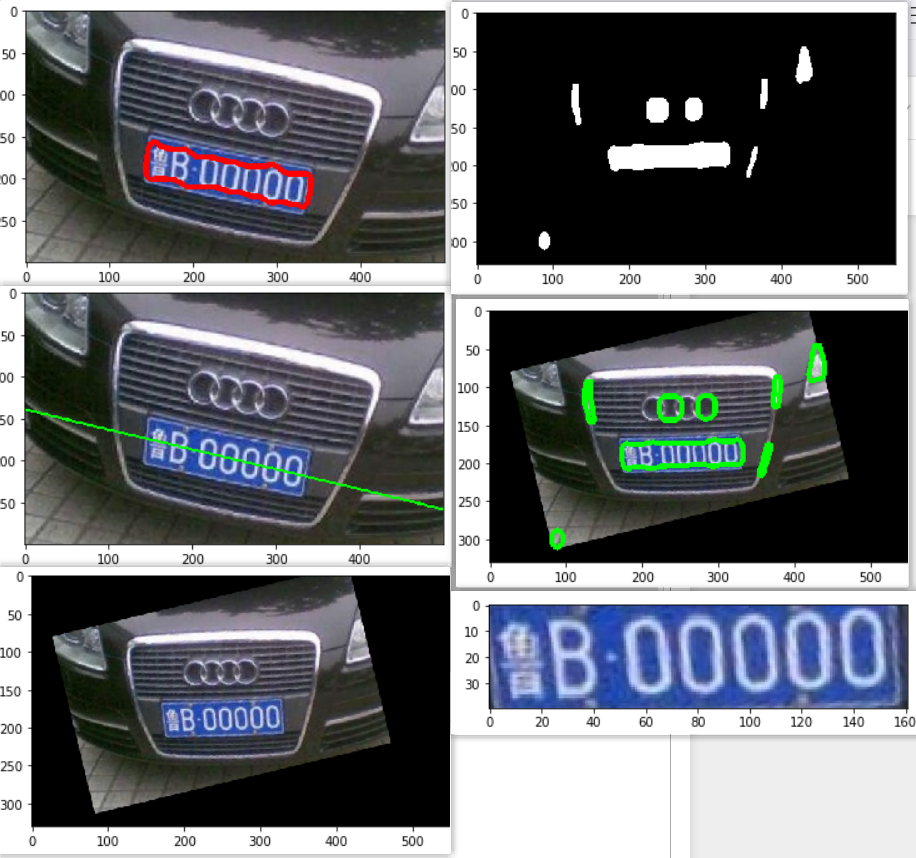

補充-傾斜車牌提取

# 導入所需模塊

import cv2

from matplotlib import pyplot as plt

# 顯示圖片

def cv_show(name,img):

cv2.imshow(name,img)

cv2.waitKey()

cv2.destroyAllWindows()

# plt顯示彩色圖片

def plt_show0(img):

b,g,r = cv2.split(img)

img = cv2.merge([r, g, b])

plt.imshow(img)

plt.show()

# plt顯示灰度圖片

def plt_show(img):

plt.imshow(img,cmap='gray')

plt.show()

# 加載圖片

rawImage = cv2.imread("./image/test3.png")

plt_show0(rawImage)

# 高斯去噪

image = cv2.GaussianBlur(rawImage, (3, 3), 0)

# 預覽效果

plt_show0(image)

# 灰度處理

gray_image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

plt_show(gray_image)

# sobel算子邊緣檢測(做了一個y方向的檢測)

Sobel_x = cv2.Sobel(gray_image, cv2.CV_16S, 1, 0)

# Sobel_y = cv2.Sobel(image, cv2.CV_16S, 0, 1)

absX = cv2.convertScaleAbs(Sobel_x) # 轉回uint8

# absY = cv2.convertScaleAbs(Sobel_y)

# dst = cv2.addWeighted(absX, 0.5, absY, 0.5, 0)

image = absX

plt_show(image)

# 自適應閾值處理

ret, image = cv2.threshold(image, 0, 255, cv2.THRESH_OTSU)

plt_show(image)

# 閉運算,是白色部分練成整體

kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (14, 5))

print(kernelX)

image = cv2.morphologyEx(image, cv2.MORPH_CLOSE, kernelX,iterations = 1)

plt_show(image)

# 去除一些小的白點

kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (20, 1))

kernelY = cv2.getStructuringElement(cv2.MORPH_RECT, (1, 19))

# 膨脹,腐蝕

image = cv2.dilate(image, kernelX)

image = cv2.erode(image, kernelX)

# 腐蝕,膨脹

image = cv2.erode(image, kernelY)

image = cv2.dilate(image, kernelY)

plt_show(image)

# 中值濾波去除噪點

image = cv2.medianBlur(image, 15)

plt_show(image)

# 輪廓檢測

# cv2.RETR_EXTERNAL表示只檢測外輪廓

# cv2.CHAIN_APPROX_SIMPLE壓縮水平方向,垂直方向,對角線方向的元素,只保留該方向的終點坐標,例如一個矩形輪廓只需4個點來保存輪廓信息

contours, hierarchy = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# 繪製輪廓

image1 = rawImage.copy()

cv2.drawContours(image1, contours, -1, (0, 255, 0), 5)

plt_show0(image1)

# 篩選出車牌位置的輪廓

# 這裡我只做了一個車牌的長寬比在3:1到4:1之間這樣一個判斷

for index,item in enumerate(contours):

# cv2.boundingRect用一個最小的矩形,把找到的形狀包起來

rect = cv2.boundingRect(item)

x = rect[0]

y = rect[1]

weight = rect[2]

height = rect[3]

# 440mm×140mm

if (weight > (height * 2.5)) and (weight < (height * 4)):

print(index)

image2 = rawImage.copy()

cv2.drawContours(image2, contours, 1, (0, 0, 255), 5)

plt_show0(image2)

直線擬合找斜率

直線擬合fitline //blog.csdn.net/lovetaozibaby/article/details/99482973

參數:

-

InputArray Points: 待擬合的直線的集合,必須是矩陣形式;

-

distType: 距離類型。fitline為距離最小化函數,擬合直線時,要使輸入點到擬合直線的距離和最小化。這裡的** 距離**的類型有以下幾種:

– cv2.DIST_USER : User defined distance

– cv2.DIST_L1: distance = |x1-x2| + |y1-y2|

– cv2.DIST_L2: 歐式距離,此時與最小二乘法相同

– cv2.DIST_C:distance = max(|x1-x2|,|y1-y2|)

– cv2.DIST_L12:L1-L2 metric: distance = 2(sqrt(1+x*x/2) – 1))

– cv2.DIST_FAIR:distance = c^2(|x|/c-log(1+|x|/c)), c = 1.3998

– cv2.DIST_WELSCH: distance = c2/2(1-exp(-(x/c)2)), c = 2.9846

– cv2.DIST_HUBER:distance = |x|<c ? x^2/2 : c(|x|-c/2), c=1.345 -

param: 距離參數,跟所選的距離類型有關,值可以設置為0。

-

reps, aeps: 第5/6個參數用於表示擬合直線所需要的徑向和角度精度,通常情況下兩個值均被設定為1e-2.

output :

- 對於二維直線,輸出output為4維,前兩維代表擬合出的直線的方向,後兩位代表直線上的一點。(即通常說的點斜式直線)

- 其中(vx, vy) 是直線的方向向量,(x, y) 是直線上的一個點。

- 斜率k = vy / vx

- 截距b = y – k * x

cnt = contours[1]

image3 = rawImage.copy()

h, w = image3.shape[:2]

[vx, vy, x, y] = cv2.fitLine(cnt, cv2.DIST_L2, 0, 0.01, 0.01)

print([vx, vy, x, y])

k = vy/vx

b = y-k*x

print(k,b)

lefty = b

righty = k*w+b

img = cv2.line(image3, (w, righty), (0, lefty), (0, 255, 0), 2)

plt_show0(img)

print((w, righty))

print((0, lefty))

import math

a = math.atan(k)

a

a = math.degrees(a)

a

image4 = rawImage.copy()

# 圖像旋轉

h,w = image1.shape[:2]

print(h,w)

#第一個參數旋轉中心,第二個參數旋轉角度,第三個參數:縮放比例

M = cv2.getRotationMatrix2D((w/2,h/2),a,0.8)

#第三個參數:變換後的圖像大小

dst = cv2.warpAffine(image4,M,(int(w*1.1),int(h*1.1)))

plt_show0(dst)

# 高斯去噪

image = cv2.GaussianBlur(dst, (3, 3), 0)

# 預覽效果

plt_show0(image)

# 灰度處理

gray_image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

plt_show(gray_image)

# sobel算子邊緣檢測(做了一個y方向的檢測)

Sobel_x = cv2.Sobel(gray_image, cv2.CV_16S, 1, 0)

# Sobel_y = cv2.Sobel(image, cv2.CV_16S, 0, 1)

absX = cv2.convertScaleAbs(Sobel_x) # 轉回uint8

# absY = cv2.convertScaleAbs(Sobel_y)

# dst = cv2.addWeighted(absX, 0.5, absY, 0.5, 0)

image = absX

plt_show(image)

# 自適應閾值處理

ret, image = cv2.threshold(image, 0, 255, cv2.THRESH_OTSU)

plt_show(image)

# 閉運算,是白色部分練成整體

kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (14, 5))

print(kernelX)

image = cv2.morphologyEx(image, cv2.MORPH_CLOSE, kernelX,iterations = 1)

plt_show(image)

# 去除一些小的白點

kernelX = cv2.getStructuringElement(cv2.MORPH_RECT, (20, 1))

kernelY = cv2.getStructuringElement(cv2.MORPH_RECT, (1, 19))

# 膨脹,腐蝕

image = cv2.dilate(image, kernelX)

image = cv2.erode(image, kernelX)

# 腐蝕,膨脹

image = cv2.erode(image, kernelY)

image = cv2.dilate(image, kernelY)

plt_show(image)

# 中值濾波去除噪點

image = cv2.medianBlur(image, 15)

plt_show(image)

# 輪廓檢測

# cv2.RETR_EXTERNAL表示只檢測外輪廓

# cv2.CHAIN_APPROX_SIMPLE壓縮水平方向,垂直方向,對角線方向的元素,只保留該方向的終點坐標,例如一個矩形輪廓只需4個點來保存輪廓信息

contours, hierarchy = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# 繪製輪廓

image1 = dst.copy()

cv2.drawContours(image1, contours, -1, (0, 255, 0), 5)

plt_show0(image1)

c = None

i = None

# 篩選出車牌位置的輪廓

# 這裡我只做了一個車牌的長寬比在3:1到4:1之間這樣一個判斷

for item in contours:

# cv2.boundingRect用一個最小的矩形,把找到的形狀包起來

rect = cv2.boundingRect(item)

x = rect[0]

y = rect[1]

weight = rect[2]

height = rect[3]

# 440mm×140mm

if (weight > (height * 2.5)) and (weight < (height * 5)):

c=rect

i = item

image = dst[y:y + height, x:x + weight]

# cv_show('image',image)

# 圖像保存

plt_show0(image)

cv2.imwrite('./car_license/test3.png', image)

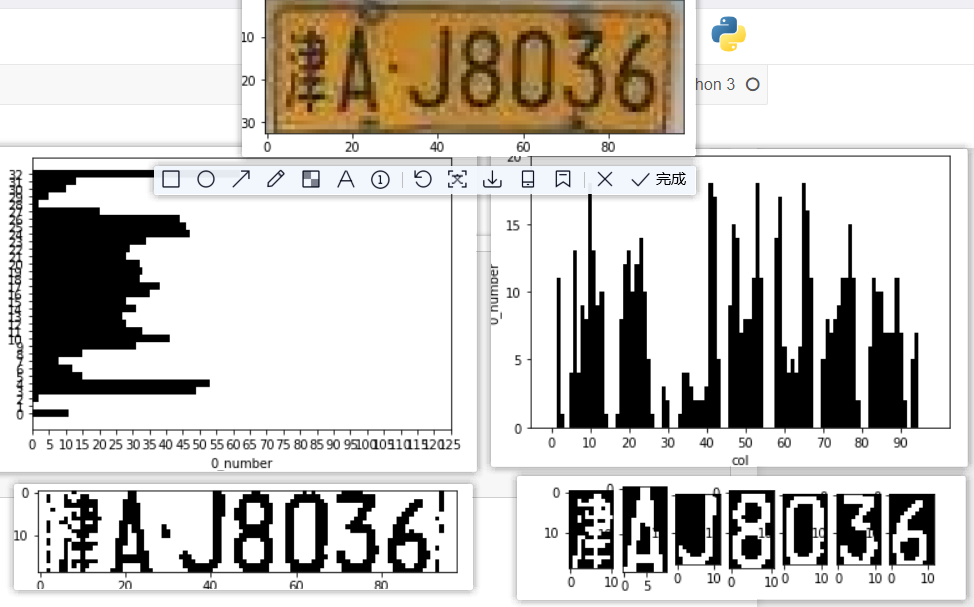

補充-直方圖分割車牌字符

# 導入所需模塊

import cv2

from matplotlib import pyplot as plt

# 顯示圖片

def cv_show(name,img):

cv2.imshow(name,img)

cv2.waitKey()

cv2.destroyAllWindows()

# plt顯示彩色圖片

def plt_show0(img):

b,g,r = cv2.split(img)

img = cv2.merge([r, g, b])

plt.imshow(img)

plt.show()

# plt顯示灰度圖片

def plt_show(img):

plt.imshow(img,cmap='gray')

plt.show()

# 加載圖片

rawImage = cv2.imread("./car_license/test4.png")

plt_show0(rawImage)

# 灰度處理

image = rawImage.copy()

gray_image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

plt_show(gray_image)

字符水平方向的切割

目的:去除車牌邊框和鉚釘的干擾

# 自適應閾值處理(二值化)

ret, image = cv2.threshold(gray_image, 0, 255, cv2.THRESH_OTSU)

plt_show(image)

image.shape # 47行,170列

rows = image.shape[0]

cols = image.shape[1]

print(rows,cols)

33 98

# 二值統計,統計沒每一行的黑值(0)的個數

hd = []

for row in range(rows):

res = 0

for col in range(cols):

if image[row][col] == 0:

res = res+1

hd.append(res)

len(hd)

max(hd)

62

# 畫出柱狀圖

y = [y for y in range(rows)]

x = hd

plt.barh(y,x,color='black',height=1)

# 設置x,y軸標籤

plt.xlabel('0_number')

plt.ylabel('row')

# 設置刻度

plt.xticks([x for x in range(0,130,5)])

plt.yticks([y for y in range(0,rows,1)])

plt.show()

中間較為密集的地方就是車牌有字符的地方,從而很好的去除了牌邊框及鉚釘

從圖中可以明顯看出車牌字符區域的投影值和車牌邊框及鉚釘區域的投影值之間明顯有一個波谷,找到此處波谷,就可以得到車牌的字符區域,去除車牌邊框及鉚釘。

x = range(int(rows/2),2,-1)

x = [*x]

x

[16, 15, 14, 13, 12, 11, 10, 9, 8, 7, 6, 5, 4, 3]

# 定義一個算法,找到波谷,定位車牌字符的行數區域

# 我的思路;對於一個車牌,中間位置肯定是有均勻的黑色點的,所以我將圖片垂直分為兩部分,找波谷

mean = sum(hd[0:int(rows/2)])/(int(rows/2)+1)

mean

region = []

for i in range(int(rows/2),2,-1): # 0,1行肯定是邊框,直接不考慮,直接從第二行開始

if hd[i]<mean:

region.append(i)

break

for i in range(int(rows/2),rows): # 0,1行肯定是邊框,直接不考慮,直接從第二行開始

if hd[i]<mean:

region.append(i)

break

region

[8, 27]

image1 = image[region[0]:region[1],:] # 使用行區間

plt_show(image1)

字符垂直方向的切割

image11 = image1.copy()

image11.shape # 47行,170列

rows = image11.shape[0]

cols = image11.shape[1]

print(rows,cols)

19 98

cols # 170列

98

# 二值統計,統計沒每一列的黑值(0)的個數

hd1 = []

for col in range(cols):

res = 0

for row in range(rows):

if image11[row][col] == 0:

res = res+1

hd1.append(res)

len(hd1)

max(hd1)

18

# 畫出柱狀圖

y = hd1 # 點個數

x = [x for x in range(cols)] # 列數

plt.bar(x,y,color='black',width=1)

# 設置x,y軸標籤

plt.xlabel('col')

plt.ylabel('0_number')

# 設置刻度

plt.xticks([x for x in range(0,cols,10)])

plt.yticks([y for y in range(0,max(hd1)+5,5)])

plt.show()

mean = sum(hd1)/len(hd1)

mean

6.448979591836735

# 簡單的篩選

for i in range(cols):

if hd1[i] < mean/4:

hd1[i] = 0

# 畫出柱狀圖

y = hd1 # 點個數

x = [x for x in range(cols)] # 列數

plt.bar(x,y,color='black',width=1)

# 設置x,y軸標籤

plt.xlabel('col')

plt.ylabel('0_number')

# 設置刻度

plt.xticks([x for x in range(0,cols,10)])

plt.yticks([y for y in range(0,max(hd1)+5,5)])

plt.show()

從直方圖中可以看到很多波谷,這些就是字符分割區域的黑色點的個數等於0,我們就可以通過這些0點進行分割,過濾掉這些不需要的部分部分

# 找所有不為0的區間(列數)

region1 = []

reg = []

for i in range(cols-1):

if hd1[i]==0 and hd1[i+1] != 0:

reg.append(i)

if hd1[i]!=0 and hd1[i+1] == 0:

reg.append(i+2)

if len(reg) == 2:

if (reg[1]-reg[0])>5: # 限定區間長度要大於5(可以更大),過濾掉不需要的點

region1.append(reg)

reg = []

else:

reg = []

region1

[[4, 15], [17, 27], [33, 45], [45, 56], [57, 69], [69, 81], [81, 93]]

# 測試

image2 = image1[:,region1[0][0]:region1[0][1]]

plt_show(image2)

# 為了使字符之間還是存在空格,定義一個2像素白色的區域

import numpy as np

white = []

for i in range(rows*2):

white.append(255)

white = np.array(white)

white = white.reshape(rows,2)

white.shape

(19, 2)

# 遍歷所有區域,保存字符圖片到列表

p = []

for r in region1:

r = image1[:,r[0]:r[1]]

plt_show(r)

p.append(r)

p.append(white)

# 將字符圖片列表拼接為一張圖

image2 = np.hstack(p)

plt_show(image2)

# 將分割好的字符圖片保存到文件夾

print(region)

print(region1)

[8, 27]

[[4, 15], [17, 27], [33, 45], [45, 56], [57, 69], [69, 81], [81, 93]]

plt_show0(rawImage)

v_image = rawImage[region[0]:region[1],:]

plt_show0(v_image)

i = 1

for reg in region1:

h_image = v_image[:,reg[0]:reg[1]]

plt_show0(h_image)

cv2.imwrite('./words/test4_'+str(i)+'.png', h_image)

i = i+1

word_images = []

for i in range(1,8):

word = cv2.imread('./words/test4_'+str(i)+'.png',0)

ret, word = cv2.threshold(word, 0, 255, cv2.THRESH_OTSU | cv2.THRESH_BINARY_INV)

word_images.append(word)

word_images

plt.imshow(word_images[0],cmap='gray')

for i,j in enumerate(word_images):

plt.subplot(1,8,i+1)

plt.imshow(word_images[i],cmap='gray')

plt.show()

詳細源碼(各階段分析,模板圖片,資料等)獲取地址

鏈接://pan.baidu.com/s/16oFDSdD8dMk_rT7t2bLl2A

提取碼:5u8z

視頻講解://www.bilibili.com/video/BV1yg4y187kU/