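Chinese Text Classification with a Transformer (Dataset: Fudan Chinese Corpus)

Chinese Text Classification with TfidfVectorizer (Dataset: Fudan Chinese Corpus)

Unlike the earlier post, some of the code has been refactored here, and to make the whole pipeline clearer the data is preprocessed again from scratch.

By reading this post you can follow the whole workflow of Chinese text classification: data preprocessing, model definition, training, and testing.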

一、熟悉数据

数据的格式是这样子的:

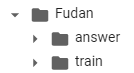

基本目录如下:

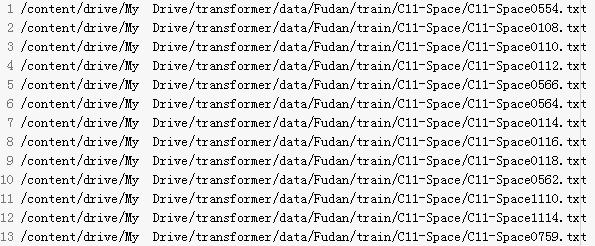

其中train存放的是训练集,answer存放的是测试集,具体看下train中的文件:

下面有20个文件夹,对应着20个类,我们继续看下其中的文件,以C3-Art为例:

每一篇都对应着一个txt文件,编码格式是gb18030.utf8文件夹下的是utf-8编码格式的txt文件。
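Because the raw files are gb18030-encoded, the codec passed to `open` matters. The sketch below is only an illustration: it uses an in-memory byte string instead of a real corpus file, and the sample sentence is made up.

```python
# Hypothetical sample text standing in for the content of one corpus file.
sample = "中文文本分类"

# Bytes as they would be stored on disk in a gb18030-encoded file.
raw_bytes = sample.encode("gb18030")

# Decoding with the matching codec recovers the text.
decoded = raw_bytes.decode("gb18030", errors="ignore")

# Decoding the same bytes as UTF-8 with errors="ignore" raises no exception,
# but the recovered text is no longer the original.
wrong = raw_bytes.decode("utf-8", errors="ignore")

print(decoded == sample)
print(wrong == sample)
```

With `errors="ignore"` a wrong codec fails silently, so it is worth checking a few decoded files by eye before running the full preprocessing.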

Part of the content of C3-Art0001.txt is shown below:

II. Data Preprocessing

The basic preprocessing flow in this post:

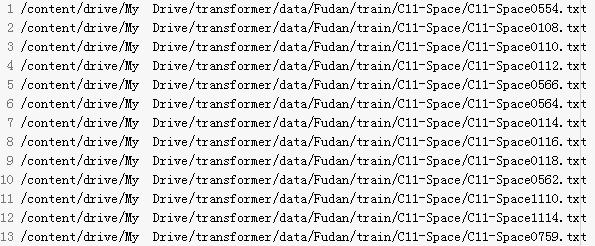

- First write the paths of all training txt files and all test txt files into two txt files for later use: train.txt and test.txt

    def _txtpath_to_txt(self):
        # Save the paths of all txt files under the training and test sets.
        train_txt_path = os.path.join(PATH, "process/Fudan/train.txt")
        test_txt_path = os.path.join(PATH, "process/Fudan/test.txt")
        # os.listdir returns all class folders under the directory as a list.
        train_list = os.listdir(os.path.join(PATH, self.trainPath))
        fp1 = open(train_txt_path, "w", encoding="utf-8")
        fp2 = open(test_txt_path, "w", encoding="utf-8")
        for train_dir in train_list:
            # Collect the (absolute) paths of all txt files one level down.
            for txt in glob.glob(os.path.join(PATH, self.trainPath, train_dir, "*.txt")):
                fp1.write(txt + "\n")
        fp1.close()
        test_list = os.listdir(os.path.join(PATH, self.testPath))
        for test_dir in test_list:
            for txt in glob.glob(os.path.join(PATH, self.testPath, test_dir, "*.txt")):
                fp2.write(txt + "\n")
        fp2.close()
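The path-collection step can be tried in isolation. This is a minimal sketch, not the author's class method: `collect_txt_paths` is a hypothetical helper, and the two folder and file names are placeholders created in a temporary directory.

```python
import glob
import os
import tempfile


def collect_txt_paths(root):
    """Return the sorted paths of every txt file one folder below root."""
    paths = []
    for class_dir in sorted(os.listdir(root)):
        paths.extend(sorted(glob.glob(os.path.join(root, class_dir, "*.txt"))))
    return paths


# Build a tiny throwaway tree that mimics train/<class folder>/<file>.txt.
with tempfile.TemporaryDirectory() as root:
    for class_dir, name in [("C3-Art", "C3-Art0001.txt"),
                            ("C19-Computer", "C19-Computer0001.txt")]:
        os.makedirs(os.path.join(root, class_dir), exist_ok=True)
        with open(os.path.join(root, class_dir, name), "w", encoding="utf-8") as fp:
            fp.write("placeholder")
    found = [os.path.basename(p) for p in collect_txt_paths(root)]

print(found)
```

Sorting the directory listing makes the order of the written paths reproducible across runs, which `os.listdir` alone does not guarantee.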

- Next, segment the text of every txt file and write it to a segmented-text file, and write the matching labels to a label file: train_content.txt, train_label.txt, test_content.txt, test_label.txt

    # Store the text and the label of every txt file in two txt files
    def _contentlabel_to_txt(self, txt_path, content_path, label_path):
        files = open(txt_path, "r", encoding="utf-8")
        content_file = open(content_path, "w", encoding="utf-8")
        label_file = open(label_path, "w", encoding="utf-8")
        for txt in files.readlines():  # each line is the path of one txt file
            txt = txt.strip()  # drop the trailing \n
            content_list = []
            # Split on "/" and keep the last part, then split on "-" and keep the last part
            label_str = txt.split("/")[-1].split("-")[-1]
            label_list = []
            # Collect the label: walk through the characters and stop at the first digit
            for s in label_str:
                if s.isalpha():
                    label_list.append(s)
                elif s.isalnum():
                    break
                else:
                    print("Something went wrong")
            label = "".join(label_list)  # join the characters into the label string
            # print(label)
            # Read the full text.
            # Open the file as gb18030; errors='ignore' skips bytes that cannot be decoded
            fp1 = open(txt, "r", encoding="gb18030", errors='ignore')
            for line in fp1.readlines():
                # jieba segmentation, accurate mode
                line = jieba.lcut(line.strip(), cut_all=False)
                # Collect the tokens of every line in one list
                content_list.extend(line)
            fp1.close()
            content_str = " ".join(content_list)  # back to a single string
            # print(content_str)
            content_file.write(content_str + "\n")  # save the text
            label_file.write(label + "\n")
        content_file.close()
        label_file.close()
        files.close()
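The label parsing above relies on the file-name pattern seen earlier (for example C3-Art0001.txt). A compact sketch of the same idea follows; `label_from_path` is a hypothetical helper name and the example paths are made up.

```python
from itertools import takewhile


def label_from_path(txt_path):
    """Extract the class name from a path such as 'train/C3-Art/C3-Art0001.txt'."""
    file_name = txt_path.split("/")[-1]   # 'C3-Art0001.txt'
    tail = file_name.split("-")[-1]       # 'Art0001.txt'
    # Keep leading letters and stop at the first non-letter (the first digit).
    return "".join(takewhile(str.isalpha, tail))


print(label_from_path("train/C3-Art/C3-Art0001.txt"))
```

Like the original loop, this assumes "/" as the path separator, so Windows-style paths would need `os.path.basename` instead.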

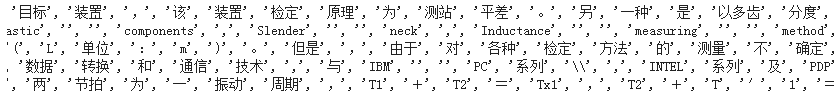

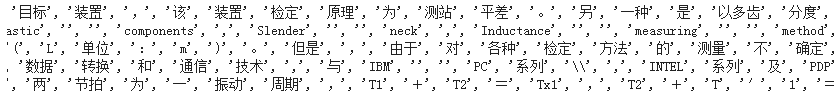

The stored segmented text looks like this:

The labels look like this:

- Next, combine the segmented training text and the segmented test text and train word vectors on them with word2vec (stop words do not need to be filtered out here):

    from gensim.models import Word2Vec
    from gensim.models.word2vec import PathLineSentences
    import multiprocessing
    import os
    import sys
    import logging

    # Logging setup
    program = os.path.basename(sys.argv[0])
    logger = logging.getLogger(program)
    logging.basicConfig(format='%(asctime)s: %(levelname)s: %(message)s')
    logging.root.setLevel(level=logging.INFO)
    logger.info("running %s" % ' '.join(sys.argv))

    # check and process input arguments
    # if len(sys.argv) < 4:
    #     print(globals()['__doc__'] % locals())
    #     sys.exit(1)
    # input_dir, outp1, outp2 = sys.argv[1:4]

    # Train the model.
    # Input corpus directory: PathLineSentences(input_dir)
    # Embedding size 200, context window 10, words seen fewer than 10 times are dropped,
    # multi-threaded, 10 iterations.
    # size and iter are the gensim 3.x argument names (vector_size and epochs in gensim 4.x).
    model = Word2Vec(PathLineSentences('/content/drive/My Drive/transformer/process/Fudan/word2vec/data/'),
                     size=200, window=10, min_count=10,
                     workers=multiprocessing.cpu_count(), iter=10)
    model.save('/content/drive/My Drive/transformer/process/Fudan/word2vec/model/Word2vec.w2v')

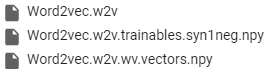

‘/content/drive/My Drive/transformer/Fudan/word2vec/data/’下是train_content.txt和test_content.txt(将它们移过去的)

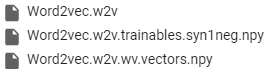

最后会生成:

- Remove stop words

    # Remove stop words
    def _get_clean_data(self, filePath):
        # Initialise the stop-word dictionary first
        self._get_stopwords()
        sentence_list = []
        with open(filePath, 'r', encoding='utf-8') as fp:
            lines = fp.readlines()
            for line in lines:
                tmp = []
                words = line.strip().split(" ")
                for word in words:
                    word = word.strip()
                    if word not in self.stopWordDict and word != '':
                        tmp.append(word)
                    else:
                        continue
                sentence_list.append(tmp)
        return sentence_list

    # Read the stop-word dictionary
    def _get_stopwords(self):
        with open(os.path.join(PATH, self.stopWordSource), "r") as f:
            stopWords = f.read()
            stopWordList = set(stopWords.splitlines())
            # Store the stop words in a dict so that later lookups are fast
            self.stopWordDict = dict(zip(stopWordList, list(range(len(stopWordList)))))
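The filtering logic can be checked on a couple of in-memory lines. This is a sketch under assumptions: `remove_stopwords` is a hypothetical stand-alone function, a plain `set` replaces the dictionary (membership tests are equally fast), and the stop words and sentences are made up.

```python
def remove_stopwords(lines, stopwords):
    """Split each space-separated line and drop stop words and empty tokens."""
    cleaned = []
    for line in lines:
        words = [w.strip() for w in line.strip().split(" ")]
        cleaned.append([w for w in words if w and w not in stopwords])
    return cleaned


stopwords = {"的", "了", "是"}  # hypothetical stop-word set
lines = ["中文 文本 的 分类\n", "这 是  一个 例子\n"]
cleaned = remove_stopwords(lines, stopwords)
print(cleaned)
```

The double space in the second line produces an empty token after splitting, which is why the empty-string check is needed alongside the stop-word check.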

- Build the vocabulary

    # Build the vocabulary
    def _get_vocaburay(self):
        train_content = os.path.join(PATH, "process/Fudan/word2vec/data/train_content.txt")
        sentence_list = self._get_clean_data(train_content)
        # The average text length can be computed here to choose sequenceLength in the config
        # max_sequence = sum([len(s) for s in sentence_list]) / len(sentence_list)
        vocab_before = []
        for sentence in sentence_list:
            for word in sentence:
                vocab_before.append(word)
        count_vocab = Counter(vocab_before)  # count how often each word occurs
        # print(len(count_vocab))
        # Sort by frequency, highest first
        count_vocab = sorted(count_vocab.items(), key=lambda x: x[1], reverse=True)
        vocab_after = copy.deepcopy(count_vocab[:6000])
        # Return the 6000 most frequent words; the list of tuples becomes a dict
        return dict(vocab_after)
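The same top-k selection can be written with `Counter.most_common`. The sketch below uses a hypothetical `build_vocab` function and toy sentences, with a limit of 2 instead of 6000 so the effect is visible.

```python
from collections import Counter


def build_vocab(sentences, max_size):
    """Count every word and keep the max_size most frequent ones."""
    counts = Counter(word for sentence in sentences for word in sentence)
    return dict(counts.most_common(max_size))


sentences = [["文本", "分类", "模型"], ["文本", "分类"], ["文本"]]
vocab = build_vocab(sentences, max_size=2)
print(vocab)
```

Words that fall outside the limit ("模型" here) are later mapped to the UNK id rather than being dropped from the text.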

- Convert the text to ids and the labels to ids

    def _wordToIdx(self):
        # Build the mapping between words and ids
        vocab = list(self._get_vocaburay().keys())  # the dict keys, i.e. the words, as a list
        # print(vocab)
        tmp = ['PAD', 'UNK']
        vocab = tmp + vocab
        word2idx = {word: i for i, word in enumerate(vocab)}
        idx2word = {i: word for i, word in enumerate(vocab)}
        return word2idx, idx2word

    def _labelToIdx(self):
        # Build the mapping between labels and ids
        label_path = os.path.join(PATH, "process/Fudan/train_label.txt")
        with open(label_path, "r") as f:
            labels = f.read()
            # Sort so that the label ids do not change from run to run
            labelsList = sorted(set(labels.splitlines()))
            label2idx = {label: i for i, label in enumerate(labelsList)}
            idx2label = {i: label for i, label in enumerate(labelsList)}
            self.labelList = [label2idx[label] for label in labelsList]
        return label2idx, idx2label
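A small stand-alone sketch of the two mappings, assuming toy words and labels; `build_word_index` and `build_label_index` are hypothetical names, not the author's methods.

```python
def build_word_index(vocab_words):
    """Reserve id 0 for PAD and id 1 for UNK, then number the vocabulary."""
    words = ["PAD", "UNK"] + list(vocab_words)
    word2idx = {word: i for i, word in enumerate(words)}
    idx2word = {i: word for word, i in word2idx.items()}
    return word2idx, idx2word


def build_label_index(labels):
    """Sort the distinct labels so every run assigns the same ids."""
    unique = sorted(set(labels))
    label2idx = {label: i for i, label in enumerate(unique)}
    idx2label = {i: label for label, i in label2idx.items()}
    return label2idx, idx2label


word2idx, idx2word = build_word_index(["文本", "分类"])
label2idx, idx2label = build_label_index(["Sports", "Art", "Art", "Computer"])
print(word2idx)
print(label2idx)
```

Without the `sorted` call the ids would depend on set iteration order, so a model trained in one run could be evaluated against a different label mapping in the next.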

- Load the data as id sequences

    def _getData(self, contentPath, labelPath, mode=None):
        # A word that is not in the vocabulary can either be dropped or replaced by UNK;
        # UNK is used here.
        vocab = self._get_vocaburay()
        word2idx, idx2word = self._wordToIdx()
        label2idx, idx2label = self._labelToIdx()
        data = []
        content_list = self._get_clean_data(contentPath)
        for content in content_list:
            # print(content)
            tmp = []
            if len(content) >= self.config.sequenceLength:
                # Longer than the maximum length: truncate
                content = content[:self.config.sequenceLength]
            else:
                # Shorter than the maximum length: pad on the left with PAD
                content = ['PAD'] * (self.config.sequenceLength - len(content)) + content
            for word in content:
                # Map each word to its id
                if word in word2idx:
                    tmp.append(word2idx[word])
                else:
                    tmp.append(word2idx['UNK'])
            data.append(tmp)
        with open(labelPath, 'r', encoding='utf-8') as fp:
            labels = fp.read()
            label = [[label2idx[label]] for label in labels.splitlines()]
        return data, label
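The truncate, left-pad, and UNK steps can be seen on a toy vocabulary. This is a sketch, with `encode` as a hypothetical helper and a four-entry vocabulary invented for the example.

```python
def encode(words, word2idx, seq_len):
    """Truncate or left-pad a token list to seq_len, then map tokens to ids."""
    if len(words) >= seq_len:
        words = words[:seq_len]
    else:
        words = ["PAD"] * (seq_len - len(words)) + words
    unk = word2idx["UNK"]
    # Tokens missing from the vocabulary fall back to the UNK id.
    return [word2idx.get(word, unk) for word in words]


word2idx = {"PAD": 0, "UNK": 1, "文本": 2, "分类": 3}
padded = encode(["文本", "未知", "分类"], word2idx, seq_len=5)
truncated = encode(["文本", "分类", "文本", "分类"], word2idx, seq_len=2)
print(padded)
print(truncated)
```

Note that padding goes in front of the text, matching the method above, so the real tokens always sit at the end of the sequence.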

- Split the training data into a training set and a validation set, and load the test set

    def _getTrainValData(self, dataPath, labelPath):
        trainData, trainLabel = self._getData(dataPath, labelPath)
        # For convenience the sklearn function is used directly here
        self.trainData, self.valData, self.trainLabels, self.valLabels = train_test_split(
            trainData, trainLabel, test_size=self.rate, random_state=1)

    def _getTestData(self, dataPath, labelPath):
        self.testData, self.testLabels = self._getData(dataPath, labelPath)
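To make the idea of a seeded shuffle-and-split concrete, here is a standard-library sketch. It is not sklearn's `train_test_split` and will not reproduce the same partition; `split_train_val` and its parameters are hypothetical.

```python
import random


def split_train_val(data, labels, val_rate, seed=1):
    """Shuffle indices with a fixed seed and cut off val_rate of them for validation."""
    indices = list(range(len(data)))
    random.Random(seed).shuffle(indices)
    n_val = int(len(indices) * val_rate)
    val_idx, train_idx = indices[:n_val], indices[n_val:]

    def pick(seq, idx):
        return [seq[i] for i in idx]

    return (pick(data, train_idx), pick(data, val_idx),
            pick(labels, train_idx), pick(labels, val_idx))


data = list(range(10))
labels = [x * 10 for x in data]  # each label is tied to its sample
train_x, val_x, train_y, val_y = split_train_val(data, labels, val_rate=0.2)
print(len(train_x), len(val_x))
```

Shuffling one index list and applying it to both sequences keeps every sample paired with its label, and the fixed seed makes the split repeatable.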

- Get the word vectors

    # Get the word vectors of the words in the vocabulary
    def _getWordEmbedding(self):
        word2idx, idx2word = self._wordToIdx()
        vocab = sorted(word2idx.items(), key=lambda x: x[1])  # sort the words by id
        # print(vocab)
        w2vModel = Word2Vec.load(os.path.join(PATH, 'process/Fudan/word2vec/model/Word2vec.w2v'))
        self.wordEmbedding.append([0] * self.embeddingSize)  # vector for PAD
        self.wordEmbedding.append([0] * self.embeddingSize)  # vector for UNK
        for i in range(2, len(vocab)):
            # .wv is the keyed-vector lookup of the trained model
            self.wordEmbedding.append(list(w2vModel.wv[vocab[i][0]]))
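The layout of the resulting matrix (row index equals word id, with zero rows for PAD and UNK) can be illustrated without gensim. In this sketch a plain dict of made-up two-dimensional vectors stands in for the trained word2vec model, and `build_embedding_matrix` is a hypothetical name.

```python
def build_embedding_matrix(ordered_words, vectors, dim):
    """Row i holds the vector of the word with id i; PAD and UNK get zeros."""
    matrix = [[0.0] * dim, [0.0] * dim]  # ids 0 and 1: PAD and UNK
    for word in ordered_words[2:]:
        matrix.append(list(vectors[word]))
    return matrix


ordered_words = ["PAD", "UNK", "文本", "分类"]               # sorted by id
vectors = {"文本": [0.1, 0.2], "分类": [0.3, 0.4]}           # stand-in for word2vec
matrix = build_embedding_matrix(ordered_words, vectors, dim=2)
print(matrix)
```

A lookup such as `vectors[word]` raises `KeyError` for a word the embedding model never saw, which can happen if a vocabulary word falls below the word2vec `min_count` threshold.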

The remaining code is not pasted here piece by piece.

III. Building the Model

    import numpy as np
    import tensorflow as tf
    import warnings

    warnings.filterwarnings("ignore")


    class Transformer(object):
        """
        Transformer Encoder for text classification
        """

        def __init__(self, config, wordEmbedding):
            # Model inputs. inputX: [None, 600], inputY: [None, 20]
            self.inputX = tf.placeholder(tf.int32, [None, config.sequenceLength], name="inputX")
            self.inputY = tf.placeholder(tf.int32, [None, config.numClasses], name="inputY")
            self.lastBatch = False
            self.dropoutKeepProb = tf.placeholder(tf.float32, name="dropoutKeepProb")
            self.config = config

            # L2 loss
            l2Loss = tf.constant(0.0)

            # Embedding layer. There are two ways to define the position vectors. One passes in
            # fixed one-hot vectors and concatenates them with the word vectors, which works
            # better on this dataset. The other follows the paper, which works worse here,
            # possibly because it makes the model more complex and hurts on a small dataset.
            with tf.name_scope("wordEmbedding"):
                self.W = tf.Variable(tf.cast(wordEmbedding, dtype=tf.float32, name="word2vec"), name="W")
                self.wordEmbedded = tf.nn.embedding_lookup(self.W, self.inputX)

            with tf.name_scope("positionEmbedding"):
                # Note: with TF 1.x graph tensors this Python-level == is not evaluated
                # element-wise, so the else branch (dynamic batch size) is normally taken.
                if tf.shape(self.wordEmbedded)[0] == config.batchSize:
                    self.positionEmbedded = self._positionEmbedding()
                else:
                    self.positionEmbedded = self._positionEmbedding(lastBatch=tf.shape(self.wordEmbedded)[0])

            self.embeddedWords = self.wordEmbedded + self.positionEmbedded

            with tf.name_scope("transformer"):
                for i in range(config.modelConfig.numBlocks):
                    with tf.name_scope("transformer-{}".format(i + 1)):
                        # Shape [batch_size, sequence_length, embedding_size]
                        multiHeadAtt = self._multiheadAttention(rawKeys=self.wordEmbedded,
                                                                queries=self.embeddedWords,
                                                                keys=self.embeddedWords)
                        # Shape [batch_size, sequence_length, embedding_size]
                        self.embeddedWords = self._feedForward(
                            multiHeadAtt,
                            [config.modelConfig.filters, config.modelConfig.embeddingSize])

                outputs = tf.reshape(self.embeddedWords,
                                     [-1, config.sequenceLength * (config.modelConfig.embeddingSize)])

            outputSize = outputs.get_shape()[-1].value

            with tf.name_scope("dropout"):
                outputs = tf.nn.dropout(outputs, keep_prob=self.dropoutKeepProb)

            # Output of the fully connected layer
            with tf.name_scope("output"):
                outputW = tf.get_variable(
                    "outputW",
                    shape=[outputSize, config.numClasses],
                    initializer=tf.contrib.layers.xavier_initializer())
                outputB = tf.Variable(tf.constant(0.1, shape=[config.numClasses]), name="outputB")
                l2Loss += tf.nn.l2_loss(outputW)
                l2Loss += tf.nn.l2_loss(outputB)
                self.logits = tf.nn.xw_plus_b(outputs, outputW, outputB, name="logits")

                if config.numClasses == 1:
                    self.predictions = tf.cast(tf.greater_equal(self.logits, 0.0), tf.float32, name="predictions")
                elif config.numClasses > 1:
                    self.predictions = tf.argmax(self.logits, axis=-1, name="predictions")

            # Cross-entropy loss (sigmoid for the binary case, softmax for the multi-class case)
            with tf.name_scope("loss"):
                if config.numClasses == 1:
                    losses = tf.nn.sigmoid_cross_entropy_with_logits(
                        logits=self.logits,
                        labels=tf.cast(tf.reshape(self.inputY, [-1, 1]), dtype=tf.float32))
                elif config.numClasses > 1:
                    print(self.logits, self.inputY)
                    losses = tf.nn.softmax_cross_entropy_with_logits(logits=self.logits, labels=self.inputY)

                self.loss = tf.reduce_mean(losses) + config.modelConfig.l2RegLambda * l2Loss

        def _layerNormalization(self, inputs, scope="layerNorm"):
            # LayerNorm differs from BatchNorm
            epsilon = self.config.modelConfig.epsilon
            inputsShape = inputs.get_shape()  # [batch_size, sequence_length, embedding_size]
            paramsShape = inputsShape[-1:]

            # LayerNorm computes the mean and variance over the last dimension only,
            # whereas BatchNorm computes them across the batch.
            # mean and variance both have shape [batch_size, sequence_len, 1]
            mean, variance = tf.nn.moments(inputs, [-1], keep_dims=True)
            beta = tf.Variable(tf.zeros(paramsShape))
            gamma = tf.Variable(tf.ones(paramsShape))
            normalized = (inputs - mean) / ((variance + epsilon) ** .5)
            outputs = gamma * normalized + beta
            return outputs

        def _multiheadAttention(self, rawKeys, queries, keys, numUnits=None, causality=False,
                                scope="multiheadAttention"):
            # rawKeys is used only to compute the mask: keys already include the position
            # embedding, so padded positions are no longer all zeros there.
            numHeads = self.config.modelConfig.numHeads
            keepProp = self.config.modelConfig.keepProp

            if numUnits is None:
                # If no value is passed in, use the last input dimension, i.e. the embedding size.
                numUnits = queries.get_shape().as_list()[-1]

            # tf.layers.dense projects multi-dimensional tensors (here with a ReLU activation).
            # Self-attention needs all three values to be projected. This is the per-head weight
            # projection of Multi-Head Attention in the paper; here we project first and split
            # afterwards, which is the same in principle.
            # Q, K, V all have shape [batch_size, sequence_length, embedding_size]
            Q = tf.layers.dense(queries, numUnits, activation=tf.nn.relu)
            K = tf.layers.dense(keys, numUnits, activation=tf.nn.relu)
            V = tf.layers.dense(keys, numUnits, activation=tf.nn.relu)

            # Split along the last dimension into numHeads parts, then concatenate along the first.
            # Q_, K_, V_ all have shape [batch_size * numHeads, sequence_length, embedding_size/numHeads]
            Q_ = tf.concat(tf.split(Q, numHeads, axis=-1), axis=0)
            K_ = tf.concat(tf.split(K, numHeads, axis=-1), axis=0)
            V_ = tf.concat(tf.split(V, numHeads, axis=-1), axis=0)

            # Dot product between queries and keys, shape [batch_size * numHeads, queries_len, key_len];
            # the last two dimensions are the sequence lengths of queries and keys.
            similary = tf.matmul(Q_, tf.transpose(K_, [0, 2, 1]))

            # Scale the dot products by the square root of the per-head vector length
            scaledSimilary = similary / (K_.get_shape().as_list()[-1] ** 0.5)

            # The input sequences contain padding tokens, which should contribute nothing to the
            # result. In principle, when the padding inputs are all zeros the computed weights are
            # zero as well, but the transformer adds position vectors, after which the values are
            # no longer zero. The mask therefore has to be computed from the values before the
            # position vectors are added. The queries contain padding tokens too, but in principle
            # the result depends only on the input, and in self-attention queries = keys, so as
            # soon as one side is zero the computed weight is zero.
            # More on the key mask: //github.com/Kyubyong/transformer/issues/3

            # Expand with tf.tile to shape [batch_size * numHeads, keys_len],
            # where keys_len is the sequence length of keys.
            # tf.tile((?, 200), [8,1])

            # Sum the values of the vector at each time step; sign(abs(sum)) is 0 for an
            # all-zero (padding) vector and 1 otherwise.
            keyMasks = tf.sign(tf.abs(tf.reduce_sum(rawKeys, axis=-1)))  # shape [batch_size, time_step]
            print(keyMasks.shape)
            keyMasks = tf.tile(keyMasks, [numHeads, 1])

            # Add a dimension and tile to shape [batch_size * numHeads, queries_len, keys_len]
            keyMasks = tf.tile(tf.expand_dims(keyMasks, 1), [1, tf.shape(queries)[1], 1])

            # tf.ones_like creates a tensor of ones shaped like scaledSimilary; multiplying it by a
            # very large negative number approximates negative infinity.
            paddings = tf.ones_like(scaledSimilary) * (-2 ** (32 + 1))

            # tf.where(condition, x, y): condition holds booleans; True picks the element from x,
            # False picks the element from y, so condition, x and y share one shape.
            # Wherever keyMasks is 0 the value from paddings is used.
            # Shape [batch_size * numHeads, queries_len, key_len]
            maskedSimilary = tf.where(tf.equal(keyMasks, 0), paddings, scaledSimilary)

            # When computing the current word, look only at the preceding context and not at what
            # follows. This is used in the Transformer Decoder. For text classification the
            # Transformer Encoder alone is enough; the Decoder is a generative model, mainly used
            # for language generation.
            if causality:
                diagVals = tf.ones_like(maskedSimilary[0, :, :])  # [queries_len, keys_len]
                tril = tf.contrib.linalg.LinearOperatorTriL(diagVals).to_dense()  # [queries_len, keys_len]
                # [batch_size * numHeads, queries_len, keys_len]
                masks = tf.tile(tf.expand_dims(tril, 0), [tf.shape(maskedSimilary)[0], 1, 1])

                paddings = tf.ones_like(masks) * (-2 ** (32 + 1))
                # [batch_size * numHeads, queries_len, keys_len]
                maskedSimilary = tf.where(tf.equal(masks, 0), paddings, maskedSimilary)

            # Attention weights via softmax, shape [batch_size * numHeads, queries_len, keys_len]
            weights = tf.nn.softmax(maskedSimilary)

            # Weighted sum, shape [batch_size * numHeads, sequence_length, embedding_size/numHeads]
            outputs = tf.matmul(weights, V_)

            # Reassemble the heads into the original shape [batch_size, sequence_length, embedding_size]
            outputs = tf.concat(tf.split(outputs, numHeads, axis=0), axis=2)

            outputs = tf.nn.dropout(outputs, keep_prob=keepProp)

            # Residual connection for each sub-layer, i.e. H(x) = F(x) + x
            outputs += queries
            # Normalization layer
            outputs = self._layerNormalization(outputs)
            return outputs

        def _feedForward(self, inputs, filters, scope="multiheadAttention"):
            # The feed-forward part is implemented with convolutions here.

            # Inner layer
            params = {"inputs": inputs, "filters": filters[0], "kernel_size": 1,
                      "activation": tf.nn.relu, "use_bias": True}
            outputs = tf.layers.conv1d(**params)

            # Outer layer
            params = {"inputs": outputs, "filters": filters[1], "kernel_size": 1,
                      "activation": None, "use_bias": True}

            # This is a 1-D convolution. The kernel is really two-dimensional, but only its height
            # has to be specified; its width equals the embedding size.
            # Shape [batch_size, sequence_length, embedding_size]
            outputs = tf.layers.conv1d(**params)

            # Residual connection
            outputs += inputs

            # Normalization
            outputs = self._layerNormalization(outputs)

            return outputs

        def _positionEmbedding(self, lastBatch=None, scope="positionEmbedding"):
            # Generate the position vectors (fixed sinusoidal values, not trained)
            if lastBatch is None:
                batchSize = self.config.batchSize  # 128
            else:
                batchSize = lastBatch
            sequenceLen = self.config.sequenceLength  # 600
            embeddingSize = self.config.modelConfig.embeddingSize  # 100

            # Build the position indices and tile them over every sample in the batch
            positionIndex = tf.tile(tf.expand_dims(tf.range(sequenceLen), 0), [batchSize, 1])

            # First compute the argument of the sine/cosine for every position and dimension
            positionEmbedding = np.array([[pos / np.power(10000, (i - i % 2) / embeddingSize)
                                           for i in range(embeddingSize)]
                                          for pos in range(sequenceLen)])

            # Then apply sin to the even dimensions and cos to the odd dimensions
            positionEmbedding[:, 0::2] = np.sin(positionEmbedding[:, 0::2])
            positionEmbedding[:, 1::2] = np.cos(positionEmbedding[:, 1::2])

            # Convert positionEmbedding to a tensor
            positionEmbedding_ = tf.cast(positionEmbedding, dtype=tf.float32)

            # Look up to get a 3-D tensor [batchSize, sequenceLen, embeddingSize]
            positionEmbedded = tf.nn.embedding_lookup(positionEmbedding_, positionIndex)

            return positionEmbedded
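Two pieces of the model are easy to check by hand outside TensorFlow: the sinusoidal position table and the effect of the key mask on the softmax. The sketch below re-implements both in plain Python for a single query row. The function names and toy sizes are assumptions made for illustration; only the formulas mirror the code above.

```python
import math


def sinusoidal_position_encoding(seq_len, dim):
    """sin on even dimensions, cos on odd ones, of pos / 10000^((i - i % 2) / dim)."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(dim):
            angle = pos / (10000 ** ((i - i % 2) / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def masked_softmax(scores, key_mask):
    """Softmax over one row of scores; keys whose mask is 0 get a huge negative score."""
    very_negative = -2.0 ** 33
    masked = [s if m else very_negative for s, m in zip(scores, key_mask)]
    top = max(masked)                      # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]


pe = sinusoidal_position_encoding(seq_len=4, dim=6)
weights = masked_softmax([1.0, 2.0, 3.0], key_mask=[0, 1, 1])
print(pe[0])
print(weights)
```

Position 0 always encodes to alternating 0 and 1, and a masked key ends up with weight 0 because its exponent underflows. If every key in a row is masked, the weights become uniform rather than zero, which is one reason the mask is applied to keys and not relied on alone.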

IV. Defining Training, Testing, and Prediction

    import sys
    import os

    # Directory that contains this script (here: transformer)
    BASE_DIR = os.path.dirname(os.path.abspath(__file__))

    from dataset.fudanDataset import FudanDataset
    from models.transformer import Transformer
    from utils.utils import *
    from utils.metrics import *
    from config.fudanConfig import FudanConfig
    from config.globalConfig import PATH
    import numpy as np
    import tensorflow as tf
    import time
    import datetime
    import random
    from tkinter import _flatten  # private tkinter helper, used here to flatten nested lists
    from sklearn import metrics
    import jieba


    def train():
        print("Configuring the Saver...\n")
        save_dir = 'checkpoint/transformer/'
        if not os.path.exists(save_dir):
            os.makedirs(save_dir)
        save_path = os.path.join(save_dir, 'best_validation')  # where the best validation result is saved
        globalStep = tf.Variable(0, name="globalStep", trainable=False)
        # Configure the Saver
        saver = tf.train.Saver()

        # Define the session
        """
        session_conf = tf.ConfigProto(allow_soft_placement=True, log_device_placement=False)
        session_conf.gpu_options.allow_growth=True
        session_conf.gpu_options.per_process_gpu_memory_fraction = 0.9  # GPU memory fraction
        sess = tf.Session(config=session_conf)
        """
        sess = tf.Session()

        print("Defining the optimizer...\n")
        # Define the optimizer and pass in the learning rate
        optimizer = tf.train.AdamOptimizer(config.trainConfig.learningRate)
        # Compute the gradients, returning (gradient, variable) pairs
        gradsAndVars = optimizer.compute_gradients(model.loss)
        # Apply the gradients to the variables to build the training op
        trainOp = optimizer.apply_gradients(gradsAndVars, global_step=globalStep)
        sess.run(tf.global_variables_initializer())

        def trainStep(batchX, batchY):
            """
            Training step
            """
            feed_dict = {
                model.inputX: batchX,
                model.inputY: batchY,
                model.dropoutKeepProb: config.modelConfig.dropoutKeepProb,
            }
            _, step, loss, predictions = sess.run(
                [trainOp, globalStep, model.loss, model.predictions], feed_dict)

            if config.numClasses == 1:
                acc, recall, prec, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)
            elif config.numClasses > 1:
                acc, recall, prec, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY,
                                                              labels=labelList)

            return loss, acc, prec, recall, f_beta

        def valStep(batchX, batchY):
            """
            Validation step
            """
            feed_dict = {
                model.inputX: batchX,
                model.inputY: batchY,
                model.dropoutKeepProb: 1.0,
            }
            step, loss, predictions = sess.run([globalStep, model.loss, model.predictions], feed_dict)

            if config.numClasses == 1:
                acc, recall, prec, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)
            elif config.numClasses > 1:
                acc, recall, prec, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY,
                                                              labels=labelList)

            return loss, acc, prec, recall, f_beta

        print("Start training...\n")
        best_f_beta_val = 0.0  # best f_beta on the validation set
        last_improved = 0  # step of the last improvement
        require_improvement = 1000  # stop early after more than 1000 steps without improvement
        flag = False
        for epoch in range(config.trainConfig.epoches):
            print('Epoch:', epoch + 1)
            batch_train = batch_iter(train_data, train_label, config.batchSize)
            for x_batch, y_batch in batch_train:
                loss, acc, prec, recall, f_beta = trainStep(x_batch, y_batch)
                currentStep = tf.train.global_step(sess, globalStep)
                # Print the training result every print_per_step steps
                if currentStep % config.trainConfig.print_per_step == 0:
                    print("train: step: {}, loss: {:.4f}, acc: {:.4f}, recall: {:.4f}, precision: {:.4f}, f_beta: {:.4f}".format(
                        currentStep, loss, acc, recall, prec, f_beta))
                if currentStep % config.trainConfig.evaluateEvery == 0:
                    print("Start validation...\n")

                    losses = []
                    accs = []
                    f_betas = []
                    precisions = []
                    recalls = []
                    batch_val = batch_iter(val_data, val_label, config.batchSize)
                    for x_batch, y_batch in batch_val:
                        loss, acc, precision, recall, f_beta = valStep(x_batch, y_batch)
                        losses.append(loss)
                        accs.append(acc)
                        f_betas.append(f_beta)
                        precisions.append(precision)
                        recalls.append(recall)

                    if mean(f_betas) > best_f_beta_val:
                        # Save the best result
                        best_f_beta_val = mean(f_betas)
                        last_improved = currentStep
                        saver.save(sess=sess, save_path=save_path)
                        improved_str = '*'
                    else:
                        improved_str = ''
                    time_str = datetime.datetime.now().isoformat()
                    print("{}, step: {:>6}, loss: {:.4f}, acc: {:.4f},precision: {:.4f}, recall: {:.4f}, f_beta: {:.4f} {}".format(
                        time_str, currentStep, mean(losses), mean(accs), mean(precisions),
                        mean(recalls), mean(f_betas), improved_str))
                if currentStep - last_improved > require_improvement:
                    # The validation score has not improved for a long time: stop early
                    print("No improvement for a long time, stopping automatically")
                    flag = True
                    break  # leave the batch loop
            if flag:  # leave the epoch loop as well
                break
        sess.close()


    def test(test_data, test_label):
        print("Start testing...")
        save_path = 'checkpoint/transformer/best_validation'
        saver = tf.train.Saver()
        sess = tf.Session()
        sess.run(tf.global_variables_initializer())
        saver.restore(sess=sess, save_path=save_path)  # load the saved model

        data_len = len(test_data)
        test_batchsize = 128
        batch_test = batch_iter(test_data, test_label, test_batchsize, is_train=False)
        pred_label = []
        for x_batch, y_batch in batch_test:
            feed_dict = {
                model.inputX: x_batch,
                model.inputY: y_batch,
                model.dropoutKeepProb: 1.0,
            }
            predictions = sess.run([model.predictions], feed_dict)
            pred_label.append(predictions[0].tolist())
        pred_label = list(_flatten(pred_label))
        test_label = [np.argmax(item) for item in test_label]
        # Evaluation
        print("Computing Precision, Recall and F1-Score...")
        print(metrics.classification_report(test_label, pred_label, target_names=true_labelList))
        sess.close()


    def process_sentence(data):
        fudanDataset._get_stopwords()
        sentence_list = []
        for content in data:
            words_list = jieba.lcut(content, cut_all=False)
            tmp1 = []
            for word in words_list:
                word = word.strip()
                if word not in fudanDataset.stopWordDict and word != '':
                    tmp1.append(word)
                else:
                    continue
            sentence_list.append(tmp1)
        vocab = fudanDataset._get_vocaburay()
        word2idx, idx2word = fudanDataset._wordToIdx()
        label2idx, idx2label = fudanDataset._labelToIdx()
        res_data = []
        # print(content)
        for content in sentence_list:
            tmp2 = []
            if len(content) >= config.sequenceLength:
                # Longer than the maximum length: truncate
                content = content[:config.sequenceLength]
            else:
                # Shorter than the maximum length: pad on the left with PAD
                content = ['PAD'] * (config.sequenceLength - len(content)) + content
            for word in content:
                # Map each word to its id
                if word in word2idx:
                    tmp2.append(word2idx[word])
                else:
                    tmp2.append(word2idx['UNK'])
            res_data.append(tmp2)
        return res_data


    def get_predict_content(content_path, label_path):
        use_data = 5
        txt_list = []
        label_list = []
        predict_data = []
        predict_label = []
        content_file = open(content_path, "r", encoding="utf-8")
        label_file = open(label_path, "r", encoding="utf-8")
        for txt in content_file.readlines():  # each line is the path of one txt file
            txt = txt.strip()  # drop the trailing \n
            txt_list.append(txt)
        for label in label_file.readlines():
            label = label.strip()
            label_list.append(label)
        data = []
        for txt, label in zip(txt_list, label_list):
            data.append((txt, label))
        # Pick use_data random (path, label) pairs
        predict_data = random.sample(data, use_data)
        p_data = []
        p_label = []
        for txt, label in predict_data:
            with open(txt, "r", encoding="gb18030", errors='ignore') as fp1:
                tmp = []
                for line in fp1.readlines():
                    tmp.append(line.strip())
                p_data.append("".join(tmp))
            p_label.append(label)
        content_file.close()
        label_file.close()
        return p_data, p_label


    def predict(data, label, p_data):
        print("Start predicting the classes of the texts...")
        predict_data = data
        predict_true_data = label
        save_path = 'checkpoint/transformer/best_validation'
        saver = tf.train.Saver()
        sess = tf.Session()
        sess.run(tf.global_variables_initializer())
        saver.restore(sess=sess, save_path=save_path)  # load the saved model

        feed_dict = {
            model.inputX: predict_data,
            model.inputY: predict_true_data,
            model.dropoutKeepProb: 1.0,
        }
        predictions = sess.run([model.predictions], feed_dict)
        pred_label = predictions[0].tolist()
        real_label = [np.argmax(item) for item in predict_true_data]
        for content, pre_label, true_label in zip(p_data, pred_label, real_label):
            print("Input text: {}...".format(content[:100]))
            print("Predicted class:", idx2label[pre_label])
            print("True class:", idx2label[true_label])
            print("================================================")
        sess.close()


    if __name__ == '__main__':
        config = FudanConfig()
        fudanDataset = FudanDataset(config)
        word2idx, idx2word = fudanDataset._wordToIdx()
        label2idx, idx2label = fudanDataset._labelToIdx()
        print("Loading data...")
        train_content_path = os.path.join(PATH, "process/Fudan/word2vec/data/train_content.txt")
        train_label_path = os.path.join(PATH, "process/Fudan/train_label.txt")
        test_content_path = os.path.join(PATH, "process/Fudan/word2vec/data/test_content.txt")
        test_label_path = os.path.join(PATH, "process/Fudan/test_label.txt")
        fudanDataset._getTrainValData(train_content_path, train_label_path)
        fudanDataset._getTestData(test_content_path, test_label_path)
        fudanDataset._getWordEmbedding()
        train_data, val_data, train_label, val_label = (fudanDataset.trainData, fudanDataset.valData,
                                                        fudanDataset.trainLabels, fudanDataset.valLabels)
        test_data, test_label = fudanDataset.testData, fudanDataset.testLabels
        train_label = one_hot(train_label)
        val_label = one_hot(val_label)
        test_label = one_hot(test_label)

        wordEmbedding = fudanDataset.wordEmbedding
        labelList = fudanDataset.labelList
        true_labelList = [idx2label[label] for label in labelList]

        print("Defining the model...")
        model = Transformer(config, wordEmbedding)
        # Note: train() is not called here; test() and predict() restore the checkpoint
        # saved by an earlier training run.
        test(test_data, test_label)

        print("Running prediction...")
        p_data, p_label = get_predict_content(os.path.join(PATH, "process/Fudan/test.txt"), test_label_path)
        process_data = process_sentence(p_data)
        onehot_label = np.zeros((len(p_label), config.numClasses))
        for i, value in enumerate(p_label):
            onehot_label[i][label2idx[value]] = 1
        process_label = onehot_label

        predict(process_data, process_label, p_data)
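The script imports `one_hot` and `batch_iter` from `utils.utils`, whose source is not shown in this post. The sketch below is only a guess at what such helpers might look like, written with plain lists: the signatures are hypothetical (the real `one_hot` takes a single argument and the real `batch_iter` accepts `is_train`), and this version does not shuffle.

```python
def one_hot(labels, num_classes):
    """Turn label ids shaped like [[2], [0]] into one-hot rows."""
    rows = []
    for (idx,) in labels:
        row = [0] * num_classes
        row[idx] = 1
        rows.append(row)
    return rows


def batch_iter(data, labels, batch_size):
    """Yield consecutive (data, labels) slices; the last batch may be smaller."""
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size], labels[start:start + batch_size]


encoded = one_hot([[2], [0]], num_classes=3)
batches = list(batch_iter(list(range(5)), list("abcde"), batch_size=2))
print(encoded)
print(batches)
```

The smaller final batch is the reason the model cannot assume a fixed batch size when it tiles the position indices.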

Results:

    Computing Precision, Recall and F1-Score...
                   precision    recall  f1-score   support

      Agriculture       0.83      0.90      0.87      1022
              Art       0.79      0.86      0.82       742
    Communication       0.00      0.00      0.00        27
         Computer       0.93      0.97      0.95      1358
          Economy       0.87      0.89      0.88      1601
        Education       0.67      0.07      0.12        61
      Electronics       0.00      0.00      0.00        28
           Energy       1.00      0.03      0.06        33
      Enviornment       0.86      0.95      0.90      1218
          History       0.68      0.66      0.67       468
              Law       0.18      0.12      0.14        52
       Literature       0.00      0.00      0.00        34
          Medical       0.19      0.06      0.09        53
         Military       0.50      0.03      0.05        76
             Mine       1.00      0.03      0.06        34
       Philosophy       0.62      0.22      0.33        45
         Politics       0.78      0.88      0.83      1026
            Space       0.91      0.81      0.85       642
           Sports       0.86      0.88      0.87      1254
        Transport       1.00      0.02      0.03        59

         accuracy                           0.84      9833
        macro avg       0.63      0.42      0.43      9833
     weighted avg       0.83      0.84      0.83      9833
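The gap between the macro and weighted averages in the report comes from class imbalance: the macro average treats all 20 classes equally, while the weighted average is dominated by the large classes. A quick check using the recall and support columns copied from the report above (values are rounded to two decimals there, so the recomputed averages are approximate):

```python
# Recall and support per class, in the order they appear in the report.
recall = [0.90, 0.86, 0.00, 0.97, 0.89, 0.07, 0.00, 0.03, 0.95, 0.66,
          0.12, 0.00, 0.06, 0.03, 0.03, 0.22, 0.88, 0.81, 0.88, 0.02]
support = [1022, 742, 27, 1358, 1601, 61, 28, 33, 1218, 468,
           52, 34, 53, 76, 34, 45, 1026, 642, 1254, 59]

# Macro average: plain mean over classes.
macro_recall = sum(recall) / len(recall)

# Weighted average: each class counts in proportion to its number of samples.
weighted_recall = sum(r * s for r, s in zip(recall, support)) / sum(support)

print(round(macro_recall, 2))
print(weighted_recall)
```

The classes with fewer than about 100 test samples have recall close to zero, which pulls the macro average down to roughly half of the weighted one.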

    Input text: 中国环境科学CHINA ENVIRONMENTAL SCIENCE1998年 第18卷 第1期 No.1 Vol.18 1998科技期刊镉胁迫对小麦叶片细胞膜脂过氧化的影响*罗立新 孙铁珩 靳月华(中...
    Predicted class: Enviornment
    True class: Enviornment
    ================================================
    Input text: 自动化学报AGTA AUTOMATICA SINICA1999年 第25卷 第2期 Vol.25 No.2 1999TSP问题分层求解算法的复杂度研究1)卢 欣 李衍达关键词 TSP,局部搜索算法,动...
    Predicted class: Computer
    True class: Computer
    ================================================
    Input text: 【 文献号 】3-5519【原文出处】人民日报【原刊地名】京【原刊期号】19960615【原刊页号】⑵【分 类 号】D4【分 类 名】中国政治【复印期号】199606【 标 题 】中国人民政治协商会...
    Predicted class: Politics
    True class: Politics
    ================================================
    Input text: 软件学报JOURNAL OF SOFTWARE1999年 第2期 No.2 1999视觉导航中基于模糊神经网的消阴影算法研究郭木河 杨 磊 陶西平 何克忠 张 钹摘要 在实际的应用中,由于室外移动机器...
    Predicted class: Computer
    True class: Computer
    ================================================
    Input text: 【 文献号 】2-814【原文出处】中国乡镇企业会计【原刊地名】京【原刊期号】199907【原刊页号】7~9【分 类 号】F22【分 类 名】乡镇企业与农场管理【复印期号】199908【 标 题 】...
    Predicted class: Economy
    True class: Economy
    ================================================

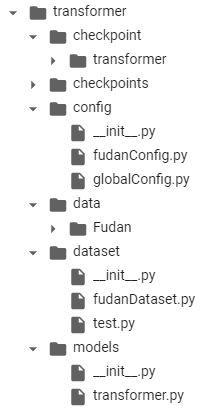

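Overall directory structure:

More features will be added later, such as TensorBoard visualization and other networks like LSTM and GRU.

Reference:

//www.cnblogs.com/jiangxinyang/p/10210813.html

In that reference, one line is missing from the multi-head attention part of the transformer model: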